import sys
import json
import time

import cv2
import numpy as np
from keras.models import model_from_json

emotion_labels = ['angry', 'fear', 'happy', 'sad', 'surprise', 'neutral']

cascPath = sys.argv[1]

faceCascade = cv2.CascadeClassifier(cascPath)

# load json and create model arch
with open('model.json', 'r') as json_file:
    loaded_model_json = json_file.read()
model = model_from_json(loaded_model_json)

# load weights into new model
model.load_weights('model.h5')

def predict_emotion(face_image_gray): # a single cropped face
    # The model expects a 48x48 grayscale input shaped (batch, channel, height, width)
    resized_img = cv2.resize(face_image_gray, (48,48), interpolation = cv2.INTER_AREA)
    image = resized_img.reshape(1, 1, 48, 48)
    # Save an enlarged copy of the face crop to face.bmp
    im = cv2.resize(resized_img,(90,100))
    cv2.imwrite('face.bmp', im)
    # model.predict returns one row of six values for the single input image
    list_of_list = model.predict(image, batch_size=1, verbose=1)
    angry, fear, happy, sad, surprise, neutral = [prob for lst in list_of_list for prob in lst]
    return [angry, fear, happy, sad, surprise, neutral]

video_capture = cv2.VideoCapture(0)

while True:
    # Capture frame-by-frame
    ret, frame = video_capture.read()
    if not ret:
        # Stop when the camera returns no frame
        break

    img_gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    faces = faceCascade.detectMultiScale(
        img_gray,
        scaleFactor=1.1,
        minNeighbors=1,
        minSize=(30, 30),
        flags=cv2.CASCADE_SCALE_IMAGE
    )

    # Predict, log, and draw a rectangle for each detected face
    for (x, y, w, h) in faces:
        face_image_gray = img_gray[y:y+h, x:x+w]
        angry, fear, happy, sad, surprise, neutral = predict_emotion(face_image_gray)
        emotions = [angry, fear, happy, sad, surprise, neutral]

        # biaoqing ("expression") is the label with the largest value
        m = emotions.index(max(emotions))
        biaoqing = emotion_labels[m]
        print(biaoqing)

        cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        #cv2.putText(frame, biaoqing, (x, y),
        #            cv2.FONT_HERSHEY_PLAIN, 1, (0, 255, 0),
        #            thickness=2, lineType=2)

        # Append one row per face: timestamp, the six values, and the label
        with open('emotion.txt', 'a') as f:
            f.write('{},{},{},{},{},{},{},{}\n'.format(time.time(),angry, fear, happy, sad, surprise, neutral,biaoqing))

    # Display the resulting frame
    cv2.imshow('Video', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything is done, release the capture

video_capture.release()

cv2.destroyAllWindows()

A few key points:

Run it from the command line: python real-time.py haarcascade_frontalface_default.xml
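The script reads the cascade path directly from sys.argv[1], so running it without an argument fails with an IndexError, and a mistyped path is easier to diagnose when it is checked up front. The following is a minimal sketch of such a check, not part of the original script; the helper name cascade_path_from_args is hypothetical.

```python
import os


def cascade_path_from_args(argv):
    """Return the cascade XML path from argv, or raise ValueError.

    Hypothetical helper: expects argv in the form
    ['real-time.py', 'haarcascade_frontalface_default.xml'].
    """
    if len(argv) != 2:
        raise ValueError("usage: python real-time.py <cascade.xml>")
    path = argv[1]
    if not os.path.isfile(path):
        raise ValueError("cascade file not found: {}".format(path))
    return path
```

In the script, the line cascPath = sys.argv[1] could then be written as cascPath = cascade_path_from_args(sys.argv).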

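- haarcascade_frontalface_default.xml is a Haar cascade classifier for face detection; it was trained by someone else and is simply loaded here.

- model.h5 is the model that gets loaded for emotion prediction; it was trained with caffe.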

- model.json: looking at its contents, it is simply the model that someone else saved to JSON through keras; here the JSON is loaded back in.

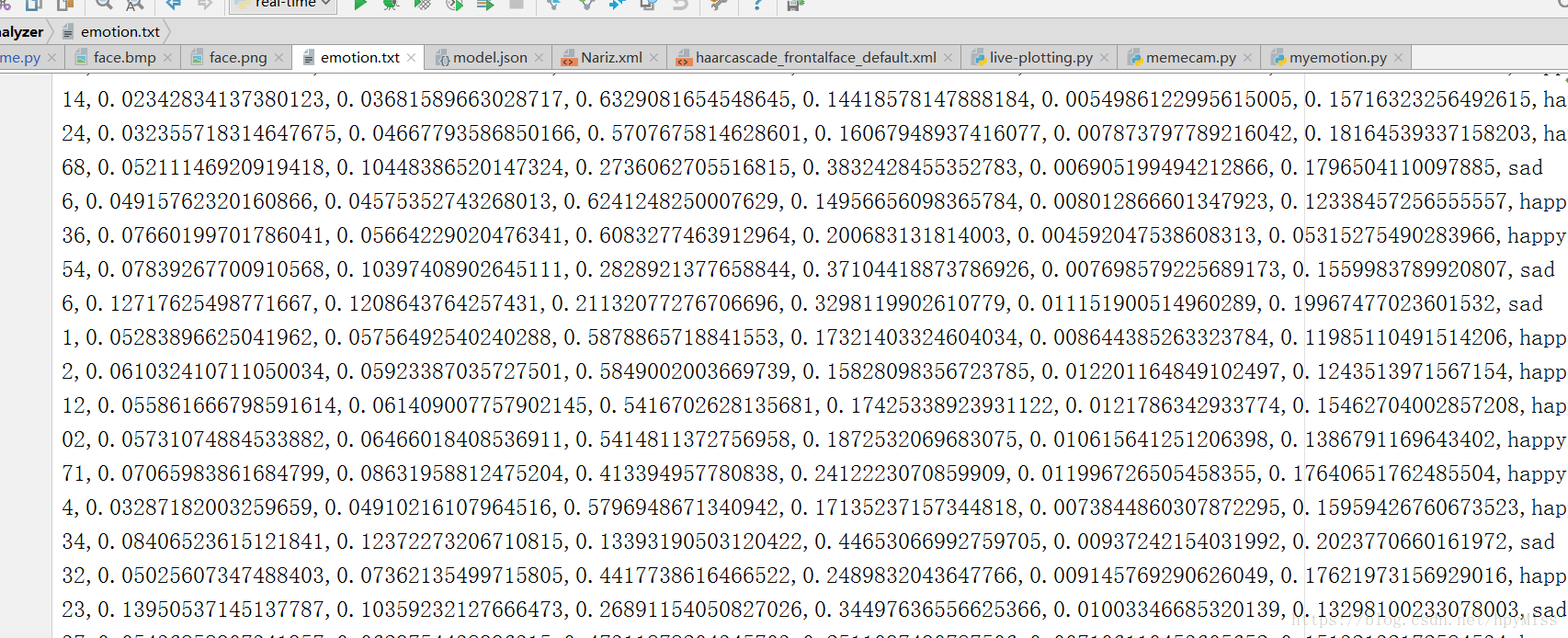

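- emotion.txt holds the results: whichever value is largest is the predicted emotion. This is only a prediction, but the results are still much better than in part (1).

- The complete source code, including the model, can be downloaded from here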
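To make the "largest value wins" rule for emotion.txt concrete, here is a small sketch that reads rows in the format the script writes (timestamp, six values, label) and recomputes the label from the values. The helper names parse_emotion_log and strongest_emotion are hypothetical, and the sample row is invented purely for illustration; it is not real output from the model.

```python
import csv
import io

# Label order copied from the script's emotion_labels list.
EMOTION_LABELS = ['angry', 'fear', 'happy', 'sad', 'surprise', 'neutral']


def parse_emotion_log(lines):
    """Yield (timestamp, values, label) for each logged row.

    Each row is assumed to be: timestamp, six values, label.
    """
    for row in csv.reader(lines):
        if len(row) != 8:
            continue  # skip blank or malformed rows
        timestamp = float(row[0])
        values = [float(value) for value in row[1:7]]
        yield timestamp, values, row[7]


def strongest_emotion(values):
    """Return the label whose value is largest."""
    return EMOTION_LABELS[values.index(max(values))]


# Invented sample row, standing in for a line of emotion.txt.
sample = io.StringIO("1500000000.0,0.05,0.02,0.80,0.03,0.06,0.04,happy\n")
for timestamp, values, label in parse_emotion_log(sample):
    print(timestamp, label, strongest_emotion(values) == label)
```

To run it against the real log, pass an open file instead of the sample, for example parse_emotion_log(open('emotion.txt')).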