Scraping Product Reviews from Vipshop (唯品会)

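The approach to scraping product reviews is much the same across the major shopping platforms; see the following article for reference.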

Link: https://blog.csdn.net/coffeetogether/article/details/114274960?spm=1001.2014.3001.5501

1. Find the target URL:

2. Inspect the response:

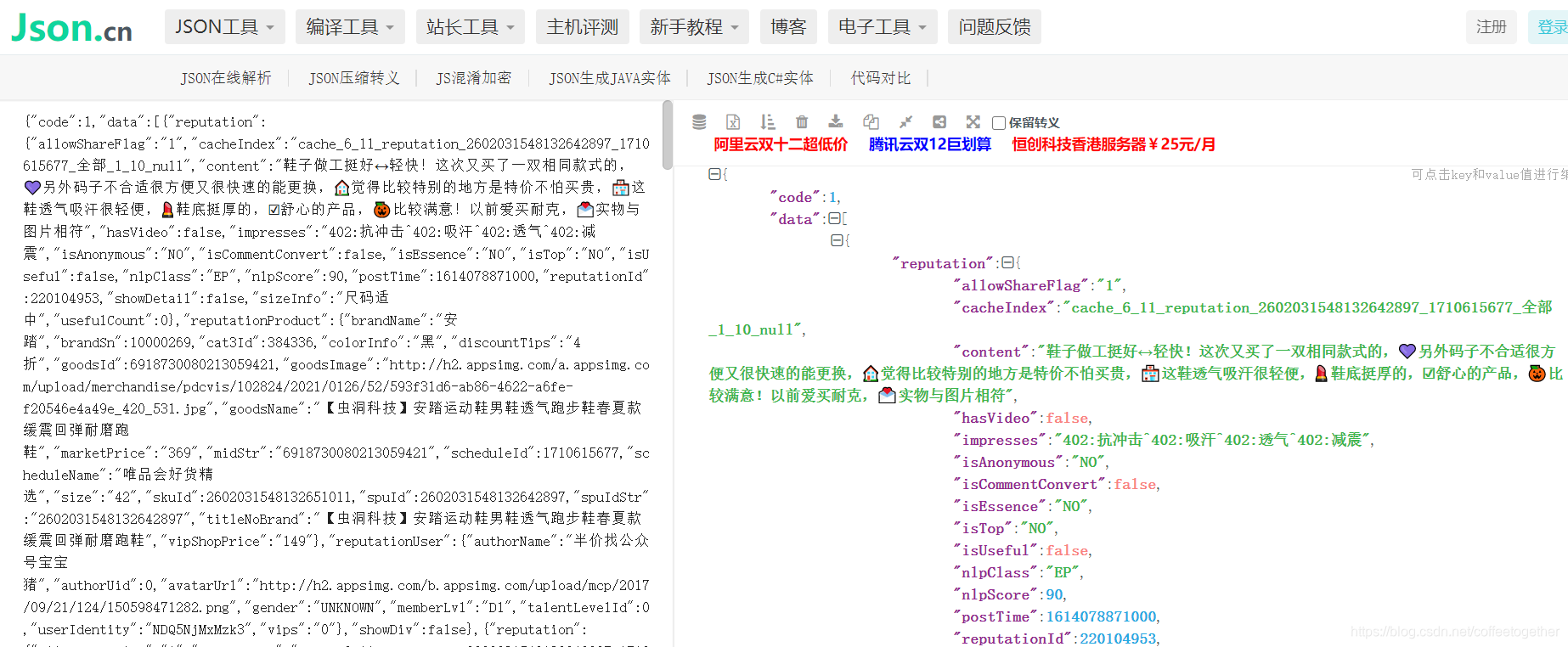

3. Strip the noise from the response data:

Note: in the code, we can strip this noise with a regular expression.

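After pasting the cleaned data into an online JSON parser, we can work out the JSONPath expressions for the review text and the user nickname.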
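
As a minimal sketch of this step: the response is JSONP, that is, JSON wrapped in a `getCommentDataCb(...)` call, so the wrapper has to come off before `json.loads` will accept it. The sample payload below is made up for illustration (only the `authorName` and `content` field names come from the script later in this post; the nesting is an assumption), and `find_all` is a hypothetical standard-library stand-in for a JSONPath query of the form `$..key`.

```python
import json
import re

# Hypothetical sample response; the real payload has more fields and its
# exact nesting is an assumption here.
sample = (
    'getCommentDataCb({"code": 1, "data": ['
    '{"reputation": {"authorName": "a***1", "content": "Nice fit :)"}},'
    '{"reputation": {"authorName": "b***2", "content": "Fast delivery"}}'
    ']})'
)


def strip_jsonp(text, callback="getCommentDataCb"):
    """Return the JSON text inside callback(...)."""
    # Greedy match with DOTALL so a ')' inside a review does not end the match early.
    match = re.search(re.escape(callback) + r"\((.*)\)", text, re.S)
    if match is None:
        raise ValueError("response is not wrapped in the expected callback")
    return match.group(1)


def find_all(node, key):
    """Collect every value stored under `key` at any depth (like `$..key`)."""
    found = []
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key:
                found.append(v)
            found.extend(find_all(v, key))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_all(item, key))
    return found


data = json.loads(strip_jsonp(sample))
names = find_all(data, "authorName")
comments = find_all(data, "content")
print(list(zip(names, comments)))
```

The first sample review deliberately contains a `)`: a non-greedy pattern such as `\((.*?)\)` would stop at that character and hand `json.loads` a truncated string.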

4. Find the pagination pattern:

https://mapi.vip.com/vips-mobile/rest/content/reputation/queryBySpuId_for_pc?callback=getCommentDataCb&app_name=shop_pc&app_version=4.0&warehouse=VIP_HZ&fdc_area_id=104101102&client=pc&mobile_platform=1&province_id=104101&api_key=70f71280d5d547b2a7bb370a529aeea1&user_id=&mars_cid=1611985620668_86046d3ae583d23339a1a310c41f271f&wap_consumer=a&spuId=2602031548132642897&brandId=1710615677&page=1&pageSize=10&timestamp=1614694759000&keyWordNlp=%E5%85%A8%E9%83%A8&_=1614694754945

https://mapi.vip.com/vips-mobile/rest/content/reputation/queryBySpuId_for_pc?callback=getCommentDataCb&app_name=shop_pc&app_version=4.0&warehouse=VIP_HZ&fdc_area_id=104101102&client=pc&mobile_platform=1&province_id=104101&api_key=70f71280d5d547b2a7bb370a529aeea1&user_id=&mars_cid=1611985620668_86046d3ae583d23339a1a310c41f271f&wap_consumer=a&spuId=2602031548132642897&brandId=1710615677&page=2&pageSize=10&timestamp=1614695348000&keyWordNlp=%E5%85%A8%E9%83%A8&_=1614695342959

https://mapi.vip.com/vips-mobile/rest/content/reputation/queryBySpuId_for_pc?callback=getCommentDataCb&app_name=shop_pc&app_version=4.0&warehouse=VIP_HZ&fdc_area_id=104101102&client=pc&mobile_platform=1&province_id=104101&api_key=70f71280d5d547b2a7bb370a529aeea1&user_id=&mars_cid=1611985620668_86046d3ae583d23339a1a310c41f271f&wap_consumer=a&spuId=2602031548132642897&brandId=1710615677&page=3&pageSize=10&timestamp=1614695378000&keyWordNlp=%E5%85%A8%E9%83%A8&_=1614695342960

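Comparing the URLs of the first three pages shows that the pagination is driven by the page parameter, while the trailing timestamp and _ parameters have no effect on the request. We can therefore remove those two parameters by hand.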
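
One way to drop those parameters by hand is to keep the query string as a dictionary and rebuild the URL for each page. The sketch below uses only the standard library; `build_url`, `BASE_URL`, and `BASE_PARAMS` are hypothetical names, the parameter values are copied from the captured URLs above, and it assumes (as observed above) that the server accepts requests without `timestamp` and `_`.

```python
from urllib.parse import urlencode

BASE_URL = "https://mapi.vip.com/vips-mobile/rest/content/reputation/queryBySpuId_for_pc"

# Query parameters from the captured requests, minus `timestamp` and `_`.
BASE_PARAMS = {
    "callback": "getCommentDataCb",
    "app_name": "shop_pc",
    "app_version": "4.0",
    "warehouse": "VIP_HZ",
    "fdc_area_id": "104101102",
    "client": "pc",
    "mobile_platform": "1",
    "province_id": "104101",
    "api_key": "70f71280d5d547b2a7bb370a529aeea1",
    "user_id": "",
    "mars_cid": "1611985620668_86046d3ae583d23339a1a310c41f271f",
    "wap_consumer": "a",
    "spuId": "2602031548132642897",
    "brandId": "1710615677",
    "pageSize": "10",
    "keyWordNlp": "全部",  # urlencode percent-encodes this as %E5%85%A8%E9%83%A8
}


def build_url(page):
    """Return the review URL for the given 1-based page number."""
    params = dict(BASE_PARAMS, page=page)
    return BASE_URL + "?" + urlencode(params)


for page in range(1, 4):
    print(build_url(page))
```

Keeping the parameters in a dictionary also makes it easy to point the same code at another product by changing `spuId` and `brandId`.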

With the analysis done, here is the code:

    import json
    import re

    import jsonpath
    import requests

    if __name__ == '__main__':
        # Ask how many pages of reviews to scrape
        pages = int(input('Number of review pages to scrape: '))

        # Build the request headers
        headers_ = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36',
            'Referer': 'https://detail.vip.com/',
            'Cookie': 'cps=adp%3Ag1o71nr0%3A%3A%3A%3A; vip_first_visitor=1; vip_address=%257B%2522pid%2522%253A%2522104101%2522%252C%2522cid%2522%253A%2522104101102%2522%252C%2522pname%2522%253A%2522%255Cu6cb3%255Cu5357%255Cu7701%2522%252C%2522cname%2522%253A%2522%255Cu5f00%255Cu5c01%255Cu5e02%2522%257D; vip_province=104101; vip_province_name=%E6%B2%B3%E5%8D%97%E7%9C%81; vip_city_name=%E5%BC%80%E5%B0%81%E5%B8%82; vip_city_code=104101102; vip_wh=VIP_HZ; vip_ipver=31; user_class=a; mars_sid=f59dfc669f4b384e51007fa4e7a9d864; PHPSESSID=1nqdfkfrkqprn0eqvenmdt32v7; mars_pid=0; visit_id=8482B9A56BD0ED0F7428399DD6B79874; VipUINFO=luc%3Aa%7Csuc%3Aa%7Cbct%3Ac_new%7Chct%3Ac_new%7Cbdts%3A0%7Cbcts%3A0%7Ckfts%3A0%7Cc10%3A0%7Crcabt%3A0%7Cp2%3A0%7Cp3%3A1%7Cp4%3A0%7Cp5%3A1%7Cul%3A3105; vip_tracker_source_from=; pg_session_no=16; mars_cid=1611985620668_86046d3ae583d23339a1a310c41f271f'
        }

        # Loop over the pages; page numbers start at 1
        for page in range(1, pages + 1):
            # Target URL; only the page parameter changes between requests
            url_ = f'https://mapi.vip.com/vips-mobile/rest/content/reputation/queryBySpuId_for_pc?callback=getCommentDataCb&app_name=shop_pc&app_version=4.0&warehouse=VIP_HZ&fdc_area_id=104101102&client=pc&mobile_platform=1&province_id=104101&api_key=70f71280d5d547b2a7bb370a529aeea1&user_id=&mars_cid=1611985620668_86046d3ae583d23339a1a310c41f271f&wap_consumer=a&spuId=2602031548132642897&brandId=1710615677&page={page}&pageSize=10&timestamp=1614695344000&keyWordNlp=%E5%85%A8%E9%83%A8&_=1614695342950'

            # Send the request and get the response
            response_ = requests.get(url_, headers=headers_)

            # Strip the getCommentDataCb(...) wrapper with a regular expression.
            # The match is greedy so that a ")" inside a review does not cut the JSON short.
            str_data = re.findall(r'getCommentDataCb\((.*)\)', response_.text, re.S)[0]

            # Convert the JSON response into Python data
            py_data = json.loads(str_data)

            # Extract each reviewer's nickname and review text
            # (jsonpath.jsonpath returns False when nothing matches)
            id_list = jsonpath.jsonpath(py_data, '$..authorName') or []
            comment_content = jsonpath.jsonpath(py_data, '$..content') or []

            # Append one {nickname: review} object per line to the output file
            with open('唯品会商品评论.json', 'a', encoding='utf-8') as f:
                for name, comment in zip(id_list, comment_content):
                    json_data = json.dumps({name: comment}, ensure_ascii=False) + ',\n'
                    f.write(json_data)
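
Note that the script above writes one `{...},` fragment per line, so the resulting file is not a single valid JSON document. One alternative, sketched here, is the JSON Lines layout: one complete JSON object per line with no trailing comma, so the file can be read back line by line. The `save_reviews` and `load_reviews` helpers, the record layout, and the sample reviews are all hypothetical.

```python
import json
import os
import tempfile


def save_reviews(path, names, comments):
    """Append one JSON object per review to `path` (JSON Lines)."""
    with open(path, "a", encoding="utf-8") as f:
        for name, comment in zip(names, comments):
            record = {"authorName": name, "content": comment}
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_reviews(path):
    """Read the file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Made-up sample data, written to a temporary directory.
path = os.path.join(tempfile.mkdtemp(), "reviews.jsonl")
save_reviews(path, ["a***1", "b***2"], ["很好", "Fast delivery"])
reviews = load_reviews(path)
print(reviews)
```

Storing the nickname and the review under fixed keys, rather than using the nickname itself as the key, keeps every record the same shape even when two reviewers share a masked nickname.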

Scraped 2 pages.

The output is as follows: