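I looked through several baselines. Some are too simple and some require dedicated datasets, but their code is still useful as a reference for how things are implemented.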

I. Uncertainties

https://github.com/hurrialice/uncertainties

Dataset generation:

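```python
import numpy as np

# test_abs, test_vec_size and mean_fun are defined elsewhere in the repo
x_test = np.linspace(-test_abs, test_abs, test_vec_size)  # evenly spaced test inputs
y_test = mean_fun(x_test)                                  # noise-free ground-truth mean
```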
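The repo's own `mean_fun`, `test_abs` and `test_vec_size` are not reproduced here. As a dependency-free sketch of what such a toy 1-D regression set can look like, the code below assumes a hypothetical `mean_fun = sin`, noise whose standard deviation grows with |x| (so there is aleatoric uncertainty to learn), and a test range wider than the training range (so epistemic uncertainty can show up away from the data). `linspace`, `noise_std` and `make_train_set` are illustrative helpers, not repo functions.

```python
import math
import random

# Hypothetical stand-ins: the repo defines its own mean_fun, test_abs and test_vec_size.
def mean_fun(x):
    return math.sin(x)

def noise_std(x):
    # Assumed heteroscedastic noise: standard deviation grows linearly with |x|.
    return 0.05 + 0.1 * abs(x)

def linspace(start, stop, num):
    """Pure-Python equivalent of np.linspace for num >= 2."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]

def make_train_set(train_abs, n, seed=0):
    """Sample n noisy training points with x uniform in [-train_abs, train_abs]."""
    rng = random.Random(seed)
    xs = [rng.uniform(-train_abs, train_abs) for _ in range(n)]
    ys = [mean_fun(x) + rng.gauss(0.0, noise_std(x)) for x in xs]
    return xs, ys

test_abs, test_vec_size = 4.0, 9
x_test = linspace(-test_abs, test_abs, test_vec_size)
y_test = [mean_fun(x) for x in x_test]   # noise-free targets for evaluation
x_train, y_train = make_train_set(train_abs=3.0, n=200)
```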

Model definition:

```python
import torch
import torch.nn.functional as F


class Net(torch.nn.Module):
    def __init__(self, p, logvar, n_feature, n_hidden, n_output):
        super().__init__()
        self.p = p              # dropout probability
        self.logvar = logvar    # whether to predict a log-variance (aleatoric) output
        self.hidden = torch.nn.Linear(n_feature, n_hidden)  # input -> hidden layer
        self.mid1 = torch.nn.Linear(n_hidden, n_hidden)     # hidden layer
        self.mid2 = torch.nn.Linear(n_hidden, n_hidden)     # hidden layer
        self.mid3 = torch.nn.Linear(n_hidden, n_hidden)     # hidden layer
        self.mid4 = torch.nn.Linear(n_hidden, n_hidden)     # hidden layer
        self.predict = torch.nn.Linear(n_hidden, n_output)  # mean output
        self.get_var = torch.nn.Linear(n_hidden, n_output)  # log-variance output

    def forward(self, x):
        x = F.relu(self.hidden(x))  # ReLU activation for each hidden layer
        x = F.relu(self.mid1(x))
        x = F.relu(self.mid2(x))
        x = F.relu(self.mid3(x))
        x = F.relu(self.mid4(x))
        # F.dropout defaults to training=True, so dropout stays on at test time (MC dropout)
        x = F.dropout(x, p=self.p)

        # predicted mean y and log-variance log(sigma^2)
        y = self.predict(x)
        if self.logvar:
            logvar = self.get_var(x)
        else:
            logvar = torch.zeros_like(y)  # unit variance, i.e. no aleatoric term
        return y, logvar
```

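At the last layer, the model uses `self.predict(x)` to produce the predicted value and `self.get_var(x)` to produce the aleatoric uncertainty, expressed as a log-variance (when `logvar` is disabled, the log-variance is fixed to zero).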

Loss function definition:

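```python
import torch
import torch.nn as nn


class aleatoric_loss(nn.Module):
    """Heteroscedastic regression loss; the network predicts logvar = log(sigma^2)."""

    def __init__(self):
        super().__init__()

    def forward(self, gt, pred_y, logvar):
        # residuals are down-weighted where the predicted variance is large;
        # the 0.5 * logvar term penalises predicting a large variance everywhere
        loss = torch.sum(0.5 * torch.exp(-logvar) * (gt - pred_y) ** 2 + 0.5 * logvar)
        return loss


LOSS = aleatoric_loss()
```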

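This matches the classic heteroscedastic (aleatoric) regression loss from *What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?*: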

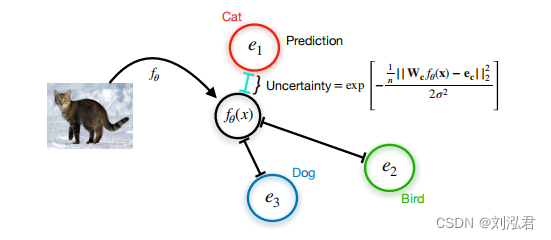
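Written out, with $s_i = \log \hat{\sigma}_i^2$ being the `logvar` output, $y_i$ being `gt` and $\hat{y}_i$ being `pred_y`, the summed loss in `aleatoric_loss` is the expression below. Setting its derivative with respect to $s_i$ to zero shows that the optimal predicted variance for a sample equals its squared residual:

$$
\mathcal{L} = \sum_{i}\left[\frac{1}{2}\,e^{-s_i}\bigl(y_i-\hat{y}_i\bigr)^2 + \frac{1}{2}\,s_i\right],
\qquad
\frac{\partial \mathcal{L}}{\partial s_i} = -\frac{1}{2}\,e^{-s_i}\bigl(y_i-\hat{y}_i\bigr)^2 + \frac{1}{2} = 0
\;\Rightarrow\;
\hat{\sigma}_i^2 = e^{s_i} = \bigl(y_i-\hat{y}_i\bigr)^2 .
$$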

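Model uncertainty

The model (epistemic) uncertainty here appears to be estimated with MC dropout: dropout is kept active at inference, and the spread of the predictions over repeated forward passes serves as the uncertainty.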
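A minimal, dependency-free sketch of MC dropout, assuming a hypothetical one-hidden-layer ReLU network with 1-D input (the weights `w1`, `b1`, `w2`, `b2` and the function name are illustrative): dropout stays on at prediction time, the network is run `T` times, and the variance of the outputs is read as model uncertainty. It uses inverted dropout (kept units are scaled by 1/(1-p)), which is also what PyTorch's `F.dropout` does.

```python
import random
import statistics

def mc_dropout_predict(x, w1, b1, w2, b2, p=0.2, T=100, seed=0):
    """Run T stochastic forward passes with dropout left ON (MC dropout).

    w1, b1: hidden-layer weights and biases (one per hidden unit, 1-D input).
    w2, b2: output weights (one per hidden unit) and output bias.
    Returns (predictive mean, epistemic variance) over the T samples.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(T):
        out = b2
        for wi, bi, vi in zip(w1, b1, w2):
            h = max(0.0, wi * x + bi)            # ReLU hidden unit
            keep = rng.random() >= p             # drop the unit with probability p
            h = h / (1.0 - p) if keep else 0.0   # inverted dropout scaling
            out += vi * h
        samples.append(out)
    return statistics.fmean(samples), statistics.pvariance(samples)
```

In the repo's `Net`, `F.dropout(x, p=self.p)` is called without `training=self.training`, and `training` defaults to `True`, so calling the model `T` times in a loop should produce the same kind of samples.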

II. ehr_ood_detection

https://github.com/Pacmed/ehr_ood_detection

Cloning the repo locally (or even just extracting the zip archive) fails with an error, so here is a copy with the img folder deleted: https://github.com/DhrLhj/ehr_ood_detection-master

https://arxiv.org/pdf/1810.05546.pdf

From the repo's docstring: the code implements a member of an anchored ensemble as described in [1] (the arXiv paper above). The main difference from regular ensembles of deep neural networks is a special kind of weight-decay regularization, which makes the whole process (approximately) Bayesian.
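To make the "special kind of weight decay" concrete, here is a small sketch of anchored ensembling on a 1-D linear model instead of a deep network (a simplifying assumption). Each member draws its own anchor from a Gaussian prior N(0, prior_std²) and is trained with an L2 penalty towards that anchor rather than towards zero, with strength λ = noise_std²/prior_std² following [1]; the spread of the members' predictions is the uncertainty estimate. Initialising each member at its anchor, plain gradient descent, and all helper names are illustrative choices, not the repo's code.

```python
import random
import statistics

def train_anchored_member(xs, ys, prior_std, noise_std, rng, lr=0.05, steps=2000):
    """Fit y ~ w*x + b with an L2 penalty pulling (w, b) towards a random anchor."""
    n = len(xs)
    w_anc, b_anc = rng.gauss(0.0, prior_std), rng.gauss(0.0, prior_std)  # anchor ~ prior
    w, b = w_anc, b_anc                   # start at the anchor (simplifying choice)
    lam = noise_std ** 2 / prior_std ** 2
    for _ in range(steps):
        # gradient of (1/n)*sum (w*x + b - y)^2 + (lam/n)*((w - w_anc)^2 + (b - b_anc)^2)
        gw = gb = 0.0
        for x, y in zip(xs, ys):
            r = w * x + b - y
            gw += 2.0 * r * x
            gb += 2.0 * r
        gw = (gw + 2.0 * lam * (w - w_anc)) / n
        gb = (gb + 2.0 * lam * (b - b_anc)) / n
        w -= lr * gw
        b -= lr * gb
    return w, b

def anchored_ensemble(xs, ys, n_members=5, prior_std=1.0, noise_std=0.1, seed=0):
    rng = random.Random(seed)
    return [train_anchored_member(xs, ys, prior_std, noise_std, rng) for _ in range(n_members)]

def predict(members, x):
    """Ensemble mean and variance (the variance is the epistemic uncertainty)."""
    preds = [w * x + b for w, b in members]
    return statistics.fmean(preds), statistics.pvariance(preds)
```

With `noise_std` small relative to `prior_std`, the anchors pull only weakly, so members agree closely where the data constrain them and drift apart mainly where they do not.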

Overall, the codebase is rather messy and not closely related to our task.

III. deep_uncertainty_estimation

https://github.com/uzh-rpg/deep_uncertainty_estimation

I tried training with train.py and ran into the following problems:

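- The _adf models cannot be trained.

- Training them directly does not converge: the loss is always NaN.

- The official repo gives no example of training an _adf model.

- It only gives an example that trains resnet18 (with dropout) and runs inference with resnet18_dropout_adf:

  `--load_model_name resnet18_dropout --test_model_name resnet18_dropout_adf`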

Overall, the code is fairly simple. It uses CIFAR-10, which I have already downloaded.

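The repo modifies ResNet so that the model can propagate uncertainty using already-trained weights; other, more complex networks would have to be modified by hand.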
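The idea behind the _adf layers is assumed density filtering: each activation is treated as an independent Gaussian, and its mean and variance are propagated layer by layer, so a trained network can output a variance without retraining. The sketch below shows that idea for a linear layer and a ReLU in plain Python; the function names are illustrative, not the repo's API, and the ReLU step uses the standard moment-matched mean and variance of a rectified Gaussian.

```python
import math

def _pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def _cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def adf_linear(mean, var, weights, bias):
    """Propagate independent Gaussian inputs through y = W x + b.

    weights: list of rows, one per output unit. Because the inputs are assumed
    independent, the output variance is sum_j W_ij^2 * var_j.
    """
    out_mean = [sum(w * m for w, m in zip(row, mean)) + b for row, b in zip(weights, bias)]
    out_var = [sum(w * w * v for w, v in zip(row, var)) for row in weights]
    return out_mean, out_var

def adf_relu(mean, var, eps=1e-12):
    """Moment-match ReLU(x) for x ~ N(mean, var) (rectified Gaussian moments)."""
    out_mean, out_var = [], []
    for m, v in zip(mean, var):
        s = math.sqrt(v + eps)          # eps avoids dividing by zero when var == 0
        z = m / s
        cdf, pdf = _cdf(z), _pdf(z)
        m1 = m * cdf + s * pdf                       # E[ReLU(x)]
        m2 = (m * m + s * s) * cdf + m * s * pdf     # E[ReLU(x)^2]
        out_mean.append(m1)
        out_var.append(max(m2 - m1 * m1, 0.0))
    return out_mean, out_var
```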

Source-code reading notes:

- A General Framework for Uncertainty Estimation in Deep Learning: source-code walkthrough

- A General Framework for Uncertainty Estimation in Deep Learning: source-code walkthrough (part 2)

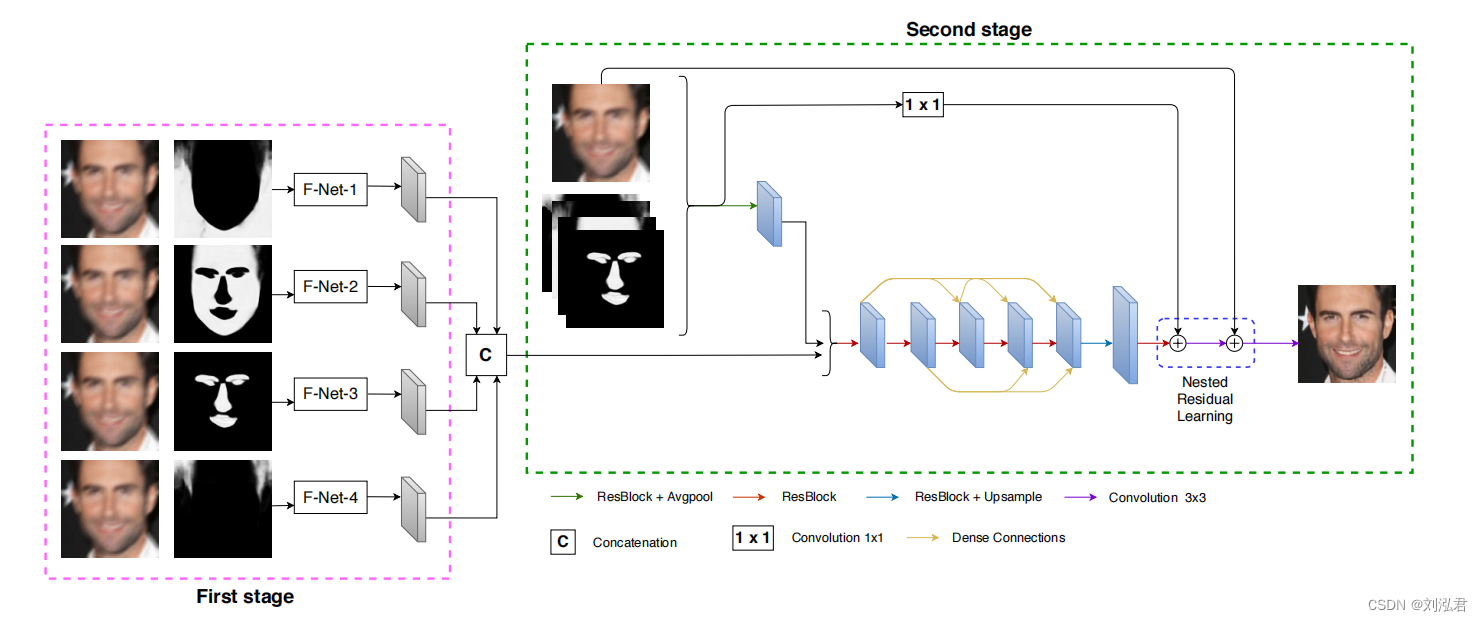

IV. UMSN-Face-Deblurring


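Aimed at face deblurring, this work uses semantic-segmentation masks for several facial regions. While computing the loss, it also estimates how much each mask contributes to the task, a value c (the paper calls it uncertainty, although it is not really related to uncertainty), and weights the loss by c for backpropagation.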
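How the UMSN code weights its loss is not reproduced here. As an assumption, a common form of confidence-weighted loss is sketched below: each region's loss L_i is multiplied by a confidence c_i in (0, 1] (for example a sigmoid output), and a -λ·log c_i term keeps the network from driving every c_i to 0. Minimising c·L - λ·log c over c gives c = λ/L, so regions with large error receive low weight. The function name and `lam` are hypothetical.

```python
import math

def confidence_weighted_loss(errors, confidences, lam=0.1):
    """Sum of per-region losses weighted by confidences c_i (assumed form).

    errors: per-region (e.g. per-mask) losses L_i.
    confidences: c_i in (0, 1]; the -lam * log(c_i) term stops c_i -> 0.
    """
    total = 0.0
    for err, c in zip(errors, confidences):
        total += c * err - lam * math.log(c)
    return total
```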

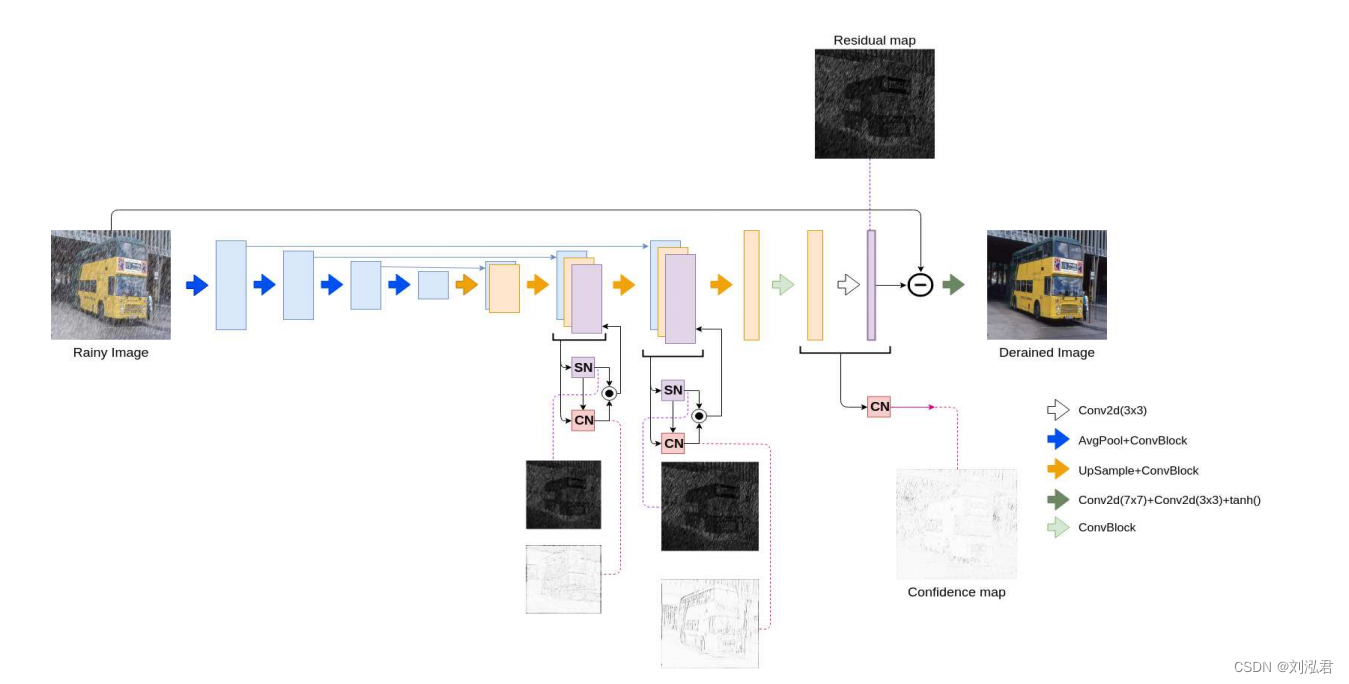

V. UMRL--using-Cycle-Spinning

https://github.com/rajeevyasarla/UMRL--using-Cycle-Spinning

The paper's content fits our task quite well; the code itself is still to be reviewed.

VI. Uncertainty Baselines

https://github.com/google/uncertainty-baselines/tree/master

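The repo contains many baselines (last updated about two years ago). They can be read alongside the survey paper to see how each method is implemented and how it performs. To be reviewed.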

VII. Deterministic Uncertainty Quantification

https://github.com/y0ast/deterministic-uncertainty-quantification

As the title says, this implements uncertainty estimation with a single deterministic neural network. To be reviewed.
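DUQ replaces the softmax with an RBF kernel between a class-specific embedding of the input, W_c f(x), and a per-class centroid e_c; the largest kernel value serves as the certainty. A minimal sketch of that scoring step, assuming the embeddings and centroids are already given as plain lists (training, the centroid update and the gradient penalty are omitted; the function names and the `length_scale` default are illustrative):

```python
import math

def duq_scores(feature_by_class, centroids, length_scale=0.1):
    """RBF kernel value K_c for each class.

    feature_by_class[c] is the class-specific embedding W_c f(x); centroids[c] is e_c.
    K_c = exp(-mean_j (W_c f(x) - e_c)_j^2 / (2 * length_scale^2)).
    """
    scores = []
    for emb, cen in zip(feature_by_class, centroids):
        sq = sum((a - b) ** 2 for a, b in zip(emb, cen)) / len(emb)
        scores.append(math.exp(-sq / (2 * length_scale ** 2)))
    return scores

def duq_predict(feature_by_class, centroids, length_scale=0.1):
    """Return (predicted class, certainty); low certainty means far from every centroid."""
    scores = duq_scores(feature_by_class, centroids, length_scale)
    label = max(range(len(scores)), key=scores.__getitem__)
    return label, scores[label]
```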

VIII. What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?

https://github.com/hmi88/what

An unofficial implementation of the classic "What Uncertainties" paper. To be reviewed.

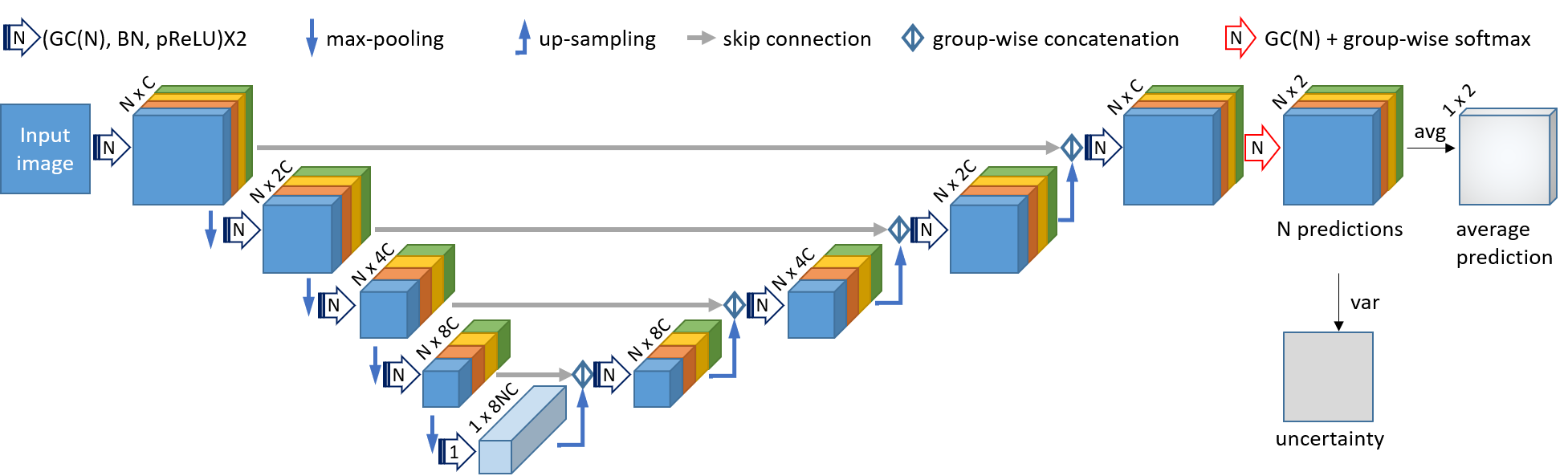
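The `Net` in the Uncertainties section returns both a mean and a log-variance while keeping dropout on at test time, which are the two ingredients of this paper's combined model. Given T sampled pairs (ŷ_t, s_t = log σ_t²) from such a model, the paper's predictive-variance approximation splits into an epistemic part (the variance of the sampled means) and an aleatoric part (the average of exp(s_t)). A plain-Python sketch, with an illustrative function name:

```python
import math
import statistics

def predictive_uncertainty(means, logvars):
    """Combine T MC-dropout samples (y_hat_t, s_t = log sigma_t^2).

    epistemic = variance of the sampled means,
    aleatoric = average predicted variance exp(s_t),
    total     = epistemic + aleatoric.
    """
    epistemic = statistics.pvariance(means)
    aleatoric = statistics.fmean([math.exp(s) for s in logvars])
    return epistemic, aleatoric, epistemic + aleatoric
```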

IX. UGIR: Uncertainty-Guided Interactive Refinement for Segmentation

https://github.com/HiLab-git/UGIR

To be reviewed.

Still to read:

The paper *Uncertainty-Driven Dehazing Network*; I have emailed the authors to request the source code.