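Testing mAP

The previous tutorial trained a dataset with the mmrotate framework and evaluated it at an IoU threshold of 0.5 (AP50).

I also want the mAP at an IoU threshold of 0.75 (AP75), and the mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05. This takes the following steps:

Testing AP75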
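To make the two targets concrete, here is a minimal sketch of the threshold grid behind the averaged metric. The variable names are illustrative and not part of mmrotate; the grid itself matches the list used in evaluation.py further down.

```python
# IoU thresholds 0.50, 0.55, ..., 0.95 (ten values, step 0.05).
iou_thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]

# Position of each named metric in that list.
ap50_index = iou_thresholds.index(0.5)
ap75_index = iou_thresholds.index(0.75)

print(iou_thresholds)
print(ap50_index, ap75_index)
```

AP50 and AP75 are single entries of this grid, while the averaged mAP is the mean of the AP values over all ten thresholds.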

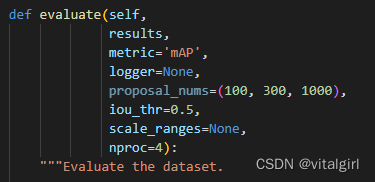

1. Modify mmrotate/datasets/dota.py

There is one parameter to change:

1) iou_thr: the IoU threshold; change it to 0.75

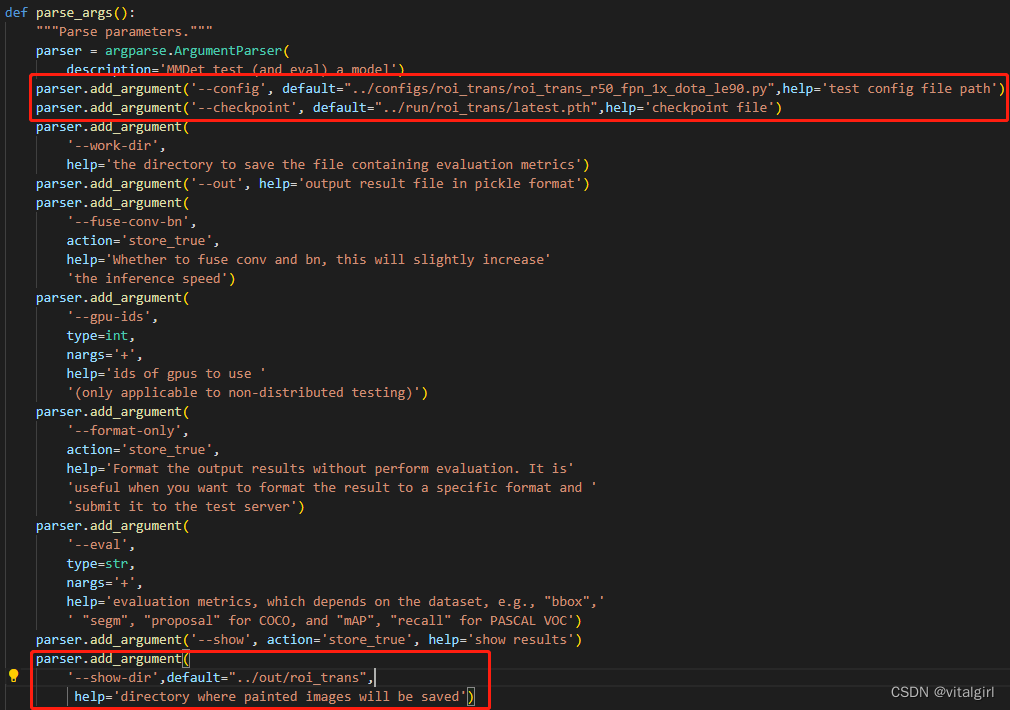
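As a rough illustration of why raising iou_thr lowers the score, the sketch below checks one hypothetical detection against both thresholds. The function name and the IoU value are made up for the example; whether the boundary case uses `>` or `>=` depends on the implementation.

```python
def is_match(iou, iou_thr):
    """A detection counts as a match only if its IoU clears the threshold."""
    return iou > iou_thr

iou = 0.6  # hypothetical overlap between one detection and its ground truth
print(is_match(iou, 0.5))
print(is_match(iou, 0.75))
```

The same detection is a true positive at 0.5 but a false positive at 0.75, so AP75 can never exceed AP50.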

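2. Modify the parameters in test.py

There are three main parameters:

1) config: the model config file;

2) checkpoint: the weights file produced by training;

3) show-dir: the directory where the prediction results are saved

Note: the test dataset is the one whose path was set in step 4 of the training tutorial.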

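3. Run the command

    python test.py --eval mAP

Testing AP (mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05)

This requires some additional code.

1. Modify mmrotate/datasets/dota.py

Add the following method to class DOTADataset(CustomDataset):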

    def save_dota_txt(self, results, outfolder, conf_thresh=0.01):
        """Write the detections of each image to a DOTA-style txt file."""
        import math

        if not os.path.exists(outfolder):
            os.mkdir(outfolder)
        for i in range(len(self)):
            imgname = self.data_infos[i]['filename']
            bboxresult = results[i]
            txt_name = os.path.join(outfolder, imgname.split('.')[0] + '.txt')
            with open(txt_name, 'w') as f:
                for cls, bboxes in zip(self.CLASSES, bboxresult):
                    for box in bboxes:
                        # skip low-confidence detections
                        if box[-1] < conf_thresh:
                            continue
                        xc, yc, w, h, ag, score = box.tolist()
                        # half-extent vectors along the box width and height
                        wx, wy = w / 2 * math.cos(ag), w / 2 * math.sin(ag)
                        hx, hy = -h / 2 * math.sin(ag), h / 2 * math.cos(ag)
                        p1 = (xc - wx - hx, yc - wy - hy)
                        p2 = (xc + wx - hx, yc + wy - hy)
                        p3 = (xc + wx + hx, yc + wy + hy)
                        p4 = (xc - wx + hx, yc - wy + hy)
                        ps = np.concatenate([p1, p2, p3, p4])
                        points = ' '.join(ps.astype(float).astype(str))
                        # soft label and eval result
                        f.write(points + ' ' + cls + ' ' + str(box[-1]) + '\n')
                        # hard label
                        # if float(box[-1]) > 0.1:
                        #     f.write(points + ' ' + cls + ' 0\n')
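The corner computation in save_dota_txt can be checked in isolation. The sketch below repeats the same arithmetic in a standalone helper; the name obb_to_corners is hypothetical, and it assumes the angle is given in radians, as the cos/sin calls in the method imply.

```python
import math

def obb_to_corners(xc, yc, w, h, angle):
    """Return the four corners of a rotated box; angle is in radians."""
    wx, wy = w / 2 * math.cos(angle), w / 2 * math.sin(angle)
    hx, hy = -h / 2 * math.sin(angle), h / 2 * math.cos(angle)
    return [
        (xc - wx - hx, yc - wy - hy),
        (xc + wx - hx, yc + wy - hy),
        (xc + wx + hx, yc + wy + hy),
        (xc - wx + hx, yc - wy + hy),
    ]

# Axis-aligned case: a 4 x 2 box centred at (10, 10).
corners = obb_to_corners(10.0, 10.0, 4.0, 2.0, 0.0)
print(corners)

# Same box rotated by 90 degrees; rounded to hide floating-point noise.
rotated = obb_to_corners(10.0, 10.0, 4.0, 2.0, math.pi / 2)
print([(round(x, 6), round(y, 6)) for x, y in rotated])
```

With a zero angle the corners are simply the centre plus or minus half the width and height, which is a quick way to confirm the sign conventions before trusting the rotated output.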

2. Use evaluation.py and adjust the mAP threshold step

    import numpy as np
    import os
    from shapely.geometry import Polygon
    import sys
    sys.path.append('/home/disk/bing/mmrotate-main/DOTA_devkit')
    import polyiou

    def parse_gt(filename):
        """
        :param filename: ground truth file to parse
        :return: all instances in a picture
        """
        objects = []
        with open(filename, 'r') as f:
            for line in f:
                splitlines = line.strip().split(' ')
                # skip header lines and anything without 8 coordinates + name
                if len(splitlines) < 9:
                    continue
                object_struct = {}
                object_struct['name'] = splitlines[8]
                # the 10th field is the difficulty flag in label files and the
                # confidence score in prediction files; default to 0 if absent
                object_struct['difficult'] = (
                    float(splitlines[9]) if len(splitlines) > 9 else 0.0)
                object_struct['bbox'] = [float(v) for v in splitlines[:8]]
                objects.append(object_struct)
        return objects

    def voc_ap(rec, prec, use_07_metric=False):
        """ ap = voc_ap(rec, prec, [use_07_metric])
        Compute VOC AP given precision and recall.
        If use_07_metric is true, uses the
        VOC 07 11 point method (default:False).
        """
        if use_07_metric:
            # 11 point metric
            ap = 0.
            for t in np.arange(0., 1.1, 0.1):
                if np.sum(rec >= t) == 0:
                    p = 0
                else:
                    p = np.max(prec[rec >= t])
                ap = ap + p / 11.
        else:
            # correct AP calculation
            # first append sentinel values at the end
            mrec = np.concatenate(([0.], rec, [1.]))
            mpre = np.concatenate(([0.], prec, [0.]))
            # compute the precision envelope
            for i in range(mpre.size - 1, 0, -1):
                mpre[i - 1] = np.maximum(mpre[i - 1], mpre[i])
            # to calculate area under PR curve, look for points
            # where X axis (recall) changes value
            i = np.where(mrec[1:] != mrec[:-1])[0]
            # and sum (\Delta recall) * prec
            ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])
        return ap

    def parse_predict(detpath, classname):
        image_ids = []
        confidence = []
        BB = []
        files = os.listdir(detpath)
        for file in files:
            image_name = file.split('.')[0]
            # prediction files share the label layout, so parse_gt is reused
            R = [
                obj for obj in parse_gt(os.path.join(detpath, file))
                if obj['name'] == classname
            ]
            bbox = [x['bbox'] for x in R]
            # in prediction files the 'difficult' field holds the score
            scores = [x['difficult'] for x in R]
            image_names = [image_name for x in R]
            image_ids += image_names
            confidence += scores
            BB += bbox
        return np.array(image_ids), np.array(confidence), np.array(BB)

    def cal_iou(g, p):
        g = np.asarray(g)
        p = np.asarray(p)
        g = Polygon(g[:8].reshape((4, 2)))
        #print('p:', p)
        p = Polygon(p[:8].reshape((4, 2)))
        if not g.is_valid or not p.is_valid:
            return 0
        inter = Polygon(g).intersection(Polygon(p)).area
        union = g.area + p.area - inter
        if union == 0:
            return 0
        else:
            return inter / union

    def voc_eval(
            detpath,
            annopath,
            classname,
            # cachedir,
            ovthresh=0.5,
            use_07_metric=False):
        recs = {}
        for txt in os.listdir(annopath):
            recs[txt[:-4]] = parse_gt(os.path.join(annopath, txt))
        class_recs = {}
        npos = 0
        for txt in os.listdir(annopath):
            R = [obj for obj in recs[txt[:-4]] if obj['name'] == classname]
            bbox = np.array([x['bbox'] for x in R])
            difficult = np.array([False for x in R])
            det = [False] * len(R)
            npos = npos + len(R)
            class_recs[txt[:-4]] = {
                'bbox': bbox,
                'difficult': difficult,
                'det': det
            }
        image_ids, confidence, BB = parse_predict(detpath, classname)
        sorted_ind = np.argsort(-confidence)
        if BB.shape[0] == 0:
            return 0, 0, 0
        BB = BB[sorted_ind, :]
        image_ids = [image_ids[x] for x in sorted_ind]
        nd = len(image_ids)
        tp = np.zeros(nd)
        fp = np.zeros(nd)
        for d in range(nd):
            R = class_recs[image_ids[d]]
            bb = BB[d, :].astype(float)
            ovmax = -np.inf
            BBGT = R['bbox'].astype(float)
            ## compute det bb with each BBGT
            if BBGT.size > 0:
                # overlaps = np.array([cal_iou(bb_GT, bb) for bb_GT in BBGT])
                # ovmax = np.max(overlaps)
                # jmax = np.argmax(overlaps)
                BBGT_xmin = np.min(BBGT[:, 0::2], axis=1)
                BBGT_ymin = np.min(BBGT[:, 1::2], axis=1)
                BBGT_xmax = np.max(BBGT[:, 0::2], axis=1)
                BBGT_ymax = np.max(BBGT[:, 1::2], axis=1)
                bb_xmin = np.min(bb[0::2])
                bb_ymin = np.min(bb[1::2])
                bb_xmax = np.max(bb[0::2])
                bb_ymax = np.max(bb[1::2])
                ixmin = np.maximum(BBGT_xmin, bb_xmin)
                iymin = np.maximum(BBGT_ymin, bb_ymin)
                ixmax = np.minimum(BBGT_xmax, bb_xmax)
                iymax = np.minimum(BBGT_ymax, bb_ymax)
                iw = np.maximum(ixmax - ixmin + 1., 0.)
                ih = np.maximum(iymax - iymin + 1., 0.)
                inters = iw * ih
                # union
                uni = ((bb_xmax - bb_xmin + 1.) * (bb_ymax - bb_ymin + 1.) +
                       (BBGT_xmax - BBGT_xmin + 1.) *
                       (BBGT_ymax - BBGT_ymin + 1.) - inters)
                overlaps = inters / uni
                BBGT_keep_mask = overlaps > 0
                BBGT_keep = BBGT[BBGT_keep_mask, :]
                BBGT_keep_index = np.where(overlaps > 0)[0]
                # pdb.set_trace()

                def calcoverlaps(BBGT_keep, bb):
                    overlaps = []
                    for index, GT in enumerate(BBGT_keep):
                        overlap = polyiou.iou_poly(
                            polyiou.VectorDouble(BBGT_keep[index]),
                            polyiou.VectorDouble(bb))
                        overlaps.append(overlap)
                    return overlaps

                if len(BBGT_keep) > 0:
                    overlaps = calcoverlaps(BBGT_keep, bb)
                    ovmax = np.max(overlaps)
                    jmax = np.argmax(overlaps)
                    # pdb.set_trace()
                    jmax = BBGT_keep_index[jmax]
            if ovmax > ovthresh:
                if not R['difficult'][jmax]:
                    if not R['det'][jmax]:
                        tp[d] = 1.
                        R['det'][jmax] = 1
                    else:
                        fp[d] = 1.
            else:
                fp[d] = 1.
        # print('check fp:', sum(fp))
        # print('check tp', sum(tp))
        # print('npos num:', npos)
        fp = np.cumsum(fp)
        tp = np.cumsum(tp)
        rec = tp / float(npos)
        prec = tp / np.maximum(tp + fp, np.finfo(np.float64).eps)
        ap = voc_ap(rec, prec, use_07_metric)
        return rec, prec, ap

    def evalution(detpath, annopath):
        # predict folder
        # detpath = '/home/cv123/data/zyj/ReDet_back/eval/predota_kaggle768_on_fair'
        print(detpath)
        print(annopath)
        # # gt folder
        # classes = ['plane', 'baseball-diamond', 'bridge', 'ground-track-field',
        #            'small-vehicle', 'large-vehicle', 'ship', 'tennis-court',
        #            'basketball-court', 'storage-tank', 'soccer-ball-field',
        #            'roundabout', 'harbor', 'swimming-pool', 'helicopter']
        classes = ['ship',]
        ap_50 = 0
        ap_75 = 0
        mean_ap = 0  # renamed from `map` to avoid shadowing the builtin
        for cls in classes:
            aps = []
            # IoU thresholds from 0.5 to 0.95 in steps of 0.05
            for iou_thr in [0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95]:
                rec, prec, ap = voc_eval(
                    detpath, annopath, cls, ovthresh=iou_thr, use_07_metric=True)
                aps.append(ap * 100)
            ap_50 += aps[0]
            ap_75 += aps[5]
            mean_ap += np.array(aps).mean()
            print(cls, ' ', 'AP50: {:.2f}\tAP75: {:.2f}'.format(aps[0], aps[5]))
        print('AP50: {:.2f}\tAP75: {:.2f}\t mAP: {:.2f}'.format(
            ap_50 / len(classes), ap_75 / len(classes), mean_ap / len(classes)))
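To see what voc_eval and voc_ap compute, it helps to run the numbers on a tiny case. The sketch below is a pure-Python re-statement of the same steps (cumulative precision/recall, then the two AP variants); the function names are illustrative and the three detections are made up.

```python
def precision_recall(tp_flags, npos):
    """Cumulative precision/recall for detections sorted by descending score."""
    rec, prec, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += flag
        rec.append(tp / npos)
        prec.append(tp / k)
    return rec, prec

def ap_11_point(rec, prec):
    """VOC07 11-point AP: mean of the best precision at recall >= 0.0 ... 1.0."""
    total = 0.0
    for i in range(11):
        t = i / 10
        candidates = [p for r, p in zip(rec, prec) if r >= t]
        total += max(candidates) if candidates else 0.0
    return total / 11

def ap_area(rec, prec):
    """Area under the monotone precision envelope (use_07_metric=False)."""
    mrec = [0.0] + list(rec) + [1.0]
    mpre = [0.0] + list(prec) + [0.0]
    for i in range(len(mpre) - 1, 0, -1):
        mpre[i - 1] = max(mpre[i - 1], mpre[i])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]
               for i in range(len(mrec) - 1) if mrec[i + 1] != mrec[i])

# Three detections (hit, miss, hit) against two ground-truth boxes.
rec, prec = precision_recall([1, 0, 1], npos=2)
print(rec, prec)
print(round(ap_11_point(rec, prec), 4), round(ap_area(rec, prec), 4))
```

The two variants give slightly different values on the same curve (about 0.8485 versus 0.8333 here), so results are only comparable when use_07_metric is set the same way in every run.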

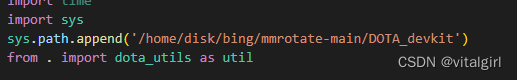

There are two main settings to change:

1) sys.path.append('/home/disk/bing/mmrotate-main/DOTA_devkit')

The script relies on DOTA_devkit; evaluation.py is usually placed in the same directory as the DOTA_devkit folder.

2) classes = ['ship',]

3. Modify DOTA_devkit/ResultMerge_multi_process.py

Update the path in sys.path.append('/home/disk/bing/mmrotate-main/DOTA_devkit').

4. Use the following test.py
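The classes list has to match the category names that actually appear in the label files. As a convenience, a small helper along the following lines could list them; collect_class_names is a hypothetical function, not part of DOTA_devkit or mmrotate, and the label file in the demo is made up.

```python
import os
import tempfile

def collect_class_names(annopath):
    """Return the sorted class names found in DOTA-style label files."""
    names = set()
    for fname in os.listdir(annopath):
        if not fname.endswith('.txt'):
            continue
        with open(os.path.join(annopath, fname), 'r') as f:
            for line in f:
                parts = line.strip().split(' ')
                if len(parts) < 9:  # header or malformed line
                    continue
                names.add(parts[8])
    return sorted(names)

# Self-contained demo on a temporary folder with one made-up label file.
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, 'P0001.txt'), 'w') as f:
        f.write('imagesource:GoogleEarth\n')
        f.write('gsd:0.15\n')
        f.write('10 10 50 10 50 30 10 30 ship 0\n')
        f.write('60 60 90 60 90 80 60 80 harbor 1\n')
    found = collect_class_names(tmp)
print(found)
```

Running it on the real annopath before evaluation makes it easy to spot a class name that is misspelled in the classes list.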

    import argparse
    import os
    import os.path as osp
    import time
    import warnings
    import mmcv
    import torch
    from mmcv import Config, DictAction
    from mmcv.cnn import fuse_conv_bn
    from mmcv.parallel import MMDataParallel, MMDistributedDataParallel
    from mmcv.runner import (get_dist_info, init_dist, load_checkpoint,
                             wrap_fp16_model)
    from mmdet.apis import multi_gpu_test, single_gpu_test
    from mmdet.datasets import build_dataloader, replace_ImageToTensor
    from mmrotate.datasets import build_dataset
    from mmrotate.models import build_detector
    from mmrotate.utils import setup_multi_processes, compat_cfg
    from DOTA_devkit.ResultMerge_multi_process import mergebypoly2
    from evaluation import evalution

    def forward(config_path, checkpointpath, test_img_path, outpath, imgsize, batchsize):
        cfg = Config.fromfile(config_path)
        cfg = compat_cfg(cfg)
        # set multi-process settings
        setup_multi_processes(cfg)
        cfg.model.pretrained = None
        if cfg.model.get('neck'):
            if isinstance(cfg.model.neck, list):
                for neck_cfg in cfg.model.neck:
                    if neck_cfg.get('rfp_backbone'):
                        if neck_cfg.rfp_backbone.get('pretrained'):
                            neck_cfg.rfp_backbone.pretrained = None
            elif cfg.model.neck.get('rfp_backbone'):
                if cfg.model.neck.rfp_backbone.get('pretrained'):
                    cfg.model.neck.rfp_backbone.pretrained = None
        samples_per_gpu = batchsize
        distributed = False
        test_dict = dict(
            type='DOTADataset',
            test_mode=True,
            img_prefix=test_img_path,
            ann_file=test_img_path,
            pipeline=[
                dict(type='LoadImageFromFile'),
                dict(
                    type='MultiScaleFlipAug',
                    img_scale=(imgsize, imgsize),
                    flip=False,
                    transforms=[
                        dict(type='RResize'),
                        dict(
                            type='Normalize',
                            mean=[123.675, 116.28, 103.53],
                            std=[58.395, 57.12, 57.375],
                            to_rgb=True),
                        dict(type='Pad', size_divisor=32),
                        dict(type='DefaultFormatBundle'),
                        dict(type='Collect', keys=['img'])
                    ])
            ],
            version='le90')
        dataset = build_dataset(test_dict)
        data_loader = build_dataloader(
            dataset,
            samples_per_gpu=samples_per_gpu,
            workers_per_gpu=min(20, samples_per_gpu),
            dist=distributed,
            shuffle=False)
        # build the model and load checkpoint
        cfg.model.train_cfg = None
        model = build_detector(cfg.model, test_cfg=cfg.get('test_cfg'))
        fp16_cfg = cfg.get('fp16', None)
        if fp16_cfg is not None:
            wrap_fp16_model(model)
        checkpoint = load_checkpoint(model, checkpointpath, map_location='cpu')
        if 'CLASSES' in checkpoint.get('meta', {}):
            model.CLASSES = checkpoint['meta']['CLASSES']
        else:
            model.CLASSES = dataset.CLASSES
        if not distributed:
            model = MMDataParallel(model, device_ids=range(1))
            outputs = single_gpu_test(model, data_loader)
        dataset.save_dota_txt(outputs, outfolder=outpath)

    if __name__ == '__main__':
        # cfg and checkpoint
        config_path = "/home/disk/bing/mmrotate-main/configs/oriented_reppoints/oriented_reppoints_r50_fpn_1x_dota_le135.py"
        checkpointpath = "/home/disk/bing/mmrotate-main/run/oriented_reppoints/epoch_12.pth"
        # test
        annopath = "/home/disk/bing/datasets/dota/val/labels"
        test_img_path = "/home/disk/bing/datasets/dota/val/images"
        # output txt folder
        outpath = "/home/disk/bing/datasets/dota/val/oriented_reppoints_epoch12_test"
        img_size = 1024
        batchsize = 2
        # forward
        forward(config_path, checkpointpath, test_img_path, outpath, img_size, batchsize)
        dst_folder = outpath
        evalution(dst_folder, annopath)

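There are five main parameters:

1) config_path: the model config file

2) checkpointpath: the trained weights file

3) annopath: the folder with the ground-truth label files

4) test_img_path: the folder with the test images

5) outpath: the folder where the prediction txt files are written

test.py is usually placed in the same directory as evaluation.py.

5. Run the command

    python test.py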
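The script imports argparse but hard-codes the five paths. If editing the file for every run becomes tedious, one option is to read them from the command line. The following is only a sketch: parse_args and its argument names are assumptions for illustration, and the paths in the demo call are placeholders.

```python
import argparse

def parse_args(argv=None):
    """Collect the five paths plus the two numeric settings from the CLI."""
    parser = argparse.ArgumentParser(
        description='Run inference, then report AP50, AP75 and mAP')
    parser.add_argument('config_path', help='model config file')
    parser.add_argument('checkpointpath', help='trained weights file')
    parser.add_argument('annopath', help='folder with ground-truth labels')
    parser.add_argument('test_img_path', help='folder with test images')
    parser.add_argument('outpath', help='folder for prediction txt files')
    parser.add_argument('--img-size', type=int, default=1024)
    parser.add_argument('--batch-size', type=int, default=2)
    return parser.parse_args(argv)

# Demo with placeholder paths; pass argv=None to read the real command line.
args = parse_args(['cfg.py', 'epoch_12.pth', 'val/labels', 'val/images', 'out'])
print(args.config_path, args.img_size, args.batch_size)
```

The parsed values would then be handed to forward(...) and evalution(...) in place of the hard-coded variables in the `__main__` block.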