理论部分参见李宏毅机器学习——对抗攻击Adversarial Attack_iwill323的博客-CSDN博客

目录

MIFGSM + Ensemble Attack(pick right models)

DIM-MIFGSM + Ensemble Attack(pick right models)

目标和方法

利用目标网络的训练数据,训练一个或一些proxy网络(本作业不用训练,直接拿来一个训练好的模型),将proxy网络当作被攻击对象,使用proxy网络生成带有攻击性的输入,也就是白盒攻击proxy网络,再把这个训练出来的图片输入到不知道参数的 Network中,就实现了攻击。

○ Attack objective: Non-targeted attack

○ Attack algorithm: FGSM/I-FGSM

○ Attack schema: Black box attack (perform attack on proxy network)

○ Increase attack transferability by Diverse input (DIM)

○ Attack more than one proxy model - Ensemble attack

这个作业如果你不是台大的学生的话,是看不到你的提交结果跟实际的分数的

评价方法

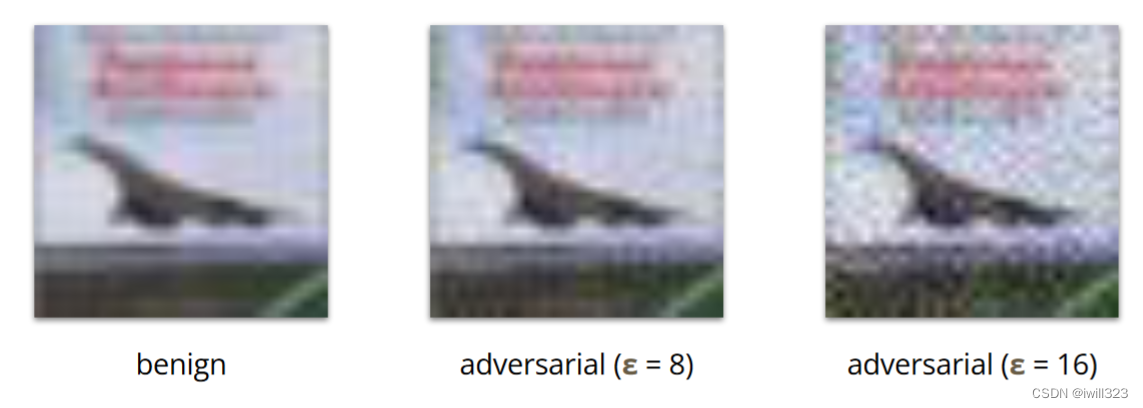

图像像素值为0-255,本次作业把改变的最大像素大小ε限制为8,这样的话图像的改变还不太明显。如果ε等于16,那么图像的改变就比较明显了

○ ε固定为8

○ 距离测量: L-inf. norm

○ 模型准确率(的下降)是唯一的评价准则

导包

import torch

import torch.nn as nn

import torchvision

import os

import glob

import shutil

import numpy as np

from PIL import Image

from torchvision.transforms import transforms

from torch.utils.data import Dataset, DataLoader

import matplotlib.pyplot as plt

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

batch_size = 8Global Settings

主要是图像标准化所用的平均值mean和标准差std,还有ε。ε要除以255和std,解释如下

benign images: images which do not contain adversarial perturbations

adversarial images: images which include adversarial perturbations

# the mean and std are the calculated statistics from cifar_10 dataset

cifar_10_mean = (0.491, 0.482, 0.447) # mean for the three channels of cifar_10 images

cifar_10_std = (0.202, 0.199, 0.201) # std for the three channels of cifar_10 images

# convert mean and std to 3-dimensional tensors for future operations

mean = torch.tensor(cifar_10_mean).to(device).view(3, 1, 1)

std = torch.tensor(cifar_10_std).to(device).view(3, 1, 1)

epsilon = 8/255/std

root = './data' # directory for storing benign images

Data

transform

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize(cifar_10_mean, cifar_10_std)

])Dataset

可以从李宏毅2022机器学习HW10解析_机器学习手艺人的博客-CSDN博客下载,总共200张图片,分为10个文件夹,每一类20个图片。

data_dir

├── class_dir

│ ├── class1.png

│ ├── ...

│ ├── class20.png

看到这个目录结构,可以发现用ImageFolder函数就可以了。ImageFolder函数可以参考数据集读取与划分_iwill323的博客-CSDN博客

adv_set = torchvision.datasets.ImageFolder(os.path.join(root), transform=transform)

adv_loader = DataLoader(adv_set, batch_size=batch_size, shuffle=False)有意思的是,原代码自定义了一个Dataset函数,短小精悍,值得学习

class AdvDataset(Dataset):

def __init__(self, data_dir, transform):

self.images = []

self.labels = []

self.names = []

'''

data_dir

├── class_dir

│ ├── class1.png

│ ├── ...

│ ├── class20.png

'''

for i, class_dir in enumerate(sorted(glob.glob(f'{data_dir}/*'))):

images = sorted(glob.glob(f'{class_dir}/*'))

self.images += images

self.labels += ([i] * len(images)) # 第i个读到的类文件夹,类别就是i

self.names += [os.path.relpath(imgs, data_dir) for imgs in images] # 返回imgs相对于data_dir的相对路径

self.transform = transform

def __getitem__(self, idx):

image = self.transform(Image.open(self.images[idx]))

label = self.labels[idx]

return image, label

def __getname__(self):

return self.names

def __len__(self):

return len(self.images)

adv_set = AdvDataset(root, transform=transform)

adv_names = adv_set.__getname__()

adv_loader = DataLoader(adv_set, batch_size=batch_size, shuffle=False)

print(f'number of images = {adv_set.__len__()}')代理模型和目标模型

本次作业将已经训练好的模型作为proxy网络和攻击目标模型,这些网络在CIFAR-10上进行了预训练,可以从Pytorchcv 中引入。模型列表 here。要选择那些带_cifar10后缀的模型。

目标模型选择的是resnet110_cifar10。后面代理模型选择的是nin_cifar10,resnet20_cifar10,preresnet20_cifar10,也就是说在这些网络上训练生成攻击图像,然后应用于攻击resnet110_cifar10

from pytorchcv.model_provider import get_model as ptcv_get_model

model = ptcv_get_model('resnet110_cifar10', pretrained=True).to(device)

loss_fn = nn.CrossEntropyLoss()评估目标模型在非攻击性图像上的表现

def epoch_benign(model, loader, loss_fn):

model.eval()

train_acc, train_loss = 0.0, 0.0

with torch.no_grad():

for x, y in loader:

x, y = x.to(device), y.to(device)

yp = model(x)

loss = loss_fn(yp, y)

train_acc += (yp.argmax(dim=1) == y).sum().item()

train_loss += loss.item() * x.shape[0]

return train_acc / len(loader.dataset), train_loss / len(loader.dataset)resnet110_cifar10在被攻击图片中的精度benign_acc=0.95, benign_loss=0.22678。

benign_acc, benign_loss = epoch_benign(model, adv_loader, loss_fn)

print(f'benign_acc = {benign_acc:.5f}, benign_loss = {benign_loss:.5f}')Attack Algorithm

FGSM

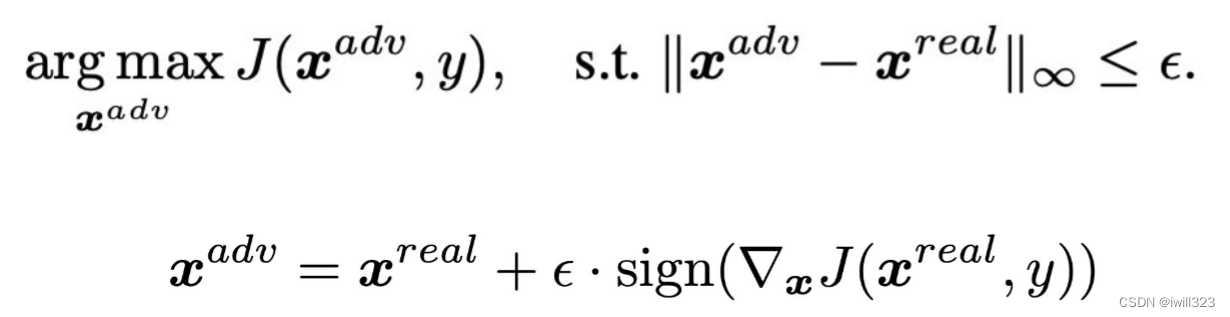

Fast Gradient Sign Method (FGSM)。FGSM只对图片进行一次攻击。

def fgsm(model, x, y, loss_fn, epsilon=epsilon):

x_adv = x.detach().clone() # 克隆x是因为x的值会随着x_adv的改变而改变

x_adv.requires_grad = True # need to obtain gradient of x_adv, thus set required grad

loss = loss_fn(model(x_adv), y)

loss.backward()

# fgsm: use gradient ascent on x_adv to maximize loss

grad = x_adv.grad.detach()

x_adv = x_adv + epsilon * grad.sign() # 不会越界,所以不用clip

return x_advI-FGSM

Iterative Fast Gradient Sign Method (I-FGSM)。ifgsm方法相比与fgsm相比,使用了多次的fgsm循环攻击,为此多了一个参数α

# set alpha as the step size in Global Settings section

# alpha and num_iter can be decided by yourself

alpha = 0.8/255/std

def ifgsm(model, x, y, loss_fn, epsilon=epsilon, alpha=alpha, num_iter=20):

x_adv = x

for i in range(num_iter):

# x_adv = fgsm(model, x_adv, y, loss_fn, alpha) # call fgsm with (epsilon = alpha) to obtain new x_adv

x_adv = x_adv.detach().clone()

x_adv.requires_grad = True # need to obtain gradient of x_adv, thus set required grad

loss = loss_fn(model(x_adv), y)

loss.backward()

# fgsm: use gradient ascent on x_adv to maximize loss

grad = x_adv.grad.detach()

x_adv = x_adv + alpha * grad.sign()

x_adv = torch.max(torch.min(x_adv, x+epsilon), x-epsilon) # clip new x_adv back to [x-epsilon, x+epsilon]

return x_adv

MI-FGSM

https://arxiv.org/pdf/1710.06081.pdf

mifgsm相比于ifgsm,加入了momentum,避免攻击陷入local maxima(这个与optimizer里面momentum的原理类似)

def mifgsm(model, x, y, loss_fn, epsilon=epsilon, alpha=alpha, num_iter=20, decay=0.9):

x_adv = x

# initialze momentum tensor

momentum = torch.zeros_like(x).detach().to(device)

# write a loop of num_iter to represent the iterative times

for i in range(num_iter):

x_adv = x_adv.detach().clone()

x_adv.requires_grad = True # need to obtain gradient of x_adv, thus set required grad

loss = loss_fn(model(x_adv), y) # calculate loss

loss.backward() # calculate gradient

# Momentum calculation

grad = x_adv.grad.detach()

grad = decay * momentum + grad / (grad.abs().sum() + 1e-8)

momentum = grad

x_adv = x_adv + alpha * grad.sign()

x_adv = torch.max(torch.min(x_adv, x+epsilon), x-epsilon) # clip new x_adv back to [x-epsilon, x+epsilon]

return x_advDiverse Input (DIM)

如果生成的图像在代理模型上过拟合,那么这些图像在目标模型上的攻击力可能会下降。

dim-mifgsm在mifgsm的基础上,对被攻击图片加入了transform来避免overfitting。该技巧来自于文章Improving Transferability of Adversarial Examples with Input Diversity(https://arxiv.org/pdf/1803.06978.pdf)。文章中的transform是先随机的resize图片,然后随机padding图片到原size

def dmi_mifgsm(model, x, y, loss_fn, epsilon=epsilon, alpha=alpha, num_iter=50, decay=0.9, p=0.5):

x_adv = x

# initialze momentum tensor

momentum = torch.zeros_like(x).detach().to(device)

# write a loop of num_iter to represent the iterative times

for i in range(num_iter):

x_adv = x_adv.detach().clone()

x_adv_raw = x_adv.clone()

if torch.rand(1).item() >= p: # 以一定几率进行数据增广

#resize img to rnd X rnd

rnd = torch.randint(29, 33, (1,)).item()

x_adv = transforms.Resize((rnd, rnd))(x_adv)

#padding img to 32 X 32 with 0

left = torch.randint(0, 32 - rnd + 1, (1,)).item()

top = torch.randint(0, 32 - rnd + 1, (1,)).item()

right = 32 - rnd - left

bottom = 32 - rnd - top

x_adv = transforms.Pad([left, top, right, bottom])(x_adv)

x_adv.requires_grad = True # need to obtain gradient of x_adv, thus set required grad

loss = loss_fn(model(x_adv), y)

loss.backward()

# Momentum calculation

grad = x_adv.grad.detach()

grad = decay * momentum + grad/(grad.abs().sum() + 1e-8)

momentum = grad

x_adv = x_adv_raw + alpha * grad.sign()

x_adv = torch.max(torch.min(x_adv, x+epsilon), x-epsilon) # clip new x_adv back to [x-epsilon, x+epsilon]

return x_adv攻击函数

生成攻击图像函数

用一个函数gen_adv_examples调用攻击算法,生成攻击图像,计算攻击效果(代理模型识别攻击图像的精度)。

经过transform处理的图像像素位于[0-1],通道也变了,为了生成攻击图像,要进行逆操作。这里的代码是教科书级别的

# perform adversarial attack and generate adversarial examples

def gen_adv_examples(model, loader, attack, loss_fn):

model.eval()

adv_names = []

train_acc, train_loss = 0.0, 0.0

for i, (x, y) in enumerate(loader):

x, y = x.to(device), y.to(device)

x_adv = attack(model, x, y, loss_fn) # obtain adversarial examples

yp = model(x_adv)

loss = loss_fn(yp, y)

_, pred = torch.max(yp, 1)

train_acc += (pred == y.detach()).sum().item()

train_loss += loss.item() * x.shape[0]

# store adversarial examples

adv_ex = ((x_adv) * std + mean).clamp(0, 1) # to 0-1 scale

adv_ex = (adv_ex * 255).clamp(0, 255) # 0-255 scale

adv_ex = adv_ex.detach().cpu().data.numpy().round() # round to remove decimal part

adv_ex = adv_ex.transpose((0, 2, 3, 1)) # transpose (bs, C, H, W) back to (bs, H, W, C)

adv_examples = adv_ex if i == 0 else np.r_[adv_examples, adv_ex]

return adv_examples, train_acc / len(loader.dataset), train_loss / len(loader.dataset)

# create directory which stores adversarial examples

def create_dir(data_dir, adv_dir, adv_examples, adv_names):

if os.path.exists(adv_dir) is not True:

_ = shutil.copytree(data_dir, adv_dir)

for example, name in zip(adv_examples, adv_names):

im = Image.fromarray(example.astype(np.uint8)) # image pixel value should be unsigned int

im.save(os.path.join(adv_dir, name))Ensemble Attack

对多个代理模型进行同时攻击。参考Delving into Transferable Adversarial Examples and Black-box Attacks

ModuleList 接收一个子模块(或层,需属于nn.Module类)的列表作为输入,可以类似List那样进行append和extend操作。同时,子模块或层的权重也会自动添加到网络中来。要特别注意的是,nn.ModuleList 并没有定义一个网络,它只是将不同的模块储存在一起。ModuleList中元素的先后顺序并不代表其在网络中的真实位置顺序,需要经过forward函数指定各个层的先后顺序后才算完成了模型的定义

集成模型函数

class ensembleNet(nn.Module):

def __init__(self, model_names):

super().__init__()

# ModuleList 接收一个子模块(或层,需属于nn.Module类)的列表作为输入,可以类似List那样进行append和extend操作

self.models = nn.ModuleList([ptcv_get_model(name, pretrained=True) for name in model_names])

# self.models.append(undertrain_resnet18) 可以append自己训练的代理网络

def forward(self, x):

emsemble_logits = None

# sum up logits from multiple models

for i, m in enumerate(self.models):

emsemble_logits = m(x) if i == 0 else emsemble_logits + m(x)

return emsemble_logits/len(self.models)构建集成模型

这些事代理模型

model_names = [

'nin_cifar10',

'resnet20_cifar10',

'preresnet20_cifar10'

]

ensemble_model = ensembleNet(model_names).to(device)

ensemble_model.eval()可视化攻击结果

每次攻击都生成并保存了攻击图像。下面改变攻击图像文件夹路径,即可读取攻击图像,传入目标网络,可视化攻击效果

classes = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

def show_attck(adv_dir, classes=classes):

plt.figure(figsize=(10, 20))

cnt = 0

for i, cls_name in enumerate(classes):

path = f'{cls_name}/{cls_name}1.png'

# benign image

cnt += 1

plt.subplot(len(classes), 4, cnt)

im = Image.open(os.path.join(adv_dir, path))

logit = model(transform(im).unsqueeze(0).to(device))[0]

predict = logit.argmax(-1).item()

prob = logit.softmax(-1)[predict].item()

plt.title(f'benign: {cls_name}1.png\n{classes[predict]}: {prob:.2%}')

plt.axis('off')

plt.imshow(np.array(im))

# adversarial image

cnt += 1

plt.subplot(len(classes), 4, cnt)

im = Image.open(os.path.join(root, path))

logit = model(transform(im).unsqueeze(0).to(device))[0]

predict = logit.argmax(-1).item()

prob = logit.softmax(-1)[predict].item()

plt.title(f'adversarial: {cls_name}1.png\n{classes[predict]}: {prob:.2%}')

plt.axis('off')

plt.imshow(np.array(im))

plt.tight_layout()

plt.show()进行攻击

FGSM方法

adv_examples, ifgsm_acc, ifgsm_loss = gen_adv_examples(ensemble_model, adv_loader, ifgsm, loss_fn)

print(f'ensemble_ifgsm_acc = {ifgsm_acc:.5f}, ensemble_ifgsm_loss = {ifgsm_loss:.5f}')

adv_dir = 'ifgsm'

create_dir(root, adv_dir, adv_examples, adv_names)

show_attck(adv_dir)fgsm_acc = 0.59000, fgsm_loss = 2.49304

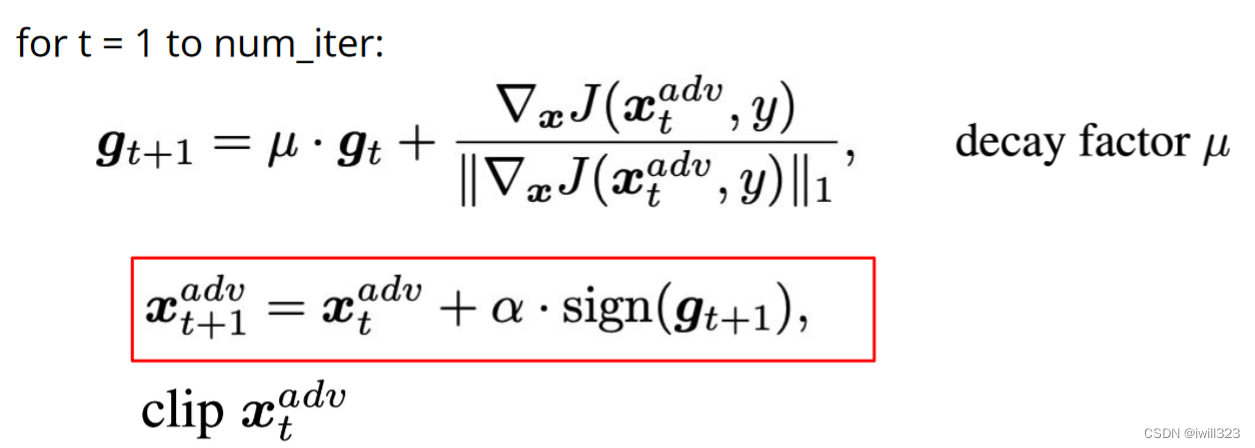

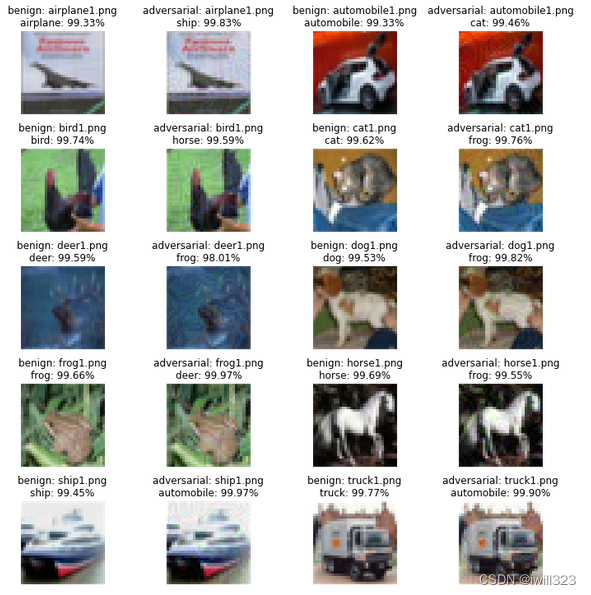

目标网络原来的识别性能benign_acc = 0.95000, benign_loss = 0.22678,过了Simple Baseline

看一下对目标网络resnet110_cifar10攻击效果(使用前面的可视化代码),有成功的有失败的。实际上是白盒攻击

I-FGSM方法 + Ensembel Attack

先观察集成模型在benign图像的准确度

from pytorchcv.model_provider import get_model as ptcv_get_model

benign_acc, benign_loss = epoch_benign(ensemble_model, adv_loader, loss_fn)

print(f'benign_acc = {benign_acc:.5f}, benign_loss = {benign_loss:.5f}')benign_acc = 0.95000, benign_loss = 0.15440

进行攻击

adv_examples, ifgsm_acc, ifgsm_loss = gen_adv_examples(ensemble_model, adv_loader, ifgsm, loss_fn)

print(f'ensemble_ifgsm_acc = {ifgsm_acc:.5f}, ensemble_ifgsm_loss = {ifgsm_loss:.5f}')

adv_dir = 'ensemble_ifgsm'

create_dir(root, adv_dir, adv_examples, adv_names)

show_attck(adv_dir)ensemble_ifgsm_acc = 0.00000, ensemble_ifgsm_loss = 13.41135

过了Medium Baseline(acc <= 0.50)。看一下对目标网络resnet110_cifar10攻击效果(使用后面的可视化代码)

MIFGSM + Ensemble Attack(pick right models)

根据李宏毅2022机器学习HW10解析_机器学习手艺人的博客-CSDN博客,在medium baseline中,随机挑选了一些代理模型,这样很盲目,根据文章Query-Free Adversarial Transfer via Undertrained Surrogates(https://arxiv.org/abs/2007.00806)描述,可以选择一些训练不充分的模型,训练不充分的意思包括两方面:一是模型的训练epoch少,二是模型在验证集(val set)未达到最小loss。依据论文中的一个例子,使用https://github.com/kuangliu/pytorch-cifar中的训练方法,选择resnet18模型,训练30个epoch(正常训练到达最好结果大约需要200个epoch),将其加入ensmbleNet中。(这个训练不充分的模型下面没有做)

adv_examples, ifgsm_acc, ifgsm_loss = gen_adv_examples(ensemble_model, adv_loader, mifgsm, loss_fn)

print(f'ensemble_mifgsm_acc = {ifgsm_acc:.5f}, ensemble_mifgsm_loss = {ifgsm_loss:.5f}')

adv_dir = 'ensemble_mifgsm'

create_dir(root, adv_dir, adv_examples, adv_names)

show_attck(adv_dir)ensemble_mifgsm_acc = 0.00500, ensemble_mifgsm_loss = 13.23710

看一下对目标网络resnet110_cifar10攻击效果(使用后面的可视化代码)

DIM-MIFGSM + Ensemble Attack(pick right models)

adv_examples, ifgsm_acc, ifgsm_loss = gen_adv_examples(ensemble_model, adv_loader, dmi_mifgsm, loss_fn)

print(f'ensemble_dmi_mifgsm_acc = {ifgsm_acc:.5f}, ensemble_dim_mifgsm_loss = {ifgsm_loss:.5f}')

adv_dir = 'ensemble_dmi_mifgsm'

create_dir(root, adv_dir, adv_examples, adv_names)

show_attck(adv_dir)ensemble_dmi_mifgsm_acc = 0.00000, ensemble_dim_mifgsm_loss = 15.16159

看一下对目标网络resnet110_cifar10攻击效果(使用后面的可视化代码)

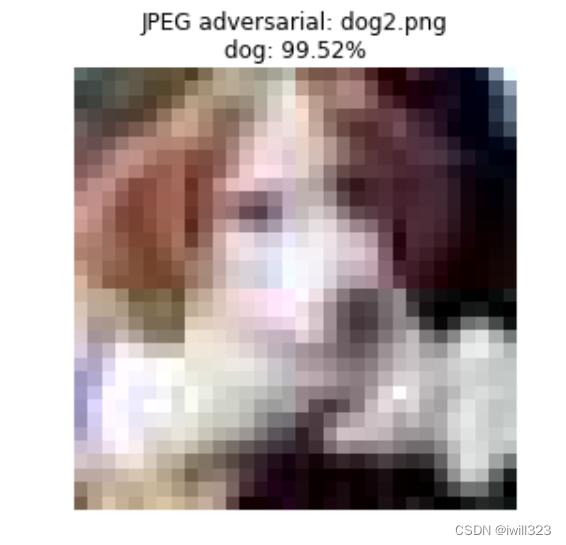

被动防御—JPEG压缩

JPEG compression by imgaug package, compression rate set to 70

Reference: imgaug.augmenters.arithmetic — imgaug 0.4.0 documentation

攻击

# original image

path = f'dog/dog2.png'

im = Image.open(f'./data/{path}')

logit = model(transform(im).unsqueeze(0).to(device))[0]

predict = logit.argmax(-1).item()

prob = logit.softmax(-1)[predict].item()

plt.title(f'benign: dog2.png\n{classes[predict]}: {prob:.2%}')

plt.axis('off')

plt.imshow(np.array(im))

plt.tight_layout()

plt.show()

# adversarial image

adv_im = Image.open(f'./ensemble_dmi_mifgsm/{path}')

logit = model(transform(adv_im).unsqueeze(0).to(device))[0]

predict = logit.argmax(-1).item()

prob = logit.softmax(-1)[predict].item()

plt.title(f'adversarial: dog2.png\n{classes[predict]}: {prob:.2%}')

plt.axis('off')

plt.imshow(np.array(adv_im))

plt.tight_layout()

plt.show()

防御

import imgaug.augmenters as iaa

# pre-process image

x = transforms.ToTensor()(adv_im)*255

x = x.permute(1, 2, 0).numpy()

x = x.astype(np.uint8)

# TODO: use "imgaug" package to perform JPEG compression (compression rate = 70)

compressed_x = iaa.arithmetic.compress_jpeg(x, compression=70)

logit = model(transform(compressed_x).unsqueeze(0).to(device))[0]

predict = logit.argmax(-1).item()

prob = logit.softmax(-1)[predict].item()

plt.title(f'JPEG adversarial: dog2.png\n{classes[predict]}: {prob:.2%}')

plt.axis('off')

plt.imshow(compressed_x)

plt.tight_layout()

plt.show()

防御成功了

拓展:文件读取

原代码手写的dataset函数值得研究。首先读取了root文件夹下的所有文件,并排序,返回一个list变量

>>dir_list = sorted(glob.glob(f'{root}/*'))

>>print(dir_list)

['./data\\airplane', './data\\automobile', './data\\bird', './data\\cat', './data\\deer', './data\\dog', './data\\frog', './data\\horse', './data\\ship', './data\\truck']

读取list变量里的第一个文件夹,取出第一个文件名。这些文件名可以用于Image.open函数

>>images = sorted(glob.glob(f'{dir_list[0]}/*'))

>>print(images[0])

./data\airplane\airplane1.png

取出相对路径

>>print(os.path.relpath(images[0], root))

airplane\airplane1.png