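For business reasons, our company's speech recognition feature had long relied on an SDK from an overseas vendor. To give users a better experience and to adapt more closely to how they actually use the product, at the end of 2018 we decided to replace that SDK with Speech Recognition.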

Apple opened up a new API, Speech Recognition, in iOS 10. It lets apps recognize users' speech and build whatever features they need on top of the results.

As usual, enough preamble; let's get to it.

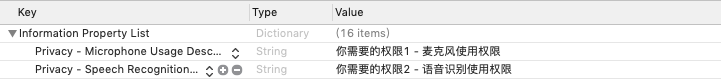

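First, to use speech recognition you need to add the following two entries to Info.plist.

Privacy - Microphone Usage Description (raw key `NSMicrophoneUsageDescription`): permission to use the microphone.

Privacy - Speech Recognition Usage Description (raw key `NSSpeechRecognitionUsageDescription`): permission to use speech recognition.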

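Second, you need a commented demo that shows how to call and use these APIs. As it happens, I have put one together with comments already written, so let's walk through it.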

//

// ViewController.m

// SpeechRecognitionEngineDemo

//

// Created by 司文 on 2019/2/18.

// Copyright © 2019 司小文. All rights reserved.

//

#import "ViewController.h"

#import <AVFoundation/AVFoundation.h>

#import <Speech/Speech.h>

#import <Accelerate/Accelerate.h>

@interface ViewController ()

@property(nonatomic,strong)SFSpeechRecognizer *mySpeechRecognizer;//Speech recognizer

@property(nonatomic,strong)SFSpeechAudioBufferRecognitionRequest *myAudioBufferRecognitionRequest;//Speech recognition request

@property(nonatomic,strong)SFSpeechRecognitionTask *mySpeechRecognitionTask;//Speech recognition task

@property(nonatomic,strong)AVAudioEngine *myAudioEngine;//Audio engine

@property(nonatomic,strong)UILabel *lab_textDisPlay;//Shows the recognized text

@property(nonatomic,strong)UILabel *lab_volumeDisPlay;//Shows the volume

@property (nonatomic, assign) BOOL isRunning;//Whether the speech recognition engine is running

@property (nonatomic, assign) BOOL isListening;//Whether we are listening for sound

@property (nonatomic, assign) BOOL isAudioBufferAppending;//Whether audio buffering has started

@property (nonatomic, assign) float correctNum;//Per-device correction (built-in microphone, wired headphones, wireless headphones, etc.)

@property (nonatomic, assign) int silenceCount;//Counter of quiet buffers used to end audio buffering

@property (nonatomic, assign) int terminalSilenceNum;//Number of quiet buffers after which buffering ends

@property (nonatomic, assign) int micSensitivityNum;//Extra device correction; set to 0 if not needed

@end

@implementation ViewController

- (void)viewDidLoad {

[super viewDidLoad];

self.micSensitivityNum = 0;

self.terminalSilenceNum = 3;

[self makeUI];

[AVCaptureDevice requestAccessForMediaType:AVMediaTypeAudio completionHandler:^(BOOL granted) {

NSLog(@"%@",granted ? @"Microphone access granted":@"Microphone access denied");

//If microphone access is allowed

if (granted) {

[SFSpeechRecognizer requestAuthorization:^(SFSpeechRecognizerAuthorizationStatus status) {

switch (status) {

case SFSpeechRecognizerAuthorizationStatusNotDetermined:

NSLog(@"NotDetermined");

break;

case SFSpeechRecognizerAuthorizationStatusDenied:

NSLog(@"Denied");

break;

case SFSpeechRecognizerAuthorizationStatusRestricted:

NSLog(@"Restricted");

break;

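case SFSpeechRecognizerAuthorizationStatusAuthorized: {

NSLog(@"Authorized");

//Both permissions are granted. This callback may run off the main thread, so hop to the main queue, and configure the audio session before starting the engine.

dispatch_async(dispatch_get_main_queue(), ^{

[self configureAudio];

[self startEngine];

});

break;

}

default:

break;

}

}];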

}

}];

}

- (void)makeUI{

self.lab_textDisPlay = [[UILabel alloc] initWithFrame:CGRectMake(30, 100, 260, 30)];

self.lab_textDisPlay.backgroundColor = [UIColor redColor];

self.lab_textDisPlay.text = @"You can say whatever you like.";

[self.view addSubview:self.lab_textDisPlay];

self.lab_volumeDisPlay = [[UILabel alloc] initWithFrame:CGRectMake(30, 200, 260, 30)];

self.lab_volumeDisPlay.backgroundColor = [UIColor whiteColor];

self.lab_volumeDisPlay.textColor = [UIColor blackColor];

self.lab_volumeDisPlay.text = @"Volume:0";

[self.view addSubview:self.lab_volumeDisPlay];

}

- (nullable id)formatPlistDictionary:(NSDictionary*)dic{

NSData *jsonData = [NSJSONSerialization dataWithJSONObject:dic options:NSJSONWritingPrettyPrinted error:nil];

NSString * jsonString = [[NSString alloc] initWithData:jsonData encoding:NSUTF8StringEncoding];

jsonString = [jsonString uppercaseString];

//NSUTF32LittleEndianStringEncoding

NSData *jsonDataA = [jsonString dataUsingEncoding:NSUTF8StringEncoding];

return [NSJSONSerialization JSONObjectWithData:jsonDataA options:NSJSONReadingMutableContainers error:nil];

}

#pragma mark Start the audio recognition engine

- (void)startEngine{

[self startListening];

if (self.mySpeechRecognizer == nil) {

NSString *strLanguage = @"en-US";//Choose the language you need here; for example, @"es" selects Spanish.

self.mySpeechRecognizer = [[SFSpeechRecognizer alloc] initWithLocale:[NSLocale localeWithLocaleIdentifier:strLanguage]];

[self.mySpeechRecognizer setDefaultTaskHint:SFSpeechRecognitionTaskHintConfirmation];

}

if (self.myAudioEngine == nil) {

self.myAudioEngine = [[AVAudioEngine alloc] init];

}

__weak ViewController *weakSelf = self;

AVAudioInputNode *inputNode = [self.myAudioEngine inputNode];

AVAudioFormat *format = [inputNode outputFormatForBus:0];

[inputNode installTapOnBus:0 bufferSize:1024 format:format block:^(AVAudioPCMBuffer * _Nonnull buffer, AVAudioTime * _Nonnull when) {

//Get the volume of the audio just captured

float volume = [weakSelf getVolume:buffer.audioBufferList];

//Apply the device-specific correction

volume = volume + weakSelf.correctNum;

// NSLog(@"Current Volume: %f", volume);

if(volume < 0){

volume = 0;

}

//Show the volume; UI work is sent to the main queue asynchronously so the audio thread is never blocked

NSString *volumeText = [NSString stringWithFormat:@"Volume:%0.f",volume];

dispatch_async(dispatch_get_main_queue(), ^{

weakSelf.lab_volumeDisPlay.text = volumeText;

});

if (volume <= weakSelf.correctNum) {

//If the volume is within the expected range

if (weakSelf.isListening) {

//If listening is enabled

if (volume > 25 || weakSelf.isAudioBufferAppending) {

//If the volume is above 25, or audio buffering has already started

if (volume < 15){

//Below 15: count toward ending the audio buffering

weakSelf.silenceCount ++;

if (weakSelf.silenceCount >= weakSelf.terminalSilenceNum){

//Enough quiet buffers in a row: end the buffering

weakSelf.silenceCount = 0;

weakSelf.isAudioBufferAppending = NO;

[weakSelf stopListening];//Stop listening

[weakSelf.myAudioBufferRecognitionRequest appendAudioPCMBuffer:buffer];

[weakSelf.myAudioBufferRecognitionRequest endAudio];

}else{

//Keep buffering audio

[weakSelf.myAudioBufferRecognitionRequest appendAudioPCMBuffer:buffer];

}

}else{

//Start (or continue) buffering audio

weakSelf.isAudioBufferAppending = YES;

weakSelf.silenceCount = 0;

if (weakSelf.myAudioBufferRecognitionRequest == nil) {

[weakSelf startPrepareSpeechRequest];

}

[weakSelf.myAudioBufferRecognitionRequest appendAudioPCMBuffer:buffer];

}

}

}

}

}];

if (![self.myAudioEngine isRunning]) {

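//If the audio engine is not running yet, start it

[self.myAudioEngine prepare];

NSError *engineError = nil;

if (![self.myAudioEngine startAndReturnError:&engineError]) {

//Starting failed: log it, remove the tap so a later retry can install it again, and bail out

NSLog(@"AVAudioEngine failed to start: %@",engineError);

[inputNode removeTapOnBus:0];

return;

}

}

//The speech recognition engine is now running

self.isRunning = YES;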

}

#pragma mark Start speech recognition

- (void)startPrepareSpeechRequest

{

[self.mySpeechRecognitionTask cancel];

self.mySpeechRecognitionTask = nil;

self.myAudioBufferRecognitionRequest = [[SFSpeechAudioBufferRecognitionRequest alloc] init];

self.myAudioBufferRecognitionRequest.shouldReportPartialResults = NO;

__weak ViewController *weakSelf = self;

self.mySpeechRecognitionTask = [self.mySpeechRecognizer recognitionTaskWithRequest:self.myAudioBufferRecognitionRequest resultHandler:^(SFSpeechRecognitionResult * _Nullable result, NSError * _Nullable error) {

if (result != nil) {

//Recognized text

weakSelf.lab_textDisPlay.text = result.bestTranscription.formattedString;

NSLog(@"%@",result.bestTranscription.formattedString);

}

if (error != nil) {

NSLog(@"%@",error.userInfo);

}

//Resume listening and drop the finished request; weakSelf avoids a retain cycle through the task

[weakSelf startListening];

weakSelf.myAudioBufferRecognitionRequest = nil;

}];

}

#pragma mark Teardown

- (void)stopEngine{

[self.mySpeechRecognitionTask cancel];

self.mySpeechRecognitionTask = nil;

self.isAudioBufferAppending = NO;

[self.myAudioEngine.inputNode removeTapOnBus:0];

[self.myAudioEngine stop];

self.myAudioEngine = nil;

self.mySpeechRecognizer = nil;

self.isRunning = NO;

}

- (void)startListening

{

self.isListening = YES;

}

- (void)stopListening

{

self.isListening = NO;

}

#pragma mark Read the device state

- (void)configureAudio

{

AVAudioSession *audioSession = [AVAudioSession sharedInstance];

BOOL success;

NSError* error;

success = [audioSession setCategory:AVAudioSessionCategoryPlayAndRecord withOptions:AVAudioSessionCategoryOptionDefaultToSpeaker|AVAudioSessionCategoryOptionAllowBluetooth|AVAudioSessionCategoryOptionMixWithOthers error:&error];

if(!success)

NSLog(@"AVAudioSession error setCategory = %@",error.debugDescription);

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(audioRouteChanged:) name:AVAudioSessionRouteChangeNotification object:nil];

if (![audioSession setActive:YES error:&error]) {

NSLog(@"AVAudioSession error setActive = %@",error.debugDescription);

}

//Read the current route and pick the matching volume correction

AVAudioSessionRouteDescription *currentRoute = [[AVAudioSession sharedInstance] currentRoute];

[self changeCorrectNum:currentRoute];

}

- (void)audioRouteChanged:(NSNotification*)notify {

NSDictionary *dic = notify.userInfo;

AVAudioSessionRouteDescription *currentRoute = [[AVAudioSession sharedInstance] currentRoute];

AVAudioSessionRouteDescription *oldRoute = [dic objectForKey:AVAudioSessionRouteChangePreviousRouteKey];

NSInteger routeChangeReason = [[dic objectForKey:AVAudioSessionRouteChangeReasonKey] integerValue];

NSLog(@"audio route changed: reason: %ld\n input:%@->%@, output:%@->%@",(long)routeChangeReason,oldRoute.inputs,currentRoute.inputs,oldRoute.outputs,currentRoute.outputs);

[self changeCorrectNum:currentRoute];

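//The route change notification may arrive off the main thread, where performSelector:afterDelay: has no run loop to fire on, so schedule the restart on the main queue

dispatch_after(dispatch_time(DISPATCH_TIME_NOW, (int64_t)(0.3 * NSEC_PER_SEC)), dispatch_get_main_queue(), ^{

[self restartEngine];

});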

}

- (void)restartEngine{

if (self.isRunning) {

[self stopEngine];

[self startEngine];

}

}

- (void)changeCorrectNum:(AVAudioSessionRouteDescription*)currentRoute{

self.correctNum = 65.0 + self.micSensitivityNum;

for (AVAudioSessionPortDescription *portDesc in [currentRoute inputs]){

if([portDesc.portType isEqualToString:AVAudioSessionPortBuiltInMic])

{

self.correctNum = 50.0 + self.micSensitivityNum;

break;

}

}

}

#pragma mark Get the volume

- (float) getVolume:(const AudioBufferList *) inputBuffer

{

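//mDataByteSize is a size in bytes (numFrames*numChannels*sizeof(float)); convert it to a count of Float32 samples before handing it to vDSP

const AudioBuffer audioBuffer = inputBuffer->mBuffers[0];

vDSP_Length sampleCount = audioBuffer.mDataByteSize / sizeof(float);

if (sampleCount == 0 || audioBuffer.mData == NULL) {

return -INFINITY;

}

float *data = (float *)malloc(sampleCount * sizeof(float));

if (data == NULL) {

return -INFINITY;

}

//Square every sample into the scratch buffer; the tap's own buffer is left untouched

vDSP_vsq((const float *)audioBuffer.mData, 1, data, 1, sampleCount);

//Mean of the squares, i.e. the mean power

float meanVal = 0.0;

vDSP_meanv(data, 1, &meanVal, sampleCount);

//Convert the power to decibels relative to 1.0 (flag 0 selects the power formula)

float one = 1.0;

vDSP_vdbcon(&meanVal, 1, &one, &meanVal, 1, 1, 0);

float decibel = meanVal;

free(data);

return decibel;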

}

@end

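The volume returned by the volume method in this code is not very accurate, and it differs between wired headphones, wireless headphones, and the iPhone's built-in microphone. My initial guess is that the difference comes from different noise-reduction coefficients, so I added a correction value, correctNum, to compensate and keep the feature working in every scenario. If your scenario is unusual, tune this parameter yourself.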

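Passing the buffer along is the core of this code. If you are new to this, it is worth reading that branching logic carefully; the rest is straightforward. If you have questions, leave a comment and let's talk it over.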
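The gating logic in the tap block can be read as a small state machine. The sketch below restates it in plain C, detached from AVFoundation, purely so the branches are easier to follow. The type `SilenceGate`, the enum `GateAction`, and the function `silence_gate_step` are hypothetical names for this illustration; the thresholds 25 and 15 and the silence limit come from the demo, while the `isListening` and `correctNum` range checks are left out for brevity.

```c
#include <stdbool.h>

/* What the caller should do with the current buffer. */
typedef enum {
    GATE_IGNORE,         /* drop the buffer */
    GATE_APPEND,         /* append the buffer to the request */
    GATE_APPEND_AND_END  /* append the buffer, then end the audio */
} GateAction;

typedef struct {
    bool appending;     /* mirrors isAudioBufferAppending */
    int silence_count;  /* mirrors silenceCount */
    int silence_limit;  /* mirrors terminalSilenceNum */
} SilenceGate;

/* Feed one corrected volume reading and get the action for that buffer. */
static GateAction silence_gate_step(SilenceGate *gate, float volume) {
    if (!(volume > 25.0f || gate->appending)) {
        return GATE_IGNORE;  /* quiet, and no utterance in progress */
    }
    if (volume < 15.0f) {
        /* Only reachable while an utterance is in progress. */
        gate->silence_count++;
        if (gate->silence_count >= gate->silence_limit) {
            gate->silence_count = 0;
            gate->appending = false;
            return GATE_APPEND_AND_END;
        }
        return GATE_APPEND;
    }
    /* Loud enough: start or continue the utterance and reset the counter. */
    gate->appending = true;
    gate->silence_count = 0;
    return GATE_APPEND;
}
```

One consequence worth noticing: because any reading of 15 or more resets the counter, only consecutive quiet buffers count toward ending the utterance, and a reading between 15 and 25 keeps an utterance alive but cannot start one.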

Demo download: SpeechRecognitionEngineDemo