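Some discussions on social media are about disasters, diseases or riots, while others are just jokes or movie plots. How can we get a machine to tell these two kinds of discussion apart?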

I. Data Processing

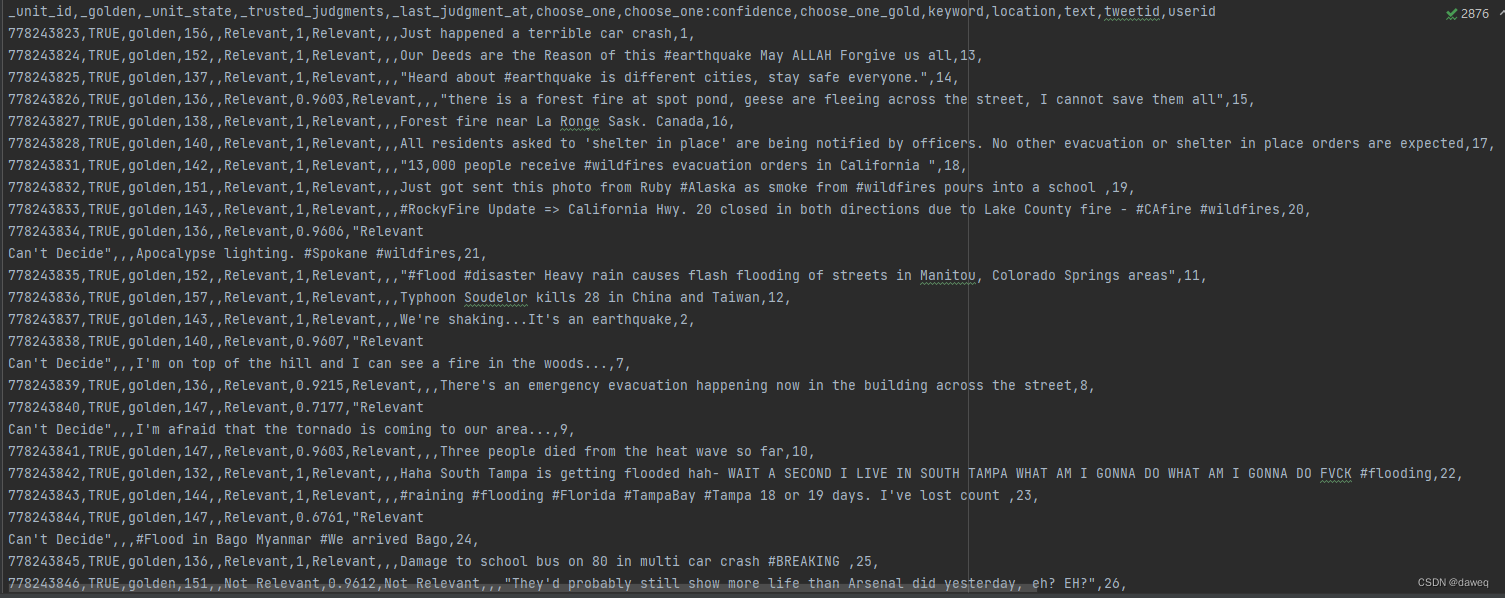

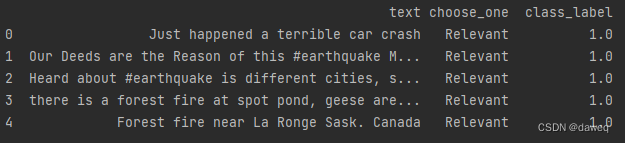

(1) Extract the useful parts of the raw data

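For this task, only two columns are useful: text (the post content) and choose_one (whether the post is related to a disaster or a similar event). To make the later machine-learning steps easier, the choose_one labels are strings that a model cannot use directly. They therefore also need to be converted into a simple numeric encoding, stored in a new class_label column.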

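```python
import pandas as pd

questions = pd.read_csv("socialmedia_relevant_cols_clean.csv")
# keep only the post text and its label; .copy() avoids pandas' SettingWithCopyWarning below
questions = questions[['text', 'choose_one']].copy()

# map each label string to an integer class id
A = {'Not Relevant': 0, 'Relevant': 1, "Can't Decide": 2}
for i in A:
    questions.loc[questions.choose_one == i, 'class_label'] = int(A[i])

# print(questions.head(5))
```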
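The loop above can also be written with pandas' `Series.map`, which looks up every label in the dictionary in a single pass. The following is a minimal sketch on three made-up rows. The toy DataFrame and the name `label_ids` are hypothetical and do not come from the real dataset.

```python
import pandas as pd

# hypothetical toy rows, not taken from the real dataset
toy = pd.DataFrame({
    'text': ['forest fire near la ronge', 'this movie is the bomb', 'not sure about this one'],
    'choose_one': ['Relevant', 'Not Relevant', "Can't Decide"],
})

label_ids = {'Not Relevant': 0, 'Relevant': 1, "Can't Decide": 2}
# map() replaces each label string with its id; a label missing from the dict would become NaN
toy['class_label'] = toy['choose_one'].map(label_ids)
print(toy['class_label'].tolist())
```

There is one small difference from the loop. The loop creates `class_label` as a float column (the new column starts out as NaN). `map` keeps integer ids as long as every label is found in the dictionary.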

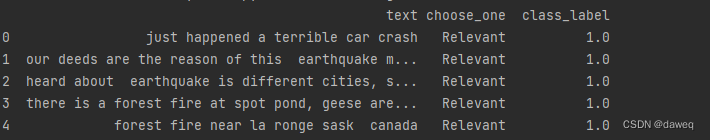

(2) Clean the text content

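Use regular expressions to strip the unwanted parts of the text: URLs, @-mentions and any character outside a small whitelist. Then lowercase everything.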

```python
def standardize_text(df, text_field):
    # remove URLs, then any leftover "http"
    df[text_field] = df[text_field].str.replace(r'http\S+', '', regex=True)
    df[text_field] = df[text_field].str.replace(r'http', '', regex=True)
    # remove @mentions, then turn any stray "@" into "at"
    df[text_field] = df[text_field].str.replace(r'@\S+', '', regex=True)
    df[text_field] = df[text_field].str.replace(r'@', 'at', regex=True)
    # replace every character outside this whitelist with a space
    df[text_field] = df[text_field].str.replace(r'[^A-Za-z0-9(),!?@\'\`\"\_\n]', ' ', regex=True)
    # lowercase everything
    df[text_field] = df[text_field].str.lower()
    return df

questions = standardize_text(questions, 'text')
# index=False keeps an extra "Unnamed: 0" column out of the file when it is read back
questions.to_csv('clean_data.csv', encoding='utf-8', index=False)

# print(questions.head(5))
```
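The regexes above are easier to follow on a single example. The sketch below applies the same substitutions, in the same order, to one string with the standard `re` module. The helper name `standardize_string` and the sample tweet are made up for illustration.

```python
import re

def standardize_string(s):
    # hypothetical single-string version of standardize_text, same regexes in the same order
    s = re.sub(r'http\S+', '', s)   # drop URLs
    s = re.sub(r'http', '', s)      # drop any leftover "http"
    s = re.sub(r'@\S+', '', s)      # drop @mentions (plus anything glued to them)
    s = re.sub(r'@', 'at', s)       # turn a stray "@" into "at"
    s = re.sub(r'[^A-Za-z0-9(),!?@\'\`\"\_\n]', ' ', s)  # non-whitelisted chars -> space
    return s.lower()

raw = "Forest fire near @user! http://t.co/abc #Wildfire"
cleaned = standardize_string(raw)
# collapse the runs of spaces left behind by the removals
print(' '.join(cleaned.split()))
```

Two side effects are worth noting. `@\S+` also swallows the "!" attached to the mention. The "#" of a hashtag is replaced by a space, so the hashtag word itself survives as an ordinary token.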

(3) Tokenization

This tokenization step turns each text directly into a list of word tokens.

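```python
import pandas as pd
from nltk.tokenize import RegexpTokenizer

clean_questions = pd.read_csv("clean_data.csv")
# posts that became empty after cleaning are read back as NaN; treat them as empty strings
clean_questions['text'] = clean_questions['text'].fillna('')

# \w+ keeps runs of letters, digits and underscores and drops everything else
tokenizer = RegexpTokenizer(r'\w+')
clean_questions['tokens'] = clean_questions['text'].apply(tokenizer.tokenize)
print(clean_questions['tokens'].head(5))
```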
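With its default `gaps=False`, NLTK's `RegexpTokenizer` returns every match of its pattern, which is exactly what `re.findall` does. The sketch below uses `re.findall` as a dependency-free stand-in to show one consequence. The cleaning step keeps apostrophes and commas, but `\w+` splits words at both of them. The `tokenize` helper is hypothetical.

```python
import re

def tokenize(text):
    # stand-in for RegexpTokenizer(r'\w+').tokenize, which uses findall when gaps=False
    return re.findall(r'\w+', text)

print(tokenize("can't decide, it's 13,000 people!"))
```

Contractions therefore become fragments such as "can" + "t", and numbers with thousands separators are split into pieces.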

II. Testing with a Bag-of-Words Model

(1) Use a bag-of-words model to turn each text into a word-count vector.

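```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
from sklearn.model_selection import train_test_split

# assumption: the train/test split is not shown in the original post; this version uses
# an 80/20 split with the same random_state as the Word2Vec section below
list_corpus = clean_questions['text'].tolist()
list_labels = clean_questions['class_label'].tolist()
X_train, X_test, y_train, y_test = train_test_split(list_corpus, list_labels,
                                                    test_size=0.2, random_state=3)

def cv(data):
    # learn the vocabulary from the training texts and return the count matrix
    count_vectorizer = CountVectorizer()
    emb = count_vectorizer.fit_transform(data)
    return emb, count_vectorizer

X_train_counts, count_vectorizer = cv(X_train)
# reuse the training vocabulary for the test set (transform, not fit_transform)
X_test_counts = count_vectorizer.transform(X_test)
```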
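To make the fit/transform split concrete, here is a small pure-Python sketch of what a count vectorizer computes. It assumes `CountVectorizer`'s default settings: lowercasing, and the token pattern `(?u)\b\w\w+\b`, which keeps only tokens of two or more word characters. The helpers `fit_vocabulary` and `transform` are hypothetical names, not scikit-learn functions.

```python
import re
from collections import Counter

# same as CountVectorizer's default token_pattern: tokens of 2+ word characters
TOKEN = re.compile(r'(?u)\b\w\w+\b')

def fit_vocabulary(docs):
    # sorted vocabulary -> column index, learned from the training docs only
    words = sorted({w for d in docs for w in TOKEN.findall(d.lower())})
    return {w: i for i, w in enumerate(words)}

def transform(docs, vocab):
    # one count vector per document; words outside the vocabulary are ignored
    rows = []
    for d in docs:
        counts = Counter(w for w in TOKEN.findall(d.lower()) if w in vocab)
        row = [0] * len(vocab)
        for w, c in counts.items():
            row[vocab[w]] = c
        rows.append(row)
    return rows

train_docs = ["fire in the city", "the city is on fire fire"]
vocab = fit_vocabulary(train_docs)
print(vocab)                                    # {'city': 0, 'fire': 1, 'in': 2, 'is': 3, 'on': 4, 'the': 5}
print(transform(train_docs, vocab))             # [[1, 1, 1, 0, 0, 1], [1, 2, 0, 1, 1, 1]]
print(transform(["flood in the city"], vocab))  # [[1, 0, 1, 0, 0, 1]], "flood" was never seen
```

This is why the test set is only transformed. A word that never appears in the training tweets gets no column, so the model cannot rely on information it was not trained on. With this default pattern, single-character tokens such as the "t" left over from "can't" are also dropped.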

(2) Train and test the model

```python
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# with solver='newton-cg', a multiclass target is already fit as a multinomial (softmax) model;
# the explicit multi_class='multinomial' argument is deprecated in recent scikit-learn
clf = LogisticRegression(C=30, class_weight='balanced', solver='newton-cg', random_state=40)
clf.fit(X_train_counts, y_train)
y_predicted_counts = clf.predict(X_test_counts)

def get_metrics(y_test, y_predicted):
    # average='weighted': compute each metric per class, then average weighted by class support
    # precision = true positives / (true positives + false positives)
    precision = precision_score(y_test, y_predicted, average='weighted')
    # recall = true positives / (true positives + false negatives)
    recall = recall_score(y_test, y_predicted, average='weighted')
    # F1 = harmonic mean of precision and recall
    f1 = f1_score(y_test, y_predicted, average='weighted')
    # accuracy = correctly classified samples / total samples
    accuracy = accuracy_score(y_test, y_predicted)
    return accuracy, precision, recall, f1
```

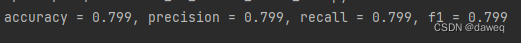

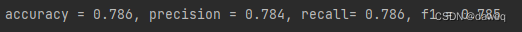

```python
accuracy, precision, recall, f1 = get_metrics(y_test, y_predicted_counts)
print("accuracy = %0.3f, precision = %0.3f, recall = %0.3f, f1 = %0.3f" % (accuracy, precision, recall, f1))
```
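The comments in `get_metrics` give the binary definitions. With three classes and `average='weighted'`, each metric is computed per class and then averaged with weights equal to each class's number of true samples. Below is a hand-rolled sketch of that averaging on made-up labels. The `weighted_metrics` helper is hypothetical. It assumes, as scikit-learn does by default (with a warning), that a class which is never predicted gets precision 0.

```python
from collections import Counter

def weighted_metrics(y_true, y_pred):
    # per-class precision/recall/F1, averaged with weights = class support (like average='weighted')
    support = Counter(y_true)
    n = len(y_true)
    p_sum = r_sum = f_sum = 0.0
    for c, s in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        predicted = sum(1 for p in y_pred if p == c)
        prec = tp / predicted if predicted else 0.0  # never-predicted class -> precision 0
        rec = tp / s
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        p_sum += s * prec
        r_sum += s * rec
        f_sum += s * f1
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / n
    return accuracy, p_sum / n, r_sum / n, f_sum / n

# hypothetical labels: 0 = Not Relevant, 1 = Relevant, 2 = Can't Decide
y_true = [0, 0, 0, 1, 1, 2]
y_pred = [0, 0, 1, 1, 1, 0]
print(weighted_metrics(y_true, y_pred))
```

On these labels the results are:

- precision = 5/9 ≈ 0.556
- F1 = 0.6
- recall = 2/3
- accuracy = 2/3

Weighted recall always equals accuracy. Each class's recall is multiplied by its own support, so the weighted sum simply counts every correct prediction once.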

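(3) TF-IDF bag of words

TF-IDF keeps the same bag-of-words vocabulary but down-weights words that appear in many documents, so common words carry less weight than distinctive ones.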

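```python
from sklearn.feature_extraction.text import TfidfVectorizer

def tfidf(data):
    # learn the vocabulary and idf weights from the training texts
    tfidf_vectorizer = TfidfVectorizer()
    train = tfidf_vectorizer.fit_transform(data)
    return train, tfidf_vectorizer

# same pattern as the count vectors: fit on the training set, only transform the test set
X_train_tfidf, tfidf_vectorizer = tfidf(X_train)
X_test_tfidf = tfidf_vectorizer.transform(X_test)
```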
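To see what `TfidfVectorizer()` computes with its default settings, here is a pure-Python sketch. It assumes those defaults: `smooth_idf=True`, `sublinear_tf=False`, `norm='l2'` and the same token pattern as `CountVectorizer`. Under those settings, each count is multiplied by idf = ln((1 + n) / (1 + df)) + 1, and each document vector is then scaled to unit length. The helper `tfidf_matrix` is hypothetical.

```python
import math
import re
from collections import Counter

TOKEN = re.compile(r'(?u)\b\w\w+\b')  # default token pattern of Count/TfidfVectorizer

def tfidf_matrix(docs):
    # sketch of TfidfVectorizer defaults: smooth_idf=True, sublinear_tf=False, norm='l2'
    tokenized = [TOKEN.findall(d.lower()) for d in docs]
    vocab = sorted({w for toks in tokenized for w in toks})
    n = len(docs)
    # document frequency: in how many documents each word appears
    df = Counter(w for toks in tokenized for w in set(toks))
    # smoothed idf: ln((1 + n) / (1 + df)) + 1
    idf = {w: math.log((1 + n) / (1 + df[w])) + 1 for w in vocab}
    rows = []
    for toks in tokenized:
        tf = Counter(toks)
        row = [tf[w] * idf[w] for w in vocab]
        norm = math.sqrt(sum(v * v for v in row)) or 1.0
        rows.append([v / norm for v in row])  # L2-normalise each document vector
    return vocab, idf, rows

vocab, idf, rows = tfidf_matrix(["fire in the city", "the city is on fire fire"])
# "fire" appears in every document, so it gets the minimum idf of 1.0; "in" appears in one
print(idf["fire"], idf["in"])
```

The "+ 1" means that words found in every tweet are down-weighted but never ignored entirely.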

III. Testing with a Word2Vec Model

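```python
import gensim
import numpy as np

word2vec_path = 'data/GoogleNews-vectors-negative300.bin'
# load the pretrained 300-dimensional Google News vectors (binary word2vec format)
word2vec = gensim.models.KeyedVectors.load_word2vec_format(word2vec_path, binary=True)

def get_average_word2vec(tokens_list, vector, generate_missing=False, k=300):
    # an empty token list gets an all-zero vector
    if len(tokens_list) < 1:
        return np.zeros(k)
    # out-of-vocabulary words become random vectors (generate_missing=True) or zero vectors
    if generate_missing:
        vectorized = [vector[word] if word in vector else np.random.rand(k) for word in tokens_list]
    else:
        vectorized = [vector[word] if word in vector else np.zeros(k) for word in tokens_list]
    # average the word vectors into a single sentence vector
    length = len(vectorized)
    summed = np.sum(vectorized, axis=0)
    averaged = np.divide(summed, length)
    return averaged
```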
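The averaging step is easiest to check with tiny vectors. The sketch below reimplements the default path of `get_average_word2vec` (`generate_missing=False`) under a hypothetical name. It uses a made-up 3-dimensional dictionary in place of the 300-dimensional Google News vectors; a plain dict supports the same `in` and `[]` lookups the function relies on.

```python
import numpy as np

def average_vector(tokens, vectors, k):
    # same idea as get_average_word2vec with generate_missing=False:
    # unknown words contribute a zero vector but still count in the denominator
    if not tokens:
        return np.zeros(k)
    stacked = [vectors[w] if w in vectors else np.zeros(k) for w in tokens]
    return np.mean(stacked, axis=0)

# hypothetical 3-d "embeddings" standing in for the pretrained vectors
toy_vectors = {"fire": np.array([1.0, 0.0, 0.0]), "city": np.array([0.0, 1.0, 0.0])}
print(average_vector(["fire", "city"], toy_vectors, k=3))
print(average_vector(["fire", "city", "zzz"], toy_vectors, k=3))
```

The second call gives (1/3, 1/3, 0) instead of (0.5, 0.5, 0). Every out-of-vocabulary token, for example a misspelling or a leftover fragment from tokenization, pulls the tweet's vector toward zero.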

```python
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

def get_word2vec_embeddings(vectors, clean_questions, generate_missing=False):
    # one averaged vector per post
    embeddings = clean_questions['tokens'].apply(
        lambda x: get_average_word2vec(x, vectors, generate_missing=generate_missing))
    return list(embeddings)

embeddings = get_word2vec_embeddings(word2vec, clean_questions)
# labels in the same row order as the embeddings
list_labels = clean_questions['class_label'].tolist()
X_train_word2vec, X_test_word2vec, y_train_word2vec, y_test_word2vec = train_test_split(
    embeddings, list_labels, test_size=0.2, random_state=3)

clf_w2v = LogisticRegression(C=30, class_weight='balanced', solver='newton-cg', random_state=40)
clf_w2v = clf_w2v.fit(X_train_word2vec, y_train_word2vec)
y_predicted_word2vec = clf_w2v.predict(X_test_word2vec)

accuracy_w2v, precision_w2v, recall_w2v, f1_w2v = get_metrics(y_test_word2vec, y_predicted_word2vec)
print("accuracy = %.3f, precision = %.3f, recall = %.3f, f1 = %.3f" %
      (accuracy_w2v, precision_w2v, recall_w2v, f1_w2v))
```