由于Swin_Transformer_minivit代码是在Swin_Transformer的基础上改的,所以文章仅解读相对原改变的部分,有需要的reader可移步到下面的链接看Swin_Transformer的代码解读。swin-transformer代码详解_社区小铁匠的博客-CSDN博客![]() https://blog.csdn.net/tiehanhanzainal/article/details/125041407?spm=1001.2014.3001.5501

https://blog.csdn.net/tiehanhanzainal/article/details/125041407?spm=1001.2014.3001.5501

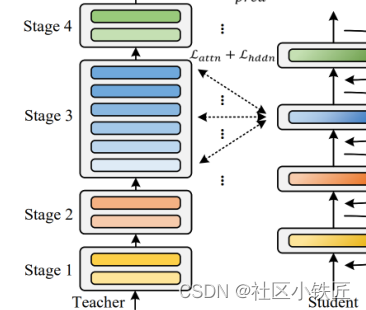

如上图所示,在每个阶段共享MSA和MLP的权值,并添加两个转换块来增加参数的多样性。转换块和规范化层不是共享的。

在代码层面,第一个不同的地方是在WindowAttention模块的结构,第二个存在差异的是SwintransformBlock部分,对应到上图中为第二个transform模块。

WindowAttention

该模块在window上进行自注意力操作中的之后为每个window添加相对位置坐标,再经过一个线性层,执行掩模操作,再经过一个线性层,再执行dropout正则化以防止过拟合,到这了已经完成了WindowAttention模块里面的Transform变化,后续的变化与原结构相同就不一一赘述。

class WindowAttention(nn.Module):

""" Window based multi-head self attention (W-MSA) module with relative position bias.

It supports both of shifted and non-shifted window.

Args:

dim (int): Number of input channels.

window_size (tuple[int]): The height and width of the window.

num_heads (int): Number of attention heads.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set

attn_drop (float, optional): Dropout ratio of attention weight. Default: 0.0

proj_drop (float, optional): Dropout ratio of output. Default: 0.0

"""

def __init__(self, dim, window_size, num_heads, qkv_bias=True, qk_scale=None, attn_drop=0., proj_drop=0.,):

super().__init__()

self.dim = dim

self.window_size = window_size # Wh, Ww

self.num_heads = num_heads

head_dim = dim // num_heads

self.scale = qk_scale or head_dim ** -0.5

self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)

self.attn_drop = nn.Dropout(attn_drop)

self.proj = nn.Linear(dim, dim)

self.proj_drop = nn.Dropout(proj_drop)

self.softmax = nn.Softmax(dim=-1)

# define a parameter table of relative position bias

# 相邻window的相对位置偏差

self.relative_position_bias_table = nn.Parameter(

torch.zeros((2 * window_size[0] - 1) * (2 * window_size[1] - 1), num_heads)) # 2*Wh-1 * 2*Ww-1, nH

# get pair-wise relative position index for each token inside the window

# 获取窗口内每个标记的成对相对位置索引

coords_h = torch.arange(self.window_size[0]) # 产生wh个元素的一维张量

coords_w = torch.arange(self.window_size[1])

# torch.stack():沿着一个新维度对输入张量序列进行连接,一维变二维,二维变三维

coords = torch.stack(torch.meshgrid([coords_h, coords_w])) # 2, Wh, Ww

coords_flatten = torch.flatten(coords, 1) # 2, Wh*Ww

relative_coords = coords_flatten[:, :, None] - coords_flatten[:, None, :] # 2, Wh*Ww, Wh*Ww 广播减法

relative_coords = relative_coords.permute(1, 2, 0).contiguous() # Wh*Ww, Wh*Ww, 2

# 因为采取的是相减 ,所以得到的索引是从负数开始的 ,所以加上偏移量 ,让其从0开始

relative_coords[:, :, 0] += self.window_size[0] - 1 # shift to start from 0

relative_coords[:, :, 1] += self.window_size[1] - 1

# 后续我们需要将其展开成一维偏移量 而对于(x ,y)和(y ,x)这两个坐标 在二维上是不同的,

# 但是通过将x,y坐标相加转换为一维偏移的时候,他的偏移量是相等的,所以对其做乘法以进行区分

relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1

relative_position_index = relative_coords.sum(-1) # Wh*Ww, Wh*Ww

self.register_buffer("relative_position_index", relative_position_index)

trunc_normal_(self.relative_position_bias_table, std=.02)

def forward(self, x, mask=None, proj_l=None, proj_w=None):

"""

Args:

x: input features with shape of (num_windows*B, N, C)

mask: (0/-inf) mask with shape of (num_windows, Wh*Ww, Wh*Ww) or None

"""

B_, N, C = x.shape # (B*H*W/(window_size)**2, window_size, window_size, c)

qkv = self.qkv(x).reshape(B_, N, 3, self.num_heads, C // self.num_heads).permute(2, 0, 3, 1, 4) # (B_, num_heads, N, D)

q, k, v = qkv[0], qkv[1], qkv[2] # make torchscript happy (cannot use tensor as tuple)

q = q * self.scale

# (B_, num_heads, N, N)

attn = (q @ k.transpose(-2, -1))

# 添加相对位置坐标

relative_position_bias = self.relative_position_bias_table[self.relative_position_index.view(-1)].view(

self.window_size[0] * self.window_size[1], self.window_size[0] * self.window_size[1], -1) # Wh*Ww,Wh*Ww,nH

relative_position_bias = relative_position_bias.permute(2, 0, 1).contiguous() # nH, Wh*Ww, Wh*Ww

attn = attn + relative_position_bias.unsqueeze(0)

# ------------------------------------------------------------------------------------------------------

# 第一个transform的第一个线性层

if proj_l is not None:

attn = proj_l(attn.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)

if mask is not None:

nW = mask.shape[0]

attn = attn.view(B_ // nW, nW, self.num_heads, N, N) + mask.unsqueeze(1).unsqueeze(0)

attn = attn.view(-1, self.num_heads, N, N)

attn = self.softmax(attn)

else:

attn = self.softmax(attn)

# 第一个transform的第二个线性层

if proj_w is not None:

attn = proj_w(attn.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)

attn = self.attn_drop(attn)

# ------------------------------------------------------------------------------------------------------

x = (attn @ v).transpose(1, 2).reshape(B_, N, C)

x = self.proj(x)

x = self.proj_drop(x)

return x

SwintransformBlock

该模块在两个shortcut之间添加了一个层归一化与一个线性层 。具体代码如下:

class SwinTransformerBlock(nn.Module):

r""" Swin Transformer Block.

Args:

dim (int): Number of input channels.

input_resolution (tuple[int]): Input resulotion.

num_heads (int): Number of attention heads.

window_size (int): Window size.

shift_size (int): Shift size for SW-MSA.

mlp_ratio (float): Ratio of mlp hidden dim to embedding dim.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set.

drop (float, optional): Dropout rate. Default: 0.0

attn_drop (float, optional): Attention dropout rate. Default: 0.0

drop_path (float, optional): Stochastic depth rate. Default: 0.0

act_layer (nn.Module, optional): Activation layer. Default: nn.GELU

norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm

"""

def __init__(self, dim, input_resolution, num_heads, window_size=7,

mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0., shift_size=0., drop_path=[0],

act_layer=nn.GELU, norm_layer=nn.LayerNorm,

# The following arguments are for MiniViT

# 是否使用window滑块

is_init_window_shift=False,

# 是否在每个共享层中使用单独的层归一化

is_sep_layernorm = False,

# 第二个transform:层归一化+分组卷积

is_transform_FFN=False,

# 是否对 MSA 使用转换(windowAttention模块里面的transform)

is_transform_heads = False,):

super().__init__()

self.dim = dim

self.input_resolution = input_resolution # H, W

self.num_heads = num_heads

self.window_size = window_size # 7

self.mlp_ratio = mlp_ratio

self.share_num = len(drop_path) # students网络参数共享层数

self.is_init_window_shift = is_init_window_shift

self.is_sep_layernorm = is_sep_layernorm

self.is_transform_FFN = is_transform_FFN

self.is_transform_heads = is_transform_heads

# ------------------------------------------------------------------

# 对参与权重共享的层进行归一化操作

if self.is_sep_layernorm:

self.norm1_list = nn.ModuleList()

for _ in range(self.share_num):

self.norm1_list.append(norm_layer(dim))

else:

self.norm1 = norm_layer(dim)

# 对MSA使用转换(windowAttention模块里面的transform)

if self.is_transform_heads:

self.proj_l = nn.ModuleList()

self.proj_w = nn.ModuleList()

for _ in range(self.share_num):

self.proj_l.append(nn.Linear(num_heads, num_heads))

self.proj_w.append(nn.Linear(num_heads, num_heads))

else:

self.proj_l = None

self.proj_w = None

# ------------------------------------------------------------------

self.attn = WindowAttention(

dim, window_size=to_2tuple(self.window_size), num_heads=num_heads,

qkv_bias=qkv_bias, qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop,)

if min(self.input_resolution) <= self.window_size:

# if window size is larger than input resolution, we don't partition windows

shift_size = 0

self.window_size = min(self.input_resolution)

assert 0 <= shift_size < self.window_size, "shift_size must in 0-window_size"

self.shift_size = shift_size

if shift_size > 0:

# calculate attention mask for SW-MSA

H, W = self.input_resolution

img_mask = torch.zeros((1, H, W, 1)) # 1 H W 1

h_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

w_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

cnt = 0

for h in h_slices:

for w in w_slices:

img_mask[:, h, w, :] = cnt

cnt += 1

mask_windows = window_partition(img_mask, self.window_size) # nW, window_size, window_size, 1

mask_windows = mask_windows.view(-1, self.window_size * self.window_size)

attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)

attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))

else:

attn_mask = None

self.register_buffer("attn_mask", attn_mask)

# ------------------------------------------------------------------

if self.is_sep_layernorm:

self.norm2_list = nn.ModuleList()

for _ in range(self.share_num):

self.norm2_list.append(norm_layer(dim))

else:

self.norm2 = norm_layer(dim)

# ------------------------------------------------------------------

mlp_hidden_dim = int(dim * mlp_ratio)

self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, act_layer=act_layer, drop=drop)

self.drop_path = nn.ModuleList()

for index, drop_path_value in enumerate(drop_path):

self.drop_path.append(DropPath(drop_path_value) if drop_path_value > 0. else nn.Identity())

# ------------------------------------------------------------------

# 第二个transform的结构,层归一化+分组卷积

if self.is_transform_FFN:

self.local_norm_list = nn.ModuleList() # 层进行归一化

self.local_conv_list = nn.ModuleList() # 分组卷积卷积层

self.local_norm_list.append(norm_layer(dim))

_window_size = 7

self.local_conv_list.append(nn.Conv2d(dim, dim, _window_size, 1, _window_size // 2, groups=dim, bias=qkv_bias))

else:

self.local_conv_list = None

# ------------------------------------------------------------------

def forward_feature(self, x, is_shift=False, layer_index=0):

H, W = self.input_resolution

B, L, C = x.shape

assert L == H * W, "input feature has wrong size"

shortcut = x

if self.is_sep_layernorm:

x = self.norm1_list[layer_index](x) # 取每个stage的第一层进行归一化

else:

x = self.norm1(x) # 对所有层进行层归一化

x = x.view(B, H, W, C)

# cyclic shift

if is_shift and self.shift_size > 0:

shifted_x = torch.roll(x, shifts=(-self.shift_size, -self.shift_size), dims=(1, 2))

else:

shifted_x = x

# partition windows

x_windows = window_partition(shifted_x, self.window_size) # nW*B, window_size, window_size, C

x_windows = x_windows.view(-1, self.window_size * self.window_size, C) # nW*B, window_size*window_size, C

# 对stage的第一层进行操作,第一个transform的第一个线性变换,在windowAttention调用

proj_l = self.proj_l[layer_index] if self.is_transform_heads else self.proj_l

# 第一个transform的第二个线性变换,在windowAttention调用

proj_w = self.proj_w[layer_index] if self.is_transform_heads else self.proj_w

# W-MSA/SW-MSA

attn_windows = self.attn(x_windows, mask=self.attn_mask, proj_l=proj_l, proj_w=proj_w) # nW*B, window_size*window_size, C

# merge windows

attn_windows = attn_windows.view(-1, self.window_size, self.window_size, C)

shifted_x = window_reverse(attn_windows, self.window_size, H, W) # B H' W' C

# reverse cyclic shift

if is_shift and self.shift_size > 0:

x = torch.roll(shifted_x, shifts=(self.shift_size, self.shift_size), dims=(1, 2))

else:

x = shifted_x

x = x.view(B, H * W, C)

# FFN

x = shortcut + self.drop_path[layer_index](x)

# -----------------------------------------------------------------------------------

# 第二个transform的结构,层归一化+分组卷积

if self.local_conv_list is not None:

x = self.local_norm_list[layer_index](x)

x = x.permute(0, 2, 1).view(B, C, H, W)

x = x + self.local_conv_list[layer_index](x)

x = x.view(B, C, H * W).permute(0, 2, 1)

# -----------------------------------------------------------------------------------

norm2 = self.norm2_list[layer_index] if self.is_sep_layernorm else self.norm2

x = x + self.drop_path[layer_index](self.mlp(norm2(x)))

return x

def forward(self, x):

init_window_shift = self.is_init_window_shift

for index in range(self.share_num):

x = self.forward_feature(x, init_window_shift, index)

init_window_shift = not init_window_shift

return x

BasicLayer

首先swin_transform_minivit在主体结构上的变化如上图所示,swin_transform_minivit阶段的数量是可配置的,而不是固定。待压缩的原始模型各阶段的transformer层应具有相同的结构和尺寸。但是没有采用权重蒸馏,所以代码里面只有将stage压缩的部分具体代码如下:

class BasicLayer(nn.Module):

""" A basic Swin Transformer layer for one stage.

Args:

dim (int): Number of input channels.

input_resolution (tuple[int]): Input resolution.

depth (int): Number of blocks.

num_heads (int): Number of attention heads.

window_size (int): Local window size.

mlp_ratio (float): Ratio of mlp hidden dim to embedding dim.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set.

drop (float, optional): Dropout rate. Default: 0.0

attn_drop (float, optional): Attention dropout rate. Default: 0.0

drop_path (float | tuple[float], optional): Stochastic depth rate. Default: 0.0

norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm

downsample (nn.Module | None, optional): Downsample layer at the end of the layer. Default: None

use_checkpoint (bool): Whether to use checkpointing to save memory. Default: False.

"""

def __init__(self, dim, input_resolution, depth, num_heads, window_size,

mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0.,

drop_path=[0.], norm_layer=nn.LayerNorm, downsample=None, use_checkpoint=False,

# The following parameters are for MiniViT

is_sep_layernorm = False,

is_transform_FFN=False,

is_transform_heads = False,

separate_layer_num = 1,

):

super().__init__()

## drop path must be a list

assert(isinstance(drop_path, list))

self.dim = dim

self.input_resolution = input_resolution # W ,H

self.depth = depth

self.use_checkpoint = use_checkpoint

self.share_times = depth // separate_layer_num # 共享次数 = 网络深度//需要分离的层数

self.separate_layer_num = separate_layer_num

# build blocks

self.blocks = nn.ModuleList()

# 每个stage模块生成一个larly

for i in range(self.separate_layer_num):

# 确定dropout使用的比例

drop_path_list = drop_path[(i*self.share_times): min((i+1)*self.share_times, depth)]

self.blocks.append(SwinTransformerBlock(dim=dim,

input_resolution=input_resolution,

num_heads=num_heads, window_size=window_size,

shift_size=window_size // 2,

mlp_ratio=mlp_ratio,

qkv_bias=qkv_bias, qk_scale=qk_scale,

drop=drop, attn_drop=attn_drop,

drop_path=drop_path_list,

norm_layer=norm_layer,

## The following arguments are for MiniViT

is_init_window_shift = (i*self.share_times)%2==1,

is_sep_layernorm = is_sep_layernorm,

is_transform_FFN = is_transform_FFN,

is_transform_heads = is_transform_heads,

))

# patch merging layer

if downsample is not None:

self.downsample = downsample(input_resolution, dim=dim, norm_layer=norm_layer)

else:

self.downsample = None

def forward(self, x):

for blk in self.blocks:

if self.use_checkpoint:

x = checkpoint.checkpoint(blk, x)

else:

x = blk(x)

if self.downsample is not None:

x = self.downsample(x)

return x

后续再更新。