Contents

How does backpropagation work?

How does backpropagation work?

The new values are shown in red.

another example:

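Add gate: gradient distributor. Its local gradient is 1 for both inputs, so the upstream gradient is passed unchanged to each of them.

Q: What's a max gate? A gradient router.

The max gate takes the upstream gradient and routes it to just one branch: the input that was larger in the forward pass. The other branch gets a gradient of zero.

Like this: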

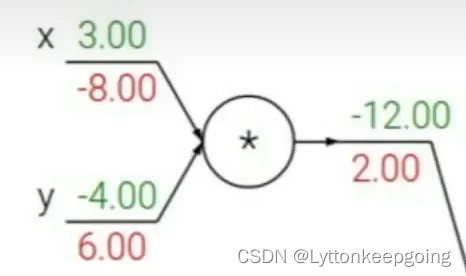
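To make the add and max gate patterns concrete, here is a minimal sketch in plain Python. The function names `add_backward` and `max_backward` are made up for illustration and do not come from any library; `dz` stands for the upstream gradient arriving at the gate's output.

```python
def add_backward(dz):
    """Add gate z = x + y: both local gradients are 1,
    so the upstream gradient is passed unchanged to both inputs."""
    return dz, dz


def max_backward(x, y, dz):
    """Max gate z = max(x, y): the upstream gradient goes to the
    larger input; the other input gets zero gradient."""
    # Tie-breaking when x == y is a convention; here x wins (assumption).
    if x >= y:
        return dz, 0.0
    return 0.0, dz


print(add_backward(2.0))             # (2.0, 2.0)
print(max_backward(4.0, -1.0, 2.0))  # (2.0, 0.0)
```

Note that the max gate needs the forward-pass inputs to decide where the gradient goes, while the add gate needs nothing but the upstream gradient.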

Q: What's a mul gate?

The gradient for each input depends on the value of the other input: the upstream gradient is multiplied by the other input's value.

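In a vectorized example:

The key detail is the transpose x^T. For example, if q = W·x, the gradient with respect to W is the upstream gradient on q multiplied by x^T, which also makes its shape match W.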
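A minimal NumPy sketch of the vectorized case, assuming the gate computes q = W·x with W a matrix and x a column vector, followed by f = sum(q²). The function f, the shapes and the values are made up for illustration. It shows where x^T appears and checks one entry of the gradient numerically.

```python
import numpy as np

W = np.array([[0.1, 0.5],
              [-0.3, 0.8]])
x = np.array([[0.2],
              [0.4]])          # column vector, shape (2, 1)

# Forward pass: q = W x, then f = sum of squares of q.
q = W @ x
f = float(np.sum(q ** 2))

# Backward pass.
dq = 2.0 * q                   # df/dq, same shape as q
dW = dq @ x.T                  # x transposed: (2, 1) @ (1, 2) -> (2, 2)
dx = W.T @ dq                  # (2, 2) @ (2, 1) -> (2, 1)

# A gradient always has the same shape as the variable it belongs to.
assert dW.shape == W.shape and dx.shape == x.shape

# Finite-difference check of one entry of dW.
h = 1e-6
W_nudged = W.copy()
W_nudged[0, 1] += h
f_nudged = float(np.sum((W_nudged @ x) ** 2))
print(np.isclose((f_nudged - f) / h, dW[0, 1], atol=1e-4))  # True
```

Checking shapes is a handy way to remember which operand gets transposed: `dq @ x.T` is the only product of these two arrays that has the shape of `W`.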

Code for the forward/backward API:

    class ComputationalGraph(object):
        # Pseudocode sketch: self.graph, loss and inputs_gradients are placeholders.
        def forward(self, inputs):
            # 1. [pass inputs to input gates...]
            # 2. forward the computational graph:
            for gate in self.graph.nodes_topologically_sorted():
                gate.forward()
            return loss  # the final gate in the graph outputs the loss

        def backward(self):
            # visit the gates in reverse topological order; each applies the chain rule
            for gate in reversed(self.graph.nodes_topologically_sorted()):
                gate.backward()
            return inputs_gradients
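The pseudocode above leaves the graph structure abstract. As one possible way to make the two loops concrete, here is a small runnable sketch for f = (x + y) * z. The class names (`Node`, `Add`, `Mul`), the input values, and the hand-written node ordering are all assumptions for illustration, not a real framework API.

```python
class Node:
    """Base node: holds a forward value and an accumulated gradient."""

    def __init__(self, value=0.0):
        self.value = value
        self.grad = 0.0

    def forward(self):   # input nodes have nothing to compute
        pass

    def backward(self):  # input nodes have nothing to propagate
        pass


class Add(Node):
    def __init__(self, a, b):
        super().__init__()
        self.a, self.b = a, b

    def forward(self):
        self.value = self.a.value + self.b.value

    def backward(self):
        # add gate: pass the upstream gradient to both inputs
        self.a.grad += self.grad
        self.b.grad += self.grad


class Mul(Node):
    def __init__(self, a, b):
        super().__init__()
        self.a, self.b = a, b

    def forward(self):
        self.value = self.a.value * self.b.value

    def backward(self):
        # mul gate: scale the upstream gradient by the other input
        self.a.grad += self.b.value * self.grad
        self.b.grad += self.a.value * self.grad


x, y, z = Node(-2.0), Node(5.0), Node(-4.0)
q = Add(x, y)
f = Mul(q, z)
nodes = [x, y, z, q, f]          # written by hand in topological order

for node in nodes:               # forward pass
    node.forward()

f.grad = 1.0                     # df/df = 1 seeds the backward pass
for node in reversed(nodes):     # backward pass
    node.backward()

print(f.value)                   # -12.0
print(x.grad, y.grad, z.grad)    # -4.0 -4.0 3.0
```

Gradients are accumulated with `+=` so that a node feeding several gates would sum the contributions from each of them.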

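Code for a multiply gate:

    class MultiplyGate(object):
        def forward(self, x, y):
            # cache the inputs; the backward pass needs them
            self.x = x
            self.y = y
            z = x * y
            return z

        def backward(self, dz):
            # dz is the upstream gradient dL/dz
            dx = self.y * dz  # dL/dx = dz/dx * dL/dz = y * dz
            dy = self.x * dz  # dL/dy = dz/dy * dL/dz = x * dz
            return [dx, dy]

If you just stack linear layers on top of each other, they collapse into a single linear function!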
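The collapse of stacked linear layers can be checked numerically. The sketch below uses NumPy with made-up random weights (the shapes and the seed are arbitrary assumptions): two linear layers give the same output as a single layer with weights W2·W1, and the second comparison shows what happens once a ReLU is placed between them.

```python
import numpy as np

rng = np.random.default_rng(0)
W1 = rng.standard_normal((4, 3))   # first linear layer
W2 = rng.standard_normal((2, 4))   # second linear layer
x = rng.standard_normal((3, 5))    # 5 example inputs stored as columns

two_layers = W2 @ (W1 @ x)
one_layer = (W2 @ W1) @ x          # a single equivalent linear map
print(np.allclose(two_layers, one_layer))  # True

# With a nonlinearity between the layers, the single-matrix shortcut
# no longer holds in general.
with_relu = W2 @ np.maximum(0, W1 @ x)
print(np.allclose(with_relu, one_layer))
```

The first comparison is just associativity of matrix multiplication, so it holds for any weights; the second depends on the ReLU actually zeroing out some entries.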

Activation functions