Envoy TCP Proxying with Static Configuration

1 Preparation

Preparation consists of:

- Setting up the docker-compose environment

- Fetching the YAML and docker-compose configuration files (from github/ikubernetes)

# apt install docker-compose -y

# docker pull envoyproxy/envoy-alpine:v1.21.5

# mkdir /apps/envoy -p

# cd /apps/envoy

# git clone https://github.com/ikubernetes/servicemesh_in_practise.git

# cd servicemesh_in_practise/

# ls

admin-interface eds-filesystem envoy.echo http-egress LICENSE security tls-front-proxy

cds-eds-filesystem eds-grpc front-proxy http-ingress monitoring-and-tracing tcpproxy

cluster-manager eds-rest http-connection-manager lds-cds-grpc README.md template

# git checkout develop

Branch 'develop' set up to track remote branch 'develop' from 'origin'.

Switched to a new branch 'develop'

# ls

Cluster-Manager Envoy-Basics HTTP-Connection-Manager Monitoring-and-Tracing Security

Dynamic-Configuration Envoy-Mesh LICENSE README.md template

# cd Envoy-Basics/tcp-front-proxy/

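2 docker-compose configuration

The docker-compose file defines three containers and one network:

- Application container webserver01, IP address 172.31.1.11

- Application container webserver02, IP address 172.31.1.12

- The envoy container, IP address 172.31.1.2

- A bridge network named envoymesh (subnet 172.31.1.0/24)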

/apps/envoy/servicemesh_in_practise/Envoy-Basics/tcp-front-proxy# cat docker-compose.yaml

version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.1.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.1.11
        aliases:
        - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.1.12
        aliases:
        - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.1.0/24
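Each static ipv4_address above has to fall inside the subnet declared under ipam, and no two containers may share an address. The following is a minimal sketch of that sanity check using Python's standard ipaddress module. The helper name check_addresses is hypothetical, and the addresses are copied by hand from the compose file rather than parsed from it.

```python
import ipaddress

# Values copied by hand from docker-compose.yaml (not parsed from the file).
SUBNET = ipaddress.ip_network("172.31.1.0/24")
STATIC_IPS = {
    "envoy": "172.31.1.2",
    "webserver01": "172.31.1.11",
    "webserver02": "172.31.1.12",
}


def check_addresses(subnet, ips):
    """Return a list of problems: addresses outside the subnet or used twice."""
    problems = []
    seen = {}  # address -> first service that claimed it
    for name, ip in ips.items():
        addr = ipaddress.ip_address(ip)
        if addr not in subnet:
            problems.append(f"{name}: {ip} is outside {subnet}")
        if addr in seen:
            problems.append(f"{name}: {ip} is already used by {seen[addr]}")
        else:
            seen[addr] = name
    return problems
```

An empty list from check_addresses(SUBNET, STATIC_IPS) means the three addresses are consistent with the envoymesh subnet.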

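3 envoy.yaml configuration

envoy.yaml defines the following:

- It uses static configuration (static_resources)

- The listener listens on 0.0.0.0:80

- Connections accepted by the listener are handed to filter_chains, which contains a single tcp_proxy network filter. This filter works at L4: it does not interpret the application protocol, it proxies the TCP connection to local_cluster

- clusters defines local_cluster, which contains two Endpoints, 172.31.1.11:8080 and 172.31.1.12:8080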

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.tcp_proxy
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy
          stat_prefix: tcp
          cluster: local_cluster

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.1.11, port_value: 8080 }
        - endpoint:
            address:
              socket_address: { address: 172.31.1.12, port_value: 8080 }
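The pieces of this configuration reference each other by name: the cluster field of the tcp_proxy filter must match the name of an entry under clusters. As an illustration only, the sketch below mirrors the relevant fields of envoy.yaml in a hand-written Python dict and follows the chain listener, filter, cluster, endpoints. CONFIG and resolve_upstreams are hypothetical names for this example and are not part of Envoy.

```python
# Hand-written mirror of the fields of envoy.yaml that matter for routing.
CONFIG = {
    "static_resources": {
        "listeners": [{
            "name": "listener_0",
            "address": {"socket_address": {"address": "0.0.0.0", "port_value": 80}},
            "filter_chains": [{
                "filters": [{
                    "name": "envoy.tcp_proxy",
                    "typed_config": {"stat_prefix": "tcp", "cluster": "local_cluster"},
                }],
            }],
        }],
        "clusters": [{
            "name": "local_cluster",
            "lb_policy": "ROUND_ROBIN",
            "load_assignment": {
                "cluster_name": "local_cluster",
                "endpoints": [{
                    "lb_endpoints": [
                        {"endpoint": {"address": {"socket_address": {
                            "address": "172.31.1.11", "port_value": 8080}}}},
                        {"endpoint": {"address": {"socket_address": {
                            "address": "172.31.1.12", "port_value": 8080}}}},
                    ],
                }],
            },
        }],
    },
}


def resolve_upstreams(config, listener_port):
    """Follow listener -> filter -> cluster -> endpoints for one listener port."""
    resources = config["static_resources"]
    clusters = {c["name"]: c for c in resources["clusters"]}
    upstreams = []
    for listener in resources["listeners"]:
        if listener["address"]["socket_address"]["port_value"] != listener_port:
            continue
        for chain in listener["filter_chains"]:
            for flt in chain["filters"]:
                cluster_name = flt["typed_config"].get("cluster")
                if cluster_name is None:
                    continue
                # A KeyError here would mean the filter names an undefined cluster.
                cluster = clusters[cluster_name]
                for locality in cluster["load_assignment"]["endpoints"]:
                    for lb_ep in locality["lb_endpoints"]:
                        sa = lb_ep["endpoint"]["address"]["socket_address"]
                        upstreams.append((sa["address"], sa["port_value"]))
    return upstreams
```

Calling resolve_upstreams(CONFIG, 80) yields the two backend addresses, which is the same mapping the listener on port 80 applies to incoming connections.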

4 Deploy and test access

After starting docker-compose, access 172.31.1.2 from a client to observe the forwarding behavior.

# docker-compose up

## Run on the client

# curl 172.31.1.2

iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver01, ServerIP: 172.31.1.11!

# curl 172.31.1.2

iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver02, ServerIP: 172.31.1.12!

# curl 172.31.1.2

iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver02, ServerIP: 172.31.1.12!

# curl 172.31.1.2

iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver01, ServerIP: 172.31.1.11!

## The request records are visible in the docker-compose output on the server

envoy_1 | [2022-09-23 06:36:33.256][1][info][main] [source/server/server.cc:868] starting main dispatch loop

webserver01_1 | 172.31.1.2 - - [23/Sep/2022 06:38:19] "GET / HTTP/1.1" 200 -

webserver02_1 | 172.31.1.2 - - [23/Sep/2022 06:38:20] "GET / HTTP/1.1" 200 -

webserver02_1 | 172.31.1.2 - - [23/Sep/2022 06:38:21] "GET / HTTP/1.1" 200 -

webserver01_1 | 172.31.1.2 - - [23/Sep/2022 06:38:21] "GET / HTTP/1.1" 200 -
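To see the distribution across backends without reading the responses by eye, the replies can be tallied by ServerName. The sketch below assumes the demoapp response format shown in the curl output above. The names tally_servers and fetch are hypothetical helpers, and fetch only works from a host that can reach 172.31.1.2 while the containers are running.

```python
import re
import urllib.request
from collections import Counter

# Matches the "ServerName: webserver01" part of a demoapp response line.
SERVER_NAME = re.compile(r"ServerName: ([^,\s]+)")

# The four responses shown in the curl session above.
SAMPLE = [
    "iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver01, ServerIP: 172.31.1.11!",
    "iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver02, ServerIP: 172.31.1.12!",
    "iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver02, ServerIP: 172.31.1.12!",
    "iKubernetes demoapp v1.0 !! ClientIP: 172.31.1.2, ServerName: webserver01, ServerIP: 172.31.1.11!",
]


def tally_servers(lines):
    """Count how many responses came from each backend."""
    counts = Counter()
    for line in lines:
        match = SERVER_NAME.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def fetch(url="http://172.31.1.2/", n=4, timeout=2.0):
    """Send n requests, each on a new connection, and return the response bodies."""
    bodies = []
    for _ in range(n):
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            bodies.append(resp.read().decode("utf-8", errors="replace"))
    return bodies
```

With the environment up, print(tally_servers(fetch(n=20))) gives the per-backend counts. tally_servers(SAMPLE) can be used to try the parsing offline.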

## Clean up the environment after testing

# docker-compose down

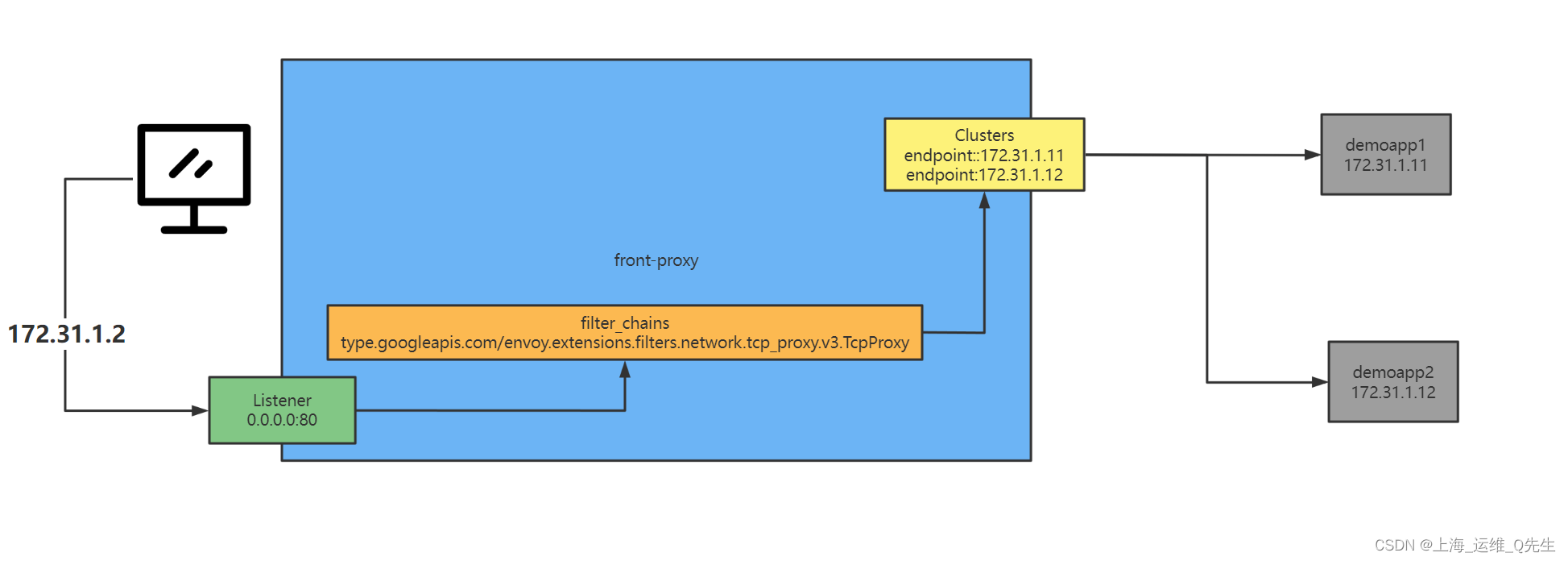

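5 Request flow walkthrough

- When a client accesses 172.31.1.2:80 (the envoy container in docker-compose), the connection is accepted by the Listener on port 80 of front-proxy (defined by socket_address in the listener)

- The tcp_proxy filter defined in filter_chains handles the connection at the transport layer. It does not decode the application payload; it forwards the TCP byte stream to local_cluster, the cluster named in its typed_config

- The Cluster uses the round-robin policy (lb_policy: ROUND_ROBIN) to select a backend Endpoint (defined by the socket_address fields under clusters) for each new connection.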
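The curl output in section 4 shows the order webserver01, webserver02, webserver02, webserver01 rather than a strict alternation. One plausible explanation is that Envoy keeps load-balancer state per worker thread, so each worker runs its own round-robin sequence. The simulation below is a sketch of that idea only: the class WorkerLB, the worker assignment, and the starting offsets are all hypothetical and are not taken from Envoy's code or from the logs above.

```python
ENDPOINTS = ["172.31.1.11:8080", "172.31.1.12:8080"]


class WorkerLB:
    """Round-robin picker with its own position, standing in for one worker thread."""

    def __init__(self, endpoints, start=0):
        self.endpoints = list(endpoints)
        self.index = start % len(self.endpoints)

    def pick(self):
        endpoint = self.endpoints[self.index]
        self.index = (self.index + 1) % len(self.endpoints)
        return endpoint


def simulate(assignments, starts):
    """assignments[i] is the worker that accepts connection i (hypothetical).

    starts[w] is the initial round-robin position of worker w (hypothetical).
    """
    workers = [WorkerLB(ENDPOINTS, start) for start in starts]
    return [workers[w].pick() for w in assignments]


# A single worker alternates strictly between the two endpoints.
single = simulate([0, 0, 0, 0], starts=[0])

# Two workers with different starting positions, taking connections in turn,
# can produce the 01, 02, 02, 01 pattern seen in the test.
mixed = simulate([0, 1, 0, 1], starts=[0, 1])
```

Each worker on its own still alternates between the endpoints; only the interleaving seen by the client changes. Over many connections the two backends receive a similar share either way.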