1. Envoy HTTP Ingress Proxy Demo

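1.1 Docker Compose configuration

The docker-compose file defines:

- A bridge network, 172.31.3.0/24
- The envoy service at 172.31.3.2, with the network alias ingress
- webserver01, which shares the envoy service's network namespace and listens on 127.0.0.1:8080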

version: '3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    environment:
    - ENVOY_UID=0
    - ENVOY_GID=0
    networks:
      envoymesh:
        ipv4_address: 172.31.3.2
        aliases:
        - ingress

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:envoy"
    depends_on:
    - envoy

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.3.0/24

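1.2 envoy.yaml configuration

The YAML file configures:

- A listener on 0.0.0.0:80
- A filter chain containing the http_connection_manager filter
- http_connection_manager is an L4 (network) filter that understands the L7 protocols HTTP, HTTPS, and gRPC
- Its codec type (codec_type) is AUTO, so the codec is selected automatically
- Once the L4 http_connection_manager is enabled, the L7 filters it supports can be declared, here router; route_config only takes effect when router is enabled
- The virtual host is named web_service_1. Because its domains entry is "*", every request that reaches the listener is handled by web_service_1
- All requests that reach this virtual host are routed to local_cluster (defined under clusters)
- The single endpoint of local_cluster is 127.0.0.1:8080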

# cat envoy.yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 127.0.0.1, port_value: 8080 }
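The http_filters entry in this demo names the router filter without a typed_config. The test output in this section shows Envoy 1.21.5 accepting that form, but newer Envoy releases may expect the filter type to be spelled out. A hedged sketch of the explicit form, assuming the v3 API; only the http_filters part of the HttpConnectionManager config is shown:

```yaml
# Fragment of the HttpConnectionManager typed_config (not a complete envoy.yaml).
http_filters:
- name: envoy.filters.http.router
  typed_config:
    # Explicit type URL of the v3 router filter.
    "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
```

The rest of the listener and cluster configuration stays unchanged.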

1.3 Run the environment and test

## Start the environment
# docker-compose up

## Test access
# curl 172.31.3.2
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: 6e1fd48db4dc, ServerIP: 172.31.3.2!

## The ServerName in the response is the ID of the envoy container, whose network namespace webserver01 shares
# docker ps |grep 6e1fd48db4dc
6e1fd48db4dc envoyproxy/envoy-alpine:v1.21.5 "/docker-entrypoint.…" About a minute ago Up About a minute 10000/tcp httpingress_envoy_1

# curl -vv 172.31.3.2
* Rebuilt URL to: 172.31.3.2/
* Trying 172.31.3.2...
* TCP_NODELAY set
* Connected to 172.31.3.2 (172.31.3.2) port 80 (#0)
> GET / HTTP/1.1
> Host: 172.31.3.2
> User-Agent: curl/7.58.0
> Accept: */*
>
< HTTP/1.1 200 OK
< content-type: text/html; charset=utf-8
< content-length: 97
< server: envoy
< date: Fri, 23 Sep 2022 08:44:39 GMT
< x-envoy-upstream-service-time: 1
<
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: 6e1fd48db4dc, ServerIP: 172.31.3.2!
* Connection #0 to host 172.31.3.2 left intact

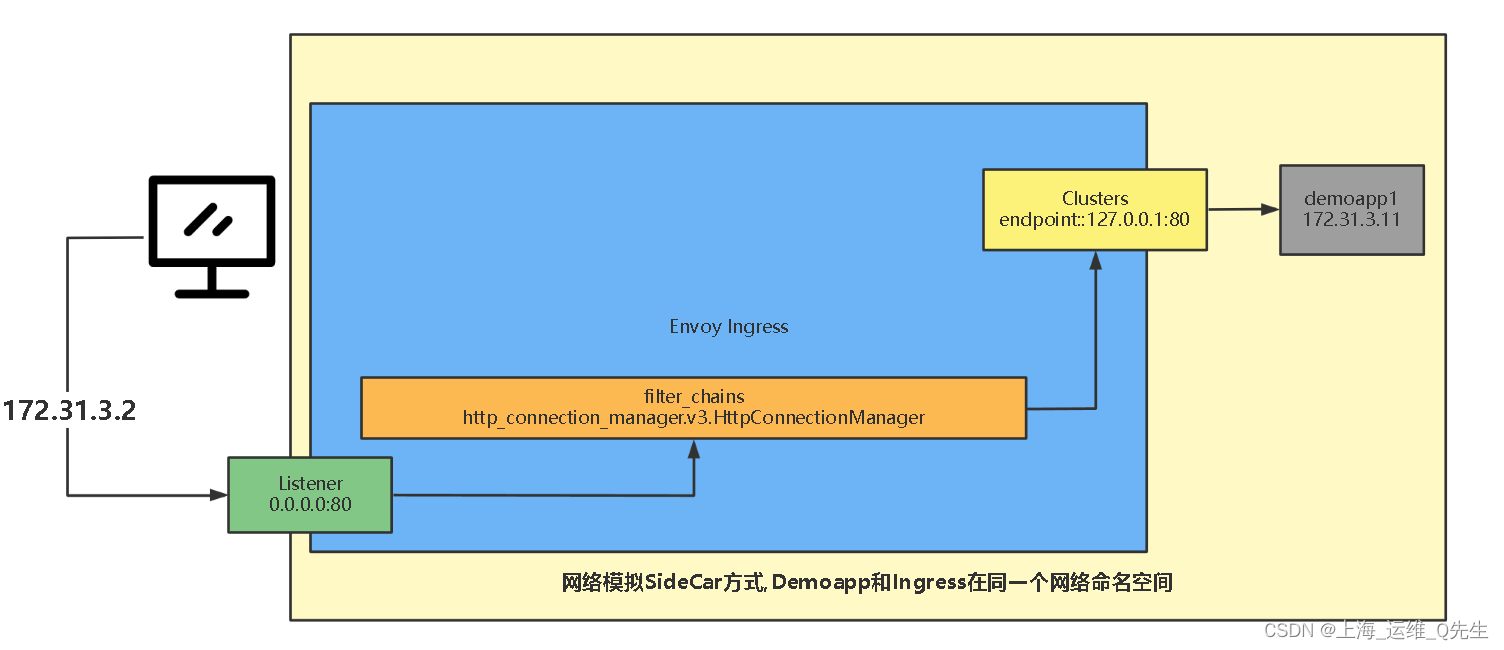

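1.4 Request flow

- A client requests 172.31.3.2:80, and the request arrives at the ingress Envoy's listener on port 80
- The http_connection_manager filter in the listener's filter chain uses the envoy.filters.http.router filter to match the request to the virtual host web_service_1
- Because this is the only virtual host and its single route matches the prefix "/", every request is routed to the cluster local_cluster
- local_cluster uses round-robin load balancing, but it has only one endpoint, 127.0.0.1:8080, which answers the request
- In docker-compose, webserver01 listens on 127.0.0.1:8080
- Because webserver01 shares the envoy service's network namespace (network_mode: "service:envoy"), Envoy can forward requests directly to 127.0.0.1:8080, where webserver01 answers the client request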

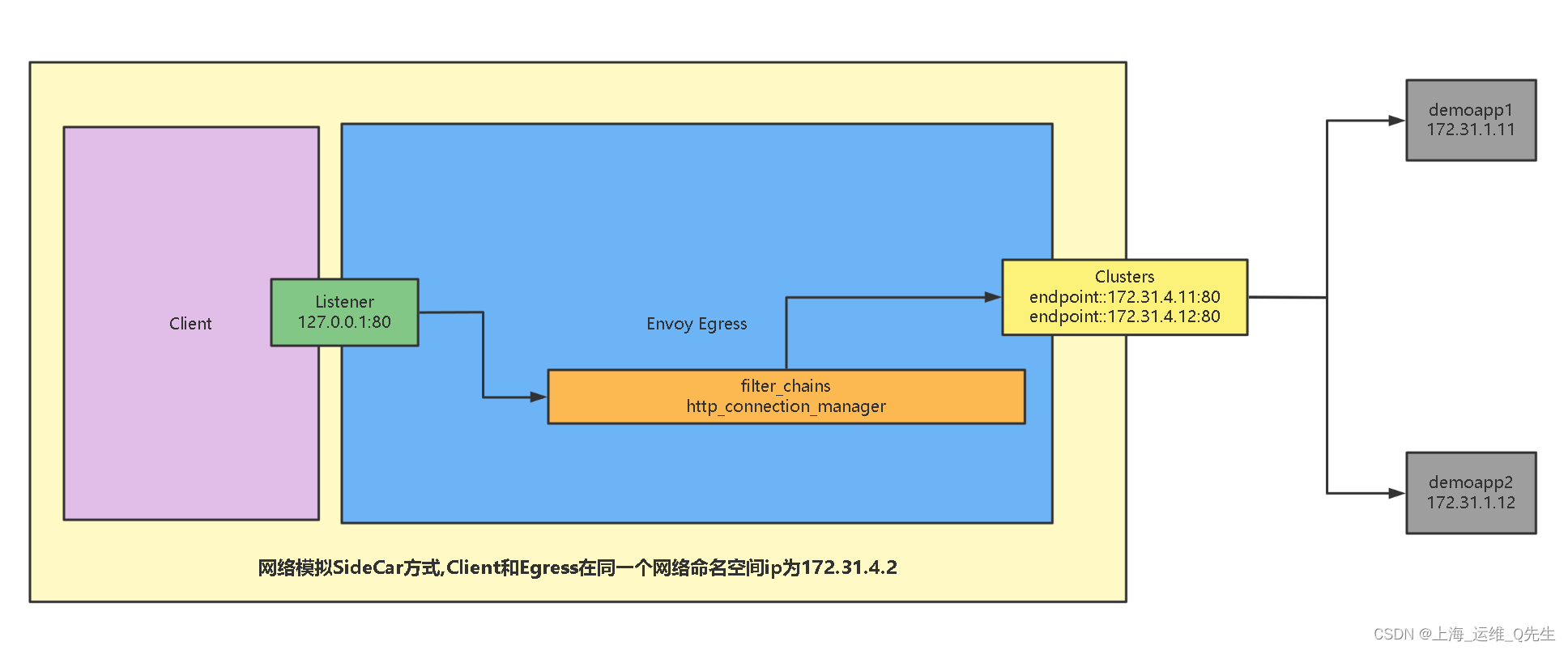

2. Envoy HTTP Egress Proxy Demo

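2.1 Docker Compose configuration

The docker-compose file defines:

- Four services: client, envoy, webserver01, and webserver02
- client and envoy share one network namespace (and therefore one IP address), which simulates the sidecar pattern
- webserver01 and webserver02 sit on the same subnet at .11 and .12, simulating backend servers in the same cluster or in a different one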

version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.4.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02

  client:
    image: ikubernetes/admin-toolbox:v1.0
    network_mode: "service:envoy"
    depends_on:
    - envoy

  webserver01:
    image: ikubernetes/demoapp:v1.0
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.4.11
        aliases:
        - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.4.12
        aliases:
        - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.4.0/24

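2.2 envoy.yaml configuration

The YAML file configures:

- A listener on the local address 127.0.0.1:80
- A filter chain whose http_connection_manager filter uses the router HTTP filter to route every request to the web_cluster cluster
- Two endpoints in web_cluster: 172.31.4.11:80 and 172.31.4.12:80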

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 127.0.0.1, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: egress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: web_cluster }
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: web_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: web_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.4.11, port_value: 80 }
        - endpoint:
            address:
              socket_address: { address: 172.31.4.12, port_value: 80 }

2.3 Run the environment and test

# docker-compose up

## Enter the client container to test
root@k8s-node-1:/apps/envoy/servicemesh_in_practise/Envoy-Basics/http-egress# docker exec -it bc629f1a6022 bash
[root@c2752b7f0101 /]$ ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
34: eth0@if35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:1f:04:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.31.4.2/24 brd 172.31.4.255 scope global eth0
       valid_lft forever preferred_lft forever
[root@c2752b7f0101 /]$ ss -tnpl
State   Recv-Q  Send-Q  Local Address:Port   Peer Address:Port  Process
LISTEN  0       128     127.0.0.1:80         0.0.0.0:*
LISTEN  0       128     127.0.0.1:80         0.0.0.0:*
LISTEN  0       128     127.0.0.1:80         0.0.0.0:*
LISTEN  0       128     127.0.0.1:80         0.0.0.0:*
LISTEN  0       128     127.0.0.11:34193     0.0.0.0:*
[root@c2752b7f0101 /]$ curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver02, ServerIP: 172.31.4.12!
[root@c2752b7f0101 /]$ curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver02, ServerIP: 172.31.4.12!
[root@c2752b7f0101 /]$ curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver02, ServerIP: 172.31.4.12!
[root@c2752b7f0101 /]$ curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver01, ServerIP: 172.31.4.11!
[root@c2752b7f0101 /]$ curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver02, ServerIP: 172.31.4.12!
[root@c2752b7f0101 /]$ curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver01, ServerIP: 172.31.4.11!
[root@c2752b7f0101 /]$ curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver02, ServerIP: 172.31.4.12!
[root@c2752b7f0101 /]$ curl 127.0.0.1
iKubernetes demoapp v1.0 !! ClientIP: 172.31.4.2, ServerName: webserver01, ServerIP: 172.31.4.11!

## The docker-compose output shows the same requests
webserver02_1 | 172.31.4.2 - - [24/Sep/2022 03:02:11] "GET / HTTP/1.1" 200 -
webserver02_1 | 172.31.4.2 - - [24/Sep/2022 03:02:12] "GET / HTTP/1.1" 200 -
webserver02_1 | 172.31.4.2 - - [24/Sep/2022 03:02:13] "GET / HTTP/1.1" 200 -
webserver01_1 | 172.31.4.2 - - [24/Sep/2022 03:02:15] "GET / HTTP/1.1" 200 -
webserver02_1 | 172.31.4.2 - - [24/Sep/2022 03:02:17] "GET / HTTP/1.1" 200 -
webserver01_1 | 172.31.4.2 - - [24/Sep/2022 03:02:17] "GET / HTTP/1.1" 200 -
webserver02_1 | 172.31.4.2 - - [24/Sep/2022 03:02:18] "GET / HTTP/1.1" 200 -
webserver01_1 | 172.31.4.2 - - [24/Sep/2022 03:02:19] "GET / HTTP/1.1" 200 -

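2.4 Request flow

- Because client and Envoy share one network namespace, a request sent from inside the client container to 127.0.0.1:80 reaches Envoy's listener on 127.0.0.1:80
- Envoy's http_connection_manager, through the router filter, routes the request to the web_cluster cluster
- The cluster distributes requests across its configured endpoints, the external backend servers, using round-robin load balancing
- The backend servers can be other pods in the same cluster as the client/Envoy pod, or servers outside it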

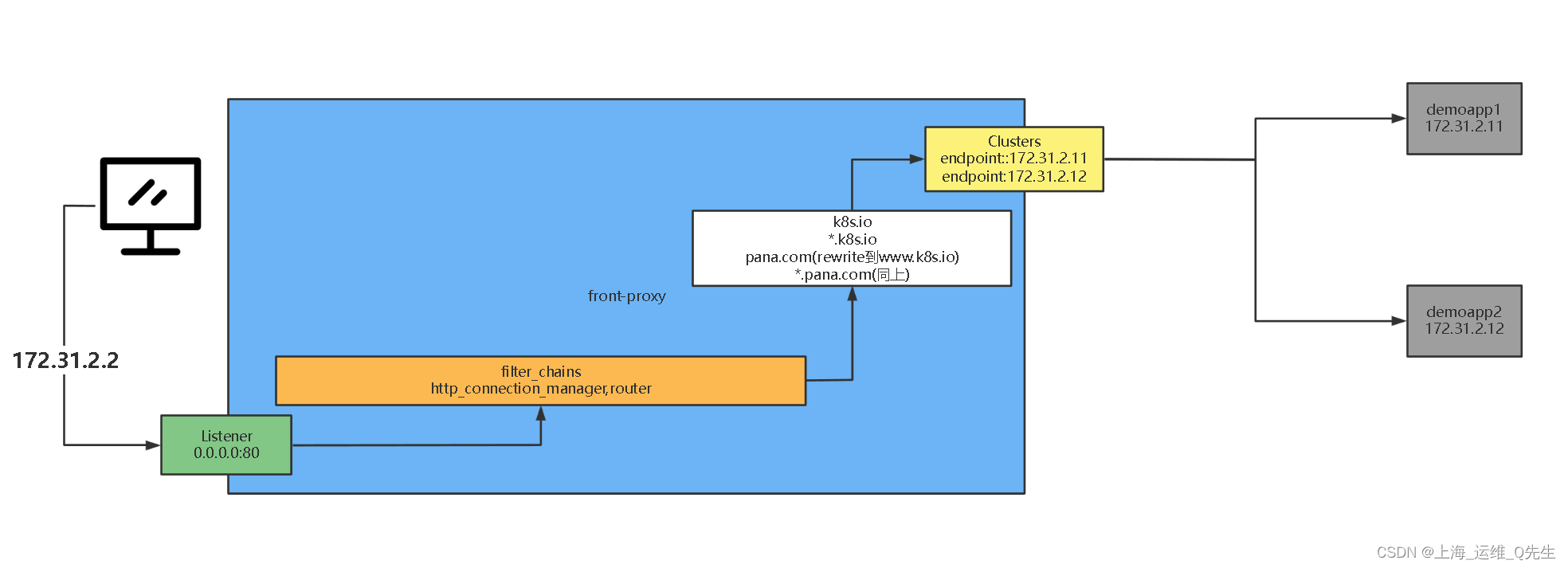
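The egress demo addresses its backends by fixed IP. Since the compose file also gives each backend a network alias, a variant could resolve the backends by name instead. The fragment below is only a sketch under that assumption: it swaps the cluster type to STRICT_DNS, which is not what the demo uses, and relies on Docker's embedded DNS resolving the aliases webserver01 and webserver02 inside the envoy container.

```yaml
# Hypothetical variant of the clusters section; the demo itself uses type: STATIC with IPs.
clusters:
- name: web_cluster
  connect_timeout: 0.25s
  type: STRICT_DNS          # resolve endpoint hostnames through DNS
  lb_policy: ROUND_ROBIN
  load_assignment:
    cluster_name: web_cluster
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address: { address: webserver01, port_value: 80 }
      - endpoint:
          address:
            socket_address: { address: webserver02, port_value: 80 }
```

With this form the ipv4_address entries of the backends no longer need to be repeated in envoy.yaml.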

3. Envoy HTTP Front Proxy Demo

3.1 Docker Compose configuration

The docker-compose file defines:

- envoy at 172.31.2.2
- webserver01 at 172.31.2.11:8080
- webserver02 at 172.31.2.12:8080

version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
    - ./envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.2.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.2.11
        aliases:
        - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.2.12
        aliases:
        - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.2.0/24

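3.2 envoy.yaml configuration

The YAML file configures:

- A listener on 0.0.0.0:80
- A filter chain in which the L4 http_connection_manager filter uses the L7 router filter to provide virtual hosting
- Two virtual hosts, web_service_1 and web_service_2
- web_service_1 matches "*.k8s.io" and "k8s.io" and routes all requests for those domains to local_cluster
- web_service_2 matches "*.pana.com" and "pana.com" and redirects those requests to www.k8s.io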

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*.k8s.io", "k8s.io"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
            - name: web_service_2
              domains: ["*.pana.com","pana.com"]
              routes:
              - match: { prefix: "/" }
                redirect:
                  host_redirect: "www.k8s.io"
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 172.31.2.11, port_value: 8080 }
        - endpoint:
            address:
              socket_address: { address: 172.31.2.12, port_value: 8080 }

3.3 Run and test

- Requests for *.k8s.io and k8s.io are handed to local_cluster and forwarded to 172.31.2.11 or 172.31.2.12
- Requests for *.pana.com and pana.com receive a 301 redirect to www.k8s.io. When the client follows the redirect, the new request matches *.k8s.io and is forwarded by local_cluster to 172.31.2.11 or 172.31.2.12

# docker-compose up

## Add the domain names to the hosts file
# echo "172.31.2.2 www.k8s.io k8s.io www.pana.com pana.com web.k8s.io" >> /etc/hosts

root@k8s-node-1:~# curl www.k8s.io
iKubernetes demoapp v1.0 !! ClientIP: 172.31.2.2, ServerName: webserver01, ServerIP: 172.31.2.11!
root@k8s-node-1:~# curl k8s.io
iKubernetes demoapp v1.0 !! ClientIP: 172.31.2.2, ServerName: webserver01, ServerIP: 172.31.2.11!
root@k8s-node-1:~# curl web.k8s.io
iKubernetes demoapp v1.0 !! ClientIP: 172.31.2.2, ServerName: webserver02, ServerIP: 172.31.2.12!

root@k8s-node-1:~# curl -I www.pana.com
HTTP/1.1 301 Moved Permanently
location: http://www.k8s.io/
date: Sat, 24 Sep 2022 05:03:28 GMT
server: envoy
transfer-encoding: chunked

root@k8s-node-1:~# curl -I pana.com
HTTP/1.1 301 Moved Permanently
location: http://www.k8s.io/
date: Sat, 24 Sep 2022 05:03:36 GMT
server: envoy
transfer-encoding: chunked

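3.4 Request flow

- A client requests 172.31.2.2:80 (the envoy container in docker-compose), and the request arrives at the front-proxy listener on port 80 (defined by socket_address in the listener)
- The filter chain invokes the L4 http_connection_manager filter, which in turn invokes the L7 router filter to perform L7 forwarding
- Requests for *.k8s.io and k8s.io are handed to local_cluster and forwarded to 172.31.2.11 or 172.31.2.12
- Requests for *.pana.com and pana.com are redirected to www.k8s.io. The follow-up request matches *.k8s.io and is forwarded by local_cluster to 172.31.2.11 or 172.31.2.12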
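The two virtual hosts in this demo cover only the k8s.io and pana.com names. A request whose Host header matches neither, for example one sent straight to the IP address, finds no virtual host and Envoy answers it with a 404. If a custom fallback is wanted, a catch-all virtual host can be added. The fragment below is a sketch, not part of the original demo; the name default_fallback and the response body are made up for illustration.

```yaml
# Fragment of route_config; the first two virtual hosts are the ones from the demo.
virtual_hosts:
- name: web_service_1
  domains: ["*.k8s.io", "k8s.io"]
  routes:
  - match: { prefix: "/" }
    route: { cluster: local_cluster }
- name: web_service_2
  domains: ["*.pana.com", "pana.com"]
  routes:
  - match: { prefix: "/" }
    redirect:
      host_redirect: "www.k8s.io"
# Hypothetical catch-all for any other Host header.
- name: default_fallback
  domains: ["*"]
  routes:
  - match: { prefix: "/" }
    direct_response:
      status: 404
      body:
        inline_string: "unknown host\n"
```

More specific domain entries take precedence over the "*" wildcard, so the order of the virtual hosts does not change which one is selected.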

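4. Static and dynamic resources

- Static resources are configured directly in the configuration file: static_resources holds explicit values for listeners, clusters, and secrets.
  - listeners configures the list of purely static listeners; clusters defines the available clusters and the endpoints of each; the optional secrets defines TLS material such as digital certificates
  - In practice, admin and static_resources together are enough for a minimal configuration, and admin itself can be omitted

{ "listeners": [], "clusters": [], "secrets": [] }

- Dynamic resources are configurations that Envoy discovers through the xDS protocol. They are stored on a host called the management server and exposed through the xDS APIs.

{
  "lds_config": "{...}",
  "cds_config": "{...}",
  "ads_config": "{...}"
}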
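As a rough illustration of the dynamic form, the sketch below has Envoy 1.21 load its listeners and clusters from files that it watches, which is the simplest xDS source and needs no management server. The node values and file paths are assumptions chosen for the example, not taken from the demos above.

```yaml
# Minimal bootstrap sketch using filesystem-based LDS and CDS (hypothetical names and paths).
node:
  id: envoy_front_proxy      # dynamic resources need a node id and cluster
  cluster: demo_cluster

dynamic_resources:
  lds_config:
    path: /etc/envoy/conf.d/lds.yaml   # file containing the listener resources
  cds_config:
    path: /etc/envoy/conf.d/cds.yaml   # file containing the cluster resources
```

With a real management server, lds_config and cds_config would instead point at a gRPC or REST API source, optionally aggregated through ads_config.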

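5. HTTP L7 routing basics

The routes configured under route_config.virtual_hosts.routes direct downstream client requests to a server in the appropriate upstream cluster.

- A route is selected by matching the request URL against its match field. The match field expresses the pattern through exactly one of prefix, path (exact path), or safe_regex (regular expression).
- A matched request is handled by exactly one of route (routing rule), redirect (redirect rule), or direct_response (direct response).
- The target of a route must be exactly one of cluster (upstream cluster name), cluster_header (the target cluster is read from the request header named by cluster_header), or weighted_clusters (several target clusters, each with a weight).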

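routes:
- name:            # name of this route
  match:           # match conditions; routes are tried in order and the first one whose conditions match is used.
                   # Envoy has no implicit default route: a catch-all entry such as prefix: "/" placed last serves as the default.
    prefix: ...    # request URL prefix
  route:           # routing action
    cluster:       # target upstream cluster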
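To make the three match types and the three actions concrete, here is a hedged sketch of a routes list for the v3 API as shipped in Envoy 1.21. The paths and response body are invented for illustration; local_cluster is the cluster name used in the earlier demos.

```yaml
routes:
# Exact path match, answered by Envoy itself without contacting an upstream.
- match:
    path: "/healthz"
  direct_response:
    status: 200
    body:
      inline_string: "ok\n"
# Regular-expression match; the regex must match the whole path.
- match:
    safe_regex:
      google_re2: {}
      regex: "/v[0-9]+/users/.*"
  route:
    cluster: local_cluster
# Prefix match that redirects the client to another path.
- match:
    prefix: "/old"
  redirect:
    path_redirect: "/new"
# Catch-all placed last so that it acts as the default route.
- match:
    prefix: "/"
  route:
    cluster: local_cluster
```

Because the first matching route wins, the more specific entries have to come before the catch-all.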

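match

- URL matching uses exactly one of prefix, path, or regex
  - prefix: matches the leading part of the URL path
  - path: matches the URL path exactly
  - regex: matches the URL path against a regular expression; superseded by safe_regex
- Matching can additionally use headers (request headers) and query_parameters (query parameters)
- A matched request is handled in one of three ways
  - redirect: redirect the client
  - direct_response: respond directly
  - route: forward to a cluster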
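Header and query-parameter conditions are combined with the URL match, and all of them have to hold for the route to apply. The fragment below is a sketch for the v3 API; the header name x-canary, the parameter debug, and the cluster canary_cluster are hypothetical.

```yaml
routes:
- match:
    prefix: "/api"
    headers:
    - name: x-canary          # the request must carry this header
      present_match: true
    query_parameters:
    - name: debug             # the request must carry ?debug=1
      string_match:
        exact: "1"
  route:
    cluster: canary_cluster
```

A request such as /api/items?debug=1 with an x-canary header would take this route, while the same request without the header would fall through to later routes.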

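route

- The routing target is defined by exactly one of cluster, weighted_clusters, or cluster_header
- During forwarding, prefix_rewrite and host_rewrite (named host_rewrite_literal in the v3 API) can rewrite the URL path and the Host header
- Traffic-management options can be added as well, for example timeout, retry_policy, cors, request_mirror_policy (request mirroring; request_mirror_policies in the v3 API), and rate_limits (rate limiting).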
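The route options listed here can be combined in a single route entry. The following is a sketch for the v3 API in Envoy 1.21; the cluster names web_v1 and web_v2, the host name, and the numeric values are assumptions for illustration only.

```yaml
routes:
- match:
    prefix: "/api/"
  route:
    # Split traffic 90/10 between two upstream clusters; the weights add up to 100.
    weighted_clusters:
      clusters:
      - name: web_v1
        weight: 90
      - name: web_v2
        weight: 10
    prefix_rewrite: "/"                       # /api/items is forwarded as /items
    host_rewrite_literal: "backend.internal"  # replaces the Host header sent upstream
    timeout: 3s                               # upper bound for the whole upstream request
    retry_policy:
      retry_on: "5xx"
      num_retries: 2
```

Both clusters would still have to be defined under clusters, in the same way as local_cluster in the demos.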