1. Envoy Dynamic Configuration and Configuration Sources

1.1 Envoy Dynamic Configuration

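- The xDS API gives Envoy a mechanism for configuring resources dynamically. It is also called the Data Plane API and follows the UDPA (Universal Data Plane API) specification.

- Envoy supports three mechanisms for dynamically discovering configuration. The discovery services and their APIs are collectively known as the xDS API.

  - File-based discovery: specify a filesystem path to watch.

  - Discovery by querying one or more management servers: Envoy sends a DiscoveryRequest message and expects the server to answer with a DiscoveryResponse message.

    - gRPC service: open a gRPC stream (a long-lived connection).

    - REST service: poll a REST-JSON URL at a fixed interval.

- The v3 xDS API supports the following resource types: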

- envoy.config.listener.v3.Listener

- envoy.config.route.v3.RouteConfiguration

- envoy.config.route.v3.ScopedRouteConfiguration

- envoy.config.route.v3.VirtualHost

- envoy.config.cluster.v3.Cluster

- envoy.config.endpoint.v3.ClusterLoadAssignment

- envoy.extensions.transport_sockets.tls.v3.Secret

- envoy.service.runtime.v3.Runtime

1.2 xDS API Overview

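The xDS APIs that Envoy consumes are implemented by a back-end management server. They include LDS, CDS, RDS, SRDS (Scoped Route), VHDS, EDS, SDS, and RTDS.

- All of these APIs are eventually consistent and do not interact with one another.

- Some advanced operations must be sequenced to avoid dropping traffic, so when a single management server serves several of these APIs, the Aggregated Discovery Service (ADS) API is needed as well.

ADS lets all the other APIs be multiplexed over a single bidirectional gRPC stream from a single management server, which makes deterministic ordering of operations possible.

- Each xDS API, ADS included, also supports an incremental (delta) transport mechanism.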
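To make the ADS description concrete, the following is a minimal bootstrap sketch in which LDS and CDS are both delivered over one ADS gRPC stream. The cluster name `xds_cluster`, the host `xds-server`, the port 18000, and the node values are placeholders chosen for illustration and do not come from the article.

```yaml
# Sketch only: LDS and CDS both arrive over a single ADS stream.
# xds_cluster, xds-server and 18000 are hypothetical placeholders.
node:
  id: envoy_front_proxy
  cluster: demo_cluster

dynamic_resources:
  ads_config:
    api_type: GRPC              # DELTA_GRPC selects the incremental variant
    transport_api_version: V3
    grpc_services:
    - envoy_grpc:
        cluster_name: xds_cluster
  lds_config:
    resource_api_version: V3
    ads: {}                     # fetch listeners over the ADS stream
  cds_config:
    resource_api_version: V3
    ads: {}                     # fetch clusters over the ADS stream

static_resources:
  clusters:
  - name: xds_cluster           # the management server itself is a static cluster
    connect_timeout: 1s
    type: STRICT_DNS
    typed_extension_protocol_options:
      envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
        "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
        explicit_http_config:
          http2_protocol_options: {}   # gRPC needs HTTP/2 to the management server
    load_assignment:
      cluster_name: xds_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: xds-server, port_value: 18000 }
```

Because every resource type shares one stream, the management server can decide the order in which updates are pushed, for example clusters before the listeners that route to them.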

1.3 Bootstrap node Configuration

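A single management server instance may have to answer resource discovery requests from many different Envoy instances.

- The configuration held on the management server therefore has to be tailored to each Envoy instance.

- When an Envoy instance requests configuration, it reports information about itself in the request message:

  - id, cluster, metadata, locality, and so on.

  - These values are defined in the Bootstrap configuration file.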

node:
  id:                        # required
  cluster:                   # required
  metadata: {...}            # optional
  locality:                  # optional
    region:
    zone:
    sub_zone:
  user_agent_name:           # free-form string identifying the entity that requests configuration
  user_agent_version:
  user_agent_build_version:
    version:
    metadata: {...}
  extensions: []
  client_features: []
  listening_addresses: []
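As an illustration of the skeleton above, here is a filled-in `node` block. Every value is made up for the example; only the field names come from the listing.

```yaml
# Sketch with invented values: a management server could use these fields
# to decide which resources to return to this particular instance.
node:
  id: front-proxy-01          # unique per Envoy instance
  cluster: web-frontend       # logical group the instance belongs to
  metadata:
    role: edge                # opaque key/value data passed to the server
  locality:
    region: region-a
    zone: zone-1
```

The `id` and `cluster` values can also be supplied on the command line with `--service-node` and `--service-cluster` instead of in the bootstrap file.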

1.4 Basic API Workflow

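For a typical HTTP routing scenario, the core resource types that the xDS management server has to supply to its clients (Envoy instances) are Listener, RouteConfiguration, Cluster, and ClusterLoadAssignment (endpoints). VirtualHost can also be delivered as a separate resource.

- Each Listener resource can point to a RouteConfiguration resource, which can point to one or more Cluster resources, and each Cluster resource can point to a ClusterLoadAssignment resource.

At startup an Envoy instance requests all Listener and Cluster resources, and then fetches the RouteConfiguration and ClusterLoadAssignment resources that those Listeners and Clusters depend on.

- Listener and Cluster resources are the two roots of the client's configuration and can be loaded in parallel.

A non-proxy client such as gRPC can instead request only the Listener resources it is interested in at startup, then load the RouteConfiguration resources those Listeners reference, then the Cluster resources those RouteConfigurations point to, and finally the ClusterLoadAssignment resources those Clusters depend on.

- In that case the Listener resource is the single root of the client's entire configuration.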
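The reference chain described above (Listener to RouteConfiguration to Cluster to ClusterLoadAssignment) can be sketched with file-based sources as three fragments. The file `/etc/envoy/conf.d/rds.yaml` is a hypothetical path used only for this illustration; the other names mirror the examples later in this article.

```yaml
# Fragment 1 (inside a Listener's HttpConnectionManager typed_config):
# point at a RouteConfiguration by name instead of inlining route_config.
rds:
  route_config_name: local_route
  config_source:
    resource_api_version: V3
    path: /etc/envoy/conf.d/rds.yaml     # hypothetical file
---
# Fragment 2 (contents of the hypothetical rds.yaml):
# the RouteConfiguration names the Cluster that receives the traffic.
resources:
- "@type": type.googleapis.com/envoy.config.route.v3.RouteConfiguration
  name: local_route
  virtual_hosts:
  - name: local_service
    domains: ["*"]
    routes:
    - match: { prefix: "/" }
      route: { cluster: webcluster }
---
# Fragment 3 (a Cluster definition):
# the Cluster in turn points at its ClusterLoadAssignment through EDS.
name: webcluster
connect_timeout: 1s
type: EDS
eds_cluster_config:
  service_name: webcluster
  eds_config:
    resource_api_version: V3
    path: /etc/envoy/eds.conf.d/eds.yaml
```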

1.5 Configuration Sources for Envoy Resources (ConfigSource)

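A configuration source (ConfigSource) specifies where the configuration data for a resource comes from. It supplies the data for Listener, Cluster, Route, Endpoint, Secret, VirtualHost, and other resources.

At present a ConfigSource must be exactly one of path, api_config_source, or ads.

With api_config_source or ads, the data comes from an xDS API server, that is, the management server.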
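For comparison, here is the same `eds_config` written with each kind of source. This is a sketch: `xds_cluster` is a placeholder for a statically defined cluster that points at the management server, and the 10s polling interval is an arbitrary example value.

```yaml
# 1) path: watch a file on the local filesystem
eds_config:
  path: /etc/envoy/eds.conf.d/eds.yaml
---
# 2a) api_config_source over gRPC: a dedicated long-lived stream
eds_config:
  resource_api_version: V3
  api_config_source:
    api_type: GRPC
    transport_api_version: V3
    grpc_services:
    - envoy_grpc:
        cluster_name: xds_cluster    # placeholder cluster name
---
# 2b) api_config_source over REST: periodic polling
eds_config:
  resource_api_version: V3
  api_config_source:
    api_type: REST
    transport_api_version: V3
    cluster_names: [xds_cluster]     # placeholder cluster name
    refresh_delay: 10s               # polling interval, example value
---
# 3) ads: reuse the stream declared under dynamic_resources.ads_config
eds_config:
  resource_api_version: V3
  ads: {}
```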

2. Filesystem-Based Subscription

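The simplest way to give Envoy dynamic configuration is to place it at the file path named explicitly in the ConfigSource.

- Envoy uses inotify to watch the file for changes and, on update, parses the DiscoveryResponse message stored in the file.

- Binary protobuf, JSON, YAML, and proto text are all supported formats for the DiscoveryResponse.

- Apart from statistics counters and logs, filesystem subscriptions have no mechanism for ACKing or NACKing an update.

- If a configuration update is rejected, the last valid configuration remains in effect.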

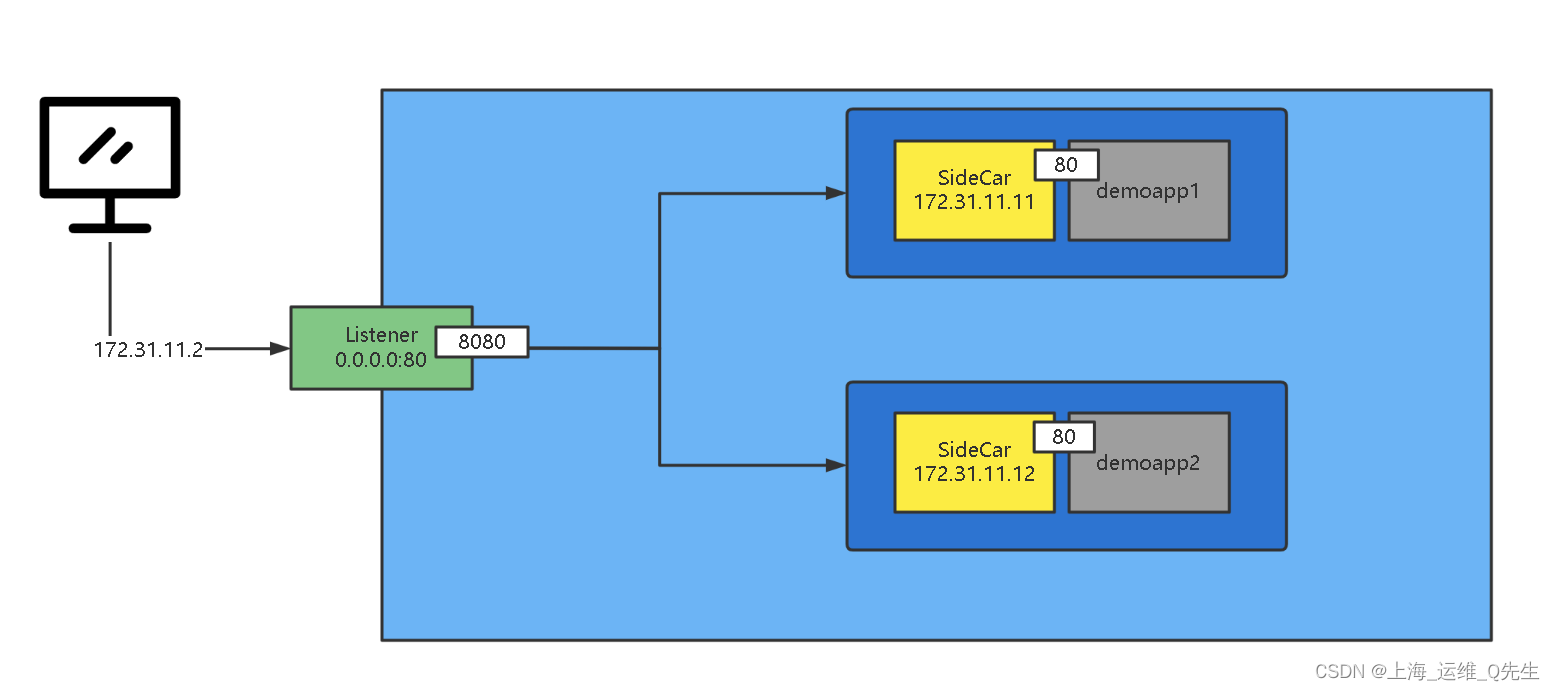

2.1 Dynamic EDS from the Filesystem

2.1.1 docker-compose.yaml

Five services:

- envoy: the front proxy, at 172.31.11.2

- webserver01: the first back-end service

- webserver01-sidecar: the sidecar proxy of the first back-end service, at 172.31.11.11

- webserver02: the second back-end service

- webserver02-sidecar: the sidecar proxy of the second back-end service, at 172.31.11.12

version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.21.5
    environment:
    - ENVOY_UID=0
    - ENVOY_GID=0
    volumes:
    - ./front-envoy.yaml:/etc/envoy/envoy.yaml   # mount the local front-envoy.yaml as /etc/envoy/envoy.yaml
    - ./eds.conf.d/:/etc/envoy/eds.conf.d/       # mount the local eds.conf.d directory at /etc/envoy/eds.conf.d/
    networks:
      envoymesh:
        ipv4_address: 172.31.11.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01-sidecar
    - webserver02-sidecar

  webserver01-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.11.11
        aliases:
        - webserver01-sidecar

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:webserver01-sidecar"
    depends_on:
    - webserver01-sidecar

  webserver02-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.11.12
        aliases:
        - webserver02-sidecar

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:webserver02-sidecar"
    depends_on:
    - webserver02-sidecar

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.11.0/24

2.1.2 front-envoy.yaml

node:
  id: envoy_front_proxy     # identifies this Envoy instance
  cluster: pana_Cluster     # identifies the cluster this instance belongs to

admin:                      # enable the admin interface so cluster state can be queried with curl later
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_01
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: webcluster }
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: webcluster
    connect_timeout: 0.25s
    type: EDS               # endpoints are discovered dynamically through EDS
    lb_policy: ROUND_ROBIN
    eds_cluster_config:
      service_name: webcluster
      eds_config:
        path: '/etc/envoy/eds.conf.d/eds.yaml'   # the EDS file that Envoy watches

2.1.3 Sidecar Envoy

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: local_service
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 127.0.0.1, port_value: 8080 }

2.1.4 eds.yaml

By default the file lists only the endpoint 172.31.11.11.

# cat eds.yaml

resources:
- "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
  cluster_name: webcluster
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address:
            address: 172.31.11.11
            port_value: 80

2.1.5 Running the Test

# docker-compose up

## At this point the cluster contains only 172.31.11.11:80

# curl 172.31.11.2:9901/clusters

webcluster::observability_name::webcluster

webcluster::default_priority::max_connections::1024

webcluster::default_priority::max_pending_requests::1024

webcluster::default_priority::max_requests::1024

webcluster::default_priority::max_retries::3

webcluster::high_priority::max_connections::1024

webcluster::high_priority::max_pending_requests::1024

webcluster::high_priority::max_requests::1024

webcluster::high_priority::max_retries::3

webcluster::added_via_api::false

webcluster::172.31.11.11:80::cx_active::0

webcluster::172.31.11.11:80::cx_connect_fail::0

webcluster::172.31.11.11:80::cx_total::0

webcluster::172.31.11.11:80::rq_active::0

webcluster::172.31.11.11:80::rq_error::0

webcluster::172.31.11.11:80::rq_success::0

webcluster::172.31.11.11:80::rq_timeout::0

webcluster::172.31.11.11:80::rq_total::0

webcluster::172.31.11.11:80::hostname::

webcluster::172.31.11.11:80::health_flags::healthy

webcluster::172.31.11.11:80::weight::1

webcluster::172.31.11.11:80::region::

webcluster::172.31.11.11:80::zone::

webcluster::172.31.11.11:80::sub_zone::

webcluster::172.31.11.11:80::canary::false

webcluster::172.31.11.11:80::priority::0

webcluster::172.31.11.11:80::success_rate::-1.0

webcluster::172.31.11.11:80::local_origin_success_rate::-1.0

## Enter the front-proxy container

# docker exec -it c73086aa69f7 sh

/ # cd /etc/envoy/eds.conf.d

/etc/envoy/eds.conf.d # cat eds.yaml

resources:
- "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
  cluster_name: webcluster
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address:
            address: 172.31.11.11
            port_value: 80

## eds.yaml after the second endpoint has been appended

/etc/envoy/eds.conf.d # cat eds.yaml

version_info: '2'
resources:
- "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
  cluster_name: webcluster
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address:
            address: 172.31.11.11
            port_value: 80
    - endpoint:
        address:
          socket_address:
            address: 172.31.11.12
            port_value: 80

## Envoy reloads a watched file only when it is replaced by a move, not when it is edited in place, so rename the file away and back to trigger the update

/etc/envoy/eds.conf.d # mv eds.yaml tmp && mv tmp eds.yaml

## From outside the container, the cluster now lists 172.31.11.12 in addition to 172.31.11.11

# curl 172.31.11.2:9901/clusters

webcluster::observability_name::webcluster

webcluster::default_priority::max_connections::1024

webcluster::default_priority::max_pending_requests::1024

webcluster::default_priority::max_requests::1024

webcluster::default_priority::max_retries::3

webcluster::high_priority::max_connections::1024

webcluster::high_priority::max_pending_requests::1024

webcluster::high_priority::max_requests::1024

webcluster::high_priority::max_retries::3

webcluster::added_via_api::false

webcluster::172.31.11.11:80::cx_active::0

webcluster::172.31.11.11:80::cx_connect_fail::0

webcluster::172.31.11.11:80::cx_total::0

webcluster::172.31.11.11:80::rq_active::0

webcluster::172.31.11.11:80::rq_error::0

webcluster::172.31.11.11:80::rq_success::0

webcluster::172.31.11.11:80::rq_timeout::0

webcluster::172.31.11.11:80::rq_total::0

webcluster::172.31.11.11:80::hostname::

webcluster::172.31.11.11:80::health_flags::healthy

webcluster::172.31.11.11:80::weight::1

webcluster::172.31.11.11:80::region::

webcluster::172.31.11.11:80::zone::

webcluster::172.31.11.11:80::sub_zone::

webcluster::172.31.11.11:80::canary::false

webcluster::172.31.11.11:80::priority::0

webcluster::172.31.11.11:80::success_rate::-1.0

webcluster::172.31.11.11:80::local_origin_success_rate::-1.0

webcluster::172.31.11.12:80::cx_active::0

webcluster::172.31.11.12:80::cx_connect_fail::0

webcluster::172.31.11.12:80::cx_total::0

webcluster::172.31.11.12:80::rq_active::0

webcluster::172.31.11.12:80::rq_error::0

webcluster::172.31.11.12:80::rq_success::0

webcluster::172.31.11.12:80::rq_timeout::0

webcluster::172.31.11.12:80::rq_total::0

webcluster::172.31.11.12:80::hostname::

webcluster::172.31.11.12:80::health_flags::healthy

webcluster::172.31.11.12:80::weight::1

webcluster::172.31.11.12:80::region::

webcluster::172.31.11.12:80::zone::

webcluster::172.31.11.12:80::sub_zone::

webcluster::172.31.11.12:80::canary::false

webcluster::172.31.11.12:80::priority::0

webcluster::172.31.11.12:80::success_rate::-1.0

webcluster::172.31.11.12:80::local_origin_success_rate::-1.0

## Requests sent to the listener are now distributed across both endpoints

root@k8s-node-1:~# curl 172.31.11.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.11.11!

root@k8s-node-1:~# curl 172.31.11.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.11.11!

root@k8s-node-1:~# curl 172.31.11.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.11.12!

root@k8s-node-1:~# curl 172.31.11.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.11.11!

## When webserver01 fails, edit the file to remove the failed endpoint

/etc/envoy/eds.conf.d # cat eds.yaml

version_info: '2'
resources:
- "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
  cluster_name: webcluster
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address:
            address: 172.31.11.12
            port_value: 80

## Trigger the reload by moving the file again

/etc/envoy/eds.conf.d # mv eds.yaml bak && mv bak eds.yaml

## The clusters output now shows that 172.31.11.11 has been removed

# curl 172.31.11.2:9901/clusters

webcluster::observability_name::webcluster

webcluster::default_priority::max_connections::1024

webcluster::default_priority::max_pending_requests::1024

webcluster::default_priority::max_requests::1024

webcluster::default_priority::max_retries::3

webcluster::high_priority::max_connections::1024

webcluster::high_priority::max_pending_requests::1024

webcluster::high_priority::max_requests::1024

webcluster::high_priority::max_retries::3

webcluster::added_via_api::false

webcluster::172.31.11.12:80::cx_active::0

webcluster::172.31.11.12:80::cx_connect_fail::0

webcluster::172.31.11.12:80::cx_total::0

webcluster::172.31.11.12:80::rq_active::0

webcluster::172.31.11.12:80::rq_error::0

webcluster::172.31.11.12:80::rq_success::0

webcluster::172.31.11.12:80::rq_timeout::0

webcluster::172.31.11.12:80::rq_total::0

webcluster::172.31.11.12:80::hostname::

webcluster::172.31.11.12:80::health_flags::healthy

webcluster::172.31.11.12:80::weight::1

webcluster::172.31.11.12:80::region::

webcluster::172.31.11.12:80::zone::

webcluster::172.31.11.12:80::sub_zone::

webcluster::172.31.11.12:80::canary::false

webcluster::172.31.11.12:80::priority::0

webcluster::172.31.11.12:80::success_rate::-1.0

webcluster::172.31.11.12:80::local_origin_success_rate::-1.0

## Requests to the cluster are now sent only to 172.31.11.12

# curl 172.31.11.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.11.12!

# curl 172.31.11.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.11.12!

# curl 172.31.11.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.11.12!

# curl 172.31.11.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.11.12!

Editing eds.yaml is therefore enough to add and remove back-end endpoints dynamically, without restarting Envoy. This is EDS.

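2.2 Dynamic LDS and CDS from the Filesystem

2.2.1 docker-compose.yaml

Five services:

- envoy: the front proxy, at 172.31.12.2

- webserver01: the first back-end service

- webserver01-sidecar: the sidecar proxy of the first back-end service, at 172.31.12.11

- webserver02: the second back-end service

- webserver02-sidecar: the sidecar proxy of the second back-end service, at 172.31.12.12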

version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.21.5
    environment:
    - ENVOY_UID=0
    - ENVOY_GID=0
    volumes:
    - ./front-envoy.yaml:/etc/envoy/envoy.yaml
    - ./conf.d/:/etc/envoy/conf.d/
    networks:
      envoymesh:
        ipv4_address: 172.31.12.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01-sidecar
    - webserver02-sidecar

  webserver01-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.12.11
        aliases:
        - webserver01-sidecar
        - webserver

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:webserver01-sidecar"
    depends_on:
    - webserver01-sidecar

  webserver02-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.12.12
        aliases:
        - webserver02-sidecar
        - webserver

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:webserver02-sidecar"
    depends_on:
    - webserver02-sidecar

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.12.0/24

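2.2.2 front-envoy.yaml

This bootstrap is much simpler than the previous one: apart from the node identity and the admin interface, the remaining configuration is only five lines.

The VirtualHost and Route configuration is found through the discovered Listener (L).

The Route refers to a Cluster.

The Endpoint configuration is found through the discovered Cluster (C).

So only the Listener and Cluster sources (LDS and CDS) need to be specified here.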

node:
  id: envoy_front_proxy
  cluster: MageEdu_Cluster

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

dynamic_resources:
  lds_config:
    path: /etc/envoy/conf.d/lds.yaml
  cds_config:
    path: /etc/envoy/conf.d/cds.yaml

2.2.3 lds.yaml

This listener definition is almost identical to the earlier static configuration; it routes all traffic to the back-end cluster webcluster.

resources:
- "@type": type.googleapis.com/envoy.config.listener.v3.Listener
  name: listener_http
  address:
    socket_address: { address: 0.0.0.0, port_value: 80 }
  filter_chains:
  - filters:
    - name: envoy.http_connection_manager
      typed_config:
        "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
        stat_prefix: ingress_http
        route_config:
          name: local_route
          virtual_hosts:
          - name: local_service
            domains: ["*"]
            routes:
            - match:
                prefix: "/"
              route:
                cluster: webcluster
        http_filters:
        - name: envoy.filters.http.router

2.2.4 cds.yaml

Here the cluster type is STRICT_DNS and the endpoints are listed inline in load_assignment. The type could instead be set to EDS, with eds_config used to discover the endpoints dynamically.

resources:
- "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
  name: webcluster
  connect_timeout: 1s
  type: STRICT_DNS
  load_assignment:
    cluster_name: webcluster
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: webserver01
              port_value: 80
      - endpoint:
          address:
            socket_address:
              address: webserver02
              port_value: 80
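The EDS alternative mentioned for cds.yaml could look roughly like the sketch below. The file name `/etc/envoy/conf.d/eds.yaml` is an assumption made for this example; the addresses are the sidecar IPs used in this section, written as IPs because an EDS-type cluster expects already-resolved addresses rather than host names.

```yaml
# cds.yaml (sketch): the cluster delegates endpoint discovery to EDS
resources:
- "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
  name: webcluster
  connect_timeout: 1s
  type: EDS
  eds_cluster_config:
    service_name: webcluster
    eds_config:
      path: /etc/envoy/conf.d/eds.yaml   # hypothetical file next to cds.yaml
---
# eds.yaml (sketch): the endpoints for webcluster
resources:
- "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
  cluster_name: webcluster               # must match service_name above
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address: { address: 172.31.12.11, port_value: 80 }
    - endpoint:
        address:
          socket_address: { address: 172.31.12.12, port_value: 80 }
```

With this split, endpoints can be added or removed by replacing eds.yaml alone, while the cluster definition in cds.yaml stays untouched.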

2.2.5 Running the Test

# docker-compose up

## Requests alternate between 172.31.12.11 and 172.31.12.12

# curl 172.31.12.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

# curl 172.31.12.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!

# curl 172.31.12.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

# curl 172.31.12.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!

# curl 172.31.12.2

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

## The cluster output also shows both endpoints

# curl 172.31.12.2:9901/clusters

webcluster::observability_name::webcluster

webcluster::default_priority::max_connections::1024

webcluster::default_priority::max_pending_requests::1024

webcluster::default_priority::max_requests::1024

webcluster::default_priority::max_retries::3

webcluster::high_priority::max_connections::1024

webcluster::high_priority::max_pending_requests::1024

webcluster::high_priority::max_requests::1024

webcluster::high_priority::max_retries::3

webcluster::added_via_api::true

webcluster::172.31.12.11:80::cx_active::2

webcluster::172.31.12.11:80::cx_connect_fail::0

webcluster::172.31.12.11:80::cx_total::2

webcluster::172.31.12.11:80::rq_active::0

webcluster::172.31.12.11:80::rq_error::0

webcluster::172.31.12.11:80::rq_success::4

webcluster::172.31.12.11:80::rq_timeout::0

webcluster::172.31.12.11:80::rq_total::4

webcluster::172.31.12.11:80::hostname::webserver01

webcluster::172.31.12.11:80::health_flags::healthy

webcluster::172.31.12.11:80::weight::1

webcluster::172.31.12.11:80::region::

webcluster::172.31.12.11:80::zone::

webcluster::172.31.12.11:80::sub_zone::

webcluster::172.31.12.11:80::canary::false

webcluster::172.31.12.11:80::priority::0

webcluster::172.31.12.11:80::success_rate::-1.0

webcluster::172.31.12.11:80::local_origin_success_rate::-1.0

webcluster::172.31.12.12:80::cx_active::1

webcluster::172.31.12.12:80::cx_connect_fail::0

webcluster::172.31.12.12:80::cx_total::1

webcluster::172.31.12.12:80::rq_active::0

webcluster::172.31.12.12:80::rq_error::0

webcluster::172.31.12.12:80::rq_success::2

webcluster::172.31.12.12:80::rq_timeout::0

webcluster::172.31.12.12:80::rq_total::2

webcluster::172.31.12.12:80::hostname::webserver02

webcluster::172.31.12.12:80::health_flags::healthy

webcluster::172.31.12.12:80::weight::1

webcluster::172.31.12.12:80::region::

webcluster::172.31.12.12:80::zone::

webcluster::172.31.12.12:80::sub_zone::

webcluster::172.31.12.12:80::canary::false

webcluster::172.31.12.12:80::priority::0

webcluster::172.31.12.12:80::success_rate::-1.0

webcluster::172.31.12.12:80::local_origin_success_rate::-1.0

# curl 172.31.12.2:9901/listeners

listener_http::0.0.0.0:80

2.2.5.1 Testing a Change to LDS

Change the listener port from 80 to 8081, then use mv to make the change take effect.

resources:
- "@type": type.googleapis.com/envoy.config.listener.v3.Listener
  name: listener_http
  address:
    socket_address: { address: 0.0.0.0, port_value: 8081 }
  filter_chains:
  - filters:
    - name: envoy.http_connection_manager
      typed_config:
        "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
        stat_prefix: ingress_http
        route_config:
          name: local_route
          virtual_hosts:
          - name: local_service
            domains: ["*"]
            routes:
            - match:
                prefix: "/"
              route:
                cluster: webcluster
        http_filters:
        - name: envoy.filters.http.router

/etc/envoy/conf.d # mv lds.yaml tmp && mv tmp lds.yaml

The listener is now bound to port 8081. Try accessing it on that port:

root@k8s-node-1:~# curl 172.31.12.2:9901/listeners

listener_http::0.0.0.0:8081

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

2.2.5.2 Testing a Change to CDS

Remove the webserver02 (172.31.12.12) endpoint from cds.yaml.

resources:
- "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
  name: webcluster
  connect_timeout: 1s
  type: STRICT_DNS
  load_assignment:
    cluster_name: webcluster
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: webserver01
              port_value: 80

## Trigger the reload

/etc/envoy/conf.d # mv cds.yaml tmp && mv tmp cds.yaml

The cluster now contains only the 172.31.12.11 endpoint.

root@k8s-node-1:~# curl 172.31.12.2:9901/clusters

webcluster::observability_name::webcluster

webcluster::default_priority::max_connections::1024

webcluster::default_priority::max_pending_requests::1024

webcluster::default_priority::max_requests::1024

webcluster::default_priority::max_retries::3

webcluster::high_priority::max_connections::1024

webcluster::high_priority::max_pending_requests::1024

webcluster::high_priority::max_requests::1024

webcluster::high_priority::max_retries::3

webcluster::added_via_api::true

webcluster::172.31.12.11:80::cx_active::0

webcluster::172.31.12.11:80::cx_connect_fail::0

webcluster::172.31.12.11:80::cx_total::0

webcluster::172.31.12.11:80::rq_active::0

webcluster::172.31.12.11:80::rq_error::0

webcluster::172.31.12.11:80::rq_success::0

webcluster::172.31.12.11:80::rq_timeout::0

webcluster::172.31.12.11:80::rq_total::0

webcluster::172.31.12.11:80::hostname::webserver01

webcluster::172.31.12.11:80::health_flags::healthy

webcluster::172.31.12.11:80::weight::1

webcluster::172.31.12.11:80::region::

webcluster::172.31.12.11:80::zone::

webcluster::172.31.12.11:80::sub_zone::

webcluster::172.31.12.11:80::canary::false

webcluster::172.31.12.11:80::priority::0

webcluster::172.31.12.11:80::success_rate::-1.0

webcluster::172.31.12.11:80::local_origin_success_rate::-1.0

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

2.2.5.3 Changing CDS Again

The cluster in cds.yaml is of type STRICT_DNS, so Envoy resolves the configured host name and treats every IP address returned as an endpoint.

Both sidecars, 172.31.12.11 and 172.31.12.12, also carry the network alias webserver:

        ipv4_address: 172.31.12.11
        aliases:
        - webserver01-sidecar
        - webserver
....
        ipv4_address: 172.31.12.12
        aliases:
        - webserver02-sidecar
        - webserver

Change the address in the configuration file to webserver and trigger the reload again.

resources:
- "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
  name: webcluster
  connect_timeout: 1s
  type: STRICT_DNS
  load_assignment:
    cluster_name: webcluster
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: webserver
              port_value: 80

/etc/envoy/conf.d # mv cds.yaml tmp && mv tmp cds.yaml

The cluster now contains both the 172.31.12.11 and 172.31.12.12 endpoints.

root@k8s-node-1:~# curl 172.31.12.2:9901/clusters

webcluster::observability_name::webcluster

webcluster::default_priority::max_connections::1024

webcluster::default_priority::max_pending_requests::1024

webcluster::default_priority::max_requests::1024

webcluster::default_priority::max_retries::3

webcluster::high_priority::max_connections::1024

webcluster::high_priority::max_pending_requests::1024

webcluster::high_priority::max_requests::1024

webcluster::high_priority::max_retries::3

webcluster::added_via_api::true

webcluster::172.31.12.11:80::cx_active::0

webcluster::172.31.12.11:80::cx_connect_fail::0

webcluster::172.31.12.11:80::cx_total::0

webcluster::172.31.12.11:80::rq_active::0

webcluster::172.31.12.11:80::rq_error::0

webcluster::172.31.12.11:80::rq_success::0

webcluster::172.31.12.11:80::rq_timeout::0

webcluster::172.31.12.11:80::rq_total::0

webcluster::172.31.12.11:80::hostname::webserver

webcluster::172.31.12.11:80::health_flags::healthy

webcluster::172.31.12.11:80::weight::1

webcluster::172.31.12.11:80::region::

webcluster::172.31.12.11:80::zone::

webcluster::172.31.12.11:80::sub_zone::

webcluster::172.31.12.11:80::canary::false

webcluster::172.31.12.11:80::priority::0

webcluster::172.31.12.11:80::success_rate::-1.0

webcluster::172.31.12.11:80::local_origin_success_rate::-1.0

webcluster::172.31.12.12:80::cx_active::0

webcluster::172.31.12.12:80::cx_connect_fail::0

webcluster::172.31.12.12:80::cx_total::0

webcluster::172.31.12.12:80::rq_active::0

webcluster::172.31.12.12:80::rq_error::0

webcluster::172.31.12.12:80::rq_success::0

webcluster::172.31.12.12:80::rq_timeout::0

webcluster::172.31.12.12:80::rq_total::0

webcluster::172.31.12.12:80::hostname::webserver

webcluster::172.31.12.12:80::health_flags::healthy

webcluster::172.31.12.12:80::weight::1

webcluster::172.31.12.12:80::region::

webcluster::172.31.12.12:80::zone::

webcluster::172.31.12.12:80::sub_zone::

webcluster::172.31.12.12:80::canary::false

webcluster::172.31.12.12:80::priority::0

webcluster::172.31.12.12:80::success_rate::-1.0

webcluster::172.31.12.12:80::local_origin_success_rate::-1.0

Accessing the listener again shows requests being distributed across both endpoints.

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!

root@k8s-node-1:~# curl 172.31.12.2:8081

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

webserver01-app_1 | 127.0.0.1 - - [26/Sep/2022 01:47:12] "GET / HTTP/1.1" 200 -

webserver01-app_1 | 127.0.0.1 - - [26/Sep/2022 01:47:13] "GET / HTTP/1.1" 200 -

webserver02-app_1 | 127.0.0.1 - - [26/Sep/2022 01:47:14] "GET / HTTP/1.1" 200 -

webserver02-app_1 | 127.0.0.1 - - [26/Sep/2022 01:47:15] "GET / HTTP/1.1" 200 -

webserver01-app_1 | 127.0.0.1 - - [26/Sep/2022 01:47:16] "GET / HTTP/1.1" 200 -
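The STRICT_DNS behavior used in 2.2.5.3 depends on how often and for which address family Envoy re-resolves the host name. The sketch below shows the Cluster fields that control this; the values are illustrative only and were not part of the tested setup.

```yaml
# cds.yaml (sketch): same cluster as in 2.2.5.3 with explicit DNS settings
resources:
- "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
  name: webcluster
  connect_timeout: 1s
  type: STRICT_DNS
  dns_lookup_family: V4_ONLY   # resolve IPv4 addresses only
  dns_refresh_rate: 5s         # how often the name is re-resolved (example value)
  respect_dns_ttl: true        # prefer the record's TTL over dns_refresh_rate
  load_assignment:
    cluster_name: webcluster
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: webserver   # alias shared by both sidecars
              port_value: 80
```

With settings like these, a container that joins or leaves the webserver alias should be picked up at the next DNS refresh, without touching cds.yaml.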