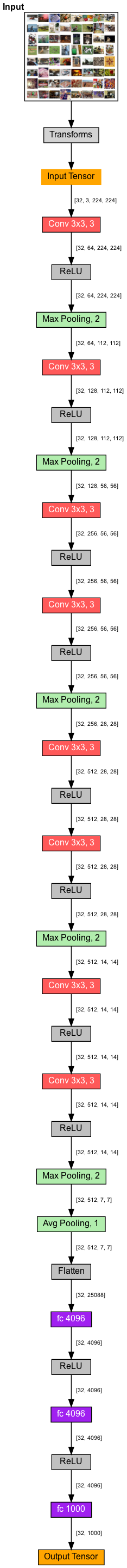

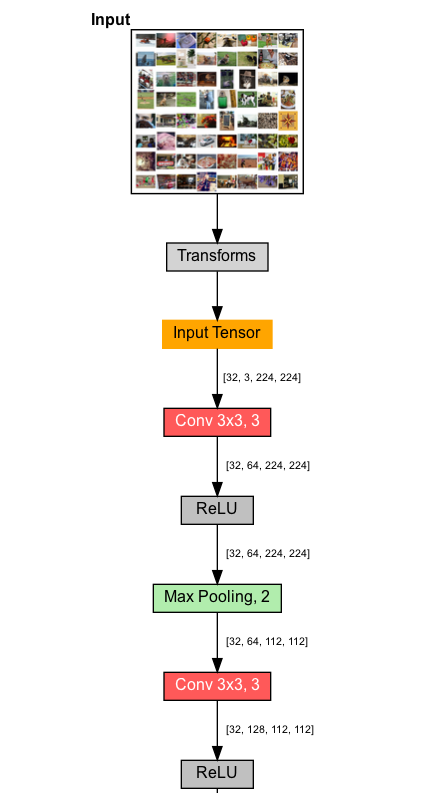

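Let's start with a picture: first, here is the VGG11 network architecture (the full version is at the bottom of the article).

Next, we walk through VGG11 alongside the official PyTorch code. VGG11 is the entry point, and we go from simple to deeper material to understand the VGG architecture.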

Source code walkthrough

demo

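```python
import torchvision.models as models

# Downloads the ImageNet weights on first use.
# On torchvision >= 0.13, `pretrained` is deprecated; the equivalent call is
# models.vgg11(weights=models.VGG11_Weights.IMAGENET1K_V1)
vgg11 = models.vgg11(pretrained=True)

print(vgg11)
```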

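Ctrl+left-click `vgg11` in your IDE to jump into `vgg.py`. The first thing you see is the constructor function for VGG11.

VGG11 comes in two variants:

Without BN layers (the argument after `'A'` is `False`):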

```python
def vgg11(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> VGG:
    r"""VGG 11-layer model (configuration "A") from
    `"Very Deep Convolutional Networks For Large-Scale Image Recognition" <https://arxiv.org/pdf/1409.1556.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    # 'A' is column A of Table 1 in the paper; False means no BN layers (True adds BN layers)
    return _vgg('vgg11', 'A', False, pretrained, progress, **kwargs)
```

With BN layers (the argument after `'A'` is `True`):

```python
def vgg11_bn(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> VGG:
    r"""VGG 11-layer model (configuration "A") with batch normalization
    `"Very Deep Convolutional Networks For Large-Scale Image Recognition" <https://arxiv.org/pdf/1409.1556.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _vgg('vgg11_bn', 'A', True, pretrained, progress, **kwargs)
```

Both constructors call the `_vgg()` function to build the network:

```python
def _vgg(arch: str, cfg: str, batch_norm: bool, pretrained: bool, progress: bool, **kwargs: Any) -> VGG:
    if pretrained:  # when loading a pretrained model, skip weight initialization
        kwargs['init_weights'] = False
    model = VGG(make_layers(cfgs[cfg], batch_norm=batch_norm), **kwargs)
    if pretrained:
        state_dict = load_state_dict_from_url(model_urls[arch],
                                              progress=progress)
        model.load_state_dict(state_dict)
    return model
```

Next, look at the first argument passed to `VGG`: `make_layers(cfgs[cfg], batch_norm=batch_norm)`.

Ctrl+left-click `cfgs` to jump to its definition:

```python
# The cfgs dictionary is built from the network structures and settings in the paper and stores these structural parameters
cfgs: Dict[str, List[Union[str, int]]] = {
    # Numbers are the output channel counts of conv layers; 'M' is a max-pooling layer
    'A': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'B': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'D': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'E': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}
```
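The number in each model's name counts its weight layers. That count is the convolutions listed in its config plus the three fully connected layers of the classifier. Below is a minimal sketch that needs no PyTorch. It assumes the `cfgs` dictionary is copied from above, and `count_weight_layers` is a hypothetical helper written only for this illustration.

```python
from typing import Dict, List, Union

# Copied from torchvision's vgg.py as shown above
cfgs: Dict[str, List[Union[str, int]]] = {
    'A': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'B': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'D': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'E': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}

NUM_FC_LAYERS = 3  # the classifier contains three nn.Linear layers


def count_weight_layers(cfg: List[Union[str, int]]) -> int:
    """Hypothetical helper: conv layers (integer entries) plus the 3 FC layers."""
    num_convs = sum(1 for v in cfg if v != 'M')  # 'M' (max pooling) has no weights
    return num_convs + NUM_FC_LAYERS


for name, cfg in cfgs.items():
    print(name, count_weight_layers(cfg))
# A 11
# B 13
# D 16
# E 19
```

Configuration `'A'` gives 8 + 3 = 11 weight layers, hence the name VGG11. In the same way, torchvision builds VGG13, VGG16 and VGG19 from `'B'`, `'D'` and `'E'`.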

Then go back up one level and Ctrl+left-click `make_layers`.

This function builds the feature-extraction part of the network:

```python
def make_layers(cfg: List[Union[str, int]], batch_norm: bool = False) -> nn.Sequential:
    layers: List[nn.Module] = []  # initialize the layer list
    in_channels = 3  # RGB images have 3 channels
    for v in cfg:
        if v == 'M':  # add a max-pooling layer; kernel size and stride are both 2
            layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
        else:  # add a convolutional layer
            v = cast(int, v)  # typing.cast only tells the type checker v is an int; there is no runtime check
            conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)  # 3×3 convolution
            if batch_norm:
                layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]
            else:
                layers += [conv2d, nn.ReLU(inplace=True)]
            in_channels = v  # the next layer's input channels are this layer's output channels
    return nn.Sequential(*layers)
```
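The sketch below mirrors the loop in `make_layers` without building real modules. For each layer it records a text description and tracks the output shape. It assumes a 224×224 RGB input, the ImageNet size used in the paper, and `trace_features` is a hypothetical helper rather than part of torchvision. A 3×3 convolution with `padding=1` keeps the spatial size. Each 2×2 max-pool with stride 2 halves it, rounding down.

```python
from typing import List, Tuple, Union

CFG_A: List[Union[str, int]] = [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']


def trace_features(cfg: List[Union[str, int]], batch_norm: bool = False,
                   size: int = 224) -> Tuple[List[str], Tuple[int, int, int]]:
    """Hypothetical mirror of make_layers: returns layer names and the final (C, H, W)."""
    layers: List[str] = []
    in_channels = 3
    for v in cfg:
        if v == 'M':
            layers.append('MaxPool2d(2, 2)')
            size = size // 2  # floor division, like nn.MaxPool2d with the default ceil_mode=False
        else:
            layers.append(f'Conv2d({in_channels}, {v}, 3, padding=1)')  # spatial size unchanged
            if batch_norm:
                layers.append(f'BatchNorm2d({v})')
            layers.append('ReLU')
            in_channels = v
    return layers, (in_channels, size, size)


layers, out_shape = trace_features(CFG_A)
print(len(layers), out_shape)  # 21 (512, 7, 7)
layers_bn, _ = trace_features(CFG_A, batch_norm=True)
print(len(layers_bn))          # 29
```

Without BN, the 8 convolutions, 8 ReLUs and 5 max-pools give the 21 modules that `print(vgg11)` lists under `features`. The final 512×7×7 shape for a 224×224 input is why the classifier starts with `nn.Linear(512 * 7 * 7, 4096)`.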

Next, go back up one level.

Inside `_vgg()`, the `VGG` class is instantiated to create the model:

```python
class VGG(nn.Module):

    def __init__(
        self,
        features: nn.Module,
        num_classes: int = 1000,
        init_weights: bool = True
    ) -> None:
        super(VGG, self).__init__()
        self.features = features  # feature-extraction part
        self.avgpool = nn.AdaptiveAvgPool2d((7, 7))  # adaptive average pooling: pool feature maps to 7×7
        # classifier part
        self.classifier = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(4096, num_classes),
        )
        if init_weights:  # weight initialization
            self._initialize_weights()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # feature extraction
        x = self.features(x)
        # adaptive average pooling
        x = self.avgpool(x)
        # flatten
        x = torch.flatten(x, 1)
        # classification
        x = self.classifier(x)
        return x
```
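A quick count shows where VGG11's weights live. The sketch below uses plain arithmetic and no PyTorch. It computes parameter counts, weights plus biases, for the `features` convolutions and the three `classifier` layers, using the layer shapes in the code above. It assumes configuration `'A'` without BN and `num_classes=1000`.

```python
from typing import List, Union

CFG_A: List[Union[str, int]] = [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']


def conv_params(cfg: List[Union[str, int]], in_channels: int = 3, k: int = 3) -> int:
    """Weights (in * out * k * k) plus biases (out) for every 3x3 conv in cfg."""
    total = 0
    for v in cfg:
        if v == 'M':
            continue  # max pooling has no parameters
        out_channels = int(v)
        total += in_channels * out_channels * k * k + out_channels
        in_channels = out_channels
    return total


def linear_params(n_in: int, n_out: int) -> int:
    """Weight matrix plus bias vector of an nn.Linear layer."""
    return n_in * n_out + n_out


conv_total = conv_params(CFG_A)
fc_total = (linear_params(512 * 7 * 7, 4096)
            + linear_params(4096, 4096)
            + linear_params(4096, 1000))
print(conv_total, fc_total, conv_total + fc_total)
# 9220480 123642856 132863336
```

About 93% of the parameters sit in the classifier. The first `nn.Linear(512 * 7 * 7, 4096)` alone accounts for roughly 102.8 million of them. The total should equal `sum(p.numel() for p in vgg11.parameters())` for the model from the demo.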

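The official PyTorch implementation makes one small change compared with the paper: it adds adaptive average pooling. This pools the feature map to a fixed 7×7 size, so the following `flatten` step always produces a vector of the same length (512 × 7 × 7 = 25088). As a result, the network can accept inputs of different sizes.

In the classifier, an `nn.Dropout()` (default `p=0.5`) follows each of the first two fully connected layers. This matches the dropout regularization (ratio 0.5) that the paper applies to the first two fully connected layers during training.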
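Both behaviors can be checked directly in PyTorch. This minimal sketch feeds random feature maps of different spatial sizes through `nn.AdaptiveAvgPool2d((7, 7))` and `torch.flatten`, the same calls `VGG.forward` uses. It then shows that `nn.Dropout()` only drops activations in training mode. The feature-map sizes are assumptions chosen for illustration. 7×7, 10×10 and 1×1 are what 224×224, 320×320 and 32×32 inputs become after VGG11's five max-pools.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

avgpool = nn.AdaptiveAvgPool2d((7, 7))
flat_sizes = []
for h in (7, 10, 1):  # feature-map sizes for 224, 320 and 32 pixel inputs
    feats = torch.randn(1, 512, h, h)  # stand-in for the output of self.features(x)
    pooled = avgpool(feats)            # always (1, 512, 7, 7)
    flat = torch.flatten(pooled, 1)    # always (1, 25088)
    flat_sizes.append(flat.shape[1])
print(flat_sizes)  # [25088, 25088, 25088]

dropout = nn.Dropout()  # p=0.5 by default
x = torch.ones(1, 8)
dropout.train()
y_train = dropout(x)    # each entry is 0.0 or 1 / (1 - 0.5) = 2.0
dropout.eval()
y_eval = dropout(x)     # identity at inference time
```

Because the pooled size is fixed, `nn.Linear(512 * 7 * 7, 4096)` works for any input of at least 32×32. A smaller input would shrink to zero size at the last max-pool, which PyTorch rejects with an error.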

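Finally, `VGG` defines `_initialize_weights()`, which `__init__` calls when `init_weights` is `True`:

```python
# Defined inside class VGG, after forward()
    def _initialize_weights(self) -> None:
        for m in self.modules():
            if isinstance(m, nn.Conv2d):  # conv layers: Kaiming (He) normal initialization
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:  # biases initialized to 0
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.BatchNorm2d):  # BN layers: weight initialized to 1, bias to 0
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):  # FC layers: weights ~ N(0, 0.01^2), bias 0
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)
```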
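The sketch below shows what `kaiming_normal_` with `mode='fan_out'` and `nonlinearity='relu'` does. It initializes a single conv layer the same way and compares the empirical standard deviation of its weights with the formula std = sqrt(2 / fan_out), where fan_out = out_channels × kernel_height × kernel_width. The layer shape (64 → 128 channels, 3×3) is an assumption that matches VGG11's second conv layer.

```python
import math

import torch
import torch.nn as nn

torch.manual_seed(0)

conv = nn.Conv2d(64, 128, kernel_size=3, padding=1)  # same shape as VGG11's second conv layer
nn.init.kaiming_normal_(conv.weight, mode='fan_out', nonlinearity='relu')
nn.init.constant_(conv.bias, 0)

fan_out = 128 * 3 * 3                    # out_channels * kH * kW = 1152
expected_std = math.sqrt(2.0 / fan_out)  # ReLU gain sqrt(2) divided by sqrt(fan_out)
measured_std = conv.weight.std().item()
print(round(expected_std, 4), round(measured_std, 4))

fc = nn.Linear(4096, 1000)
nn.init.normal_(fc.weight, 0, 0.01)      # mean 0, std 0.01, as in _initialize_weights
nn.init.constant_(fc.bias, 0)
```

According to the PyTorch docs, choosing `fan_out` preserves the magnitude of the variance in the backward pass, while `fan_in` would preserve it in the forward pass.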

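That completes the walkthrough. Overall, VGG stacks small 3×3 convolution kernels to obtain the same receptive field as a larger kernel: two stacked 3×3 layers cover a 5×5 region, and three cover 7×7. Stacking also increases the depth of the network. The ReLU after every layer adds more nonlinearities, which strengthens the network's fitting capacity. In terms of parameter count, a stack of small kernels uses fewer weights than one large kernel with the same receptive field. In terms of kernel size, replacing a large kernel with several stacked small ones can be seen as imposing regularization on the large kernel. It forces the large kernel to decompose into 3×3 filters with nonlinearities in between, which makes feature extraction more robust.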
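The receptive-field and parameter claims can be made concrete. For stride-1 convolutions, each extra k×k layer grows the receptive field by k − 1, so n stacked 3×3 layers see a (2n + 1)×(2n + 1) region. The sketch assumes C input and C output channels per layer and ignores biases. This follows the comparison in the VGG paper, where three 3×3 layers need 27C² weights and one 7×7 layer needs 49C².

```python
def receptive_field(num_layers: int, k: int = 3) -> int:
    """Receptive field of num_layers stacked stride-1 k x k convolutions."""
    return 1 + num_layers * (k - 1)


def stacked_params(num_layers: int, channels: int, k: int = 3) -> int:
    """Weights (no biases) of num_layers k x k conv layers with C in and C out channels."""
    return num_layers * k * k * channels * channels


C = 512
for n, big_k in ((2, 5), (3, 7)):
    assert receptive_field(n) == big_k       # same receptive field as one big_k x big_k layer
    small = stacked_params(n, C)             # n * 9 * C^2
    large = stacked_params(1, C, k=big_k)    # big_k^2 * C^2
    print(f'{n} x 3x3: {small:,} weights vs 1 x {big_k}x{big_k}: {large:,} weights')
```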

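Finally, compare the code against the complete VGG11 network structure to consolidate your understanding of the VGG architecture.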