文章目录

1. What & Why: 什么是深度学习,为什么要用深度学习?

计算机以类人脑的方式构建“神经网络”,通常包括

输入层、中间层/隐藏层、输出层

这些隐藏层即“深度”:从”输入层“到”输出层“所经历层次的数目,即”隐藏层“的层数,层数越多,板凳的深度也越深。所以越是复杂的选择问题,越需要深度的层次多。

当然,除了层数多外,每层”神经元“数目也要多。例如,AlphaGo的策略网络是13层。每一层的神经元数量为192个。

虽然计算机能通过多层的深度学习,达到识别的某一项技能,如MNISt中识别手写数字,但需多层的复杂计算,更重要的是需要大量的数据:计算机的神经网络需要大量的数据才能训练出一个基本的技能,而人类的思维具有高度的抽象。所以计算机看成千上万只猫的图片才能识别出什么是猫,而哪怕是一个小孩看两三次猫,就有同样的本领。

ref:https://www.zhihu.com/question/24097648

深度学习相较于普通机器学习,能解决机器学习未能很好解决的问题。传统的机器学习算法包括决策树、聚类、贝叶斯分类、支持向量机、EM、Adaboost等等。

从学习方法上来分,机器学习算法可以分为监督学习(如分类问题)、无监督学习(如聚类问题)、半监督学习、集成学习、深度学习和强化学习。

传统的机器学习算法在指纹识别、基于Haar的人脸检测、基于HoG特征的物体检测等领域的应用基本达到了商业化的要求或者特定场景的商业化水平,但每前进一步都异常艰难,直到深度学习算法的出现。深度学习利用复杂的多层结构和大量的输入数据,使得似乎所有的机器辅助功能都变为可能。

常见类型

ref:https://zhuanlan.zhihu.com/p/159305118

CNN

ref:https://www.cnblogs.com/wuzhitj/p/6433871.html

卷积参数

-

卷积的输入input:指需要做卷积的输入图像/音频等,它要求是一个Tensor,具有[batch, in_height, in_width, in_channels]这样的shape,具体图片的含义是[训练时一个batch的图片数量, 图片高度, 图片宽度, 图像通道数],注意这是一个4维的Tensor,要求类型为float32和float64其中之一

-

第一个参数filter:卷积核个数,也是输出通道数。Integer, the dimensionality of the output space (i.e. the number of output filters in the convolution).

-

第二个参数kernel_size: 卷积核大小,指定二维卷积窗口的高和宽,(如果kernel_size只有一个整数,代表宽和高相等):An integer or tuple/list of 2 integers, specifying the height and width of the 2D convolution window. Can be a single integer to specify the same value for all spatial dimensions.

-

第三个参数strides: 卷积步长,指定卷积窗沿高和宽方向的每次移动步长,An integer or tuple/list of 2 integers, (如果strides只有一个整数,代表沿着宽和高方向的步长相等) specifying the strides of the convolution along the height and width. Can be a single integer to specify the same value for all spatial dimensions. Specifying any stride value != 1 is incompatible with specifying any

dilation_ratevalue != 1. -

第四个参数padding: 为valid或same一种, one of

"valid"or"same"(case-insensitive). 两种padding方式的区别如下:-

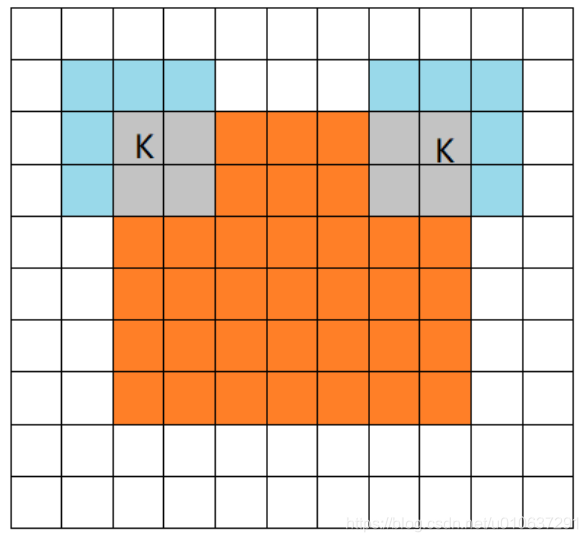

same mode

当filter的中心(K)与image的边角重合时,开始做卷积运算。注意:这里的same还有一个意思,卷积之后输出的feature map尺寸保持不变(相对于输入图片)。当然,same模式不代表完全输入输出尺寸一样,也跟卷积核的步长有关系。same模式也是最常见的模式,因为这种模式可以在前向传播的过程中让特征图的大小保持不变,调参师不需要精准计算其尺寸变化(因为尺寸根本就没变化)。

-

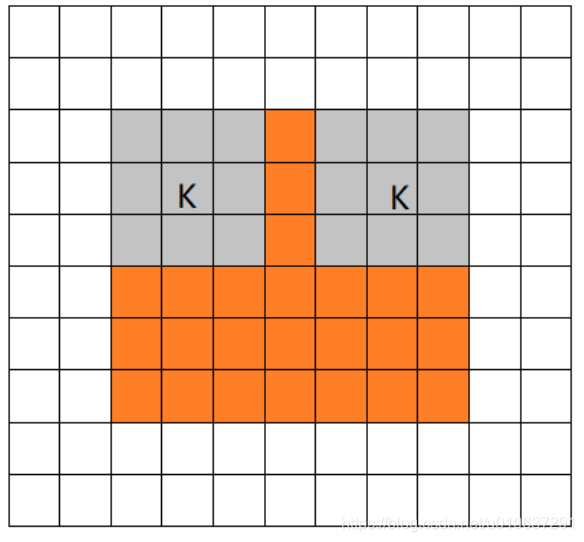

valid mode

当filter全部在image里面的时候,进行卷积运算,可见filter的移动范围较same更小了。

-

其它还有一些参数不细讲,可参考源码中对于参数的说明。结果返回一个Tensor,这个输出,就是我们常说的feature map。

使用VALID方式,feature map的尺寸为:

out_height = ceil(float(in_height - filter_height + 1) / float(strides[0])) out_width = ceil(float(in_width - filter_width + 1) / float(strides[1]))卷积示例

在tensoflow2.x keras中构建conv2D卷积层:

outputs_conv = keras.layers.Conv2D(filters=128,

kernel_size=(10, 4),

strides=(3, 2),

padding='VALID',

name=scope + '/conv_1')(inputs)

则在给定输入input = 1 × 34 × 13 × 1,即batch_size=1,in_height=34, in_width=13, in_channel=1。

设定参数filter=128,即卷积核大小=128,

kernel_size=10 × 4,

stride=3 × 2,

padding=‘VALID’

输出output_height = ceil((34 - 10 + 1)/3) = 9,

output_width = ceil((13 - 4 + 1)/2) = 5,

最后输出为:output=1 × 9 × 5 × 128,即 batch_size=1,out_height=9,out_width=5,out_channel=128(也即卷积核)。

Conv2D源码

class Conv2D(Conv):

"""2D convolution layer (e.g. spatial convolution over images).

This layer creates a convolution kernel that is convolved

with the layer input to produce a tensor of

outputs. If `use_bias` is True,

a bias vector is created and added to the outputs. Finally, if

`activation` is not `None`, it is applied to the outputs as well.

When using this layer as the first layer in a model,

provide the keyword argument `input_shape`

(tuple of integers, does not include the sample axis),

e.g. `input_shape=(128, 128, 3)` for 128x128 RGB pictures

in `data_format="channels_last"`.

Examples:

>>> # The inputs are 28x28 RGB images with `channels_last` and the batch

>>> # size is 4.

>>> input_shape = (4, 28, 28, 3)

>>> x = tf.random.normal(input_shape)

>>> y = tf.keras.layers.Conv2D(

... 2, 3, activation='relu', input_shape=input_shape)(x)

>>> print(y.shape)

(4, 26, 26, 2)

>>> # With `dilation_rate` as 2.

>>> input_shape = (4, 28, 28, 3)

>>> x = tf.random.normal(input_shape)

>>> y = tf.keras.layers.Conv2D(

... 2, 3, activation='relu', dilation_rate=2, input_shape=input_shape)(x)

>>> print(y.shape)

(4, 24, 24, 2)

>>> # With `padding` as "same".

>>> input_shape = (4, 28, 28, 3)

>>> x = tf.random.normal(input_shape)

>>> y = tf.keras.layers.Conv2D(

... 2, 3, activation='relu', padding="same", input_shape=input_shape)(x)

>>> print(y.shape)

(4, 28, 28, 2)

Arguments:

filters: Integer, the dimensionality of the output space

(i.e. the number of output filters in the convolution).

kernel_size: An integer or tuple/list of 2 integers, specifying the

height and width of the 2D convolution window.

Can be a single integer to specify the same value for

all spatial dimensions.

strides: An integer or tuple/list of 2 integers,

specifying the strides of the convolution along the height and width.

Can be a single integer to specify the same value for

all spatial dimensions.

Specifying any stride value != 1 is incompatible with specifying

any `dilation_rate` value != 1.

padding: one of `"valid"` or `"same"` (case-insensitive).

data_format: A string,

one of `channels_last` (default) or `channels_first`.

The ordering of the dimensions in the inputs.

`channels_last` corresponds to inputs with shape

`(batch_size, height, width, channels)` while `channels_first`

corresponds to inputs with shape

`(batch_size, channels, height, width)`.

It defaults to the `image_data_format` value found in your

Keras config file at `~/.keras/keras.json`.

If you never set it, then it will be "channels_last".

dilation_rate: an integer or tuple/list of 2 integers, specifying

the dilation rate to use for dilated convolution.

Can be a single integer to specify the same value for

all spatial dimensions.

Currently, specifying any `dilation_rate` value != 1 is

incompatible with specifying any stride value != 1.

activation: Activation function to use.

If you don't specify anything, no activation is applied (

see `keras.activations`).

use_bias: Boolean, whether the layer uses a bias vector.

kernel_initializer: Initializer for the `kernel` weights matrix (

see `keras.initializers`).

bias_initializer: Initializer for the bias vector (

see `keras.initializers`).

kernel_regularizer: Regularizer function applied to

the `kernel` weights matrix (see `keras.regularizers`).

bias_regularizer: Regularizer function applied to the bias vector (

see `keras.regularizers`).

activity_regularizer: Regularizer function applied to

the output of the layer (its "activation") (

see `keras.regularizers`).

kernel_constraint: Constraint function applied to the kernel matrix (

see `keras.constraints`).

bias_constraint: Constraint function applied to the bias vector (

see `keras.constraints`).

Input shape:

4D tensor with shape:

`(batch_size, channels, rows, cols)` if data_format='channels_first'

or 4D tensor with shape:

`(batch_size, rows, cols, channels)` if data_format='channels_last'.

Output shape:

4D tensor with shape:

`(batch_size, filters, new_rows, new_cols)` if data_format='channels_first'

or 4D tensor with shape:

`(batch_size, new_rows, new_cols, filters)` if data_format='channels_last'.

`rows` and `cols` values might have changed due to padding.

Returns:

A tensor of rank 4 representing

`activation(conv2d(inputs, kernel) + bias)`.

Raises:

ValueError: if `padding` is "causal".

ValueError: when both `strides` > 1 and `dilation_rate` > 1.

"""

def __init__(self,

filters,

kernel_size,

strides=(1, 1),

padding='valid',

data_format=None,

dilation_rate=(1, 1),

activation=None,

use_bias=True,

kernel_initializer='glorot_uniform',

bias_initializer='zeros',

kernel_regularizer=None,

bias_regularizer=None,

activity_regularizer=None,

kernel_constraint=None,

bias_constraint=None,

**kwargs):

super(Conv2D, self).__init__(

rank=2,

filters=filters,

kernel_size=kernel_size,

strides=strides,

padding=padding,

data_format=data_format,

dilation_rate=dilation_rate,

activation=activations.get(activation),

use_bias=use_bias,

kernel_initializer=initializers.get(kernel_initializer),

bias_initializer=initializers.get(bias_initializer),

kernel_regularizer=regularizers.get(kernel_regularizer),

bias_regularizer=regularizers.get(bias_regularizer),

activity_regularizer=regularizers.get(activity_regularizer),

kernel_constraint=constraints.get(kernel_constraint),

bias_constraint=constraints.get(bias_constraint),

**kwargs)

构建DS-CNN模型代码

def create_ds_cnn_model(model_settings, model_size_info, is_training):

'''

model_size_info=[5, 128, 10, 4, 3, 2, 128, 3, 3, 1, 1, 128, 3, 3, 1, 1, 128, 3, 3, 1, 1, 128, 3, 3, 1, 1],

'''

def _conv_block(inputs, filters, kernel_size, strides):

x = keras.layers.Conv2D(filters=filters, kernel_size=kernel_size, strides=strides, padding='valid')(inputs)

x = keras.layers.BatchNormalization()(x)

return x

def _depthwise_seperable_conv(inputs, pointwise_conv_filters, kernel_size, strides):

# Depthwise conv

x = keras.layers.DepthwiseConv2D(kernel_size=kernel_size,strides=strides, padding='valid')(inputs)

x = keras.layers.BatchNormalization()(x)

# Pointwise conv

x = keras.layers.Conv2D(pointwise_conv_filters, kernel_size=kernel_size, padding='valid', )(x)

x = keras.layers.BatchNormalization()(x)

return x

label_count = model_settings['label_count']

input_frequency_size = model_settings['dct_coefficient_count']

input_time_size = model_settings['spectrogram_length']

inputs = keras.Input(shape=(input_time_size * input_frequency_size,))

inputs_reshape = keras.layers.Reshape((input_time_size, input_frequency_size, 1,))(inputs)

# Extract model dimensions from model_size_info

num_layers = model_size_info[0]

conv_feat = [None] * num_layers

conv_kt = [None] * num_layers

conv_kf = [None] * num_layers

conv_st = [None] * num_layers

conv_sf = [None] * num_layers

i = 1

for layer_no in range(0, num_layers):

conv_feat[layer_no] = model_size_info[i]

i += 1

conv_kt[layer_no] = model_size_info[i]

i += 1

conv_kf[layer_no] = model_size_info[i]

i += 1

conv_st[layer_no] = model_size_info[i]

i += 1

conv_sf[layer_no] = model_size_info[i]

i += 1

t_dim = model_settings['spectrogram_length']

f_dim = model_settings['dct_coefficient_count']

scope = 'DS-CNN'

# with tf.compat.v1.variable_scope(scope) as sc:

for layer_no in range(0, num_layers):

if layer_no == 0:

pf_left = (conv_kf[layer_no] - 1) // 2

pf_right = conv_kf[layer_no] - 1 - pf_left

inputs_padding = keras.layers.ZeroPadding2D(((0, 0), (pf_left, pf_right)))(inputs_reshape)

net = keras.layers.Conv2D(filters=conv_feat[layer_no],

kernel_size=(conv_kt[layer_no], conv_kf[layer_no]),

strides=(conv_st[layer_no], conv_sf[layer_no]),

padding='VALID',

name=scope + '/conv_1')(inputs_padding)

if is_training:

net = keras.layers.BatchNormalization(name=scope + '/conv_1/batch_norm')(net, training=is_training)

else:

net = tf.nn.relu(net)

else:

pf_left = (conv_kt[layer_no] - 1) // 2

pf_right = conv_kt[layer_no] - 1 - pf_left

inputs_padding = keras.layers.ZeroPadding2D(((0, 0), (pf_left, pf_right)))(net)

net = keras.layers.DepthwiseConv2D(kernel_size=[conv_kt[layer_no], conv_kf[layer_no]],

strides=[conv_st[layer_no], conv_sf[layer_no]],

padding='VALID',

depth_multiplier=1,

name=scope+'/conv_ds_'+str(layer_no) +'/dw_conv')(inputs_padding)

if is_training:

net = keras.layers.BatchNormalization(name=scope+'/conv_ds_'+str(layer_no) +'/dw_conv/batch_norm')(net, training=is_training)

else:

net = tf.nn.relu(net)

net = keras.layers.Conv2D(filters=conv_feat[layer_no],

kernel_size=[1, 1],

padding='VALID',

name=scope + '/conv_ds_' + str(layer_no) + '/pw_conv')(net)

if is_training:

net = keras.layers.BatchNormalization(name=scope + '/conv_ds_' + str(layer_no) + '/pw_conv/batch_norm')(net, training=is_training)

else:

net = tf.nn.relu(net)

t_dim = (t_dim - conv_kt[layer_no]) // conv_st[layer_no] + 1

f_dim = np.ceil(f_dim / conv_sf[layer_no])

assert (net.shape[1] == t_dim and net.shape[2] == f_dim)

net = keras.layers.AveragePooling2D([t_dim, f_dim])(net)

net = keras.layers.Lambda(squeeze_tensor, arguments={

'axis': 1})(net)

net = keras.layers.Lambda(squeeze_tensor, arguments={

'axis': 1})(net)

net = keras.layers.Dense(label_count, activation=None, name=scope + '/fc1')(net)

outputs = keras.layers.Softmax(axis=1)(net)

model = keras.Model(inputs=inputs, outputs=outputs)

model.compile(

loss=keras.losses.categorical_crossentropy,

optimizer=keras.optimizers.Adadelta(),

metrics=['accuracy']

)

# print and plot model

model.summary()

keras.utils.plot_model(model, "./model/ds_cnn_model.png", show_shapes=True)

# print model variables

print_model_variables(model)

# convert ckpt to tflite

# convert_saved_ckpt_to_tflite(model)

# convert to tflite

# convert_keras_model_to_tflite(model)

# convert_saved_ckpt_to_tflite('./log/20200827/ds_cnn_Sun-30-Aug-2020-10-46-29.ckpt-13400000_bnfused')

return model

模型summary:

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 340)] 0

_________________________________________________________________

reshape (Reshape) (None, 34, 10, 1) 0

_________________________________________________________________

zero_padding2d (ZeroPadding2 (None, 34, 13, 1) 0

_________________________________________________________________

DS-CNN/conv_1 (Conv2D) (None, 9, 5, 128) 5248

_________________________________________________________________

tf_op_layer_Relu (TensorFlow [(None, 9, 5, 128)] 0

_________________________________________________________________

zero_padding2d_1 (ZeroPaddin (None, 9, 7, 128) 0

_________________________________________________________________

DS-CNN/conv_ds_1/dw_conv (De (None, 7, 5, 128) 1280

_________________________________________________________________

tf_op_layer_Relu_1 (TensorFl [(None, 7, 5, 128)] 0

_________________________________________________________________

DS-CNN/conv_ds_1/pw_conv (Co (None, 7, 5, 128) 16512

_________________________________________________________________

tf_op_layer_Relu_2 (TensorFl [(None, 7, 5, 128)] 0

_________________________________________________________________

zero_padding2d_2 (ZeroPaddin (None, 7, 7, 128) 0

_________________________________________________________________

DS-CNN/conv_ds_2/dw_conv (De (None, 5, 5, 128) 1280

_________________________________________________________________

tf_op_layer_Relu_3 (TensorFl [(None, 5, 5, 128)] 0

_________________________________________________________________

DS-CNN/conv_ds_2/pw_conv (Co (None, 5, 5, 128) 16512

_________________________________________________________________

tf_op_layer_Relu_4 (TensorFl [(None, 5, 5, 128)] 0

_________________________________________________________________

zero_padding2d_3 (ZeroPaddin (None, 5, 7, 128) 0

_________________________________________________________________

DS-CNN/conv_ds_3/dw_conv (De (None, 3, 5, 128) 1280

_________________________________________________________________

tf_op_layer_Relu_5 (TensorFl [(None, 3, 5, 128)] 0

_________________________________________________________________

DS-CNN/conv_ds_3/pw_conv (Co (None, 3, 5, 128) 16512

_________________________________________________________________

tf_op_layer_Relu_6 (TensorFl [(None, 3, 5, 128)] 0

_________________________________________________________________

zero_padding2d_4 (ZeroPaddin (None, 3, 7, 128) 0

_________________________________________________________________

DS-CNN/conv_ds_4/dw_conv (De (None, 1, 5, 128) 1280

_________________________________________________________________

tf_op_layer_Relu_7 (TensorFl [(None, 1, 5, 128)] 0

_________________________________________________________________

DS-CNN/conv_ds_4/pw_conv (Co (None, 1, 5, 128) 16512

_________________________________________________________________

tf_op_layer_Relu_8 (TensorFl [(None, 1, 5, 128)] 0

_________________________________________________________________

average_pooling2d (AveragePo (None, 1, 1, 128) 0

_________________________________________________________________

lambda (Lambda) (None, 1, 128) 0

_________________________________________________________________

lambda_1 (Lambda) (None, 128) 0

_________________________________________________________________

DS-CNN/fc1 (Dense) (None, 31) 3999

_________________________________________________________________

softmax (Softmax) (None, 31) 0

=================================================================

Total params: 80,415

Trainable params: 80,415

Non-trainable params: 0

_________________________________________________________________

模型变量:

DS-CNN/conv_1/kernel:0

DS-CNN/conv_1/bias:0

DS-CNN/conv_ds_1/dw_conv/depthwise_kernel:0

DS-CNN/conv_ds_1/dw_conv/bias:0

DS-CNN/conv_ds_1/pw_conv/kernel:0

DS-CNN/conv_ds_1/pw_conv/bias:0

DS-CNN/conv_ds_2/dw_conv/depthwise_kernel:0

DS-CNN/conv_ds_2/dw_conv/bias:0

DS-CNN/conv_ds_2/pw_conv/kernel:0

DS-CNN/conv_ds_2/pw_conv/bias:0

DS-CNN/conv_ds_3/dw_conv/depthwise_kernel:0

DS-CNN/conv_ds_3/dw_conv/bias:0

DS-CNN/conv_ds_3/pw_conv/kernel:0

DS-CNN/conv_ds_3/pw_conv/bias:0

DS-CNN/conv_ds_4/dw_conv/depthwise_kernel:0

DS-CNN/conv_ds_4/dw_conv/bias:0

DS-CNN/conv_ds_4/pw_conv/kernel:0

DS-CNN/conv_ds_4/pw_conv/bias:0

DS-CNN/fc1/kernel:0

DS-CNN/fc1/bias:0

模型打印

RNN/LSTM

DNN

Tool:Netron

主页:https://www.electronjs.org/apps/netron

github:https://github.com/lutzroeder/netron

下载:https://github.com/lutzroeder/netron/releases/tag/v4.5.1

安装

Ubuntu:

snap install netron

下载.AppImage后未安装成功,下载方式为:

wget https://github.com/lutzroeder/netron/releases/tag/v4.5.1 -o Netron-4.5.1.AppImage

使用

Ubuntu下输入:

netron

再选择模型位置。示例,查看一个tensorflow lite 模型(.tflite)

常见应用

2. How: 模型训练过程

搭建模型

CNN结构:

- DS-CNN

DNN结构

LSTM结构

构建输入数据和输入层

模型训练

模型评估

模型测试