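This series is based on spark-2.4.6.

As we know, a Spark job only actually runs once an Action operator is invoked. Here we use saveAsTextFile as the example to walk through the whole process.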

def saveAsTextFile(path: String): Unit = withScope {
  val nullWritableClassTag = implicitly[ClassTag[NullWritable]]
  val textClassTag = implicitly[ClassTag[Text]]
  val r = this.mapPartitions { iter =>
    val text = new Text()
    iter.map { x =>
      text.set(x.toString)
      (NullWritable.get(), text)
    }
  }
  RDD.rddToPairRDDFunctions(r)(nullWritableClassTag, textClassTag, null)
    .saveAsHadoopFile[TextOutputFormat[NullWritable, Text]](path)
}

saveAsTextFile ends by calling saveAsHadoopFile:

def saveAsHadoopFile(
    path: String,
    keyClass: Class[_],
    valueClass: Class[_],
    outputFormatClass: Class[_ <: OutputFormat[_, _]],
    conf: JobConf = new JobConf(self.context.hadoopConfiguration),
    codec: Option[Class[_ <: CompressionCodec]] = None): Unit = self.withScope {
  // Rename this as hadoopConf internally to avoid shadowing (see SPARK-2038).
  val hadoopConf = conf
  hadoopConf.setOutputKeyClass(keyClass)
  hadoopConf.setOutputValueClass(valueClass)
  conf.setOutputFormat(outputFormatClass)
  for (c <- codec) {
    hadoopConf.setCompressMapOutput(true)
    hadoopConf.set("mapreduce.output.fileoutputformat.compress", "true")
    hadoopConf.setMapOutputCompressorClass(c)
    hadoopConf.set("mapreduce.output.fileoutputformat.compress.codec", c.getCanonicalName)
    hadoopConf.set("mapreduce.output.fileoutputformat.compress.type",
      CompressionType.BLOCK.toString)
  }
  // Use configured output committer if already set
  if (conf.getOutputCommitter == null) {
    hadoopConf.setOutputCommitter(classOf[FileOutputCommitter])
  }
  // When speculation is on and output committer class name contains "Direct", we should warn
  // users that they may loss data if they are using a direct output committer.
  val speculationEnabled = self.conf.getBoolean("spark.speculation", false)
  val outputCommitterClass = hadoopConf.get("mapred.output.committer.class", "")
  if (speculationEnabled && outputCommitterClass.contains("Direct")) {
    val warningMessage =
      s"$outputCommitterClass may be an output committer that writes data directly to " +
        "the final location. Because speculation is enabled, this output committer may " +
        "cause data loss (see the case in SPARK-10063). If possible, please use an output " +
        "committer that does not have this behavior (e.g. FileOutputCommitter)."
    logWarning(warningMessage)
  }
  FileOutputFormat.setOutputPath(hadoopConf,
    SparkHadoopWriterUtils.createPathFromString(path, hadoopConf))
  saveAsHadoopDataset(hadoopConf)
}

def saveAsHadoopDataset(conf: JobConf): Unit = self.withScope {
  val config = new HadoopMapRedWriteConfigUtil[K, V](new SerializableJobConf(conf))
  SparkHadoopWriter.write(
    rdd = self,
    config = config)
}

def write[K, V: ClassTag](
    rdd: RDD[(K, V)],
    config: HadoopWriteConfigUtil[K, V]): Unit = {
  // Extract context and configuration from RDD.
  val sparkContext = rdd.context
  val commitJobId = rdd.id
  // Set up a job.
  val jobTrackerId = createJobTrackerID(new Date())
  val jobContext = config.createJobContext(jobTrackerId, commitJobId)
  config.initOutputFormat(jobContext)
  config.assertConf(jobContext, rdd.conf)
  val committer = config.createCommitter(commitJobId)
  committer.setupJob(jobContext)
  try {
    val ret = sparkContext.runJob(rdd, (context: TaskContext, iter: Iterator[(K, V)]) => {
      val attemptId = (context.stageAttemptNumber << 16) | context.attemptNumber
      executeTask(
        context = context,
        config = config,
        jobTrackerId = jobTrackerId,
        commitJobId = commitJobId,
        sparkPartitionId = context.partitionId,
        sparkAttemptNumber = attemptId,
        committer = committer,
        iterator = iter)
    })
    committer.commitJob(jobContext, ret)
    logInfo(s"Job ${jobContext.getJobID} committed.")
  } catch {
    case cause: Throwable =>
      logError(s"Aborting job ${jobContext.getJobID}.", cause)
      committer.abortJob(jobContext)
      throw new SparkException("Job aborted.", cause)
  }
}
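The attemptId expression in write packs two counters into one Int: the stage attempt number goes into the high bits and the task attempt number into the low 16 bits. The following is a minimal standalone sketch of that bit packing. The object AttemptIds and the unpack helper are hypothetical names introduced only for illustration; they are not part of Spark.

```scala
object AttemptIds {
  // Same expression as in SparkHadoopWriter.write: stage attempt in the
  // high bits, task attempt in the low 16 bits.
  def pack(stageAttemptNumber: Int, attemptNumber: Int): Int =
    (stageAttemptNumber << 16) | attemptNumber

  // Hypothetical inverse for illustration; only meaningful while
  // attemptNumber stays below 65536.
  def unpack(id: Int): (Int, Int) = (id >>> 16, id & 0xFFFF)
}
```

For example, AttemptIds.pack(1, 0) yields 65536 and AttemptIds.pack(2, 5) yields 131077, so retries of the same task in different stage attempts get distinct IDs as long as the task attempt number fits in 16 bits.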

write then calls SparkContext.runJob, which in turn calls dagScheduler.runJob:

def runJob[T, U: ClassTag](
    rdd: RDD[T],
    func: (TaskContext, Iterator[T]) => U,
    partitions: Seq[Int],
    resultHandler: (Int, U) => Unit): Unit = {
  if (stopped.get()) {
    throw new IllegalStateException("SparkContext has been shutdown")
  }
  val callSite = getCallSite
  val cleanedFunc = clean(func)
  logInfo("Starting job: " + callSite.shortForm)
  if (conf.getBoolean("spark.logLineage", false)) {
    logInfo("RDD's recursive dependencies:\n" + rdd.toDebugString)
  }
  dagScheduler.runJob(rdd, cleanedFunc, partitions, callSite, resultHandler, localProperties.get)
  progressBar.foreach(_.finishAll())
  rdd.doCheckpoint()
}

def runJob[T, U](
    rdd: RDD[T],
    func: (TaskContext, Iterator[T]) => U,
    partitions: Seq[Int],
    callSite: CallSite,
    resultHandler: (Int, U) => Unit,
    properties: Properties): Unit = {
  val start = System.nanoTime
  val waiter = submitJob(rdd, func, partitions, callSite, resultHandler, properties)
  ThreadUtils.awaitReady(waiter.completionFuture, Duration.Inf)
  waiter.completionFuture.value.get match {
    case scala.util.Success(_) =>
      logInfo("Job %d finished: %s, took %f s".format
        (waiter.jobId, callSite.shortForm, (System.nanoTime - start) / 1e9))
    case scala.util.Failure(exception) =>
      logInfo("Job %d failed: %s, took %f s".format
        (waiter.jobId, callSite.shortForm, (System.nanoTime - start) / 1e9))
      // SPARK-8644: Include user stack trace in exceptions coming from DAGScheduler.
      val callerStackTrace = Thread.currentThread().getStackTrace.tail
      exception.setStackTrace(exception.getStackTrace ++ callerStackTrace)
      throw exception
  }
}
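DAGScheduler.runJob is a blocking wrapper around the asynchronous submitJob: it gets a waiter back immediately, then blocks on the waiter's completionFuture. The following is a rough standalone sketch of that pattern, under these assumptions: Waiter is a hypothetical stand-in for JobWaiter, the job is just a function run on a separate thread, and scala.concurrent.Await.ready is used where Spark calls ThreadUtils.awaitReady.

```scala
import scala.concurrent.{Await, Future, Promise}
import scala.concurrent.duration.Duration
import scala.util.{Failure, Success}

object BlockingSubmit {
  // Hypothetical stand-in for JobWaiter: exposes a future that the
  // worker thread completes when the job ends.
  final class Waiter(val jobId: Int) {
    private val promise = Promise[Unit]()
    def completionFuture: Future[Unit] = promise.future
    def jobFinished(): Unit = promise.trySuccess(())
    def jobFailed(e: Exception): Unit = promise.tryFailure(e)
  }

  // Asynchronous part: hand the work to another thread and return at once.
  def submitJob(jobId: Int, work: () => Unit): Waiter = {
    val waiter = new Waiter(jobId)
    val worker = new Thread(new Runnable {
      def run(): Unit =
        try { work(); waiter.jobFinished() }
        catch { case e: Exception => waiter.jobFailed(e) }
    })
    worker.setDaemon(true)
    worker.start()
    waiter
  }

  // Synchronous wrapper: block until the future completes, then inspect it.
  def runJob(jobId: Int, work: () => Unit): String = {
    val waiter = submitJob(jobId, work)
    Await.ready(waiter.completionFuture, Duration.Inf)
    waiter.completionFuture.value.get match {
      case Success(_) => s"Job $jobId finished"
      case Failure(e) => s"Job $jobId failed: ${e.getMessage}"
    }
  }
}
```

The caller of runJob sees a synchronous call, while the work itself is driven by another thread. This is the same split that lets an Action block the user thread while the scheduler does the work.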

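As shown, DAGScheduler.runJob calls submitJob. submitJob wraps the job submission in a message and posts it to the event queue inside DAGScheduler (DAGSchedulerEventProcessLoop), which has a dedicated eventThread for processing these event messages: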

private[spark] val eventThread = new Thread(name) {
  setDaemon(true)
  override def run(): Unit = {
    try {
      while (!stopped.get) {
        val event = eventQueue.take()
        try {
          onReceive(event)
        } catch {
          case NonFatal(e) =>
            try {
              onError(e)
            } catch {
              case NonFatal(e) => logError("Unexpected error in " + name, e)
            }
        }
      }
    } catch {
      case ie: InterruptedException => // exit even if eventQueue is not empty
      case NonFatal(e) => logError("Unexpected error in " + name, e)
    }
  }
}

private def doOnReceive(event: DAGSchedulerEvent): Unit = event match {
  case JobSubmitted(jobId, rdd, func, partitions, callSite, listener, properties) =>
    dagScheduler.handleJobSubmitted(jobId, rdd, func, partitions, callSite, listener, properties)
  case MapStageSubmitted(jobId, dependency, callSite, listener, properties) =>
    dagScheduler.handleMapStageSubmitted(jobId, dependency, callSite, listener, properties)
  case StageCancelled(stageId, reason) =>
    dagScheduler.handleStageCancellation(stageId, reason)
  case JobCancelled(jobId, reason) =>
    dagScheduler.handleJobCancellation(jobId, reason)
  case JobGroupCancelled(groupId) =>
    dagScheduler.handleJobGroupCancelled(groupId)
  case AllJobsCancelled =>
    dagScheduler.doCancelAllJobs()
  case ExecutorAdded(execId, host) =>
    dagScheduler.handleExecutorAdded(execId, host)
  case ExecutorLost(execId, reason) =>
    val workerLost = reason match {
      case SlaveLost(_, true) => true
      case _ => false
    }
    dagScheduler.handleExecutorLost(execId, workerLost)
  case WorkerRemoved(workerId, host, message) =>
    dagScheduler.handleWorkerRemoved(workerId, host, message)
  case BeginEvent(task, taskInfo) =>
    dagScheduler.handleBeginEvent(task, taskInfo)
  case SpeculativeTaskSubmitted(task) =>
    dagScheduler.handleSpeculativeTaskSubmitted(task)
  case GettingResultEvent(taskInfo) =>
    dagScheduler.handleGetTaskResult(taskInfo)
  case completion: CompletionEvent =>
    dagScheduler.handleTaskCompletion(completion)
  case TaskSetFailed(taskSet, reason, exception) =>
    dagScheduler.handleTaskSetFailed(taskSet, reason, exception)
  case ResubmitFailedStages =>
    dagScheduler.resubmitFailedStages()
}
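The event-queue mechanism above (callers post a message and return, while a single daemon thread takes messages off a blocking queue and dispatches them by pattern matching) can be sketched in a few lines of plain Scala. This is a simplified model, not Spark's EventLoop: the names MiniEventLoop, Loop, Event and the two event case classes are hypothetical, and error handling is reduced to a println.

```scala
import java.util.concurrent.{CountDownLatch, LinkedBlockingDeque, TimeUnit}
import java.util.concurrent.atomic.AtomicBoolean
import scala.collection.mutable.ListBuffer
import scala.util.control.NonFatal

object MiniEventLoop {
  // Hypothetical event types standing in for DAGSchedulerEvent subclasses.
  sealed trait Event
  final case class JobSubmitted(jobId: Int) extends Event
  final case class JobCancelled(jobId: Int) extends Event

  final class Loop(loopName: String)(onReceive: Event => Unit) {
    private val queue = new LinkedBlockingDeque[Event]()
    private val stopped = new AtomicBoolean(false)

    private val thread = new Thread(loopName) {
      setDaemon(true)
      override def run(): Unit =
        try {
          while (!stopped.get) {
            val event = queue.take() // blocks until an event is posted
            try onReceive(event)
            catch { case NonFatal(e) => println(s"error in $loopName: $e") }
          }
        } catch {
          case _: InterruptedException => // stop() interrupts a blocked take()
        }
    }

    def start(): Unit = thread.start()
    def post(event: Event): Unit = queue.put(event) // returns immediately
    def stop(): Unit = if (stopped.compareAndSet(false, true)) thread.interrupt()
  }

  // Posts two events and returns the order in which the loop thread handled them.
  def demo(): List[String] = {
    val handled = ListBuffer.empty[String] // written only by the loop thread
    val done = new CountDownLatch(2)
    val loop = new Loop("mini-event-loop")({
      case JobSubmitted(id) => handled += s"submitted-$id"; done.countDown()
      case JobCancelled(id) => handled += s"cancelled-$id"; done.countDown()
    })
    loop.start()
    loop.post(JobSubmitted(0))
    loop.post(JobCancelled(0))
    done.await(5, TimeUnit.SECONDS) // wait until both events were handled
    loop.stop()
    handled.toList
  }
}
```

Because only one thread consumes the queue, the handlers run strictly one after another in posting order. That is presumably why the handle* methods in DAGScheduler can mutate scheduler state without extra locking.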

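As we can see, the job-submission event is ultimately handled by dagScheduler itself, in handleJobSubmitted. At this point the job has been submitted.

Next, let's look at how the submission is actually handled: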

private[scheduler] def handleJobSubmitted(jobId: Int,
    finalRDD: RDD[_],
    func: (TaskContext, Iterator[_]) => _,
    partitions: Array[Int],
    callSite: CallSite,
    listener: JobListener,
    properties: Properties) {
  var finalStage: ResultStage = null
  try {
    finalStage = createResultStage(finalRDD, func, partitions, jobId, callSite)
  } catch {
    case e: BarrierJobSlotsNumberCheckFailed =>
      val numCheckFailures = barrierJobIdToNumTasksCheckFailures.compute(jobId,
        new BiFunction[Int, Int, Int] {
          override def apply(key: Int, value: Int): Int = value + 1
        })
      if (numCheckFailures <= maxFailureNumTasksCheck) {
        messageScheduler.schedule(
          new Runnable {
            override def run(): Unit = eventProcessLoop.post(JobSubmitted(jobId, finalRDD, func,
              partitions, callSite, listener, properties))
          },
          timeIntervalNumTasksCheck,
          TimeUnit.SECONDS
        )
        return
      } else {
        barrierJobIdToNumTasksCheckFailures.remove(jobId)
        listener.jobFailed(e)
        return
      }
    case e: Exception =>
      // Notify the listener so that the waiting caller is not left blocked.
      listener.jobFailed(e)
      return
  }
  barrierJobIdToNumTasksCheckFailures.remove(jobId)
  val job = new ActiveJob(jobId, finalStage, callSite, listener, properties)
  clearCacheLocs()
  val jobSubmissionTime = clock.getTimeMillis()
  jobIdToActiveJob(jobId) = job
  activeJobs += job
  finalStage.setActiveJob(job)
  val stageIds = jobIdToStageIds(jobId).toArray
  val stageInfos = stageIds.flatMap(id => stageIdToStage.get(id).map(_.latestInfo))
  listenerBus.post(
    SparkListenerJobStart(job.jobId, jobSubmissionTime, stageInfos, properties))
  submitStage(finalStage)
}

At first glance, handleJobSubmitted mainly creates the ResultStage and submits it. Let's see how the ResultStage is created:

private def createResultStage(
    rdd: RDD[_],
    func: (TaskContext, Iterator[_]) => _,
    partitions: Array[Int],
    jobId: Int,
    callSite: CallSite): ResultStage = {
  checkBarrierStageWithDynamicAllocation(rdd)
  checkBarrierStageWithNumSlots(rdd)
  checkBarrierStageWithRDDChainPattern(rdd, partitions.toSet.size)
  val parents = getOrCreateParentStages(rdd, jobId)
  val id = nextStageId.getAndIncrement()
  val stage = new ResultStage(id, rdd, func, partitions, parents, jobId, callSite)
  stageIdToStage(id) = stage
  updateJobIdStageIdMaps(jobId, stage)
  stage
}

Note that getOrCreateParentStages keeps tracing backwards through the lineage and splits it into stages:

private def getOrCreateParentStages(rdd: RDD[_], firstJobId: Int): List[Stage] = {
  getShuffleDependencies(rdd).map { shuffleDep =>
    getOrCreateShuffleMapStage(shuffleDep, firstJobId)
  }.toList
}

private[scheduler] def getShuffleDependencies(
    rdd: RDD[_]): HashSet[ShuffleDependency[_, _, _]] = {
  val parents = new HashSet[ShuffleDependency[_, _, _]]
  val visited = new HashSet[RDD[_]]
  val waitingForVisit = new ArrayStack[RDD[_]]
  waitingForVisit.push(rdd)
  while (waitingForVisit.nonEmpty) {
    val toVisit = waitingForVisit.pop()
    if (!visited(toVisit)) {
      visited += toVisit
      toVisit.dependencies.foreach {
        case shuffleDep: ShuffleDependency[_, _, _] =>
          parents += shuffleDep
        case dependency =>
          waitingForVisit.push(dependency.rdd)
      }
    }
  }
  parents
}

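The logic is as follows: when a ShuffleDependency is encountered, it is recorded as a stage boundary (getOrCreateParentStages later creates a ShuffleMapStage for it) and the traversal stops along that path. For any other dependency, the traversal continues into the parent RDD until it reaches a ShuffleDependency. As a result, only the nearest shuffle dependencies of the RDD are returned.

The dependencies of the current RDD are obtained as follows: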
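To make the traversal concrete, here is a minimal sketch on a toy lineage graph. Everything in it is a hypothetical model (Node, Narrow, Shuffle and immediateShuffleDeps are illustrative names, not Spark classes); it only mirrors the shape of the loop in getShuffleDependencies.

```scala
import scala.collection.mutable

object ShuffleDeps {
  // Hypothetical miniature of an RDD lineage.
  sealed trait Dep { def parent: Node }
  final case class Narrow(parent: Node) extends Dep
  final case class Shuffle(id: Int, parent: Node) extends Dep
  final case class Node(name: String, deps: List[Dep] = Nil)

  // Record a shuffle edge and stop there; keep walking through narrow edges.
  def immediateShuffleDeps(start: Node): Set[Shuffle] = {
    val parents = mutable.HashSet.empty[Shuffle]
    val visited = mutable.HashSet.empty[Node]
    var waiting = List(start) // used as a stack
    while (waiting.nonEmpty) {
      val toVisit = waiting.head
      waiting = waiting.tail
      if (!visited(toVisit)) {
        visited += toVisit
        toVisit.deps.foreach {
          case s: Shuffle => parents += s
          case Narrow(p)  => waiting = p :: waiting
        }
      }
    }
    parents.toSet
  }

  // Lineage: A <-shuffle 0- B <-narrow- C <-shuffle 1- D <-narrow- E
  val a = Node("A")
  val b = Node("B", List(Shuffle(0, a)))
  val c = Node("C", List(Narrow(b)))
  val d = Node("D", List(Shuffle(1, c)))
  val e = Node("E", List(Narrow(d)))
}
```

Starting from E, the sketch returns only shuffle 1: the walk passes through the narrow edge to D, records the shuffle edge D to C, and does not look past it. Shuffle 0 is only found when the walk starts from C or B.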

final def dependencies: Seq[Dependency[_]] = {
  checkpointRDD.map(r => List(new OneToOneDependency(r))).getOrElse {
    if (dependencies_ == null) {
      stateLock.synchronized {
        if (dependencies_ == null) {
          dependencies_ = getDependencies
        }
      }
    }
    dependencies_
  }
}
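The dependencies accessor above combines two ideas: a checkpoint, if present, replaces the whole lineage with a single dependency, and otherwise the dependency list is computed lazily at most once using double-checked locking. A minimal sketch of that shape follows, assuming a hypothetical Lineage class in which checkpoint plays the role of checkpointRDD and compute plays the role of getDependencies.

```scala
import java.util.concurrent.atomic.AtomicInteger

object LazyDeps {
  // Hypothetical stand-in for an RDD; strings stand in for dependencies.
  final class Lineage(checkpoint: Option[String], compute: () => Seq[String]) {
    private val stateLock = new Object
    // @volatile so that a published value is visible to other threads.
    @volatile private var deps: Seq[String] = null

    def dependencies: Seq[String] =
      checkpoint.map(c => Seq(c)).getOrElse {
        if (deps == null) {       // first check, without the lock
          stateLock.synchronized {
            if (deps == null) {   // second check, under the lock
              deps = compute()
            }
          }
        }
        deps
      }
  }

  // Calls dependencies twice and reports how often compute actually ran.
  def demo(): (Seq[String], Int) = {
    val calls = new AtomicInteger(0)
    val lineage = new Lineage(None, () => { calls.incrementAndGet(); Seq("parent") })
    lineage.dependencies
    val second = lineage.dependencies
    (second, calls.get)
  }
}
```

In demo, compute runs only once even though dependencies is read twice. With a checkpoint present, compute is never consulted.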

If the RDD has not been checkpointed, its dependencies are obtained through getDependencies.

getDependencies is implemented by several RDD subclasses. In ShuffledRDD it returns:

override def getDependencies: Seq[Dependency[_]] = {
  val serializer = userSpecifiedSerializer.getOrElse {
    val serializerManager = SparkEnv.get.serializerManager
    if (mapSideCombine) {
      serializerManager.getSerializer(implicitly[ClassTag[K]], implicitly[ClassTag[C]])
    } else {
      serializerManager.getSerializer(implicitly[ClassTag[K]], implicitly[ClassTag[V]])
    }
  }
  List(new ShuffleDependency(prev, part, serializer, keyOrdering, aggregator, mapSideCombine))
}

Once the ShuffleDependency of the RDD has been obtained, the Stage is created through getOrCreateShuffleMapStage:

private def getOrCreateShuffleMapStage(
    shuffleDep: ShuffleDependency[_, _, _],
    firstJobId: Int): ShuffleMapStage = {
  shuffleIdToMapStage.get(shuffleDep.shuffleId) match {
    case Some(stage) =>
      stage
    case None =>
      getMissingAncestorShuffleDependencies(shuffleDep.rdd).foreach { dep =>
        if (!shuffleIdToMapStage.contains(dep.shuffleId)) {
          createShuffleMapStage(dep, firstJobId)
        }
      }
      // Finally, create a stage for the given shuffle dependency.
      createShuffleMapStage(shuffleDep, firstJobId)
  }
}

def createShuffleMapStage(shuffleDep: ShuffleDependency[_, _, _], jobId: Int): ShuffleMapStage = {
  val rdd = shuffleDep.rdd
  checkBarrierStageWithDynamicAllocation(rdd)
  checkBarrierStageWithNumSlots(rdd)
  checkBarrierStageWithRDDChainPattern(rdd, rdd.getNumPartitions)
  // The number of tasks follows the RDD's partitions: one task per partition.
  val numTasks = rdd.partitions.length
  val parents = getOrCreateParentStages(rdd, jobId)
  val id = nextStageId.getAndIncrement()
  // A ShuffleMapStage holds a stageId generated by the DAGScheduler (on the driver), the number
  // of tasks, the parent stages, the shuffle dependency and a reference to the mapOutputTracker.
  val stage = new ShuffleMapStage(
    id, rdd, numTasks, parents, jobId, rdd.creationSite, shuffleDep, mapOutputTracker)
  stageIdToStage(id) = stage
  shuffleIdToMapStage(shuffleDep.shuffleId) = stage
  updateJobIdStageIdMaps(jobId, stage)
  if (!mapOutputTracker.containsShuffle(shuffleDep.shuffleId)) {
    // Registers this shuffle (mainly its number of partitions) in a map kept by the
    // mapOutputTracker; the information is later looked up in that map by shuffleId.
    mapOutputTracker.registerShuffle(shuffleDep.shuffleId, rdd.partitions.length)
  }
  stage
}
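Two properties of the code above are easy to miss: a ShuffleMapStage is created at most once per shuffleId, and parent stages are created (and receive their stage IDs) before the stage that depends on them. Here is a small model of both, with hypothetical names throughout (StageBuilder, Builder, MapStage and so on). It uses plain recursion for brevity, whereas Spark collects the missing ancestors via getMissingAncestorShuffleDependencies.

```scala
import scala.collection.mutable

object StageBuilder {
  // Hypothetical miniature of an RDD lineage.
  sealed trait Dep { def parent: Node }
  final case class Narrow(parent: Node) extends Dep
  final case class Shuffle(shuffleId: Int, parent: Node) extends Dep
  final case class Node(name: String, numPartitions: Int, deps: List[Dep] = Nil)

  final case class MapStage(id: Int, shuffleId: Int, numTasks: Int, parentIds: List[Int])

  final class Builder {
    private var nextStageId = 0
    val shuffleIdToMapStage = mutable.LinkedHashMap.empty[Int, MapStage]

    // Nearest shuffle edges reachable through narrow edges only.
    private def shuffleDeps(node: Node): List[Shuffle] = node.deps.flatMap {
      case s: Shuffle => List(s)
      case Narrow(p)  => shuffleDeps(p)
    }.distinct

    def getOrCreate(dep: Shuffle): MapStage =
      shuffleIdToMapStage.getOrElse(dep.shuffleId, {
        // Parents first, so they receive the smaller stage IDs.
        val parents = shuffleDeps(dep.parent).map(getOrCreate)
        // One task per partition of the map-side node.
        val stage = MapStage(nextStageId, dep.shuffleId, dep.parent.numPartitions,
          parents.map(_.id))
        nextStageId += 1
        shuffleIdToMapStage(dep.shuffleId) = stage
        stage
      })
  }

  // Lineage: A (4 partitions) <-shuffle 0- B (2 partitions) <-shuffle 1- final node
  def demo(): List[MapStage] = {
    val a = Node("A", 4)
    val b = Node("B", 2, List(Shuffle(0, a)))
    val builder = new Builder
    builder.getOrCreate(Shuffle(1, b))
    builder.getOrCreate(Shuffle(1, b)) // second call hits the cache, no new stage
    builder.shuffleIdToMapStage.values.toList
  }
}
```

In demo, shuffle 0 becomes stage 0 with 4 tasks and shuffle 1 becomes stage 1 with 2 tasks and parent stage 0. Asking for shuffle 1 a second time returns the cached stage instead of allocating a new ID.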

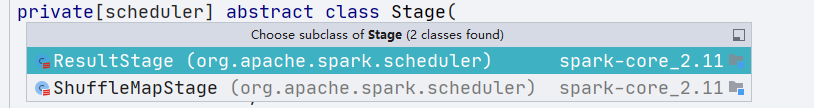

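createShuffleMapStage creates a ShuffleMapStage, which is what we usually call a shuffle stage. It extends Stage, which has two implementations: ShuffleMapStage and ResultStage.

Once the stages have been created, a collection of ShuffleMapStage objects is returned. Let's go back to the createResultStage method: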

private def createResultStage(
    rdd: RDD[_],
    func: (TaskContext, Iterator[_]) => _,
    partitions: Array[Int],
    jobId: Int,
    callSite: CallSite): ResultStage = {
  checkBarrierStageWithDynamicAllocation(rdd)
  checkBarrierStageWithNumSlots(rdd)
  checkBarrierStageWithRDDChainPattern(rdd, partitions.toSet.size)
  val parents = getOrCreateParentStages(rdd, jobId)
  val id = nextStageId.getAndIncrement()
  val stage = new ResultStage(id, rdd, func, partitions, parents, jobId, callSite)
  stageIdToStage(id) = stage
  updateJobIdStageIdMaps(jobId, stage)
  stage
}

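getOrCreateParentStages returns a list of ShuffleMapStage objects. When a job is submitted, stages are split by tracing the lineage from back to front, so the last stage is always a ResultStage, the so-called finalStage.

Once the stages have been split, they are submitted for execution. submitStage traces backwards and submits the parent stages first: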

private def submitStage(stage: Stage) {
  val jobId = activeJobForStage(stage)
  if (jobId.isDefined) {
    if (!waitingStages(stage) && !runningStages(stage) && !failedStages(stage)) {
      val missing = getMissingParentStages(stage).sortBy(_.id)
      logDebug("missing: " + missing)
      if (missing.isEmpty) {
        logInfo("Submitting " + stage + " (" + stage.rdd + "), which has no missing parents")
        submitMissingTasks(stage, jobId.get)
      } else {
        for (parent <- missing) {
          submitStage(parent)
        }
        waitingStages += stage
      }
    }
  } else {
    abortStage(stage, "No active job for stage " + stage.id, None)
  }
}
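The recursion in submitStage means that only stages without missing parents are launched right away; every other stage is parked in waitingStages. The sketch below models this on a three-stage chain. It is a simplification with hypothetical names (SubmitOrder, Scheduler, launched), and the complete method is an assumed stand-in for the step where the scheduler resubmits waiting child stages after a parent finishes, which is not shown in this article.

```scala
import scala.collection.mutable

object SubmitOrder {
  final case class Stage(id: Int, parents: List[Stage])

  final class Scheduler {
    val waiting = mutable.LinkedHashSet.empty[Stage]
    val running = mutable.LinkedHashSet.empty[Stage]
    val finished = mutable.HashSet.empty[Stage]
    val launched = mutable.ListBuffer.empty[Int] // order in which stages start

    private def missingParents(stage: Stage): List[Stage] =
      stage.parents.filterNot(finished).sortBy(_.id)

    def submitStage(stage: Stage): Unit =
      if (!waiting(stage) && !running(stage)) {
        val missing = missingParents(stage)
        if (missing.isEmpty) {
          running += stage          // stands in for submitMissingTasks
          launched += stage.id
        } else {
          missing.foreach(submitStage)
          waiting += stage
        }
      }

    // Assumed completion hook: mark the stage done and retry its waiting children.
    def complete(stage: Stage): Unit = {
      running -= stage
      finished += stage
      val children = waiting.filter(_.parents.contains(stage)).toList.sortBy(_.id)
      waiting --= children
      children.foreach(submitStage)
    }
  }

  // Chain: stage 0 <- stage 1 <- stage 2 (the final stage)
  def demo(): (List[Int], List[Int]) = {
    val s0 = Stage(0, Nil)
    val s1 = Stage(1, List(s0))
    val s2 = Stage(2, List(s1))
    val scheduler = new Scheduler
    scheduler.submitStage(s2)
    val afterSubmit = scheduler.launched.toList
    scheduler.complete(s0)
    scheduler.complete(s1)
    (afterSubmit, scheduler.launched.toList)
  }
}
```

Submitting the final stage launches only stage 0; stages 1 and 2 start once their parents have completed, which gives the launch order 0, 1, 2.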

Here getMissingParentStages finds the parent stages that are not yet available:

private def getMissingParentStages(stage: Stage): List[Stage] = {
  val missing = new HashSet[Stage]
  val visited = new HashSet[RDD[_]]
  val waitingForVisit = new ArrayStack[RDD[_]]
  def visit(rdd: RDD[_]) {
    if (!visited(rdd)) {
      visited += rdd
      val rddHasUncachedPartitions = getCacheLocs(rdd).contains(Nil)
      if (rddHasUncachedPartitions) {
        for (dep <- rdd.dependencies) {
          dep match {
            case shufDep: ShuffleDependency[_, _, _] =>
              val mapStage = getOrCreateShuffleMapStage(shufDep, stage.firstJobId)
              if (!mapStage.isAvailable) {
                missing += mapStage
              }
            case narrowDep: NarrowDependency[_] =>
              waitingForVisit.push(narrowDep.rdd)
          }
        }
      }
    }
  }
  waitingForVisit.push(stage.rdd)
  while (waitingForVisit.nonEmpty) {
    visit(waitingForVisit.pop())
  }
  missing.toList
}

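Finally, submitMissingTasks submits the tasks that belong to the stage.

From this we can see that Spark splits stages at ShuffleDependency boundaries: every ShuffleDependency marks the start of a new stage. Within a stage, the individual units of computation are Tasks, and each stage has its own set of Tasks, one per partition as shown in createShuffleMapStage.

In the next chapter we will look in detail at how a stage is submitted and how Tasks are split and submitted.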