This series is based on spark-2.4.6.

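The previous section showed that Spark ultimately submits a Stage through submitMissingTasks. This section looks at how that method works, and at how Tasks are created and submitted.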

```scala
private def submitMissingTasks(stage: Stage, jobId: Int) {
  val partitionsToCompute: Seq[Int] = stage.findMissingPartitions()
  val properties = jobIdToActiveJob(jobId).properties
  runningStages += stage
  stage match {
    case s: ShuffleMapStage =>
      outputCommitCoordinator.stageStart(stage = s.id, maxPartitionId = s.numPartitions - 1)
    case s: ResultStage =>
      outputCommitCoordinator.stageStart(
        stage = s.id, maxPartitionId = s.rdd.partitions.length - 1)
  }

  val taskIdToLocations: Map[Int, Seq[TaskLocation]] = try {
    stage match {
      case s: ShuffleMapStage =>
        partitionsToCompute.map { id => (id, getPreferredLocs(stage.rdd, id))}.toMap
      case s: ResultStage =>
        partitionsToCompute.map { id =>
          val p = s.partitions(id)
          (id, getPreferredLocs(stage.rdd, p))
        }.toMap
    }
  } catch {
    case NonFatal(e) =>
      stage.makeNewStageAttempt(partitionsToCompute.size)
      listenerBus.post(SparkListenerStageSubmitted(stage.latestInfo, properties))
      abortStage(stage, s"Task creation failed: $e\n${Utils.exceptionString(e)}", Some(e))
      runningStages -= stage
      return
  }

  stage.makeNewStageAttempt(partitionsToCompute.size, taskIdToLocations.values.toSeq)
  if (partitionsToCompute.nonEmpty) {
    stage.latestInfo.submissionTime = Some(clock.getTimeMillis())
  }
  listenerBus.post(SparkListenerStageSubmitted(stage.latestInfo, properties))

  var taskBinary: Broadcast[Array[Byte]] = null
  var partitions: Array[Partition] = null
  try {
    var taskBinaryBytes: Array[Byte] = null
    RDDCheckpointData.synchronized {
      taskBinaryBytes = stage match {
        case stage: ShuffleMapStage =>
          JavaUtils.bufferToArray(
            closureSerializer.serialize((stage.rdd, stage.shuffleDep): AnyRef))
        case stage: ResultStage =>
          JavaUtils.bufferToArray(closureSerializer.serialize((stage.rdd, stage.func): AnyRef))
      }
      partitions = stage.rdd.partitions
    }
    taskBinary = sc.broadcast(taskBinaryBytes)
  } catch {
    case e: NotSerializableException =>
      abortStage(stage, "Task not serializable: " + e.toString, Some(e))
      runningStages -= stage
      return
    case e: Throwable =>
      abortStage(stage, s"Task serialization failed: $e\n${Utils.exceptionString(e)}", Some(e))
      runningStages -= stage
      return
  }

  val tasks: Seq[Task[_]] = try {
    val serializedTaskMetrics = closureSerializer.serialize(stage.latestInfo.taskMetrics).array()
    stage match {
      case stage: ShuffleMapStage =>
        stage.pendingPartitions.clear()
        partitionsToCompute.map { id =>
          val locs = taskIdToLocations(id)
          val part = partitions(id)
          stage.pendingPartitions += id
          new ShuffleMapTask(stage.id, stage.latestInfo.attemptNumber,
            taskBinary, part, locs, properties, serializedTaskMetrics, Option(jobId),
            Option(sc.applicationId), sc.applicationAttemptId, stage.rdd.isBarrier())
        }
      case stage: ResultStage =>
        partitionsToCompute.map { id =>
          val p: Int = stage.partitions(id)
          val part = partitions(p)
          val locs = taskIdToLocations(id)
          new ResultTask(stage.id, stage.latestInfo.attemptNumber,
            taskBinary, part, locs, id, properties, serializedTaskMetrics,
            Option(jobId), Option(sc.applicationId), sc.applicationAttemptId,
            stage.rdd.isBarrier())
        }
    }
  } catch {
    case NonFatal(e) =>
      abortStage(stage, s"Task creation failed: $e\n${Utils.exceptionString(e)}", Some(e))
      runningStages -= stage
      return
  }

  if (tasks.size > 0) {
    logInfo(s"Submitting ${tasks.size} missing tasks from $stage (${stage.rdd}) (first 15 " +
      s"tasks are for partitions ${tasks.take(15).map(_.partitionId)})")
    taskScheduler.submitTasks(new TaskSet(
      tasks.toArray, stage.id, stage.latestInfo.attemptNumber, jobId, properties))
  } else {
    markStageAsFinished(stage, None)
    stage match {
      case stage: ShuffleMapStage =>
        markMapStageJobsAsFinished(stage)
      case stage : ResultStage =>
        logDebug(s"Stage ${stage} is actually done; (partitions: ${stage.numPartitions})")
    }
    submitWaitingChildStages(stage)
  }
}
```

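The code above is fairly long, so first a quick note: as the earlier code showed, Spark has only two kinds of Stage:

- ShuffleMapStage

- ResultStage

The last stage submitted for a job is always a ResultStage.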

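First, `val partitionsToCompute: Seq[Int] = stage.findMissingPartitions()` finds the partitions of the current Stage that still need to be computed. For a ShuffleMapStage the lookup goes through the following methods (a ResultStage instead returns the partitions of its job that have not finished yet):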

```scala
// ShuffleMapStage
override def findMissingPartitions(): Seq[Int] = {
  mapOutputTrackerMaster
    .findMissingPartitions(shuffleDep.shuffleId)
    .getOrElse(0 until numPartitions)
}

// MapOutputTrackerMaster
def findMissingPartitions(shuffleId: Int): Option[Seq[Int]] = {
  shuffleStatuses.get(shuffleId).map(_.findMissingPartitions())
}

// ShuffleStatus
def findMissingPartitions(): Seq[Int] = synchronized {
  val missing = (0 until numPartitions).filter(id => mapStatuses(id) == null)
  assert(missing.size == numPartitions - _numAvailableOutputs,
    s"${missing.size} missing, expected ${numPartitions - _numAvailableOutputs}")
  missing
}
```

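As you can see, Spark keeps one ShuffleStatus per shuffle (that is, per ShuffleMapStage), and it records the MapStatus of every map partition. A partition whose MapStatus is still null has not produced output yet and is reported as missing; if the shuffle is not registered at all, every partition is treated as missing.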
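As a rough illustration of the lookup above, here is a toy model. `ToyShuffleStatus` and `missingFor` are hypothetical names, and each map output is simplified to an `Option[String]` location instead of a real `MapStatus`; the sketch only shows the rule "an empty slot means missing, an unknown shuffle means everything is missing".

```scala
// Hypothetical model of ShuffleStatus: one slot per map partition;
// None plays the role of a null MapStatus (no map output registered yet).
final class ToyShuffleStatus(val numPartitions: Int) {
  private val mapStatuses = Array.fill[Option[String]](numPartitions)(None)

  // Record that map partition `id` finished and its output lives at `location`.
  def addMapOutput(id: Int, location: String): Unit = synchronized {
    mapStatuses(id) = Some(location)
  }

  // Same rule as ShuffleStatus.findMissingPartitions: partitions without output are missing.
  def findMissingPartitions(): Seq[Int] = synchronized {
    (0 until numPartitions).filter(id => mapStatuses(id).isEmpty)
  }
}

// Mirrors MapOutputTrackerMaster plus ShuffleMapStage: an unknown shuffle means
// that every partition still has to be computed.
def missingFor(statuses: Map[Int, ToyShuffleStatus], shuffleId: Int, numPartitions: Int): Seq[Int] =
  statuses.get(shuffleId).map(_.findMissingPartitions()).getOrElse(0 until numPartitions)

// Usage: map partitions 1 and 3 have finished, so 0 and 2 are still missing.
val status = new ToyShuffleStatus(4)
status.addMapOutput(1, "host-a")
status.addMapOutput(3, "host-b")
val missing = missingFor(Map(0 -> status), shuffleId = 0, numPartitions = 4)
```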

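outputCommitCoordinator.stageStart registers the current Stage with the OutputCommitCoordinator, which later decides which task attempt is allowed to commit output for each partition.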

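Next, getPreferredLocs obtains the preferred location of each partition to be computed, so that tasks can later be assigned to Executors close to their data.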

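Then the tasks are created. For a ShuffleMapStage, one ShuffleMapTask is created per partition to compute (a ResultStage creates one ResultTask per partition in the same way):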

```scala
case stage: ShuffleMapStage =>
  stage.pendingPartitions.clear()
  partitionsToCompute.map { id =>
    val locs = taskIdToLocations(id)
    val part = partitions(id)
    stage.pendingPartitions += id
    new ShuffleMapTask(stage.id, stage.latestInfo.attemptNumber,
      taskBinary, part, locs, properties, serializedTaskMetrics, Option(jobId),
      Option(sc.applicationId), sc.applicationAttemptId, stage.rdd.isBarrier())
  }
```
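One detail of the two branches in submitMissingTasks is easy to miss: for a ShuffleMapStage the missing id is the RDD partition index itself, while for a ResultStage it is an index into `stage.partitions`, because a job may cover only some of the RDD's partitions. The sketch below models only that mapping; the `Toy*` types and helper functions are hypothetical and carry far less than the real task classes.

```scala
// Hypothetical, heavily simplified task types.
sealed trait ToyTask { def partitionId: Int }
final case class ToyShuffleMapTask(stageId: Int, partitionId: Int, locs: Seq[String]) extends ToyTask
final case class ToyResultTask(stageId: Int, partitionId: Int, outputId: Int, locs: Seq[String])
  extends ToyTask

// ShuffleMapStage: the missing id is directly the RDD partition index.
def toyShuffleMapTasks(stageId: Int, missing: Seq[Int],
                       locs: Map[Int, Seq[String]]): Seq[ToyTask] =
  missing.map(id => ToyShuffleMapTask(stageId, id, locs.getOrElse(id, Nil)))

// ResultStage: the missing id is an output index; stagePartitions(id) gives the
// RDD partition that is actually computed. Locations stay keyed by the output index.
def toyResultTasks(stageId: Int, missing: Seq[Int], stagePartitions: Array[Int],
                   locs: Map[Int, Seq[String]]): Seq[ToyTask] =
  missing.map { id =>
    val p = stagePartitions(id)
    ToyResultTask(stageId, p, id, locs.getOrElse(id, Nil))
  }

// Usage: a job that only covers RDD partitions 5 and 7.
val resultTasks = toyResultTasks(2, Seq(0, 1), Array(5, 7), Map(0 -> Seq("host-a")))
val mapTasks = toyShuffleMapTasks(1, Seq(0, 2), Map.empty)
```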

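Before the tasks are created, the stage's RDD together with its ShuffleDependency (for a ResultStage, the RDD and the result function func) is serialized once and broadcast; each task receives only the broadcast handle taskBinary: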

```scala
taskBinaryBytes = stage match {
  case stage: ShuffleMapStage =>
    JavaUtils.bufferToArray(
      closureSerializer.serialize((stage.rdd, stage.shuffleDep): AnyRef))
  case stage: ResultStage =>
    JavaUtils.bufferToArray(closureSerializer.serialize((stage.rdd, stage.func): AnyRef))
}

taskBinary = sc.broadcast(taskBinaryBytes)
```
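The point of broadcasting taskBinary is that the stage's RDD and dependency are serialized once per stage rather than once per task. The sketch below illustrates this with plain Java serialization (in Spark 2.4 the closureSerializer is a JavaSerializer). `ToyBroadcast` is a hypothetical stand-in for a `Broadcast[Array[Byte]]`, and a `(String, Int)` tuple stands in for `(stage.rdd, stage.shuffleDep)`.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}

// Java serialization round trip.
def toBytes(value: AnyRef): Array[Byte] = {
  val buffer = new ByteArrayOutputStream()
  val out = new ObjectOutputStream(buffer)
  try out.writeObject(value) finally out.close()
  buffer.toByteArray
}

def fromBytes[T](bytes: Array[Byte]): T = {
  val in = new ObjectInputStream(new ByteArrayInputStream(bytes))
  try in.readObject().asInstanceOf[T] finally in.close()
}

// Hypothetical stand-in for Broadcast[Array[Byte]]: one shared, read-only holder.
final class ToyBroadcast(val value: Array[Byte])

// Hypothetical task that keeps only its partition id and the shared handle.
final case class ToyTaskWithBinary(partitionId: Int, binary: ToyBroadcast)

// A (String, Int) tuple stands in for (stage.rdd, stage.shuffleDep).
val stageBinary: (String, Int) = ("ShuffledRDD[3]", 0)
val taskBinary = new ToyBroadcast(toBytes(stageBinary))            // serialized once per stage
val tasks = (0 until 3).map(p => ToyTaskWithBinary(p, taskBinary)) // all tasks share one handle

// On the executor side, each task deserializes its own copy from the shared bytes.
val decoded = fromBytes[(String, Int)](tasks(2).binary.value)
```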

At this point all ShuffleMapTasks of the ShuffleMapStage have been created. They are wrapped in a TaskSet and submitted through taskScheduler:

```scala
taskScheduler.submitTasks(new TaskSet(
  tasks.toArray, stage.id, stage.latestInfo.attemptNumber, jobId, properties))
```

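In yarn-cluster mode the task scheduler is YarnClusterScheduler, a subclass of TaskSchedulerImpl, so submitTasks is ultimately implemented in TaskSchedulerImpl: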

```scala
override def submitTasks(taskSet: TaskSet) {
  val tasks = taskSet.tasks
  this.synchronized {
    val manager = createTaskSetManager(taskSet, maxTaskFailures)
    val stage = taskSet.stageId
    val stageTaskSets =
      taskSetsByStageIdAndAttempt.getOrElseUpdate(stage, new HashMap[Int, TaskSetManager])
    // Mark older attempts of this stage as zombies so they launch no new tasks
    stageTaskSets.foreach { case (_, ts) =>
      ts.isZombie = true
    }
    stageTaskSets(taskSet.stageAttemptId) = manager
    schedulableBuilder.addTaskSetManager(manager, manager.taskSet.properties)

    if (!isLocal && !hasReceivedTask) {
      starvationTimer.scheduleAtFixedRate(new TimerTask() {
        override def run() {
          if (!hasLaunchedTask) {
            logWarning("Initial job has not accepted any resources; " +
              "check your cluster UI to ensure that workers are registered " +
              "and have sufficient resources")
          } else {
            this.cancel()
          }
        }
      }, STARVATION_TIMEOUT_MS, STARVATION_TIMEOUT_MS)
    }
    hasReceivedTask = true
  }
  backend.reviveOffers()
}
```

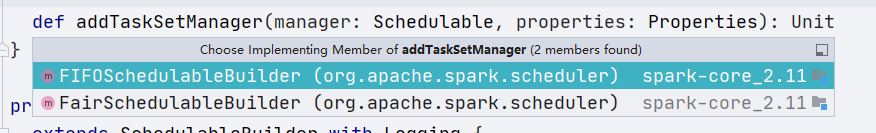

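Here all the tasks are placed into a single TaskSetManager, which is then added to the scheduling pool through schedulableBuilder.addTaskSetManager. The builder has two implementations, FIFOSchedulableBuilder and FairSchedulableBuilder; which one is used depends on the spark.scheduler.mode setting, which defaults to FIFO.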
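In the default FIFO mode, the pool orders TaskSetManagers with Spark's FIFOSchedulingAlgorithm: lower priority value first, then lower stage id. The fourth argument of `new TaskSet(...)` in submitMissingTasks is the priority, and DAGScheduler passes `jobId` there, so earlier jobs go first. A minimal sketch of that comparison, using a hypothetical `ToyTaskSetInfo` in place of the real Schedulable:

```scala
// Hypothetical minimal view of a TaskSetManager as seen by the FIFO pool.
final case class ToyTaskSetInfo(name: String, priority: Int, stageId: Int)

// Same comparison as FIFOSchedulingAlgorithm: smaller priority (earlier jobId) first,
// and within the same job, smaller stageId first.
def fifoLessThan(a: ToyTaskSetInfo, b: ToyTaskSetInfo): Boolean = {
  var res = math.signum(a.priority - b.priority)
  if (res == 0) res = math.signum(a.stageId - b.stageId)
  res < 0
}

val queue = Seq(
  ToyTaskSetInfo("job1-stage4", priority = 1, stageId = 4),
  ToyTaskSetInfo("job0-stage2", priority = 0, stageId = 2),
  ToyTaskSetInfo("job1-stage3", priority = 1, stageId = 3))

// Roughly what rootPool.getSortedTaskSetQueue yields under FIFO.
val ordered = queue.sortWith(fifoLessThan).map(_.name)
```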

When the TaskSetManager is created, it runs the following logic:

```scala
// In the TaskSetManager constructor: add every task, in reverse index order
for (i <- (0 until numTasks).reverse) {
  addPendingTask(i)
}

private[spark] def addPendingTask(index: Int) {
  for (loc <- tasks(index).preferredLocations) {
    loc match {
      case e: ExecutorCacheTaskLocation =>
        pendingTasksForExecutor.getOrElseUpdate(e.executorId, new ArrayBuffer) += index
      case e: HDFSCacheTaskLocation =>
        val exe = sched.getExecutorsAliveOnHost(loc.host)
        exe match {
          case Some(set) =>
            for (e <- set) {
              pendingTasksForExecutor.getOrElseUpdate(e, new ArrayBuffer) += index
            }
            logInfo(s"Pending task $index has a cached location at ${e.host} " +
              ", where there are executors " + set.mkString(","))
          case None => logDebug(s"Pending task $index has a cached location at ${e.host} " +
            ", but there are no executors alive there.")
        }
      case _ =>
    }
    pendingTasksForHost.getOrElseUpdate(loc.host, new ArrayBuffer) += index
    for (rack <- sched.getRackForHost(loc.host)) {
      pendingTasksForRack.getOrElseUpdate(rack, new ArrayBuffer) += index
    }
  }

  if (tasks(index).preferredLocations == Nil) {
    pendingTasksWithNoPrefs += index
  }

  allPendingTasks += index // No point scanning this whole list to find the old task there
}
```

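addPendingTask files each task index into different maps according to the type of each preferred location of the task's partition. For an ExecutorCacheTaskLocation (the partition is cached by an executor), the index goes into pendingTasksForExecutor under that executorId; for an HDFSCacheTaskLocation, it is added under every executor currently alive on that host. Regardless of type, every location's host is recorded in pendingTasksForHost and its rack in pendingTasksForRack; tasks without preferences go into pendingTasksWithNoPrefs, and every task goes into allPendingTasks. When tasks are assigned later, these maps let Spark prefer tasks whose data is close to the offered resource.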
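The indexing done by addPendingTask can be sketched as follows. The location classes and `ToyPendingTasks` are hypothetical simplifications of TaskLocation and TaskSetManager, and the executor and rack lookups are passed in as plain functions. Tasks are added in reverse index order, as in the constructor above; since Spark's dequeueTaskFromList takes indices from the end of each list, lower-numbered tasks are handed out first.

```scala
import scala.collection.mutable

// Hypothetical location types mirroring the cases in addPendingTask.
sealed trait ToyLocation { def host: String }
final case class ExecutorCacheLoc(host: String, executorId: String) extends ToyLocation
final case class HdfsCacheLoc(host: String) extends ToyLocation
final case class HostLoc(host: String) extends ToyLocation

final class ToyPendingTasks(
    aliveExecutorsOnHost: String => Option[Set[String]],
    rackForHost: String => Option[String]) {
  private def newList = mutable.ArrayBuffer.empty[Int]
  val forExecutor = mutable.HashMap.empty[String, mutable.ArrayBuffer[Int]]
  val forHost = mutable.HashMap.empty[String, mutable.ArrayBuffer[Int]]
  val forRack = mutable.HashMap.empty[String, mutable.ArrayBuffer[Int]]
  val noPrefs = mutable.ArrayBuffer.empty[Int]
  val all = mutable.ArrayBuffer.empty[Int]

  def add(index: Int, prefs: Seq[ToyLocation]): Unit = {
    for (loc <- prefs) {
      loc match {
        case ExecutorCacheLoc(_, execId) =>
          forExecutor.getOrElseUpdate(execId, newList) += index
        case HdfsCacheLoc(host) =>
          // Cached in HDFS memory: every executor alive on that host counts as process-local.
          for (execs <- aliveExecutorsOnHost(host); e <- execs)
            forExecutor.getOrElseUpdate(e, newList) += index
        case _ =>
      }
      // Every location also contributes its host and (if known) its rack.
      forHost.getOrElseUpdate(loc.host, newList) += index
      for (rack <- rackForHost(loc.host))
        forRack.getOrElseUpdate(rack, newList) += index
    }
    if (prefs.isEmpty) noPrefs += index
    all += index
  }
}

// Usage: four tasks with different kinds of preferred locations.
val pending = new ToyPendingTasks(
  host => if (host == "h1") Some(Set("exec-1", "exec-2")) else None,
  host => Some(if (host == "h1") "rack-a" else "rack-b"))
val prefs: IndexedSeq[Seq[ToyLocation]] = IndexedSeq(
  Seq(ExecutorCacheLoc("h2", "exec-9")),
  Seq(HdfsCacheLoc("h1")),
  Seq(HostLoc("h2")),
  Seq.empty)
for (i <- prefs.indices.reverse) pending.add(i, prefs(i))
```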

Finally, backend.reviveOffers() sends a ReviveOffers message to the driver's own endpoint:

```scala
override def reviveOffers() {
  driverEndpoint.send(ReviveOffers)
}
```

The message is handled in CoarseGrainedSchedulerBackend (its DriverEndpoint) as follows:

```scala
override def receive: PartialFunction[Any, Unit] = {
  case StatusUpdate(executorId, taskId, state, data) =>
    scheduler.statusUpdate(taskId, state, data.value)
    if (TaskState.isFinished(state)) {
      executorDataMap.get(executorId) match {
        case Some(executorInfo) =>
          executorInfo.freeCores += scheduler.CPUS_PER_TASK
          makeOffers(executorId)
        case None =>
      }
    }

  case ReviveOffers =>
    makeOffers()

  case KillTask(taskId, executorId, interruptThread, reason) =>
    executorDataMap.get(executorId) match {
      case Some(executorInfo) =>
        executorInfo.executorEndpoint.send(
          KillTask(taskId, executorId, interruptThread, reason))
      case None =>
    }

  case KillExecutorsOnHost(host) =>
    scheduler.getExecutorsAliveOnHost(host).foreach { exec =>
      killExecutors(exec.toSeq, adjustTargetNumExecutors = false, countFailures = false,
        force = true)
    }

  case UpdateDelegationTokens(newDelegationTokens) =>
    executorDataMap.values.foreach { ed =>
      ed.executorEndpoint.send(UpdateDelegationTokens(newDelegationTokens))
    }

  case RemoveExecutor(executorId, reason) =>
    executorDataMap.get(executorId).foreach(_.executorEndpoint.send(StopExecutor))
    removeExecutor(executorId, reason)
}
```

On ReviveOffers it calls makeOffers, which eventually calls launchTasks to run the tasks:

```scala
private def makeOffers() {
  val taskDescs = withLock {
    val activeExecutors = executorDataMap.filterKeys(executorIsAlive)
    val workOffers = activeExecutors.map {
      case (id, executorData) =>
        new WorkerOffer(id, executorData.executorHost, executorData.freeCores,
          Some(executorData.executorAddress.hostPort))
    }.toIndexedSeq
    scheduler.resourceOffers(workOffers)
  }
  if (!taskDescs.isEmpty) {
    launchTasks(taskDescs)
  }
}
```

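This is where Tasks are assigned to concrete Executors. makeOffers first collects the resources currently available to the application (one WorkerOffer per alive executor, with its free cores), and scheduler.resourceOffers then assigns tasks to those resources: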

```scala
def resourceOffers(offers: IndexedSeq[WorkerOffer]): Seq[Seq[TaskDescription]] = synchronized {
  var newExecAvail = false
  for (o <- offers) {
    if (!hostToExecutors.contains(o.host)) {
      hostToExecutors(o.host) = new HashSet[String]()
    }
    if (!executorIdToRunningTaskIds.contains(o.executorId)) {
      hostToExecutors(o.host) += o.executorId
      executorAdded(o.executorId, o.host)
      executorIdToHost(o.executorId) = o.host
      executorIdToRunningTaskIds(o.executorId) = HashSet[Long]()
      newExecAvail = true
    }
    for (rack <- getRackForHost(o.host)) {
      hostsByRack.getOrElseUpdate(rack, new HashSet[String]()) += o.host
    }
  }

  blacklistTrackerOpt.foreach(_.applyBlacklistTimeout())

  val filteredOffers = blacklistTrackerOpt.map { blacklistTracker =>
    offers.filter { offer =>
      !blacklistTracker.isNodeBlacklisted(offer.host) &&
        !blacklistTracker.isExecutorBlacklisted(offer.executorId)
    }
  }.getOrElse(offers)

  // Everything above mainly registers newly seen executors and filters out blacklisted offers.
  // Shuffle the offers so that tasks are spread randomly across executors.
  val shuffledOffers = shuffleOffers(filteredOffers)
  val tasks = shuffledOffers.map(o => new ArrayBuffer[TaskDescription](o.cores / CPUS_PER_TASK))
  val availableCpus = shuffledOffers.map(o => o.cores).toArray
  val sortedTaskSets = rootPool.getSortedTaskSetQueue
  for (taskSet <- sortedTaskSets) {
    // If a new executor has become available
    if (newExecAvail) {
      // Recompute the locality levels this TaskSet can use
      taskSet.executorAdded()
    }
  }

  for (taskSet <- sortedTaskSets) {
    val availableSlots = availableCpus.map(c => c / CPUS_PER_TASK).sum
    if (taskSet.isBarrier && availableSlots < taskSet.numTasks) {
      // Not enough free slots to launch all tasks of a barrier stage at once:
      // skip this TaskSet in the current round (log statement omitted)
    } else {
      var launchedAnyTask = false
      val addressesWithDescs = ArrayBuffer[(String, TaskDescription)]()
      // Walk this TaskSet's locality levels from most to least local and assign tasks level by level
      for (currentMaxLocality <- taskSet.myLocalityLevels) {
        var launchedTaskAtCurrentMaxLocality = false
        do {
          launchedTaskAtCurrentMaxLocality = resourceOfferSingleTaskSet(taskSet,
            currentMaxLocality, shuffledOffers, availableCpus, tasks, addressesWithDescs)
          launchedAnyTask |= launchedTaskAtCurrentMaxLocality
        } while (launchedTaskAtCurrentMaxLocality)
      }
      if (!launchedAnyTask) {
        taskSet.getCompletelyBlacklistedTaskIfAny(hostToExecutors).foreach { taskIndex =>
          executorIdToRunningTaskIds.find(x => !isExecutorBusy(x._1)) match {
            case Some ((executorId, _)) =>
              if (!unschedulableTaskSetToExpiryTime.contains(taskSet)) {
                blacklistTrackerOpt.foreach(blt => blt.killBlacklistedIdleExecutor(executorId))
                // (the definition of `timeout` and related bookkeeping are omitted here)
                abortTimer.schedule(
                  createUnschedulableTaskSetAbortTimer(taskSet, taskIndex), timeout)
              }
            case None => // Abort Immediately
              taskSet.abortSinceCompletelyBlacklisted(taskIndex)
          }
        }
      } else {
        if (unschedulableTaskSetToExpiryTime.nonEmpty) {
          unschedulableTaskSetToExpiryTime.clear()
        }
      }

      if (launchedAnyTask && taskSet.isBarrier) {
        if (addressesWithDescs.size != taskSet.numTasks) {
          // (message shortened)
          val errorMsg = s"Fail resource offers for barrier stage ${taskSet.stageId} because only " +
            s"${addressesWithDescs.size} out of a total number of ${taskSet.numTasks} " +
            "tasks got resource offers."
          taskSet.abort(errorMsg)
          throw new SparkException(errorMsg)
        }
        maybeInitBarrierCoordinator()
        val addressesStr = addressesWithDescs
          .sortBy(_._2.partitionId)
          .map(_._1)
          .mkString(",")
        addressesWithDescs.foreach(_._2.properties.setProperty("addresses", addressesStr))
      }
    }
  }

  if (tasks.size > 0) {
    hasLaunchedTask = true
  }
  return tasks
}
```

resourceOffers is a synchronized method, so only one call to it can run at a time.

It then calls resourceOfferSingleTaskSet to assign resources to each TaskSet. The source code is:

```scala
private def resourceOfferSingleTaskSet(
    taskSet: TaskSetManager,
    maxLocality: TaskLocality,
    shuffledOffers: Seq[WorkerOffer],
    availableCpus: Array[Int],
    tasks: IndexedSeq[ArrayBuffer[TaskDescription]],
    addressesWithDescs: ArrayBuffer[(String, TaskDescription)]) : Boolean = {
  var launchedTask = false
  for (i <- 0 until shuffledOffers.size) {
    val execId = shuffledOffers(i).executorId
    val host = shuffledOffers(i).host
    if (availableCpus(i) >= CPUS_PER_TASK) {
      try {
        for (task <- taskSet.resourceOffer(execId, host, maxLocality)) {
          tasks(i) += task
          val tid = task.taskId
          taskIdToTaskSetManager.put(tid, taskSet)
          taskIdToExecutorId(tid) = execId
          executorIdToRunningTaskIds(execId).add(tid)
          availableCpus(i) -= CPUS_PER_TASK
          assert(availableCpus(i) >= 0)
          if (taskSet.isBarrier) {
            addressesWithDescs += (shuffledOffers(i).address.get -> task)
          }
          launchedTask = true
        }
      } catch {
        case e: TaskNotSerializableException =>
          return launchedTask
      }
    }
  }
  return launchedTask
}
```

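resourceOfferSingleTaskSet makes one pass over all offers: for each offer with at least CPUS_PER_TASK free CPUs, it asks the TaskSetManager for a suitable task at the current maximum locality level, and on success records the assignment and deducts the CPUs.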
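The interplay between resourceOffers and resourceOfferSingleTaskSet can be reduced to the following sketch: one pass gives each offer with enough free CPUs at most one task, and the outer loop repeats passes until one launches nothing. `ToyOffer`, `offerOnce`, and handing out tasks in plain FIFO order are assumptions of the sketch; the real code asks `taskSet.resourceOffer(...)` for a task under a locality constraint, shuffles the offers first, and also handles barrier stages and serialization failures.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical stand-in for WorkerOffer.
final case class ToyOffer(executorId: String, host: String, cores: Int)

val CpusPerTask = 1 // plays the role of CPUS_PER_TASK (spark.task.cpus, default 1)

// One pass in the spirit of resourceOfferSingleTaskSet: every offer with enough free CPUs
// gets at most one task; returns true if at least one task was assigned.
def offerOnce(pending: ArrayBuffer[Int],
              offers: IndexedSeq[ToyOffer],
              availableCpus: Array[Int],
              assigned: IndexedSeq[ArrayBuffer[Int]]): Boolean = {
  var launched = false
  for (i <- offers.indices) {
    if (availableCpus(i) >= CpusPerTask && pending.nonEmpty) {
      assigned(i) += pending.remove(0) // toy replacement for taskSet.resourceOffer(...)
      availableCpus(i) -= CpusPerTask
      launched = true
    }
  }
  launched
}

val offers = IndexedSeq(ToyOffer("exec-1", "h1", 2), ToyOffer("exec-2", "h2", 1))
val availableCpus = offers.map(_.cores).toArray
val assigned = offers.map(_ => ArrayBuffer.empty[Int])
val pendingTasks = ArrayBuffer(0, 1, 2, 3, 4)

// Same effect as the do/while in resourceOffers: repeat passes until one assigns nothing.
while (offerOnce(pendingTasks, offers, availableCpus, assigned)) {}
```

With three free cores in total, the first pass assigns tasks 0 and 1, the second assigns task 2 to the executor that still has a free core, and tasks 3 and 4 wait for a later round of offers.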

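The task for an offer is chosen by TaskSetManager.resourceOffer. Recall that when the TaskSetManager was initialized, each task was put into different maps according to the locations of its RDD partition. Here, using the offered resource (mainly the executor id and host), Spark checks whether those maps contain tasks for it; if so, the data of those tasks is close to the resource (in the same process or on the same host), and they are assigned first.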

```scala
def resourceOffer(
    execId: String,
    host: String,
    maxLocality: TaskLocality.TaskLocality)
  : Option[TaskDescription] =
{
  val offerBlacklisted = taskSetBlacklistHelperOpt.exists { blacklist =>
    blacklist.isNodeBlacklistedForTaskSet(host) ||
      blacklist.isExecutorBlacklistedForTaskSet(execId)
  }
  if (!isZombie && !offerBlacklisted) {
    val curTime = clock.getTimeMillis()

    var allowedLocality = maxLocality

    if (maxLocality != TaskLocality.NO_PREF) {
      allowedLocality = getAllowedLocalityLevel(curTime)
      if (allowedLocality > maxLocality) {
        // We're not allowed to search for farther-away tasks
        allowedLocality = maxLocality
      }
    }

    dequeueTask(execId, host, allowedLocality).map { case ((index, taskLocality, speculative)) =>
      val task = tasks(index)
      val taskId = sched.newTaskId()
      // Do various bookkeeping
      copiesRunning(index) += 1
      val attemptNum = taskAttempts(index).size
      val info = new TaskInfo(taskId, index, attemptNum, curTime,
        execId, host, taskLocality, speculative)
      taskInfos(taskId) = info
      taskAttempts(index) = info :: taskAttempts(index)
      if (maxLocality != TaskLocality.NO_PREF) {
        currentLocalityIndex = getLocalityIndex(taskLocality)
        lastLaunchTime = curTime
      }
      // Serialize and return the task
      val serializedTask: ByteBuffer = try {
        ser.serialize(task)
      } catch {
        // A task that cannot be serialized will never succeed
        // (Spark also logs the error and aborts the whole TaskSet here; omitted)
        case NonFatal(e) =>
          throw new TaskNotSerializableException(e)
      }
      if (serializedTask.limit() > TaskSetManager.TASK_SIZE_TO_WARN_KB * 1024 &&
        !emittedTaskSizeWarning) {
        emittedTaskSizeWarning = true
        // (warning about the very large task size omitted)
      }
      addRunningTask(taskId)

      val taskName = s"task ${info.id} in stage ${taskSet.id}"
      sched.dagScheduler.taskStarted(task, info)
      new TaskDescription(
        taskId,
        attemptNum,
        execId,
        taskName,
        index,
        task.partitionId,
        addedFiles,
        addedJars,
        task.localProperties,
        serializedTask)
    }
  } else {
    None
  }
}
```

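dequeueTask uses the executor id and host of the offered resource to look up matching tasks in these maps (built from the locations of each task's RDD partition) and returns those first. If nothing matches, a task can still be assigned, but at a lower locality level, provided the allowed locality level permits it. The search order is PROCESS_LOCAL, NODE_LOCAL, NO_PREF, RACK_LOCAL, ANY, and finally speculative tasks: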

```scala
private def dequeueTask(execId: String, host: String, maxLocality: TaskLocality.Value)
  : Option[(Int, TaskLocality.Value, Boolean)] =
{
  for (index <- dequeueTaskFromList(execId, host, getPendingTasksForExecutor(execId))) {
    return Some((index, TaskLocality.PROCESS_LOCAL, false))
  }

  if (TaskLocality.isAllowed(maxLocality, TaskLocality.NODE_LOCAL)) {
    for (index <- dequeueTaskFromList(execId, host, getPendingTasksForHost(host))) {
      return Some((index, TaskLocality.NODE_LOCAL, false))
    }
  }

  if (TaskLocality.isAllowed(maxLocality, TaskLocality.NO_PREF)) {
    for (index <- dequeueTaskFromList(execId, host, pendingTasksWithNoPrefs)) {
      return Some((index, TaskLocality.PROCESS_LOCAL, false))
    }
  }

  if (TaskLocality.isAllowed(maxLocality, TaskLocality.RACK_LOCAL)) {
    for {
      rack <- sched.getRackForHost(host)
      index <- dequeueTaskFromList(execId, host, getPendingTasksForRack(rack))
    } {
      return Some((index, TaskLocality.RACK_LOCAL, false))
    }
  }

  if (TaskLocality.isAllowed(maxLocality, TaskLocality.ANY)) {
    for (index <- dequeueTaskFromList(execId, host, allPendingTasks)) {
      return Some((index, TaskLocality.ANY, false))
    }
  }

  dequeueSpeculativeTask(execId, host, maxLocality).map {
    case (taskIndex, allowedLocality) => (taskIndex, allowedLocality, true)}
}
```
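The search order of dequeueTask, combined with the locality cap computed in resourceOffer, can be modeled as below. `Locality` follows the order of Spark's TaskLocality (PROCESS_LOCAL < NODE_LOCAL < NO_PREF < RACK_LOCAL < ANY), and `isAllowed` uses the same rule as TaskLocality.isAllowed. `ToyQueues` is hypothetical, and speculative tasks and the delay-scheduling timers are left out.

```scala
import scala.collection.mutable
import scala.collection.mutable.ArrayBuffer

// Same ordering as Spark's TaskLocality: earlier values are "more local".
object Locality extends Enumeration {
  val PROCESS_LOCAL, NODE_LOCAL, NO_PREF, RACK_LOCAL, ANY = Value
  // `condition` is allowed if it is no farther away than `constraint`.
  def isAllowed(constraint: Value, condition: Value): Boolean = condition <= constraint
}

// Hypothetical pending lists, keyed like pendingTasksForExecutor/Host/Rack in TaskSetManager.
final class ToyQueues(
    forExecutor: Map[String, ArrayBuffer[Int]],
    forHost: Map[String, ArrayBuffer[Int]],
    noPrefs: ArrayBuffer[Int],
    forRack: Map[String, ArrayBuffer[Int]],
    all: ArrayBuffer[Int],
    rackForHost: String => Option[String]) {

  private val launched = mutable.Set.empty[Int]

  // Pop indices from the end of a list, skipping tasks already launched via another list.
  private def take(list: ArrayBuffer[Int]): Option[Int] = {
    while (list.nonEmpty) {
      val index = list.remove(list.size - 1)
      if (!launched.contains(index)) {
        launched += index
        return Some(index)
      }
    }
    None
  }

  def dequeue(execId: String, host: String,
              maxLocality: Locality.Value): Option[(Int, Locality.Value)] = {
    import Locality._
    // Only evaluate `pick` (which mutates the lists) when the level is allowed.
    def at(allowed: Boolean, pick: => Option[Int], level: Locality.Value) =
      if (allowed) pick.map(i => (i, level)) else None
    at(true, forExecutor.get(execId).flatMap(take), PROCESS_LOCAL)
      .orElse(at(isAllowed(maxLocality, NODE_LOCAL), forHost.get(host).flatMap(take), NODE_LOCAL))
      // As in Spark, a no-preference task is reported as PROCESS_LOCAL.
      .orElse(at(isAllowed(maxLocality, NO_PREF), take(noPrefs), PROCESS_LOCAL))
      .orElse(at(isAllowed(maxLocality, RACK_LOCAL),
        rackForHost(host).flatMap(forRack.get).flatMap(take), RACK_LOCAL))
      .orElse(at(isAllowed(maxLocality, ANY), take(all), ANY))
  }
}

// Usage setup: task 0 is cached on exec-1, task 1 lives on h1, task 2 has no preference,
// task 3 lives on h3, which shares rack-a with h1.
val queues = new ToyQueues(
  forExecutor = Map("exec-1" -> ArrayBuffer(0)),
  forHost = Map("h1" -> ArrayBuffer(1, 0), "h3" -> ArrayBuffer(3)),
  noPrefs = ArrayBuffer(2),
  forRack = Map("rack-a" -> ArrayBuffer(3, 1, 0)),
  all = ArrayBuffer(3, 2, 1, 0),
  rackForHost = h => if (h == "h1" || h == "h3") Some("rack-a") else None)
```

With the cap at NODE_LOCAL, an offer from exec-1 on h1 only gets tasks 0 and 1; once the cap is relaxed to ANY, the no-preference task and then the rack-local task follow.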

The tasks assigned above are then submitted through launchTasks:

```scala
private def launchTasks(tasks: Seq[Seq[TaskDescription]]) {
  for (task <- tasks.flatten) {
    val serializedTask = TaskDescription.encode(task)
    if (serializedTask.limit() >= maxRpcMessageSize) {
      Option(scheduler.taskIdToTaskSetManager.get(task.taskId)).foreach { taskSetMgr =>
        try {
          var msg = "Serialized task %s:%d was %d bytes, which exceeds max allowed: " +
            "spark.rpc.message.maxSize (%d bytes). Consider increasing " +
            "spark.rpc.message.maxSize or using broadcast variables for large values."
          msg = msg.format(task.taskId, task.index, serializedTask.limit(), maxRpcMessageSize)
          taskSetMgr.abort(msg)
        } catch {
          case e: Exception => logError("Exception in error callback", e)
        }
      }
    }
    else {
      val executorData = executorDataMap(task.executorId)
      executorData.freeCores -= scheduler.CPUS_PER_TASK
      executorData.executorEndpoint.send(LaunchTask(new SerializableBuffer(serializedTask)))
    }
  }
}
```

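As you can see, submitting a task simply means sending a LaunchTask message to the executor it was assigned to. One LaunchTask is sent per task (the loop runs over tasks.flatten), each carrying a single encoded TaskDescription, and each send deducts CPUS_PER_TASK from that executor's free cores. A task whose encoded size reaches maxRpcMessageSize is not sent; its TaskSet is aborted instead.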
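A compact model of launchTasks: each task is checked against a maximum message size, then the executor's free cores are reduced and one message is "sent" per task. `ToyTaskDesc`, `ToyExecutor`, and the `inbox` buffer that stands in for `executorEndpoint.send(LaunchTask(...))` are hypothetical; the 128 MiB default of spark.rpc.message.maxSize is used only as a default parameter value.

```scala
import java.nio.ByteBuffer
import scala.collection.mutable.ArrayBuffer

// Hypothetical stand-ins for TaskDescription and ExecutorData.
final case class ToyTaskDesc(taskId: Long, executorId: String, payload: Array[Byte])
final class ToyExecutor(var freeCores: Int) {
  // Task ids "sent" to this executor, standing in for executorEndpoint.send(LaunchTask(...)).
  val inbox: ArrayBuffer[Long] = ArrayBuffer.empty[Long]
}

val CpusPerTask = 1                              // spark.task.cpus, default 1
val DefaultMaxRpcMessageSize = 128 * 1024 * 1024 // spark.rpc.message.maxSize default (128 MiB)

def launchToyTasks(tasks: Seq[Seq[ToyTaskDesc]],
                   executors: Map[String, ToyExecutor],
                   abort: String => Unit,
                   maxSize: Int = DefaultMaxRpcMessageSize): Unit =
  for (task <- tasks.flatten) {
    val encoded = ByteBuffer.wrap(task.payload) // stand-in for TaskDescription.encode(task)
    if (encoded.limit() >= maxSize) {
      // Too large for one RPC message: abort instead of sending.
      abort(s"Serialized task ${task.taskId} was ${encoded.limit()} bytes, exceeds $maxSize")
    } else {
      val exec = executors(task.executorId)
      exec.freeCores -= CpusPerTask
      exec.inbox += task.taskId                 // one message per task
    }
  }

// Usage: a tiny size limit so that the third task is rejected.
val executors = Map("exec-1" -> new ToyExecutor(2), "exec-2" -> new ToyExecutor(1))
val aborted = ArrayBuffer.empty[String]
launchToyTasks(
  Seq(
    Seq(ToyTaskDesc(1L, "exec-1", Array.fill[Byte](10)(0)),
        ToyTaskDesc(2L, "exec-1", Array.fill[Byte](10)(0))),
    Seq(ToyTaskDesc(3L, "exec-2", Array.fill[Byte](100)(0)))),
  executors,
  abort = msg => { aborted += msg; () },
  maxSize = 64)
```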

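To sum up the process analyzed so far:

- When DAGScheduler runs submitMissingTasks, it checks the type of the submitted Stage (Spark has only two kinds of Stage: ShuffleMapStage and ResultStage), finds the partitions that still need to be computed, and records each partition's preferred locations.
- Based on these partitions, it creates one task per partition: a ShuffleMapTask for a ShuffleMapStage, or a ResultTask for a ResultStage.
- The tasks are wrapped in a TaskSet and submitted through TaskSchedulerImpl.submitTasks, which creates a TaskSetManager inside a synchronized block and then sends ReviveOffers to notify CoarseGrainedSchedulerBackend.
- While the TaskSetManager is being created, addPendingTask puts each task into different maps according to the locations of its partition; the keys are mainly the executorId and host of those locations.
- When CoarseGrainedSchedulerBackend receives ReviveOffers, it calls makeOffers to collect resources and assign tasks: makeOffers first gathers the resources currently available to the application, then calls TaskSchedulerImpl.resourceOffers to assign tasks to them.
- TaskSchedulerImpl.resourceOffers assigns resources to every TaskSet (as far as resources allow), calling resourceOfferSingleTaskSet for each one. It walks through the available resources and, for each one, picks from the TaskSet a task suited to that resource according to data locality, using the maps above, which record the executorId and host of each task's partition.
- Finally, CoarseGrainedSchedulerBackend.launchTasks submits the resulting tasks by sending a LaunchTask message to the corresponding executor.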

This completes task creation, resource assignment, and task submission.

In the next section we will look at how a Task is executed.