1. Create a new Maven project and add the hadoop-common and hadoop-hdfs dependencies to pom.xml.

    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.7.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.7.0</version>
    </dependency>

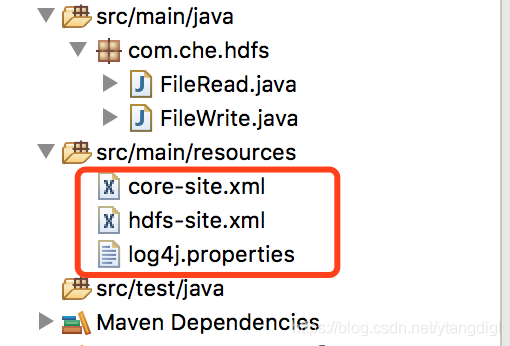

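2. Copy the configuration files core-site.xml, hdfs-site.xml and log4j.properties from the Hadoop deployment on CentOS 7 into the project's resources directory, so that they end up on the classpath.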

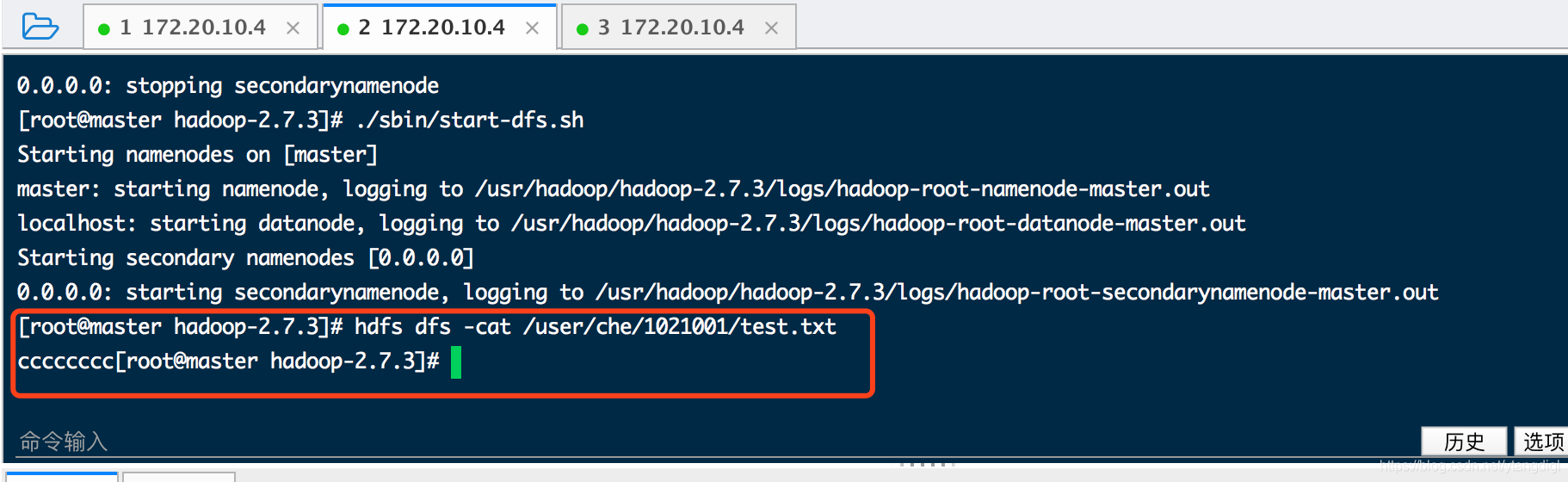
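Hadoop's `Configuration` looks up core-site.xml on the classpath, so it can help to confirm that the copied files are actually visible there before running the examples. The following is a minimal sketch using only the JDK; the class name `ConfigOnClasspathCheck` and the method `missingResources` are made up for this illustration and are not part of Hadoop.

```java
import java.util.ArrayList;
import java.util.List;

public class ConfigOnClasspathCheck {

    /** Returns the given resource names that cannot be found on the classpath. */
    static List<String> missingResources(String... names) {
        ClassLoader loader = ConfigOnClasspathCheck.class.getClassLoader();
        List<String> missing = new ArrayList<>();
        for (String name : names) {
            // getResource returns null when the resource is not on the classpath
            if (loader.getResource(name) == null) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // The three files copied into src/main/resources in step 2
        List<String> missing =
                missingResources("core-site.xml", "hdfs-site.xml", "log4j.properties");
        if (missing.isEmpty()) {
            System.out.println("All Hadoop configuration files are on the classpath.");
        } else {
            System.out.println("Missing from the classpath: " + missing);
        }
    }
}
```

If a file is reported as missing, the usual cause is that it was placed outside src/main/resources or that the project has not been rebuilt since it was copied.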

3. Example: reading a file from HDFS

    package com.che.hdfs;

    import java.io.IOException;
    import java.io.OutputStream;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    /**
     * Reads a file from HDFS and prints its contents to standard output.
     */
    public class FileRead {

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            // HDFS entry point; must match the value of fs.defaultFS in core-site.xml.
            // The key is case-sensitive: "fs.defaultFS", not "fs.defaultFs".
            conf.set("fs.defaultFS", "hdfs://172.20.10.4:9000");

            // Path of the file to read in HDFS
            String filePath = "/user/che/1021001/test.txt";
            Path targetFile = new Path(filePath);

            try {
                FileSystem fs = FileSystem.get(conf);
                if (!fs.exists(targetFile)) {
                    System.out.println("HDFS file " + filePath + " does not exist");
                    return; // nothing to read, so stop here
                }

                OutputStream out = System.out;
                byte[] buffer = new byte[256];
                // try-with-resources closes the HDFS input stream automatically
                try (FSDataInputStream in = fs.open(targetFile)) {
                    int bytesRead;
                    // read() returns -1 at end of stream
                    while ((bytesRead = in.read(buffer)) != -1) {
                        out.write(buffer, 0, bytesRead);
                    }
                }
                // Flush instead of closing, so System.out stays usable
                out.flush();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
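The heart of the read example is the buffered copy loop. The sketch below isolates that loop so it can be run without a cluster: it uses in-memory JDK streams as stand-ins for the HDFS stream and `System.out`. The class name `StreamCopySketch` and its methods are hypothetical names chosen for this illustration. Hadoop also ships a helper with a similar purpose, `org.apache.hadoop.io.IOUtils.copyBytes`, which is not used here so that the sketch stays free of dependencies.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

public class StreamCopySketch {

    /** Copies everything from in to out and returns the number of bytes copied. */
    static long copy(InputStream in, OutputStream out, int bufferSize) throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        int bytesRead;
        // read() returns -1 at end of stream; it fills at most buffer.length bytes per call
        while ((bytesRead = in.read(buffer)) != -1) {
            // write only the bytes that were actually read in this pass
            out.write(buffer, 0, bytesRead);
            total += bytesRead;
        }
        return total;
    }

    /** Runs the copy loop over in-memory streams (stand-ins for HDFS streams). */
    static byte[] copyInMemory(byte[] data, int bufferSize) {
        // try-with-resources closes both streams, even if copy() throws
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            copy(in, out, bufferSize);
            return out.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        // A deliberately small buffer forces several passes through the loop
        byte[] copied = copyInMemory(data, 4);
        System.out.println(new String(copied, StandardCharsets.UTF_8)); // prints "hello hdfs"
    }
}
```

The buffer size only affects how many passes the loop makes, not the result, which is why the 256-byte buffer in the example works for files of any length.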

4. Example: writing a file to HDFS

    package com.che.hdfs;

    import java.io.BufferedInputStream;
    import java.io.File;
    import java.io.FileInputStream;
    import java.io.IOException;
    import java.io.InputStream;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    /**
     * Copies a local file into HDFS.
     */
    public class FileWrite {

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            // HDFS entry point; must match the value of fs.defaultFS in core-site.xml.
            // The key is case-sensitive: "fs.defaultFS", not "fs.defaultFs".
            conf.set("fs.defaultFS", "hdfs://172.20.10.4:9000");

            // Path of the local file to read
            String inFilePath = "/Users/diguoliang/Desktop/test01.txt";
            // Path of the file to create in HDFS
            String outFilePath = "/user/che/1021001/test1.txt";

            File inFile = new File(inFilePath);
            if (!inFile.exists()) {
                System.out.println("Input file " + inFilePath + " does not exist.");
                return; // nothing to copy, so stop here
            }

            try {
                FileSystem fs = FileSystem.get(conf);
                Path outFile = new Path(outFilePath);
                byte[] buffer = new byte[256];
                // try-with-resources closes both streams, even if the copy fails
                try (InputStream in = new BufferedInputStream(new FileInputStream(inFile));
                     FSDataOutputStream out = fs.create(outFile)) {
                    int bytesRead;
                    // read() returns -1 at end of stream
                    while ((bytesRead = in.read(buffer)) != -1) {
                        out.write(buffer, 0, bytesRead);
                    }
                }
            } catch (IOException e) {
                System.out.println("Failed to write file: " + e.getMessage());
                e.printStackTrace();
            }
        }
    }

4.1 Writing to HDFS may fail with the following permission error:

org.apache.hadoop.security.AccessControlException: org.apache.hadoop.security.AccessControlException: Permission denied: user=che, access=WRITE, inode="hadoop":hadoop:supergroup:rwxr-xr-x
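The message says that the target directory is owned by user hadoop and group supergroup with mode rwxr-xr-x, so only the owner may write to it. HDFS uses a POSIX-like owner/group/other model, and the sketch below walks through that decision for the values in the message. It is a simplified illustration, not Hadoop's actual permission checker: it ignores the superuser and ACLs, it parses the mode string with the JDK's `PosixFilePermissions`, and it assumes that user che belongs to a group named che rather than to supergroup (the error message does not state the user's groups). The class and method names are hypothetical.

```java
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

public class PermissionDeniedSketch {

    /**
     * Simplified owner/group/other check for write access.
     * Exactly one permission class applies: owner, else group, else other.
     */
    static boolean canWrite(String user, String userGroup,
                            String owner, String group, String mode) {
        Set<PosixFilePermission> perms = PosixFilePermissions.fromString(mode);
        if (user.equals(owner)) {
            return perms.contains(PosixFilePermission.OWNER_WRITE);
        }
        if (userGroup.equals(group)) {
            return perms.contains(PosixFilePermission.GROUP_WRITE);
        }
        return perms.contains(PosixFilePermission.OTHERS_WRITE);
    }

    public static void main(String[] args) {
        // Values taken from the error message; the group "che" is an assumption
        System.out.println(canWrite("che", "che", "hadoop", "supergroup", "rwxr-xr-x"));
        // prints "false": che falls into the "other" class, which has r-x only

        System.out.println(canWrite("hadoop", "supergroup", "hadoop", "supergroup", "rwxr-xr-x"));
        // prints "true": the owner class has rwx
    }
}
```

Under these assumptions the request is denied because che is neither the owner nor a member of the owning group, and the "other" class has no write bit.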

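One way to get rid of the error is to disable HDFS permission checking: add the following property to Hadoop's hdfs-site.xml and then restart HDFS. In Hadoop 2.x the property is named dfs.permissions.enabled; dfs.permissions is the older, deprecated name for the same setting. Turning permission checks off is only advisable on a development or test cluster.

    <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
    </property>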