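This article shows how to operate the HDFS distributed file system through the Java API in IntelliJ IDEA. It demonstrates two approaches: passing the NameNode URL directly, and using configuration files.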

I. Operating HDFS via the URL

① Code

package com.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

import java.io.*;
import java.net.URI;

/**
 * CRUD operations on HDFS from Java (URL approach).
 * Created by zhoujh on 2018/7/31.
 */
public class JavaHDFSAPIJunit {

    Configuration configuration;
    FileSystem fileSystem;
    // Address of the NameNode
    String HDFS_PATH = "hdfs://node1:9000";

    @Before
    public void before() throws Exception {
        configuration = new Configuration();
        // Connect to the NameNode at HDFS_PATH as the user "hadoop"
        fileSystem = FileSystem.get(new URI(HDFS_PATH), configuration, "hadoop");
    }

    @Test
    public void mkdir() throws Exception { // create a directory
        boolean result = fileSystem.mkdirs(new Path("/logData"));
        System.out.print(result);
    }

    @Test
    public void write() throws Exception { // create a file and write to it
        FSDataOutputStream fsDataOutputStream = fileSystem.create(new Path("/first/hello.txt"));
        fsDataOutputStream.writeBytes("hello hadoop hdfs!");
        fsDataOutputStream.close();
    }

    @Test
    public void read() throws Exception { // print the contents of a file on HDFS
        // try-with-resources closes the reader and the underlying HDFS stream,
        // even if reading fails part-way through
        try (FSDataInputStream fsDataInputStream = fileSystem.open(new Path("/first/hello.txt"));
             BufferedReader br = new BufferedReader(new InputStreamReader(fsDataInputStream, "UTF-8"))) {
            String str;
            while ((str = br.readLine()) != null) { // read line by line
                System.out.println(str);
            }
        }
    }

    @Test
    public void upload() throws Exception { // upload a local file to HDFS
        fileSystem.copyFromLocalFile(new Path("E:/b_hadoop/日志数据/Info/infoLog_20180423.txt.1"), new Path("/input/infoLog_20180423.txt.1"));
        fileSystem.close();
    }

    @Test
    public void rename() throws Exception { // rename a file
        fileSystem.rename(new Path("/first/hello.txt"), new Path("/first/h.txt"));
        fileSystem.close();
    }

    @Test
    public void download() throws Exception { // download a file from HDFS
        fileSystem.copyToLocalFile(new Path("/first/h.txt"), new Path("D:/test"));
        fileSystem.close();
    }

    @Test
    public void query() throws Exception { // list everything directly under a directory
        FileStatus[] fileStatuses = fileSystem.listStatus(new Path("/"));
        for (FileStatus status : fileStatuses) {
            System.out.println(status.getPath().toString());
        }
        fileSystem.close();
    }

    @Test
    public void delete() throws Exception { // delete the /logData directory recursively
        fileSystem.delete(new Path("/logData"), true);
        fileSystem.close();
    }

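    @Test
    public void queryFile() throws Exception { // list everything directly under a directory
        FileStatus[] fileStatuses = fileSystem.listStatus(new Path("/"));
        for (FileStatus status : fileStatuses) {
            System.out.println(status.getPath().toString());
        }
        fileSystem.close();
    }

    @After
    public void destroy() throws Exception {
        // Release the client for tests that did not close it themselves
        if (fileSystem != null) {
            fileSystem.close();
        }
    }
}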
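To make the role of `HDFS_PATH` in the listing above more concrete: the address is an ordinary URI, and the Hadoop client uses its scheme to choose the file system implementation and its host and port to reach the NameNode. The following is only a small sketch using `java.net.URI` from the JDK, with no Hadoop dependency; the class name `HdfsUriParts` and the method `describe` are made up for this illustration.

```java
import java.net.URI;
import java.net.URISyntaxException;

public class HdfsUriParts {

    // Splits an address such as "hdfs://node1:9000" into the parts
    // a client needs: scheme, NameNode host and NameNode RPC port.
    static String describe(String address) throws URISyntaxException {
        URI uri = new URI(address);
        return "scheme=" + uri.getScheme()
                + " host=" + uri.getHost()
                + " port=" + uri.getPort();
    }

    public static void main(String[] args) throws URISyntaxException {
        // prints "scheme=hdfs host=node1 port=9000"
        System.out.println(describe("hdfs://node1:9000"));
    }
}
```

If the host name (here `node1`) cannot be resolved from the development machine, the connection in `before()` will fail, so it is worth checking the local hosts file first.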

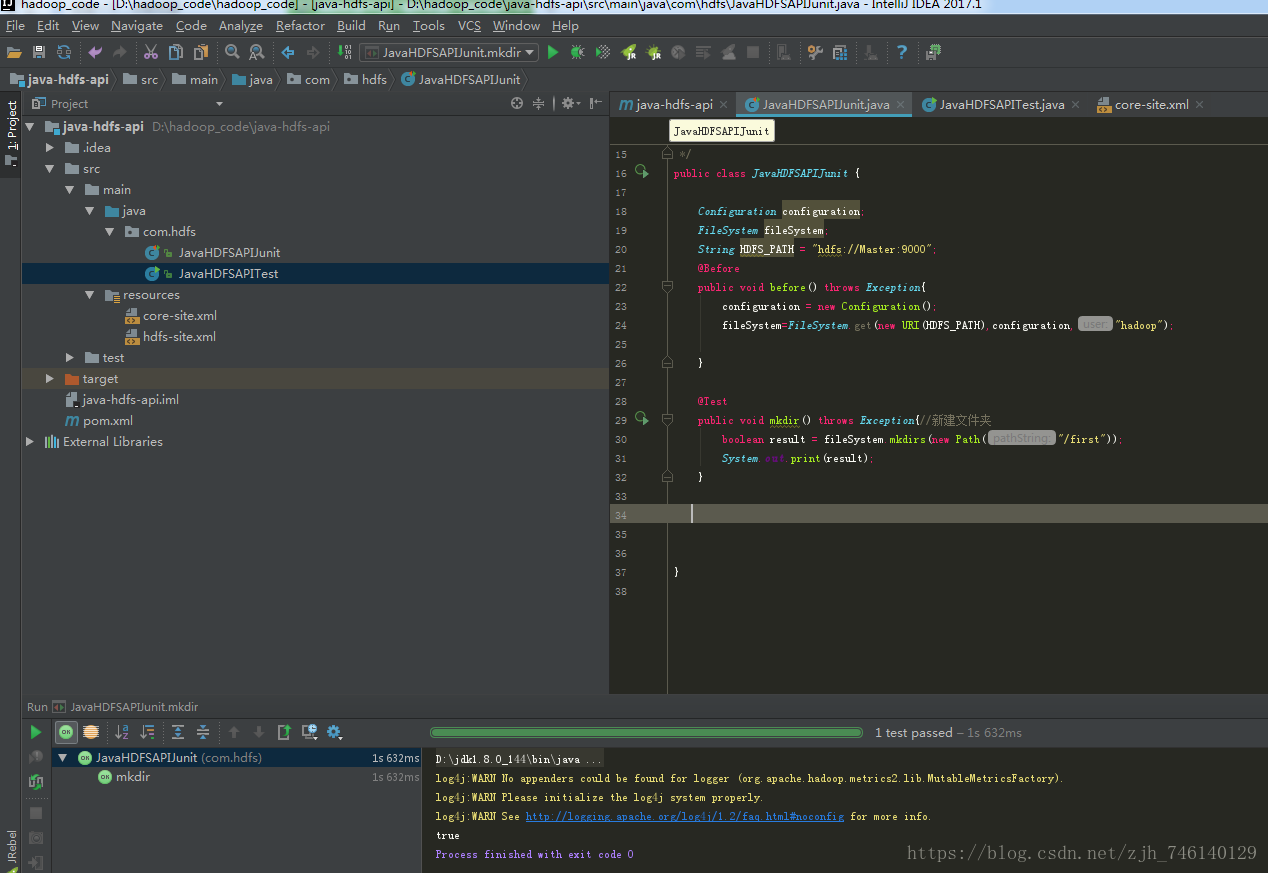

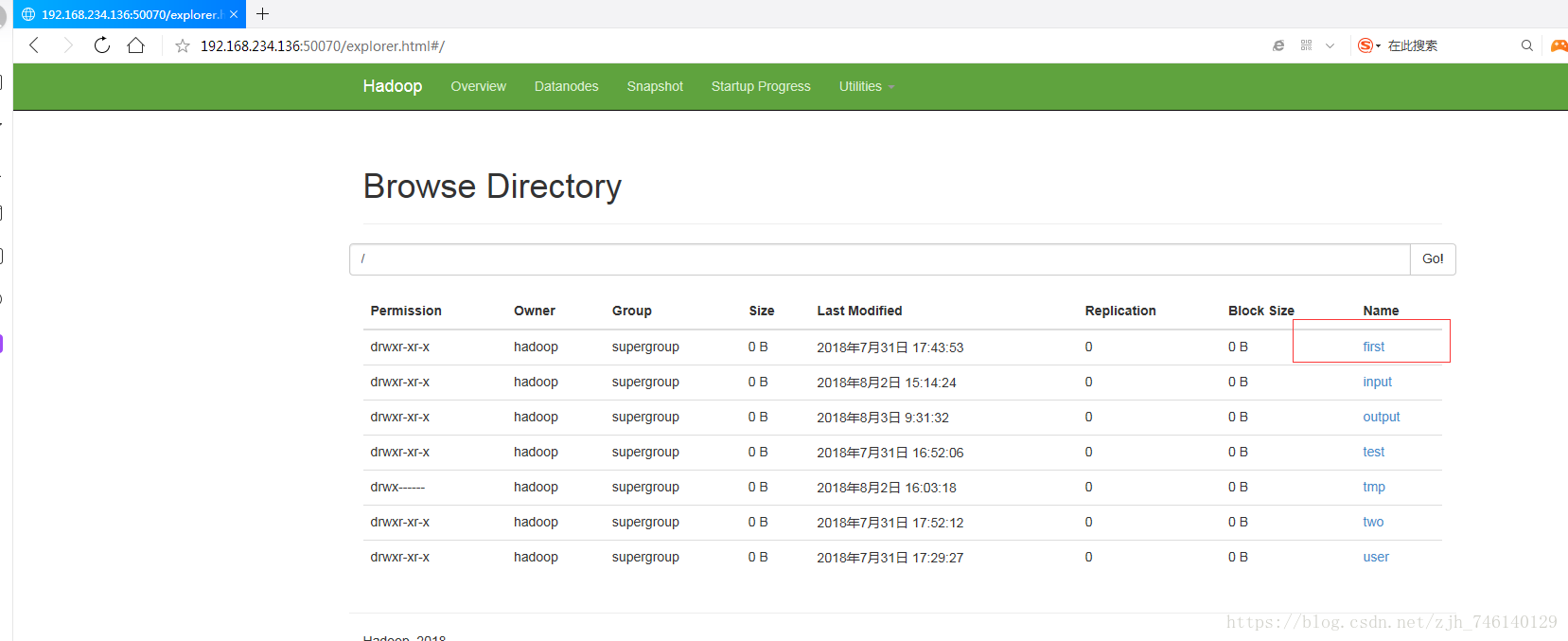
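A side note on the `write()` test in the listing above: `FSDataOutputStream` extends `java.io.DataOutputStream`, whose `writeBytes(String)` keeps only the low byte of each character. That is fine for the ASCII text used in the test, but it would corrupt Chinese text. The sketch below reproduces the effect with a plain `DataOutputStream` over an in-memory buffer, so it runs without a cluster; the class name `WriteBytesDemo` and its helper methods are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class WriteBytesDemo {

    // Writes the text the same way the write() test does.
    static byte[] viaWriteBytes(String text) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeBytes(text); // one byte per char, high byte discarded
        }
        return buffer.toByteArray();
    }

    // Encodes the text as UTF-8 first, then writes the raw bytes.
    static byte[] viaUtf8(String text) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.write(text.getBytes(StandardCharsets.UTF_8));
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        String ascii = "hello hadoop hdfs!";
        String chinese = "\u65e5\u5fd7"; // two Chinese characters
        System.out.println(viaWriteBytes(ascii).length + " vs " + viaUtf8(ascii).length);
        System.out.println(viaWriteBytes(chinese).length + " vs " + viaUtf8(chinese).length);
    }
}
```

For ASCII input both methods produce the same bytes. For non-ASCII input only the UTF-8 variant can be read back correctly, so `write(text.getBytes(StandardCharsets.UTF_8))` is the safer choice when the content may contain Chinese.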

② Operation: creating a folder

③ Result

II. Operating HDFS via configuration files

① Code

package com.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.net.URI;

/**
 * CRUD operations on HDFS from Java (configuration file approach).
 * Created by zhoujh on 2018/7/31.
 */
public class JavaHDFSAPITest {

    // If you run into permission problems, either
    //   1. set the environment variable HADOOP_USER_NAME=hadoop, or
    //   2. add the JVM argument -DHADOOP_USER_NAME=hadoop when running the program.

    Configuration configuration;
    FileSystem fileSystem;
    String HDFS_PATH = "hdfs://Master:9000";

    @Before
    public void before() throws Exception {
        // new Configuration() loads core-site.xml when it is on the classpath.
        // The URI passed below is used for the connection, so it should match
        // the fs.defaultFS value in that file.
        configuration = new Configuration();
        fileSystem = FileSystem.get(new URI(HDFS_PATH), configuration, "hadoop");
    }

    @Test
    public void mkdir() throws Exception { // create a directory
        boolean result = fileSystem.mkdirs(new Path("/input"));
        System.out.print(result);
    }

    @Test
    public void write() throws Exception { // create a file and write to it
        FSDataOutputStream fsDataOutputStream = fileSystem.create(new Path("/two/hello.txt"));
        fsDataOutputStream.writeBytes("hello hadoop hdfs!");
        fsDataOutputStream.close();
    }

    @Test
    public void read() throws Exception { // print the contents of a file on HDFS
        // try-with-resources closes the reader and the underlying HDFS stream,
        // even if reading fails part-way through
        try (FSDataInputStream fsDataInputStream = fileSystem.open(new Path("/two/hello.txt"));
             BufferedReader br = new BufferedReader(new InputStreamReader(fsDataInputStream, "UTF-8"))) {
            String str;
            while ((str = br.readLine()) != null) { // read line by line
                System.out.println(str);
            }
        }
    }

    @Test
    public void upload() throws Exception { // upload a local file to HDFS
        fileSystem.copyFromLocalFile(new Path("D:/two/data.txt"), new Path("/input"));
        fileSystem.close();
    }

    @Test
    public void rename() throws Exception { // rename a file
        fileSystem.rename(new Path("/two/hello.txt"), new Path("/two/h.txt"));
        fileSystem.close();
    }

    @Test
    public void download() throws Exception { // download a file from HDFS
        fileSystem.copyToLocalFile(new Path("/two/h.txt"), new Path("D:/test"));
        fileSystem.close();
    }

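    @Test
    public void query() throws Exception { // list everything directly under a directory
        FileStatus[] fileStatuses = fileSystem.listStatus(new Path("/"));
        for (FileStatus status : fileStatuses) {
            System.out.println(status.getPath().toString());
        }
        fileSystem.close();
    }

    @Test
    public void delete() throws Exception { // delete a file (the recursive flag only matters for directories)
        fileSystem.delete(new Path("/two/h.txt"), true);
        fileSystem.close();
    }

    @After
    public void destroy() throws Exception {
        // Release the client for tests that did not close it themselves
        if (fileSystem != null) {
            fileSystem.close();
        }
    }
}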
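The comment at the top of the class above mentions two ways to set the Hadoop user. As far as I understand the client's behaviour with simple (non-Kerberos) authentication, it looks at the `HADOOP_USER_NAME` environment variable first, then at the JVM system property of the same name, and otherwise falls back to the operating system user. The sketch below only models that lookup order in plain Java; `HadoopUserLookup` and `resolve` are hypothetical names and this is not Hadoop's own code.

```java
public class HadoopUserLookup {

    // Returns the first non-null candidate in the assumed lookup order:
    // environment variable, then JVM system property, then OS user.
    static String resolve(String envValue, String propertyValue, String osUser) {
        if (envValue != null) {
            return envValue;
        }
        if (propertyValue != null) {
            return propertyValue;
        }
        return osUser;
    }

    public static void main(String[] args) {
        String user = resolve(
                System.getenv("HADOOP_USER_NAME"),
                System.getProperty("HADOOP_USER_NAME"),
                System.getProperty("user.name"));
        System.out.println("effective user: " + user);
    }
}
```

Note that the test class passes "hadoop" explicitly as the third argument of `FileSystem.get`, so these settings matter mainly when the user argument is left out.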

② Configuration files

1. core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
    <!-- Base directory for Hadoop's temporary files (by default the NameNode and DataNode directories are placed under hadoop.tmp.dir) -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/usr/local/hadoop/tmp</value>
    </property>
    <!-- URI of the NameNode -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://Master:9000</value>
    </property>
</configuration>
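To show how the `<property>` entries in a file like the one above turn into key/value settings, here is a rough sketch that reads them with the JDK's DOM parser. It is not Hadoop's `Configuration` implementation, which also handles default files, variable substitution and final properties; the class name `SiteXmlReader` and the method `readProperties` are invented for this example.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class SiteXmlReader {

    // Collects every <property> element into a name -> value map,
    // keeping the order in which the properties appear in the file.
    static Map<String, String> readProperties(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        Map<String, String> result = new LinkedHashMap<>();
        NodeList properties = doc.getElementsByTagName("property");
        for (int i = 0; i < properties.getLength(); i++) {
            Element property = (Element) properties.item(i);
            String name = property.getElementsByTagName("name").item(0).getTextContent().trim();
            String value = property.getElementsByTagName("value").item(0).getTextContent().trim();
            result.put(name, value);
        }
        return result;
    }

    public static void main(String[] args) throws Exception {
        String xml = "<configuration>"
                + "<property><name>hadoop.tmp.dir</name><value>file:/usr/local/hadoop/tmp</value></property>"
                + "<property><name>fs.defaultFS</name><value>hdfs://Master:9000</value></property>"
                + "</configuration>";
        // prints "hdfs://Master:9000"
        System.out.println(readProperties(xml).get("fs.defaultFS"));
    }
}
```

In the real client the same value is available through `configuration.get("fs.defaultFS")` once core-site.xml is on the classpath.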

2. hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
    <!-- HTTP address of the Secondary NameNode; Master may be replaced with an IP address.
         Note: in Hadoop 2.x, 50070 is the default port of the NameNode web UI and 50090 is the
         Secondary NameNode default, so adjust the port if both run on the same host. -->
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>Master:50070</value>
    </property>
    <!-- Number of replicas kept for each file block; the default is 3 -->
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <!-- Local filesystem path where the NameNode stores the file system metadata -->
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/usr/local/hadoop/tmp/dfs/name</value>
    </property>
    <!-- Local filesystem path where each DataNode stores its data blocks -->
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/usr/local/hadoop/tmp/dfs/data</value>
    </property>
</configuration>
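The `dfs.replication` setting above determines how much raw disk space a file consumes across the cluster. As a back-of-the-envelope sketch: a file is split into blocks, and each block is stored `dfs.replication` times. The block size of 128 MiB used below is an assumption (it is the usual Hadoop 2.x default for `dfs.blocksize` and is not set in the file above), and the class `ReplicationMath` is purely illustrative.

```java
public class ReplicationMath {

    // Number of blocks needed for a file: ceiling of fileBytes / blockBytes.
    static long blockCount(long fileBytes, long blockBytes) {
        return (fileBytes + blockBytes - 1) / blockBytes;
    }

    // Raw bytes stored across the cluster; the last block only occupies
    // as much space as it actually holds, so this is size times replicas.
    static long rawBytes(long fileBytes, int replication) {
        return fileBytes * replication;
    }

    public static void main(String[] args) {
        long mib = 1024L * 1024L;
        long fileBytes = 300 * mib;   // example file size (assumed)
        long blockBytes = 128 * mib;  // assumed dfs.blocksize

        System.out.println("blocks: " + blockCount(fileBytes, blockBytes));
        System.out.println("raw MiB with replication 1: " + rawBytes(fileBytes, 1) / mib);
        System.out.println("raw MiB with replication 3: " + rawBytes(fileBytes, 3) / mib);
    }
}
```

With a replication factor of 1, as configured here, losing a single DataNode disk loses data, which is acceptable for a test cluster but not for production use.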

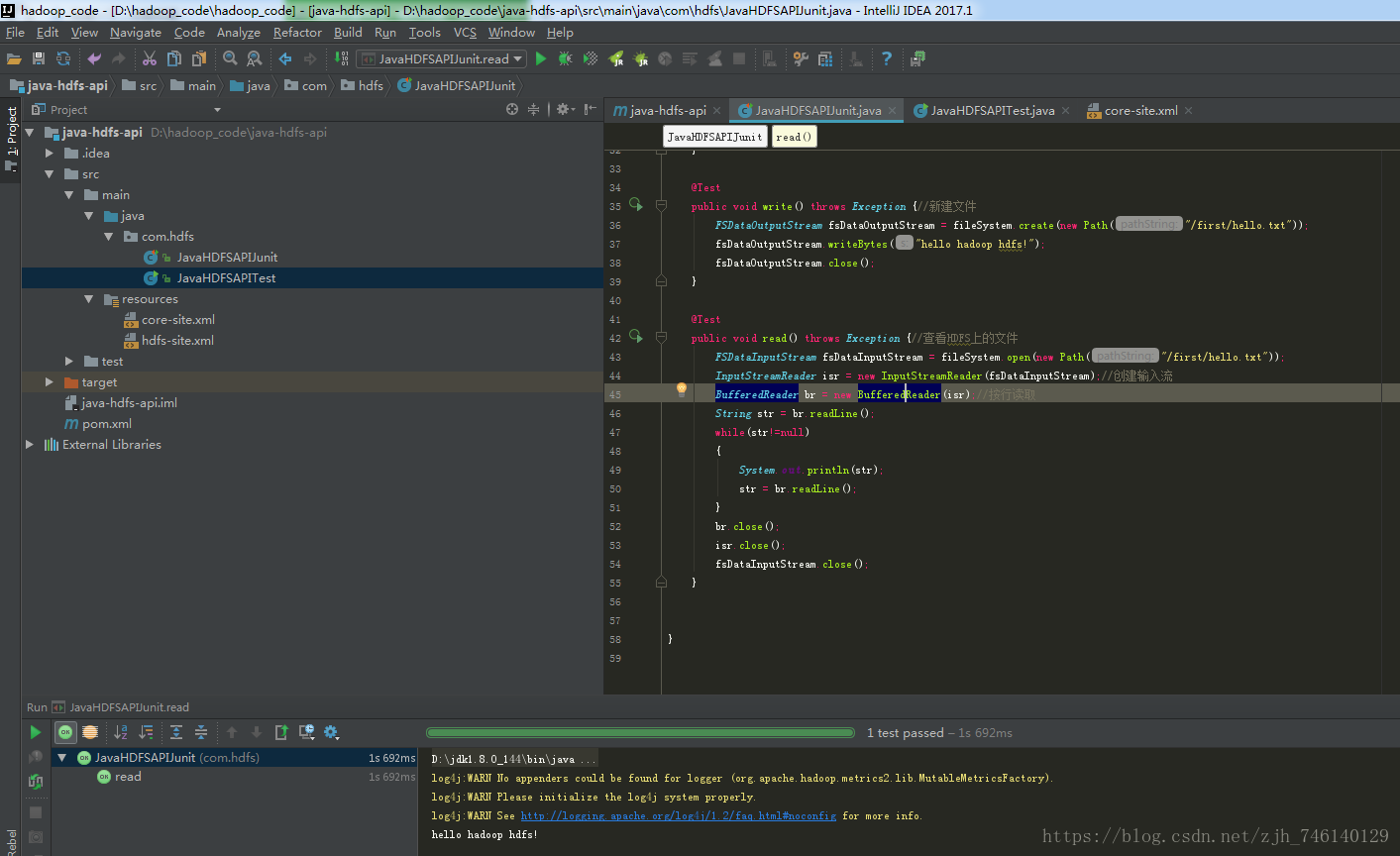

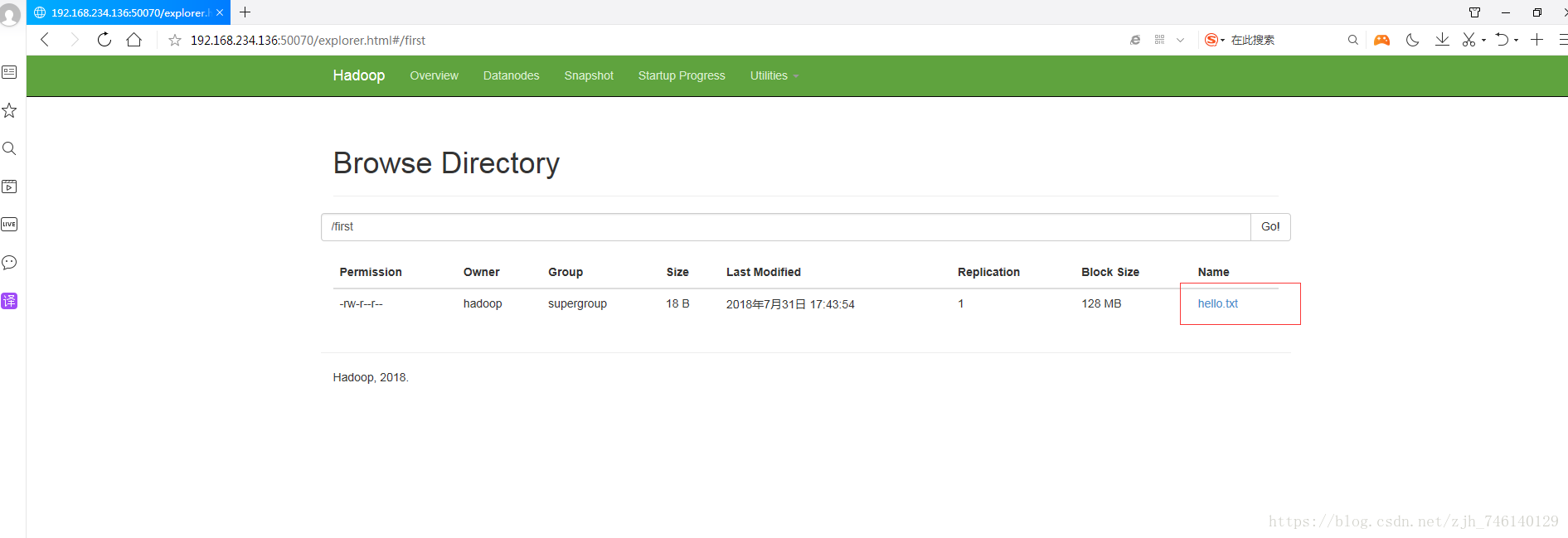

③ Operation: creating a file

④ Result