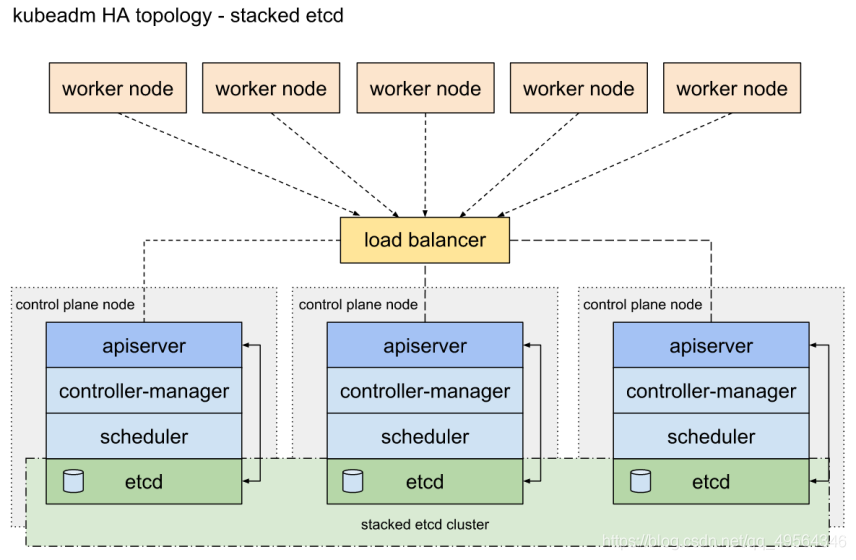

Deploy haproxy on server8 to load-balance the backend Kubernetes masters (server2, server3, server4). This makes the k8s control plane highly available.

Worker node: server9

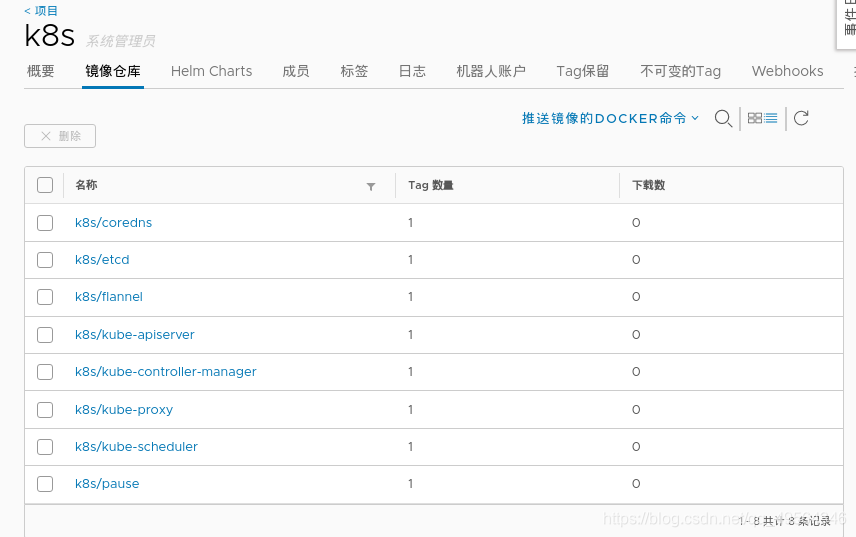

Local image registry: server1

A single haproxy instance is itself a single point of failure, so besides the masters, haproxy can also be made highly available.

server2

Clean up the previous k8s cluster

kubectl delete node server4 #delete every node this way

kubeadm reset

ipvsadm --clear
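The cleanup can be scripted. This is a minimal sketch. It assumes kubectl on server2 can still reach the old cluster. The function names delete_all_nodes and reset_this_host are only illustrative. kubeadm reset -f skips the confirmation prompt that the plain kubeadm reset above shows.

```shell
# Delete every Node object of the old cluster (run once, on server2).
delete_all_nodes() {
  local node
  # "-o name" prints entries such as "node/server4"
  for node in $(kubectl get nodes -o name); do
    kubectl delete "$node"
  done
}

# Reset kubeadm state and flush IPVS rules (run on every node).
reset_this_host() {
  kubeadm reset -f   # -f: do not ask for confirmation
  ipvsadm --clear    # remove IPVS rules left behind by kube-proxy
}
```

Run delete_all_nodes once on server2, then run reset_this_host on each node.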

1. Prepare a new environment

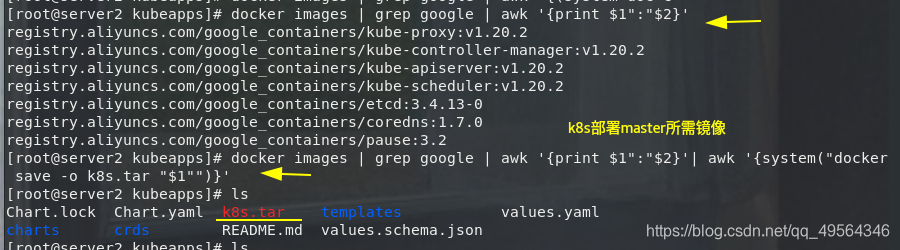

docker images | grep google | awk '{print $1":"$2}'

docker save -o k8s.tar $(docker images | grep google | awk '{print $1":"$2}') #save all the images into one archive; calling docker save once per image would overwrite k8s.tar each time

docker rmi `docker images | awk '{print $3}'| grep -v ^IMAGE` #remove all images on every node

Alternatively, retag the images and push them to the local registry:

docker images | grep k8s

docker images | grep k8s | awk '{system("docker push "$1":"$2"")}'
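Images that still carry their original repository name, such as the google_containers mirror filtered above, must be retagged before they can be pushed. This sketch assumes the target prefix reg.westos.org/k8s, which matches imageRepository in kubeadm-init.yaml further down. It also assumes docker login reg.westos.org has already succeeded. push_images and local_name are hypothetical helper names.

```shell
# Target prefix; must match imageRepository in kubeadm-init.yaml
LOCAL_REPO="reg.westos.org/k8s"

# registry.aliyuncs.com/google_containers/pause:3.2 -> reg.westos.org/k8s/pause:3.2
local_name() {
  echo "${LOCAL_REPO}/${1##*/}"   # keep only "name:tag" after the last "/"
}

# Retag and push every image whose name matches the grep pattern (default: google)
push_images() {
  local pattern="${1:-google}" src dst
  for src in $(docker images --format '{{.Repository}}:{{.Tag}}' | grep -- "$pattern"); do
    dst="$(local_name "$src")"
    docker tag "$src" "$dst"
    docker push "$dst"
  done
}
```

For example, push_images google retags and pushes every image whose name contains google.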

2. Load balancer deployment

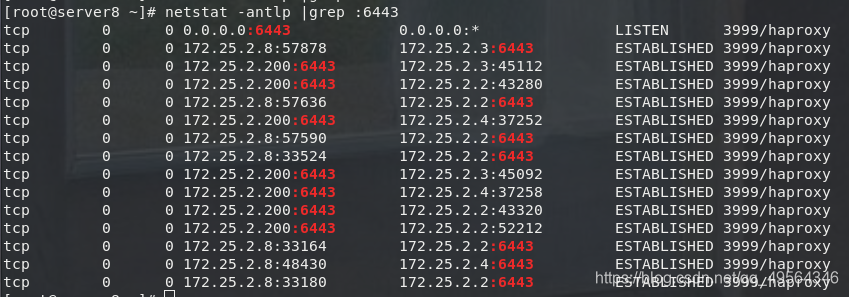

server8

yum install -y haproxy

vim /etc/haproxy/haproxy.cfg

```
frontend main *:6443
    mode tcp
    default_backend apiserver

backend apiserver
    balance roundrobin
    mode tcp
    server app1 172.25.2.2:6443 check
    server app2 172.25.2.3:6443 check
    server app3 172.25.2.4:6443 check
```
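The backend list grows with each extra master, so it can help to generate this stanza. This sketch keeps the names used in the configuration above (main, apiserver, appN). haproxy_apiserver_cfg is a hypothetical helper that only prints text and does not touch /etc/haproxy/haproxy.cfg.

```shell
# Print the frontend/backend section for the API servers.
# Usage: haproxy_apiserver_cfg PORT IP [IP...]
haproxy_apiserver_cfg() {
  local port="$1"; shift
  local i=1 ip
  printf 'frontend main *:%s\n' "$port"
  printf '    mode tcp\n'
  printf '    default_backend apiserver\n\n'
  printf 'backend apiserver\n'
  printf '    balance roundrobin\n'
  printf '    mode tcp\n'
  for ip in "$@"; do
    # one health-checked server line per master
    printf '    server app%d %s:%s check\n' "$i" "$ip" "$port"
    i=$((i + 1))
  done
}
```

haproxy_apiserver_cfg 6443 172.25.2.2 172.25.2.3 172.25.2.4 reproduces the section above. After you edit the real file, haproxy -c -f /etc/haproxy/haproxy.cfg checks its syntax before the service is started.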

systemctl enable --now haproxy.service

netstat -antlp

ip addr add 172.25.2.200/24 dev eth0 #VIP used later as controlPlaneEndpoint
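ip addr add only changes the running configuration. The VIP disappears after a reboot unless it is also made persistent in the network configuration. The sketch below checks that the VIP is present and that haproxy is listening. It assumes eth0 and port 6443 as above, uses ss instead of netstat, and the helper names are hypothetical.

```shell
# Succeeds if the IPv4 address is configured on the interface (default eth0)
vip_present() {
  local vip="$1" dev="${2:-eth0}"
  ip -o -4 addr show dev "$dev" | grep -qF "inet ${vip}/"
}

# Succeeds if a socket is listening on the given TCP port
port_listening() {
  # column 4 of "ss -lnt" is the local address, e.g. "*:6443" or "0.0.0.0:6443"
  ss -lnt | awk -v p=":$1" '$4 ~ (p "$") {found = 1} END {exit !found}'
}
```

Usage on server8: vip_present 172.25.2.200 && port_listening 6443 && echo ready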

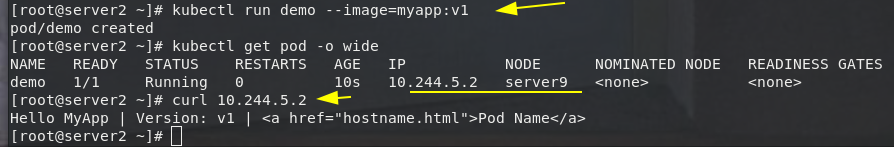

3. Worker node deployment

server9

yum install docker-ce -y

systemctl enable --now docker

On server2, from /etc/docker, copy the registry certificates to server9:

scp -r certs.d/ server9:/etc/docker/

vim /etc/docker/daemon.json

```json
{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
```
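Docker reads daemon.json only at start-up, so restart it and confirm that the cgroup driver is now systemd. The kubelet and Docker must use the same driver, and kubeadm's preflight checks recommend systemd. This is a sketch: check_docker_cgroup and docker_cgroup_driver are hypothetical helpers built on docker info --format.

```shell
# Print Docker's active cgroup driver ("systemd" or "cgroupfs")
docker_cgroup_driver() {
  docker info --format '{{.CgroupDriver}}'
}

# Fail unless the driver is systemd
check_docker_cgroup() {
  local driver
  driver="$(docker_cgroup_driver)"
  if [ "$driver" = systemd ]; then
    echo "ok: cgroup driver is systemd"
  else
    echo "unexpected cgroup driver: ${driver:-unknown}" >&2
    return 1
  fi
}
```

Usage: systemctl restart docker && check_docker_cgroup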

Configure kernel parameters:

vim /etc/sysctl.d/docker.conf

```
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness=0
```

sysctl --system
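Two details are easy to miss here. First, the net.bridge.* keys exist only while the br_netfilter module is loaded (modprobe br_netfilter). Second, vm.swappiness=0 does not turn swap off, and by default the kubelet refuses to start with swap enabled (swapoff -a, then comment out the swap entry in /etc/fstab). The sketch below reports any of the three forwarding settings that is not 1. The helper name is hypothetical.

```shell
# Report kernel parameters from docker.conf that are not set to 1
check_sysctls() {
  local key val rc=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    val="$(sysctl -n "$key" 2>/dev/null)"   # empty if the key does not exist
    if [ "$val" != 1 ]; then
      echo "$key = ${val:-missing} (expected 1)" >&2
      rc=1
    fi
  done
  return "$rc"
}
```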

Load the following kernel modules, which kube-proxy needs in IPVS mode:

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

yum install -y ipvsadm

lsmod | grep -e ip_vs -e nf_conntrack #check that the modules are loaded
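Modules loaded by hand are gone after a reboot. The sketch below loads them, lists them in a modules-load.d file that systemd-modules-load reads at boot, and reports any that are missing. The file name ipvs.conf and the helper names are assumptions. On kernels 4.19 and newer, nf_conntrack_ipv4 was merged into nf_conntrack, so the last entry would need to change there.

```shell
# Modules kube-proxy needs in IPVS mode (same list as above)
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Load every module now
load_ipvs_modules() {
  local m
  for m in $IPVS_MODULES; do   # unquoted on purpose: split on spaces
    modprobe -- "$m"
  done
}

# One module per line, so they are loaded again at boot
persist_ipvs_modules() {
  local conf="${1:-/etc/modules-load.d/ipvs.conf}"
  printf '%s\n' $IPVS_MODULES > "$conf"   # unquoted on purpose
}

# Print the modules from the list that lsmod does not show
missing_ipvs_modules() {
  local m loaded
  loaded=" $(lsmod | awk 'NR > 1 {printf "%s ", $1}')"   # skip the header line
  for m in $IPVS_MODULES; do
    case "$loaded" in
      *" $m "*) ;;        # loaded
      *) echo "$m" ;;     # missing
    esac
  done
}
```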

yum install kubeadm-1.20.2-0.x86_64 kubelet-1.20.2-0.x86_64 -y #keep the versions identical across the cluster
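To confirm that the versions match on each host, the following sketch compares kubeadm and the kubelet against v1.20.2, the kubernetesVersion used in the master configuration. check_k8s_versions is a hypothetical helper.

```shell
EXPECTED_VERSION="v1.20.2"

# Fail unless both kubeadm and kubelet report EXPECTED_VERSION
check_k8s_versions() {
  local kubeadm_v kubelet_v
  kubeadm_v="$(kubeadm version -o short)"                  # e.g. "v1.20.2"
  kubelet_v="$(kubelet --version | awk '{print $2}')"     # "Kubernetes v1.20.2" -> "v1.20.2"
  if [ "$kubeadm_v" = "$EXPECTED_VERSION" ] && [ "$kubelet_v" = "$EXPECTED_VERSION" ]; then
    echo "ok: $EXPECTED_VERSION"
  else
    echo "version mismatch: kubeadm=$kubeadm_v kubelet=$kubelet_v expected=$EXPECTED_VERSION" >&2
    return 1
  fi
}
```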

4. k8s master deployment

server2

yum install -y kubeadm-1.20.2-0 kubelet-1.20.2-0 kubectl-1.20.2-0 #same version as the worker node and kubernetesVersion

kubeadm config print init-defaults > kubeadm-init.yaml

kubeadm config images list --config kubeadm-init.yaml #list the required images

vim kubeadm-init.yaml

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.25.2.2   # address of the first master
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: server2
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "172.25.2.200:6443"   # load-balancer VIP on server8
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: reg.westos.org/k8s   # pull images from the local registry
kind: ClusterConfiguration
kubernetesVersion: v1.20.2
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16   # pod IP range
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs   # run kube-proxy in IPVS mode
```
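Before running kubeadm init, the images can be checked and pulled in advance. This sketch assumes the file name kubeadm-init.yaml and the repository reg.westos.org/k8s from the configuration above. It refuses to pull if any image would come from somewhere other than the local registry. The helper names are hypothetical.

```shell
CONFIG="kubeadm-init.yaml"
LOCAL_REPO="reg.westos.org/k8s"

# Print every image kubeadm would use that is NOT under the local registry
non_local_images() {
  kubeadm config images list --config "$CONFIG" | grep -v "^${LOCAL_REPO}/" || true
}

# Pull the images ahead of kubeadm init, but only if all of them are local
prepull_images() {
  local bad
  bad="$(non_local_images)"
  if [ -n "$bad" ]; then
    echo "images not served by ${LOCAL_REPO}:" >&2
    echo "$bad" >&2
    return 1
  fi
  kubeadm config images pull --config "$CONFIG"
}
```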

kubeadm init --config kubeadm-init.yaml --upload-certs

export KUBECONFIG=/etc/kubernetes/admin.conf #when working as root

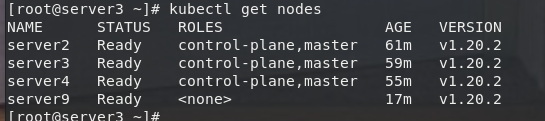

kubectl get node

/etc/kubernetes/admin.conf #kubeconfig with cluster-admin access to the API server
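Nodes stay NotReady until a network plugin is installed, which happens in step 5. The sketch below lists nodes whose STATUS column is not exactly Ready, so a cordoned node showing Ready,SchedulingDisabled is also listed. not_ready_nodes is a hypothetical helper. As a built-in alternative, kubectl wait --for=condition=Ready nodes --all --timeout=300s blocks until every node is Ready.

```shell
# Print the names of nodes whose STATUS is not "Ready"
not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" {print $1}'
}
```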

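server3 and server4: join as additional control-plane nodes using the command printed by kubeadm init. The token, CA hash, and certificate key below come from this particular run. The certificates uploaded by --upload-certs are deleted after two hours.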

```shell
kubeadm join 172.25.2.200:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:5b51593e6fd6b5e55139250ff95815b55bb5530a284291ecfb3608313718d0be \
    --control-plane --certificate-key 15b04641f9c16b0e273e1b30ca684f7a20af588b92a01c33e4acb2eb42090f84
```

export KUBECONFIG=/etc/kubernetes/admin.conf
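The bootstrap token expires after its 24h ttl, and the uploaded certificates expire after two hours, so a later control-plane join needs fresh values. The sketch below runs on an existing master. control_plane_join_cmd is a hypothetical helper, and it relies on kubeadm init phase upload-certs printing the new certificate key on its last line.

```shell
# Print a fresh "kubeadm join ... --control-plane" command (run on server2)
control_plane_join_cmd() {
  local join key
  join="$(kubeadm token create --print-join-command)"               # new token + CA hash
  key="$(kubeadm init phase upload-certs --upload-certs | tail -n 1)" # new certificate key
  echo "$join --control-plane --certificate-key $key"
}
```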

5. Flannel network plugin deployment

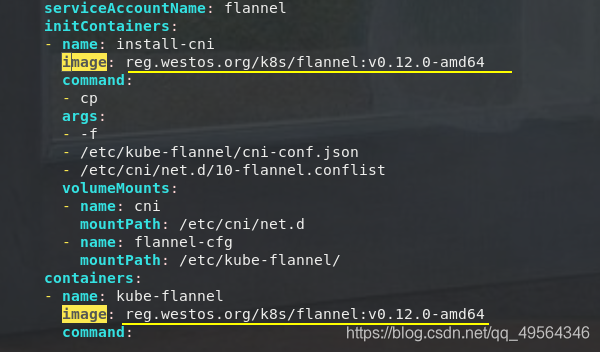

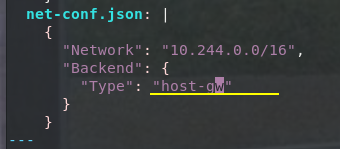

vim kube-flannel.yml #network plugin: change the image location and switch the backend to host-gw
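The same two edits can be made with sed. This sketch assumes the manifest contains "Type": "vxlan" in its net-conf.json and an image line pointing at a flannel image. FLANNEL_IMAGE is a hypothetical local-registry path, so replace it with the path the image was actually pushed to. host-gw routes pod traffic directly through the nodes, which requires all nodes to be on the same layer-2 network. The manifest's Network value (10.244.0.0/16 upstream) must match podSubnet.

```shell
# Hypothetical path of the flannel image in the local registry
FLANNEL_IMAGE="reg.westos.org/library/flannel:v0.13.1-rc1"

# Edit kube-flannel.yml in place (GNU sed)
patch_flannel_manifest() {
  local file="$1"
  # backend type inside the net-conf.json key of the flannel ConfigMap
  sed -i 's/"Type": "vxlan"/"Type": "host-gw"/' "$file"
  # rewrite "image:" lines that reference a flannel image, keeping indentation
  sed -i -E "s#^([[:space:]]*image:[[:space:]]*).*flannel:.*#\1${FLANNEL_IMAGE}#" "$file"
}
```

Usage: patch_flannel_manifest kube-flannel.yml, then apply it as below.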

kubectl apply -f kube-flannel.yml

kubectl get pod -n kube-system |grep coredns | awk '{system("kubectl delete pod "$1" -n kube-system")}' #recreate the CoreDNS pods so they start on the flannel network

/etc/cni/net.d #if another network plugin was installed before, clean up its configuration here on every node
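To see what needs cleaning, the sketch below lists everything in the CNI directory except 10-flannel.conflist, the file flannel's install-cni container writes. stale_cni_configs is a hypothetical helper. It only prints paths and deletes nothing.

```shell
# Print CNI config files that do not belong to flannel
stale_cni_configs() {
  local dir="${1:-/etc/cni/net.d}" f
  for f in "$dir"/*; do
    [ -e "$f" ] || continue          # empty directory: the glob stays literal
    case "$(basename "$f")" in
      10-flannel.conflist) ;;        # keep flannel's own config
      *) echo "$f" ;;
    esac
  done
}
```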