Environment

```shell
[root@ha ~]# cat /etc/redhat-release
CentOS Linux release 7.7.1908 (Core)
```

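| Hostname | IP address | Notes |
|---|---|---|
| master-1 | 192.168.3.128 | Kubernetes master |
| master-2 | 192.168.3.131 | Kubernetes master |
| master-3 | 192.168.3.132 | Kubernetes master |
| node-1 | 192.168.3.133 | haproxy host (keepalived BACKUP), worker node |
| ha | 192.168.3.134 | haproxy host (keepalived MASTER) |
| VIP | 192.168.3.100 | keepalived virtual IP |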

Install haproxy and keepalived

```shell
yum -y install keepalived
yum -y install haproxy
```

Edit the keepalived configuration file (/etc/keepalived/keepalived.conf)

- ha host

```
global_defs {
    router_id LVS_01
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.3.100/24
    }
}
```

- node-1 host

```
global_defs {
    router_id LVS_02
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.3.100/24
    }
}
```

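Note: the NIC name given in `interface` must match the host's actual interface name (for example `ens33`). Otherwise keepalived cannot bind the VIP.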
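A wrong `interface` name is easy to miss. The sketch below is a minimal bash check that compares every `interface <name>` line in keepalived.conf against the interfaces under /sys/class/net. It makes some assumptions: the default config path, and helper names (`conf_ifaces`, `check_ifaces`) invented for this example. `NET_DIR` can be overridden only so the check can be tested against a fake directory.

```shell
#!/usr/bin/env bash
# Sketch: check that every "interface" named in keepalived.conf exists on this host.
# Assumes the default path /etc/keepalived/keepalived.conf; helper names are made up.
NET_DIR="${NET_DIR:-/sys/class/net}"

# conf_ifaces FILE: print the interface name from each "interface <name>" line
conf_ifaces() {
  awk '$1 == "interface" { print $2 }' "$1"
}

# check_ifaces [FILE]: succeed only if every configured interface exists
check_ifaces() {
  local conf="${1:-/etc/keepalived/keepalived.conf}" iface rc=0
  [[ -r "$conf" ]] || { echo "cannot read ${conf}" >&2; return 1; }
  while read -r iface; do
    if [[ -e "${NET_DIR}/${iface}" ]]; then
      echo "ok: ${iface}"
    else
      echo "missing: ${iface} (list real names with: ip -o link show)" >&2
      rc=1
    fi
  done < <(conf_ifaces "$conf")
  return "$rc"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  check_ifaces "$@"
fi
```

Run it on both ha and node-1 before starting keepalived.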

Edit the haproxy configuration file (/etc/haproxy/haproxy.cfg) on both ha and node-1

```
global
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode                    tcp
    log                     global
    # API server traffic is proxied as raw TCP (TLS), so log in tcplog format.
    # HTTP-only options (httplog, http-server-close, forwardfor,
    # timeout http-request / http-keep-alive) do not apply in tcp mode.
    option                  tcplog
    option                  dontlognull
    option                  redispatch
    retries                 3
    timeout queue           1m
    timeout connect         10s
    timeout client          1m
    timeout server          1m
    timeout check           10s
    maxconn                 3000

# Statistics page: http://<VIP>:9443/admin/stats
listen stats
    mode http
    bind *:9443
    stats uri /admin/stats
    monitor-uri /monitoruri

# Entry point for the Kubernetes API server
frontend showDoc
    bind *:6443
    default_backend k8s-cluster

backend k8s-cluster
    balance roundrobin
    server k8s-cluster1 192.168.3.128:6443 check
    server k8s-cluster2 192.168.3.131:6443 check
    server k8s-cluster3 192.168.3.132:6443 check
```
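The configuration files take effect only once both services are running on ha and node-1. The following bash sketch enables and starts them, then reports whether the current host holds the VIP. It assumes the CentOS 7 systemd unit names `keepalived` and `haproxy`, plus the `ens33` interface and 192.168.3.100 VIP configured above. `has_vip` and `start_ha_services` are made-up helper names.

```shell
#!/usr/bin/env bash
# Sketch: start keepalived and haproxy, then report whether this host holds the VIP.
# Assumptions: unit names "keepalived"/"haproxy", interface ens33, VIP 192.168.3.100.
VIP="${VIP:-192.168.3.100}"
IFACE="${IFACE:-ens33}"

# has_vip: read `ip -4 addr show` output on stdin; succeed if the VIP is assigned
has_vip() {
  grep -qF "inet ${VIP}/"
}

# start_ha_services: start now and on every boot
start_ha_services() {
  systemctl enable keepalived haproxy
  systemctl start keepalived haproxy
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  start_ha_services
  if ip -4 addr show dev "$IFACE" | has_vip; then
    echo "$(hostname) holds VIP ${VIP}"
  else
    echo "$(hostname) does not hold VIP ${VIP}"
  fi
fi
```

With both hosts up, only ha (priority 150) should report the VIP. Stopping keepalived on ha should move the VIP to node-1 after a few missed advertisements (`advert_int 1`).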

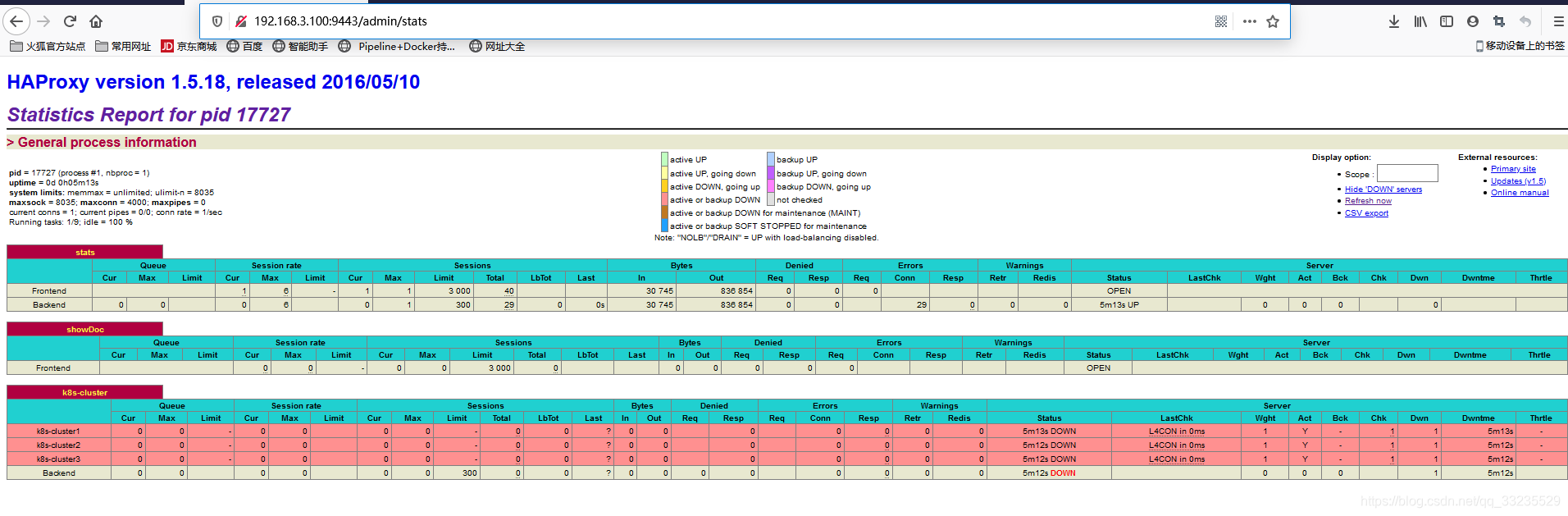

- Verification

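With keepalived and haproxy running on ha and node-1, open http://192.168.3.100:9443/admin/stats (VIP, port 9443, path `/admin/stats`) in a browser.

The three master backends show up in red because Kubernetes has not been deployed on them yet. This completes the keepalived + haproxy setup.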
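The red/green state on the stats page can also be read from a script. HAProxy serves the same page as CSV when `;csv` is appended to the stats URI; in that CSV, field 1 is the proxy name, field 2 the server name and field 18 the status. Below is a minimal bash sketch that assumes the `/admin/stats` URI and VIP from above; `backend_status` is a made-up helper name.

```shell
#!/usr/bin/env bash
# Sketch: list backend server states from the haproxy stats CSV.
# Assumes the stats URI /admin/stats on port 9443 and the VIP 192.168.3.100.
STATS_URL="${STATS_URL:-http://192.168.3.100:9443/admin/stats;csv}"

# backend_status [BACKEND]: read stats CSV on stdin, print "<server> <status>"
# for each server of BACKEND (field 1 = pxname, 2 = svname, 18 = status).
backend_status() {
  local backend="${1:-k8s-cluster}"
  awk -F, -v be="$backend" '
    $1 == be && $2 != "BACKEND" && $2 != "FRONTEND" { print $2, $18 }
  '
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  curl -fsS --max-time 5 "$STATS_URL" | backend_status k8s-cluster
fi
```

At this stage every server should be reported as DOWN. After the masters are initialized, they should switch to UP.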

Build the k8s cluster

Prepare the base environment (run on every node in the cluster)

- Set up the Docker CE yum repository

```shell
yum install -y yum-utils \
  device-mapper-persistent-data \
  lvm2
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

- Install Docker

```shell
yum install -y docker-ce-18.09.7 docker-ce-cli-18.09.7 containerd.io
```

- Install nfs-utils and wget

```shell
yum install -y nfs-utils
yum install -y wget
```

- Disable the firewall

```shell
systemctl stop firewalld
systemctl disable firewalld
```

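- Disable SELinux (`setenforce 0` switches to permissive mode immediately, and the config file change takes effect after a reboot)

```shell
setenforce 0
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
```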

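- Disable swap, and remove the swap entries from /etc/fstab (keeping a backup) so swap stays off after a reboot

```shell
swapoff -a
yes | cp /etc/fstab /etc/fstab_bak
cat /etc/fstab_bak | grep -v swap > /etc/fstab
```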
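kubelet expects swap to be off, both now and after a reboot. The bash sketch below checks both conditions: it counts active swap areas in /proc/swaps, and it counts the uncommented /etc/fstab entries whose filesystem type (field 3) is still `swap`. Both numbers should be 0. The helper names are made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: confirm swap is off now and will stay off after a reboot.

# active_swaps [FILE]: count swap areas listed after the header line of /proc/swaps
active_swaps() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

# fstab_swaps [FILE]: count uncommented fstab entries whose type (field 3) is swap
fstab_swaps() {
  awk '$1 !~ /^#/ && $3 == "swap" { n++ } END { print n + 0 }' "${1:-/etc/fstab}"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  echo "active swap areas: $(active_swaps)"
  echo "swap entries left in /etc/fstab: $(fstab_swaps)"
fi
```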

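- Edit /etc/sysctl.conf (the two `net.bridge.*` keys exist only while the br_netfilter kernel module is loaded, so load it first; otherwise `sysctl -p` reports them as missing)

```shell
modprobe br_netfilter
echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
sysctl -p
```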
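To confirm the three parameters took effect, you can read them back from /proc/sys, where each dot in the key becomes a path separator. The following is a minimal bash sketch with made-up helper names. `SYSCTL_ROOT` is overridable only so the functions can be tested against a fake directory.

```shell
#!/usr/bin/env bash
# Sketch: read back the kernel parameters set above from /proc/sys.
SYSCTL_ROOT="${SYSCTL_ROOT:-/proc/sys}"

# sysctl_value KEY: print the value of a dotted key such as net.ipv4.ip_forward
sysctl_value() {
  local path="${SYSCTL_ROOT}/${1//.//}"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
  [[ -r "$path" ]] && cat "$path"
}

# check_k8s_sysctls: succeed only if all three keys are set to 1
check_k8s_sysctls() {
  local key rc=0
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables; do
    if [[ "$(sysctl_value "$key")" == "1" ]]; then
      echo "ok: ${key} = 1"
    else
      echo "not set: ${key}" >&2
      rc=1
    fi
  done
  return "$rc"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  check_k8s_sysctls
fi
```

If only the bridge keys are reported as not set, check whether the module is loaded: `lsmod | grep br_netfilter`.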

- Configure the Kubernetes yum repository (Aliyun mirror). Multiple `gpgkey` URLs go on one line, separated by a space.

```shell
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

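- Install kubelet, kubeadm and kubectl (version 1.16.2)

```shell
yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2
# Enable kubelet so it comes back after a reboot; kubeadm init/join starts it
systemctl enable kubelet
```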

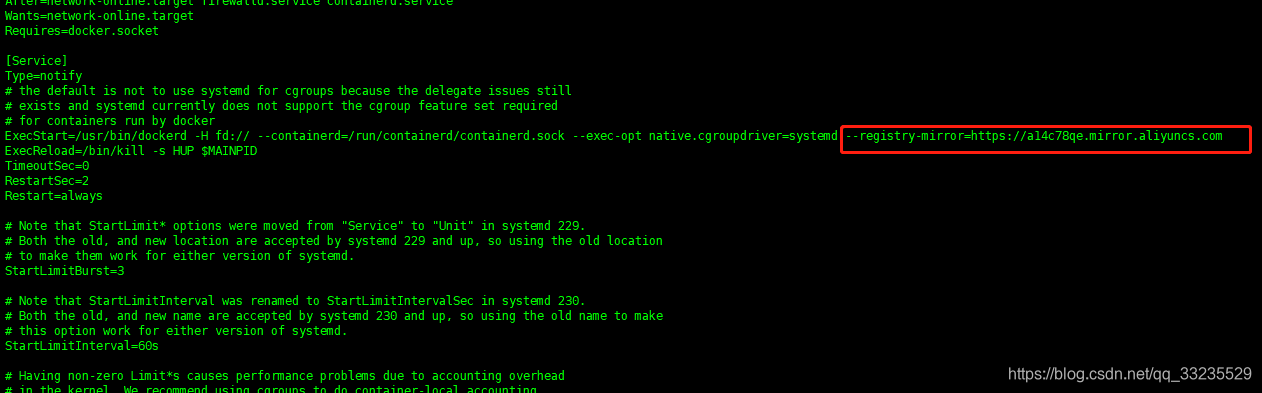

- Change Docker's Cgroup Driver to systemd

```shell
sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service
```

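- Change the Docker registry mirror to speed up image downloads. This edits the same unit file as the previous step (on CentOS 7, /lib is a symlink to /usr/lib); a mirror is typically added as a `--registry-mirror=<mirror URL>` option on the `ExecStart` line.

```shell
vim /lib/systemd/system/docker.service
```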

- Start Docker

```shell
systemctl daemon-reload
systemctl start docker
systemctl enable docker
systemctl status docker
```
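Before running kubeadm, it is worth confirming that the `ExecStart` edit actually took effect and that the pinned kubeadm version was installed. The bash sketch below uses `docker info --format '{{.CgroupDriver}}'` and `kubeadm version -o short`; `matches` is a made-up helper name.

```shell
#!/usr/bin/env bash
# Sketch: check Docker's cgroup driver and the installed kubeadm version.
WANT_DRIVER="systemd"
WANT_KUBEADM="v1.16.2"

# matches LABEL WANT GOT: succeed if GOT equals WANT, printing a short report
matches() {
  local label="$1" want="$2" got="$3"
  if [[ "$got" == "$want" ]]; then
    echo "ok: ${label} = ${got}"
  else
    echo "unexpected ${label}: '${got:-<none>}' (want '${want}')" >&2
    return 1
  fi
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  matches "docker cgroup driver" "$WANT_DRIVER" "$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)"
  matches "kubeadm version" "$WANT_KUBEADM" "$(kubeadm version -o short 2>/dev/null)"
fi
```

If the driver still shows `cgroupfs`, re-check the `ExecStart` line and run `systemctl daemon-reload` before restarting Docker.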

Initialize the first master node

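- Run on master-1 (here apiserver.demo resolves to 127.0.0.1, i.e. master-1's own API server; the other nodes resolve it to the VIP)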

```shell
export APISERVER_NAME=apiserver.demo
export POD_SUBNET=10.100.0.0/16
echo "127.0.0.1 ${APISERVER_NAME}" >> /etc/hosts

cat <<EOF > ./kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.2
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
controlPlaneEndpoint: "${APISERVER_NAME}:6443"
networking:
  serviceSubnet: "10.96.0.0/16"
  podSubnet: "${POD_SUBNET}"
  dnsDomain: "cluster.local"
EOF
```
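The pod subnet, the service subnet and the host network must not overlap. Calico's default pool 192.168.0.0/16 would contain the 192.168.3.0/24 host network (the /24 comes from the VIP definition in keepalived.conf), which is why the Calico manifest is rewritten to use `POD_SUBNET` later on. The bash sketch below checks the three ranges pairwise, using made-up helper names. Two CIDR blocks overlap exactly when their addresses agree on the first *p* bits, where *p* is the shorter of the two prefix lengths.

```shell
#!/usr/bin/env bash
# Sketch: check that pod subnet, service subnet and host LAN do not overlap.

# ip2int A.B.C.D: convert a dotted IPv4 address to a 32-bit integer
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_overlap NET1/P1 NET2/P2: succeed if the two blocks share any address
cidr_overlap() {
  local p1="${1#*/}" p2="${2#*/}"
  local p=$(( p1 < p2 ? p1 : p2 ))
  # Mask keeping the first p bits (0 when p is 0)
  local mask=$(( p == 0 ? 0 : (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( ($(ip2int "${1%/*}") & mask) == ($(ip2int "${2%/*}") & mask) ))
}

report() {
  if cidr_overlap "$1" "$2"; then echo "OVERLAP: $1 and $2"; else echo "ok: $1 / $2"; fi
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  pod="${POD_SUBNET:-10.100.0.0/16}" svc="10.96.0.0/16" lan="192.168.3.0/24"
  report "$pod" "$svc"
  report "$pod" "$lan"
  report "$svc" "$lan"
fi
```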

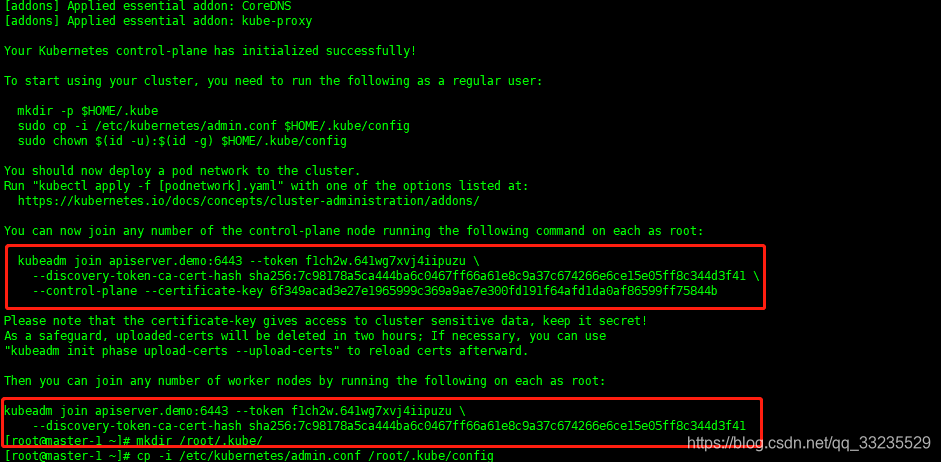

```shell
kubeadm init --config=kubeadm-config.yaml --upload-certs
```

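Note the end of the output. The first `kubeadm join` command (the one with `--control-plane --certificate-key`) joins the remaining master nodes, and the second one joins the worker nodes.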

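The printed join commands do not stay valid forever. kubeadm deletes the uploaded certificates after two hours, and the bootstrap token expires after 24 hours by default. The bash sketch below rebuilds both commands on master-1 using `kubeadm token create --print-join-command` and `kubeadm init phase upload-certs --upload-certs`. It assumes the new certificate key is the last line of the latter's output. `make_cp_join` is a made-up helper name.

```shell
#!/usr/bin/env bash
# Sketch: regenerate join commands on master-1 after the originals have expired.

# make_cp_join WORKER_JOIN CERT_KEY: turn a worker join command into a
# control-plane join command
make_cp_join() {
  local worker_join="$1" cert_key="$2"
  echo "${worker_join} --control-plane --certificate-key ${cert_key}"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  # Worker join command with a fresh token
  worker_join="$(kubeadm token create --print-join-command)"
  # Re-upload control-plane certificates; assumes the key is printed last
  cert_key="$(kubeadm init phase upload-certs --upload-certs | tail -n 1)"
  echo "worker:        ${worker_join}"
  echo "control plane: $(make_cp_join "$worker_join" "$cert_key")"
fi
```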

- Configure kubectl

```shell
rm -rf /root/.kube/
mkdir /root/.kube/
cp -i /etc/kubernetes/admin.conf /root/.kube/config
```

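- Install the Calico network plugin (run this in the same shell so that `POD_SUBNET` is still set; the `sed` replaces Calico's default pool 192.168.0.0/16 with the pod subnet)

```shell
wget https://kuboard.cn/install-script/calico/calico-3.9.2.yaml
sed -i "s#192\.168\.0\.0/16#${POD_SUBNET}#" calico-3.9.2.yaml
kubectl apply -f calico-3.9.2.yaml
```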
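If `POD_SUBNET` was empty, or the pattern did not match, the manifest silently keeps the default pool. This bash sketch prints the `CALICO_IPV4POOL_CIDR` value from the manifest and compares it with `POD_SUBNET`. It assumes the value sits on the line after `- name: CALICO_IPV4POOL_CIDR`, as in the Calico 3.x manifests. `pool_cidr` is a made-up helper name.

```shell
#!/usr/bin/env bash
# Sketch: confirm the sed above rewrote Calico's pool CIDR.
MANIFEST="${MANIFEST:-calico-3.9.2.yaml}"

# pool_cidr FILE: print the value on the line after CALICO_IPV4POOL_CIDR
pool_cidr() {
  awk '/name: CALICO_IPV4POOL_CIDR/ {
    if ((getline line) > 0) {
      sub(/^.*value:/, "", line)   # drop everything up to "value:"
      gsub(/[" \t\r]/, "", line)   # drop quotes and whitespace
      print line
    }
  }' "$1"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  got="$(pool_cidr "$MANIFEST")"
  if [[ -n "$got" && "$got" == "${POD_SUBNET:-}" ]]; then
    echo "ok: pool CIDR is ${got}"
  else
    echo "pool CIDR is '${got}', POD_SUBNET is '${POD_SUBNET:-<unset>}'" >&2
  fi
fi
```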

Join the other master nodes (master-2, master-3)

- Add a hosts entry so that apiserver.demo resolves to the VIP

```shell
echo "192.168.3.100 apiserver.demo" >> /etc/hosts
```

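- Join as a control-plane node, using the first join command printed by `kubeadm init` on master-1 (the token, hash and key below are from the author's run)

```shell
kubeadm join apiserver.demo:6443 --token f1ch2w.641wg7xvj4iipuzu \
    --discovery-token-ca-cert-hash sha256:7c98178a5ca444ba6c0467ff66a61e8c9a37c674266e6ce15e05ff8c344d3f41 \
    --control-plane --certificate-key 6f349acad3e27e1965999c369a9ae7e300fd191f64afd1da0af86599ff75844b
```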

- Configure kubectl

```shell
mkdir -p /root/.kube/
cp -i /etc/kubernetes/admin.conf /root/.kube/config
```

Join the worker nodes

- Add a hosts entry so that apiserver.demo resolves to the VIP

```shell
echo "192.168.3.100 apiserver.demo" >> /etc/hosts
```

- Join as a worker node, using the second join command printed by `kubeadm init`

```shell
kubeadm join apiserver.demo:6443 --token f1ch2w.641wg7xvj4iipuzu \
    --discovery-token-ca-cert-hash sha256:7c98178a5ca444ba6c0467ff66a61e8c9a37c674266e6ce15e05ff8c344d3f41
```
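The `echo ... >> /etc/hosts` steps add another line every time they run, so a retried join leaves duplicate or conflicting apiserver.demo entries. The sketch below is an idempotent bash alternative, using a made-up helper `ensure_host_entry`. It removes the name from any existing line (dropping a line that is left with only an IP), leaves comments and unrelated lines untouched, and then appends the wanted mapping once.

```shell
#!/usr/bin/env bash
# Sketch: idempotent replacement for `echo "<ip> <name>" >> /etc/hosts`.

# ensure_host_entry IP NAME [FILE]: make FILE map NAME to IP exactly once
ensure_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}" tmp
  tmp="$(mktemp)" || return 1
  awk -v n="$name" '
    /^[ \t]*#/ { print; next }                 # keep comments as they are
    {
      found = 0
      for (i = 2; i <= NF; i++) if ($i == n) found = 1
      if (!found) { print; next }              # unrelated line: keep as is
      out = $1; kept = 0
      for (i = 2; i <= NF; i++) if ($i != n) { out = out " " $i; kept++ }
      if (kept > 0) print out                  # keep other aliases on the line
    }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  printf '%s %s\n' "$ip" "$name" >> "$tmp"
  # Write back through the original file so its owner and mode are preserved
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Run as: bash <script> 192.168.3.100 apiserver.demo
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 2 ]]; then
  ensure_host_entry "$@"
fi
```

On master-1 the same helper would be called with 127.0.0.1 instead of the VIP.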

Verification
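Once every node has joined, `kubectl get nodes` on a master should list all of them. A node reports NotReady until its network plugin (here Calico) is running. The bash sketch below prints the nodes whose STATUS column is not exactly `Ready`; a status such as `Ready,SchedulingDisabled` is therefore listed too. It then shows the kube-system pods. `not_ready_nodes` is a made-up helper name.

```shell
#!/usr/bin/env bash
# Sketch: list nodes that are not Ready yet, then show the kube-system pods.

# not_ready_nodes: read `kubectl get nodes --no-headers` output on stdin and
# print the name of every node whose STATUS (field 2) is not exactly "Ready"
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  if nodes="$(kubectl get nodes --no-headers)"; then
    pending="$(printf '%s\n' "$nodes" | not_ready_nodes)"
    if [[ -n "$pending" ]]; then
      echo "not Ready yet:"
      echo "$pending"
    else
      echo "all nodes Ready"
    fi
    kubectl -n kube-system get pods -o wide
  else
    echo "kubectl get nodes failed; is /root/.kube/config in place?" >&2
  fi
fi
```

At this point the haproxy stats page should also show all three master backends as UP.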