Kubernetes (k8s) High-Availability Cluster

1. Environment Cleanup and Virtual Machine Configuration

1.1 Environment cleanup

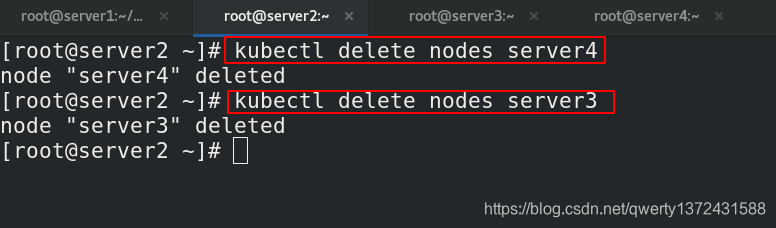

[root@server2 ~]# kubectl delete nodes server4 ##remove server3 and server4 from the cluster (run on server2)

[root@server2 ~]# kubectl delete nodes server3

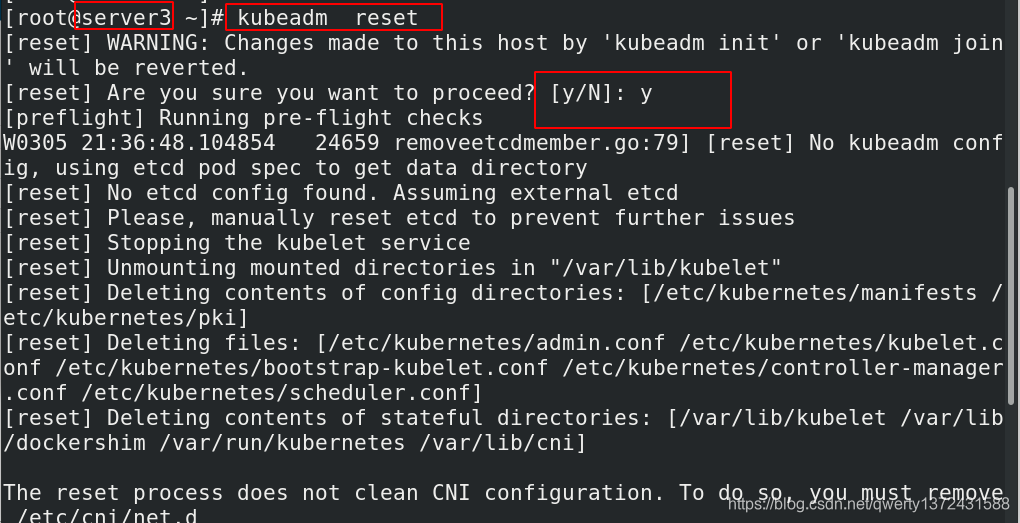

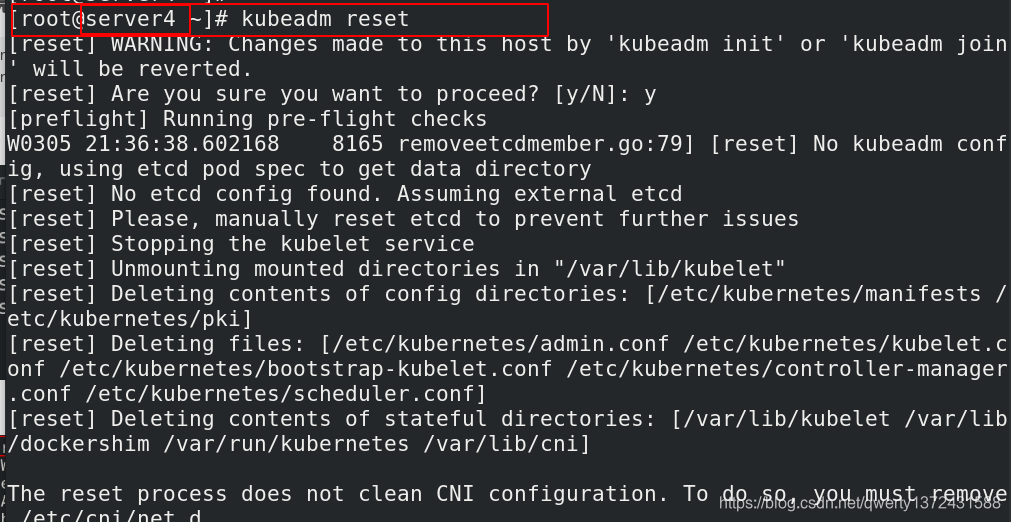

[root@server4 ~]# kubeadm reset ##reset server3 and server4 (run on each of them)

[root@server4 ~]# reboot ##reboot server3 and server4

[root@server2 ~]# kubectl delete nodes server2 ##delete the server2 node, then reset and reboot it

[root@server2 ~]# kubeadm reset

[root@server2 ~]# ipvsadm --clear ##required on every machine

[root@server2 ~]# reboot

[root@server2 ~]# ipvsadm -l ##on server2, server3 and server4, check for leftover IPVS rules; if any exist, run ipvsadm --clear

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn
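The "check, then clear if needed" step can be scripted. The following is a minimal sketch, assuming that virtual services in the `ipvsadm -ln` output start with a protocol keyword (TCP, UDP, SCTP or FWM) at the beginning of the line; the helper name `has_ipvs_rules` is hypothetical.

```shell
# has_ipvs_rules: read `ipvsadm -ln` output on stdin.
# Succeeds (exit 0) if at least one virtual service line is present,
# fails if only the three header lines were printed.
has_ipvs_rules() {
    grep -Eq '^(TCP|UDP|SCTP|FWM)[[:space:]]'
}

# Usage on each node (commented out because it needs root and ipvsadm):
# ipvsadm -ln | has_ipvs_rules && ipvsadm --clear
```

An empty table, as in the output above, makes the function fail, so `ipvsadm --clear` is skipped.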

1.2 虚拟机配置

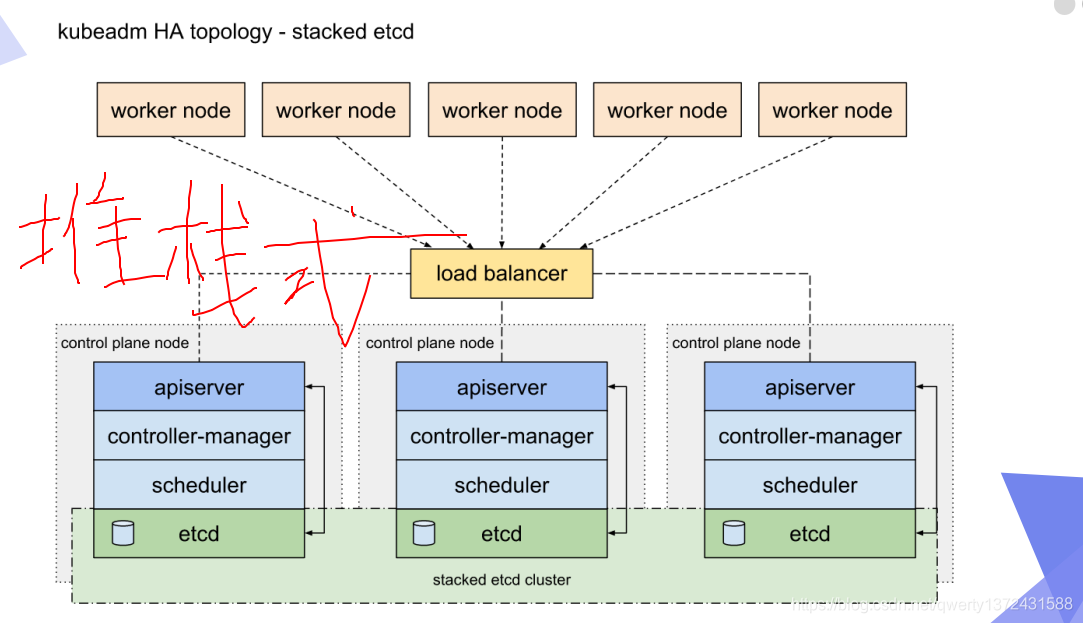

设置两台虚拟机,做高可用。原来的三个做全部做k8s的master。

2. 部署k8s高可用原理图

3. Loadbalancer部署

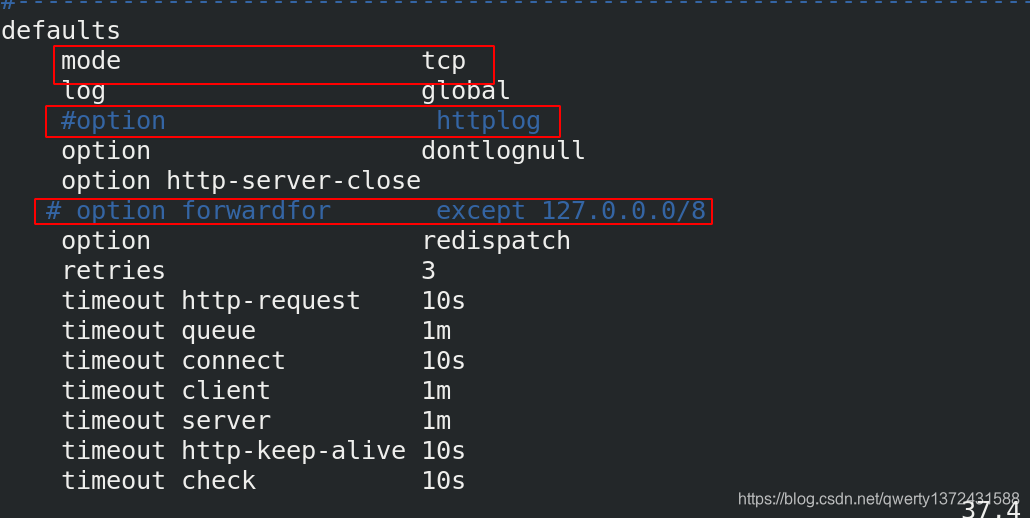

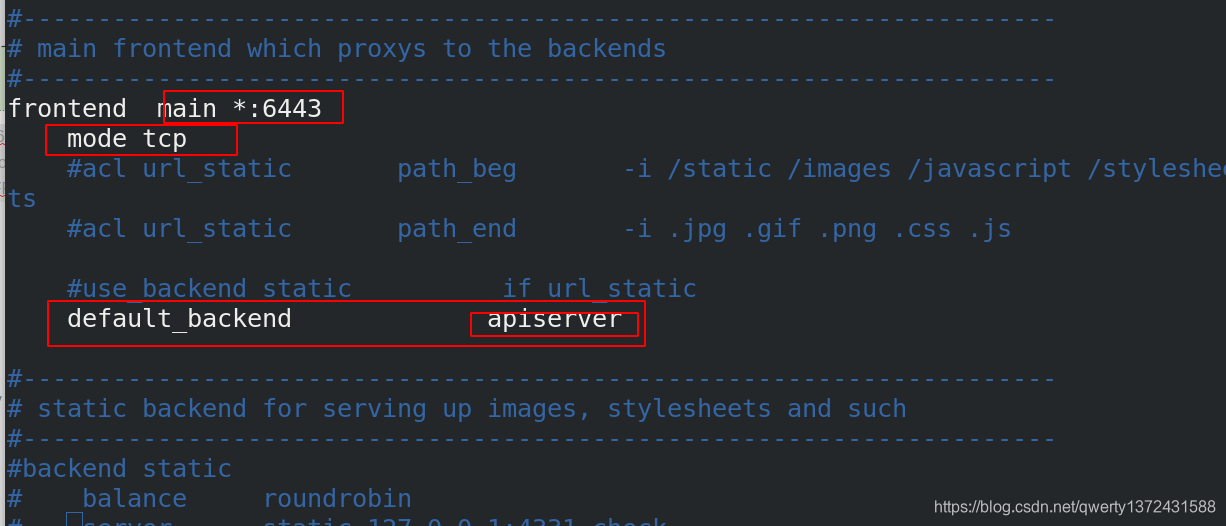

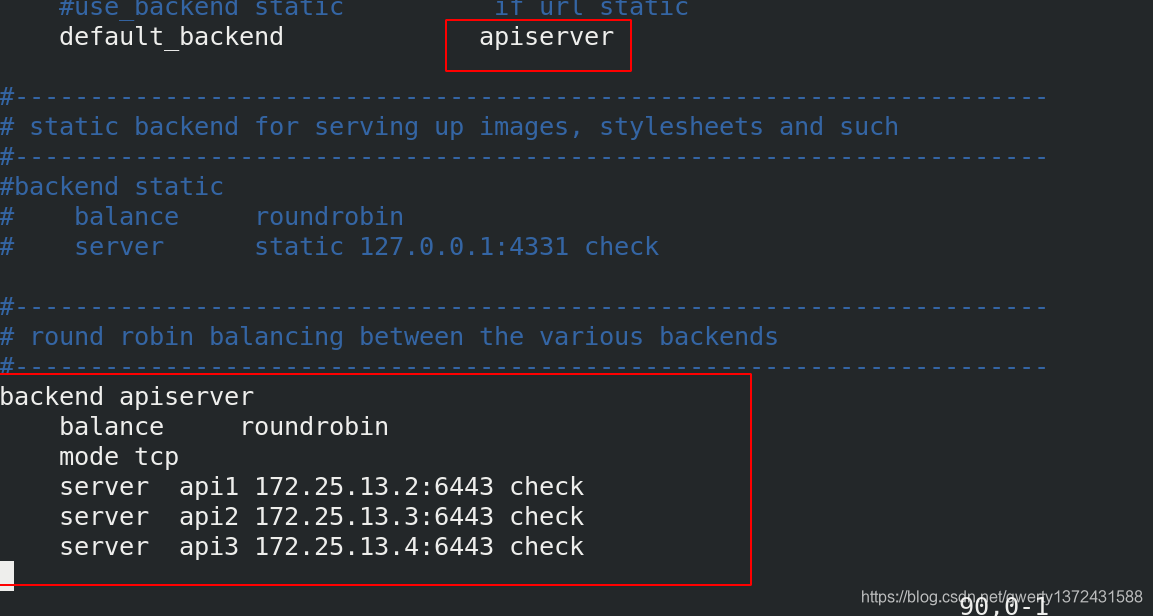

3.1 Configure haproxy

## 1. Load balancer deployment (server5 acts as the load balancer)

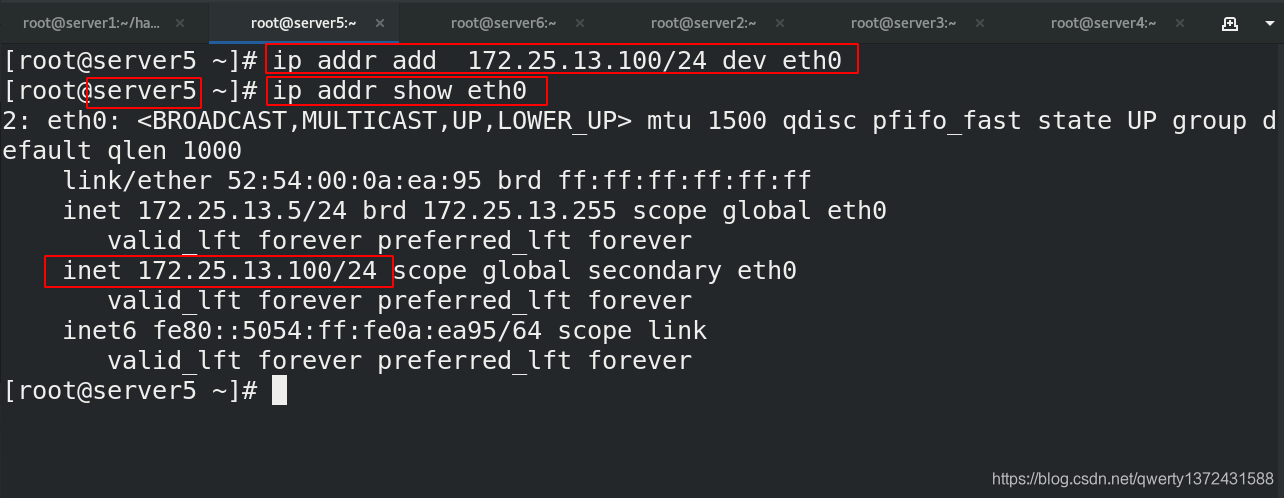

[root@server5 haproxy]# ip addr add 172.25.13.100/24 dev eth0 ##add the VIP, in preparation for the haproxy high-availability setup later

[root@server5 ~]# yum install -y haproxy ##install haproxy

[root@server5 haproxy]# vim haproxy.cfg ##edit the file as shown in the screenshot below

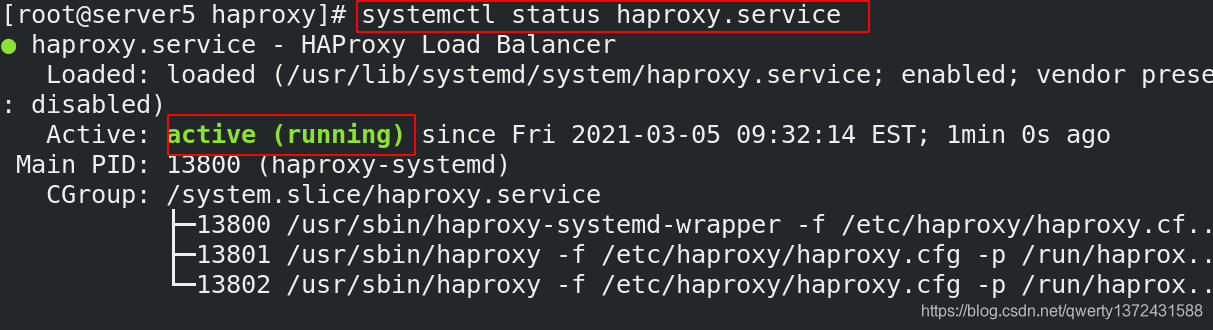

[root@server5 haproxy]# systemctl enable --now haproxy.service ##启动

[root@server5 haproxy]# cat /var/log/messages ##出错查看日志

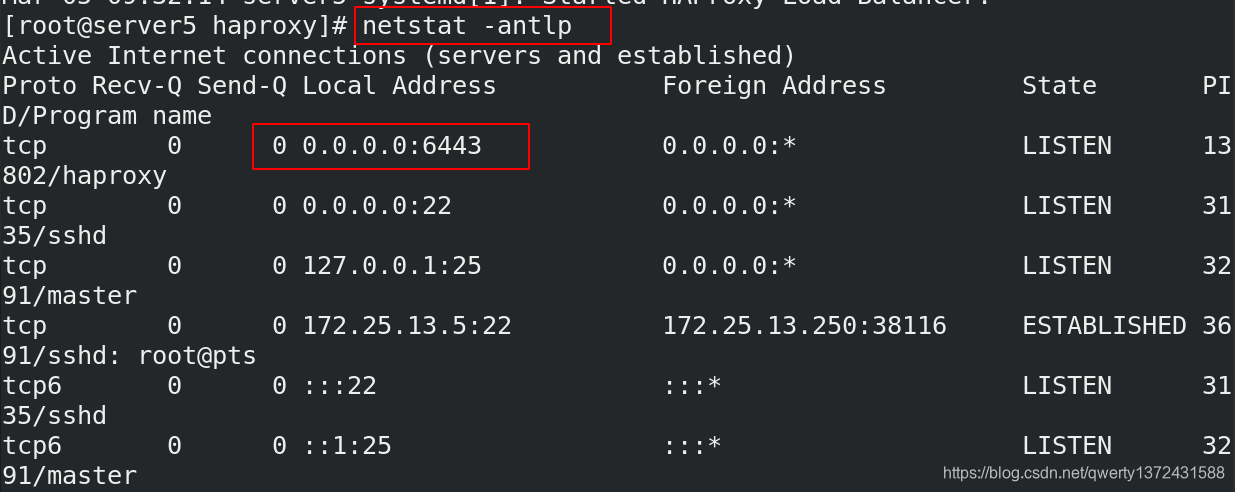

[root@server5 haproxy]# netstat -antlp ##必须看到6443端口起来

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 13802/haproxy
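The edited haproxy.cfg is only visible in a screenshot, so its exact content is not reproduced here. As a rough sketch of what a TCP pass-through to the three API servers might look like, the helper below prints a frontend/backend pair. It assumes the masters are server2 to server4 at 172.25.13.2 to 172.25.13.4, each serving on port 6443; the section names `k8s-apiserver` and `k8s-masters` and the function `render_apiserver_proxy` are hypothetical.

```shell
# render_apiserver_proxy NAME=IP...: print a TCP frontend/backend pair
# that could be appended to haproxy.cfg (illustrative, not the author's file).
render_apiserver_proxy() {
    cat <<'EOF'
frontend k8s-apiserver
    bind *:6443
    mode tcp
    default_backend k8s-masters

backend k8s-masters
    mode tcp
    balance roundrobin
EOF
    # one "server" line per master, with health checking enabled
    for pair in "$@"; do
        printf '    server %s %s:6443 check\n' "${pair%%=*}" "${pair#*=}"
    done
}

render_apiserver_proxy server2=172.25.13.2 server3=172.25.13.3 server4=172.25.13.4
```

With `mode tcp`, haproxy forwards the TLS connection untouched, so the API server certificates stay valid end to end.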

3.2 Set up a VIP for high availability

4. k8s Cluster Deployment

4.1 Docker deployment

[root@server2 ~]# vim /etc/docker/daemon.json ##already configured in earlier setups

{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}

[root@server2 ~]# cat /etc/sysctl.d/docker.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

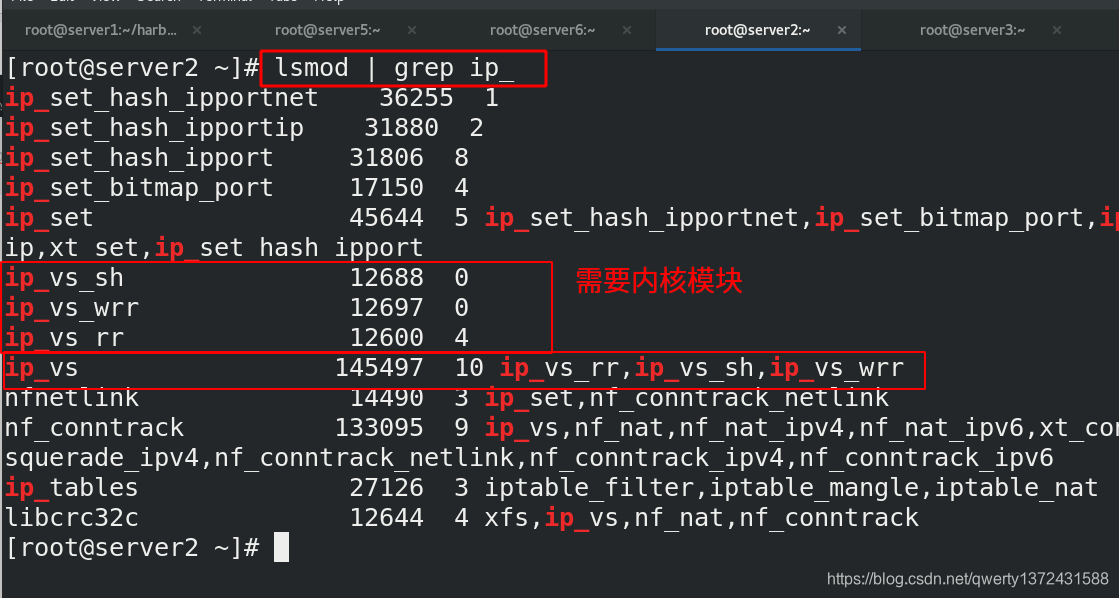

#Load the kernel modules (kube-proxy runs in IPVS mode):

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

[root@server2 ~]# yum install ipvsadm -y
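To see which of the modules listed above still need to be loaded, the `lsmod` output can be compared against the list. A minimal sketch; the variable `REQUIRED_MODULES` and the helper `missing_modules` are hypothetical names.

```shell
REQUIRED_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# missing_modules: read `lsmod` output on stdin and print every required
# module that is not currently loaded, one per line.
missing_modules() {
    # first column of lsmod is the module name; skip the header line
    loaded=$(awk 'NR > 1 {print $1}')
    for mod in $REQUIRED_MODULES; do
        printf '%s\n' "$loaded" | grep -qxF "$mod" || printf '%s\n' "$mod"
    done
}

# Usage (needs root; commented out):
# lsmod | missing_modules | xargs -r -n 1 modprobe
```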

4.2 k8s configuration (three master nodes)

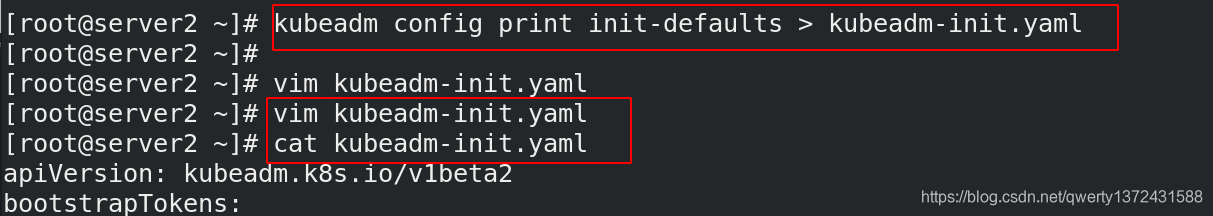

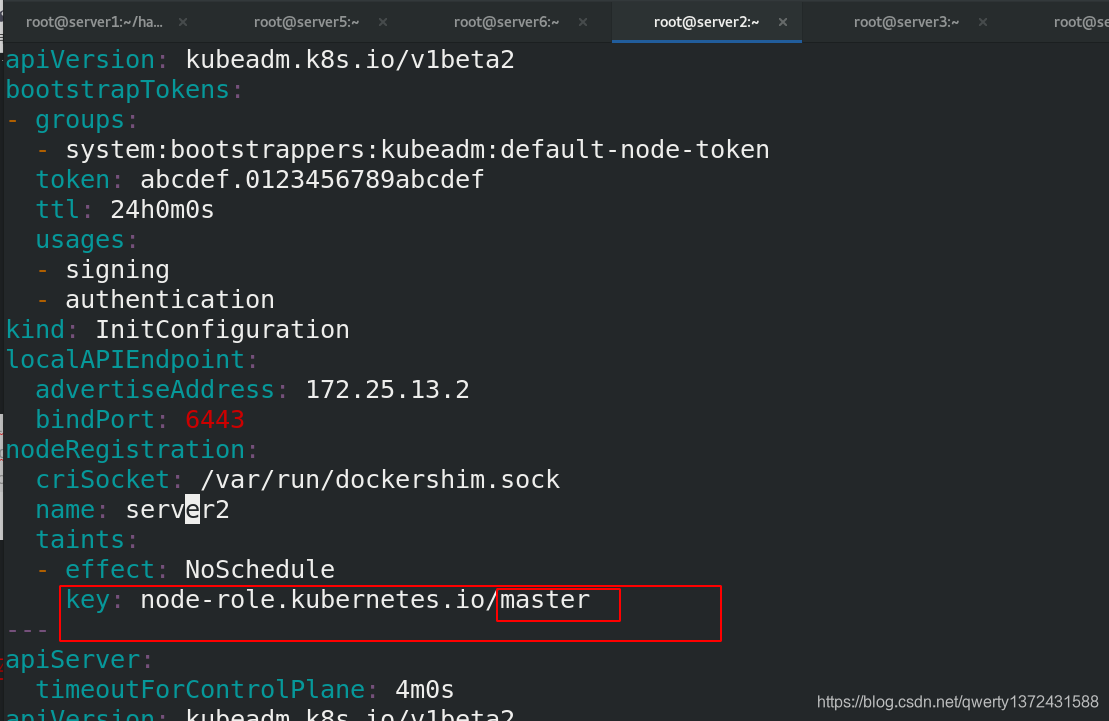

## 1.修改初始化文件

[root@server2 ~]# kubeadm config print init-defaults > kubeadm-init.yaml ##生成init文件

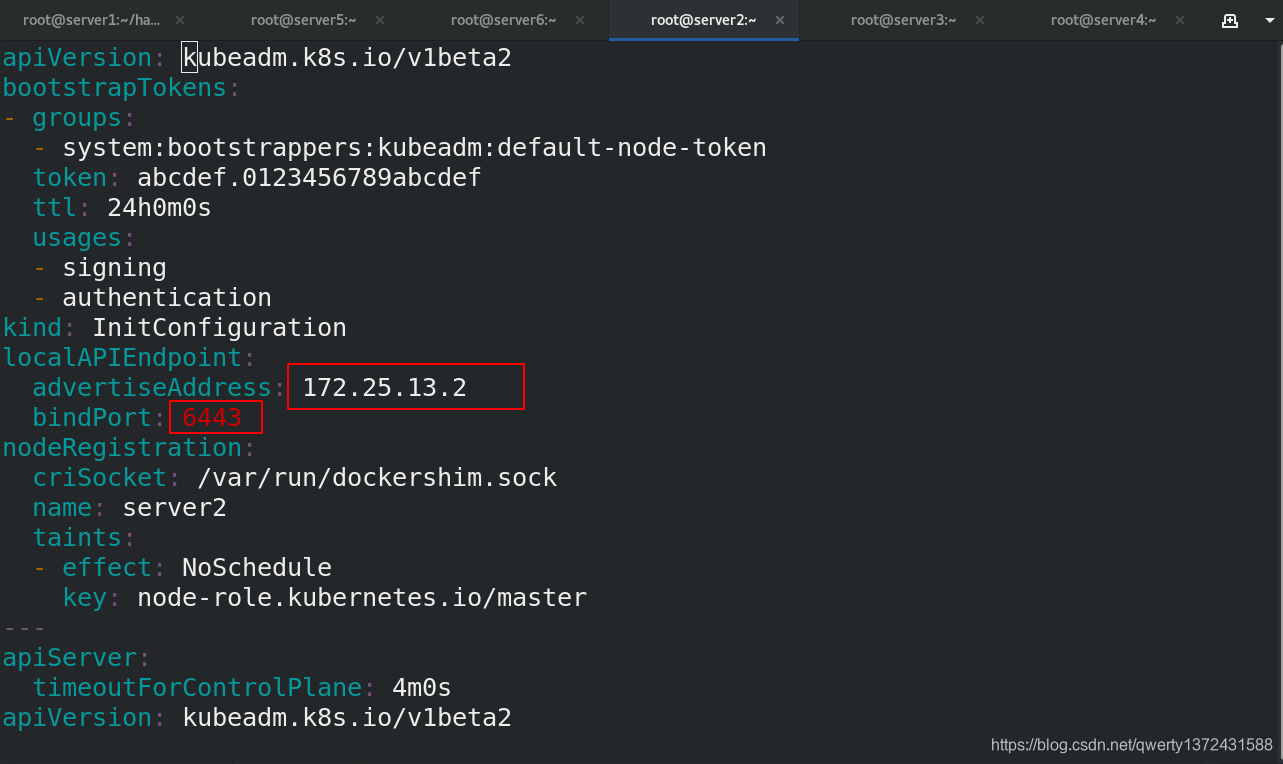

[root@server2 ~]# vim kubeadm-init.yaml ##编辑文件内容如下图

[root@server2 ~]# cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.25.13.2
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: server2
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---

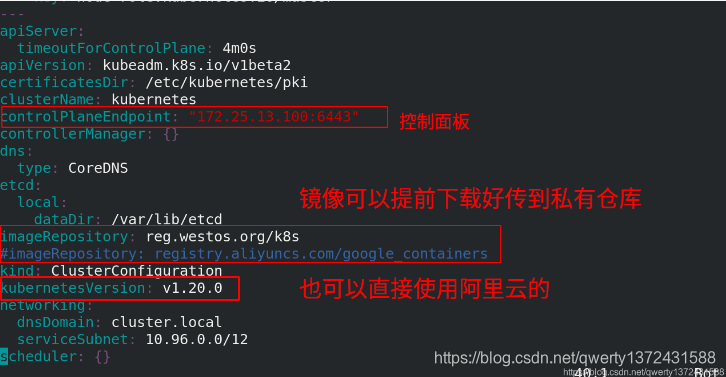

apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "172.25.13.100:6443"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: reg.westos.org/k8s
#imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.0

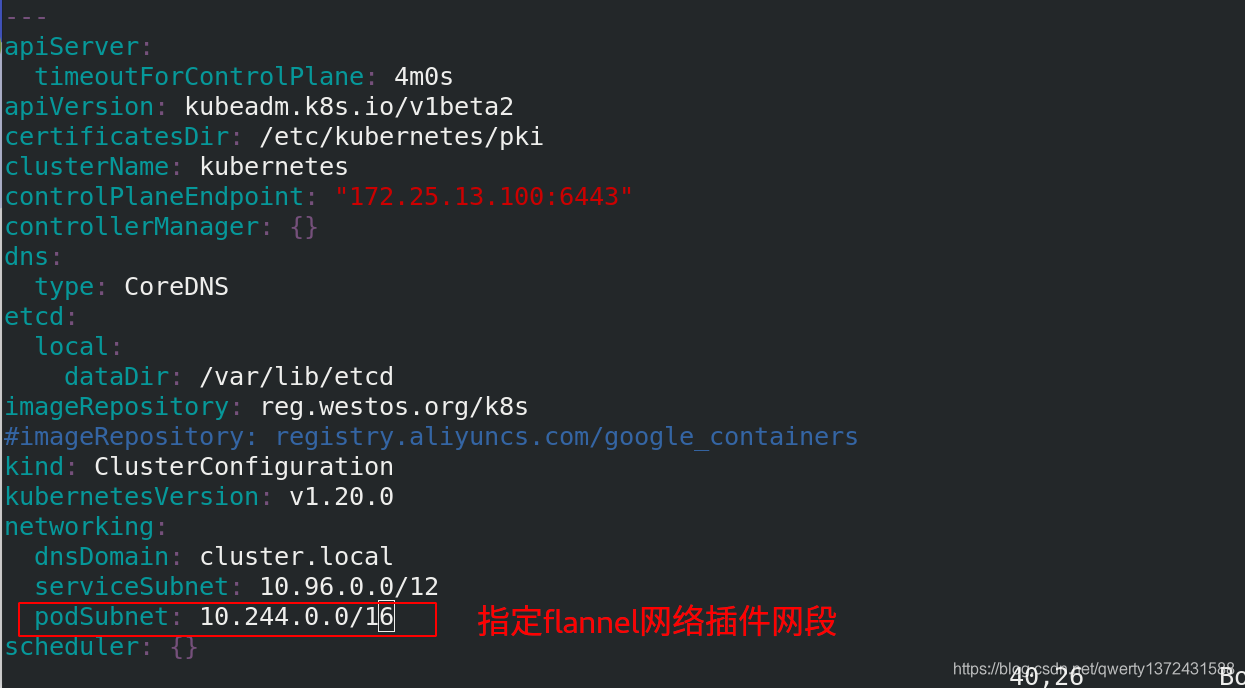

networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}

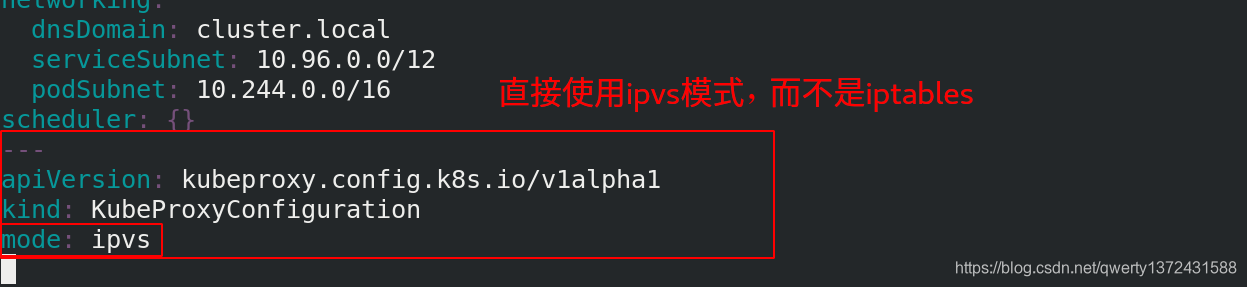

---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs ##kube-proxy uses the IPVS kernel modules

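4.2.1 Modify the initialization file

4.2.2 Delete all images on server2, server3 and server4

## 2. Delete all images on server2, server3 and server4, then deploy the images k8s needs from the local registry

[root@server2 ~]# docker rmi `docker images | grep -v ^REPOSITORY | awk '{print $1":"$2}'`

4.2.3 Upload the required images to the registry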

[root@server1 harbor]# docker pull registry.aliyuncs.com/google_containers/pause:3.2

[root@server1 harbor]# docker pull registry.aliyuncs.com/google_containers/coredns:1.7.0

[root@server1 harbor]# docker pull registry.aliyuncs.com/google_containers/etcd:3.4.13-0

[root@server1 harbor]# docker pull quay.io/coreos/flannel:v0.12.0-amd64

[root@server1 harbor]# docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.20.0

[root@server1 harbor]# docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0

[root@server1 harbor]# docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.0

[root@server1 harbor]# docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.0

[root@server1 harbor]# docker push reg.westos.org/k8s/kube-apiserver:v1.20.0

[root@server1 harbor]# docker push reg.westos.org/k8s/kube-proxy:v1.20.0

[root@server1 harbor]# docker push reg.westos.org/k8s/kube-scheduler:v1.20.0

[root@server1 harbor]# docker push reg.westos.org/k8s/kube-controller-manager:v1.20.0

[root@server1 harbor]# docker push reg.westos.org/k8s/coredns:1.7.0

[root@server1 harbor]# docker push reg.westos.org/k8s/pause:3.2

[root@server1 harbor]# docker push reg.westos.org/k8s/etcd:3.4.13-0

[root@server1 harbor]# docker push reg.westos.org/k8s/flannel:v0.12.0-amd64
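The listing above shows the pulls and the pushes, but a push to reg.westos.org/k8s/... needs a local image carrying that name, so a `docker tag` step presumably sits between the two even though it is not shown. The sketch below prints the full pull/tag/push sequence for review instead of running it; the variable names and the helper `mirror_commands` are hypothetical.

```shell
SRC=registry.aliyuncs.com/google_containers
DST=reg.westos.org/k8s
IMAGES="kube-apiserver:v1.20.0 kube-controller-manager:v1.20.0 kube-scheduler:v1.20.0 kube-proxy:v1.20.0 pause:3.2 etcd:3.4.13-0 coredns:1.7.0"

# mirror_commands: print (not run) the pull/tag/push commands for each image,
# so the list can be inspected before it is piped to `sh`.
mirror_commands() {
    for img in $IMAGES; do
        echo "docker pull $SRC/$img"
        echo "docker tag $SRC/$img $DST/$img"
        echo "docker push $DST/$img"
    done
    # flannel is pulled from quay.io rather than from the aliyun mirror
    echo "docker pull quay.io/coreos/flannel:v0.12.0-amd64"
    echo "docker tag quay.io/coreos/flannel:v0.12.0-amd64 $DST/flannel:v0.12.0-amd64"
    echo "docker push $DST/flannel:v0.12.0-amd64"
}

mirror_commands
```

Once the printed commands look right, `mirror_commands | sh` would execute them on the registry host.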

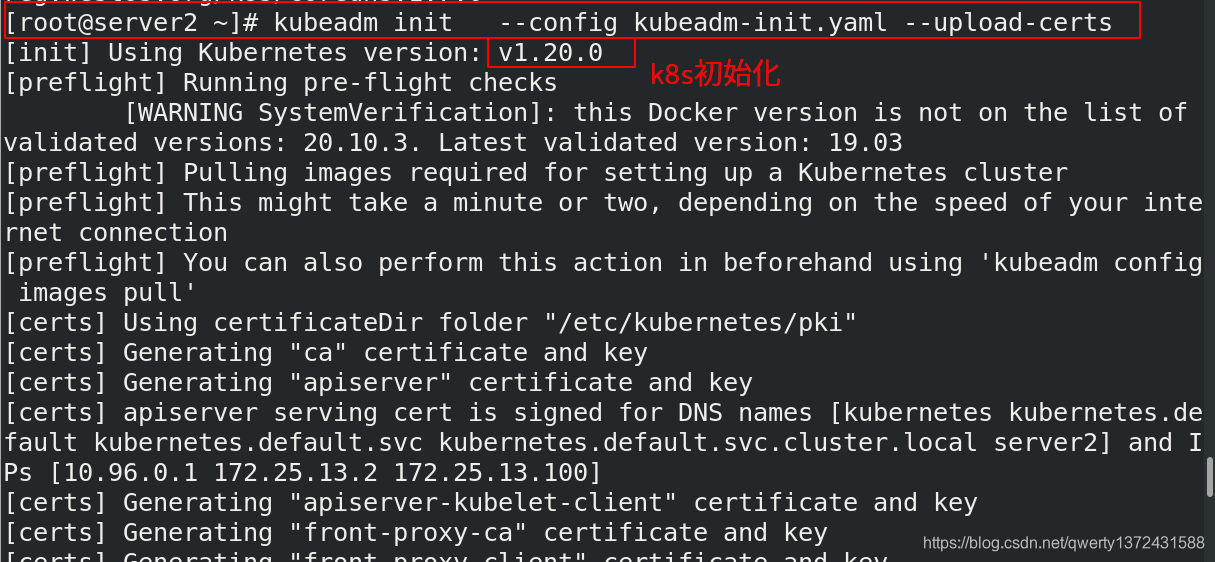

4.2.4 k8s initialization

[root@server2 ~]# kubeadm config images list --config kubeadm-init.yaml ##the image tags follow the kubernetesVersion in the init file; the images pushed above are exactly the versions listed here

reg.westos.org/k8s/kube-apiserver:v1.20.0

reg.westos.org/k8s/kube-controller-manager:v1.20.0

reg.westos.org/k8s/kube-scheduler:v1.20.0

reg.westos.org/k8s/kube-proxy:v1.20.0

reg.westos.org/k8s/pause:3.2

reg.westos.org/k8s/etcd:3.4.13-0

reg.westos.org/k8s/coredns:1.7.0

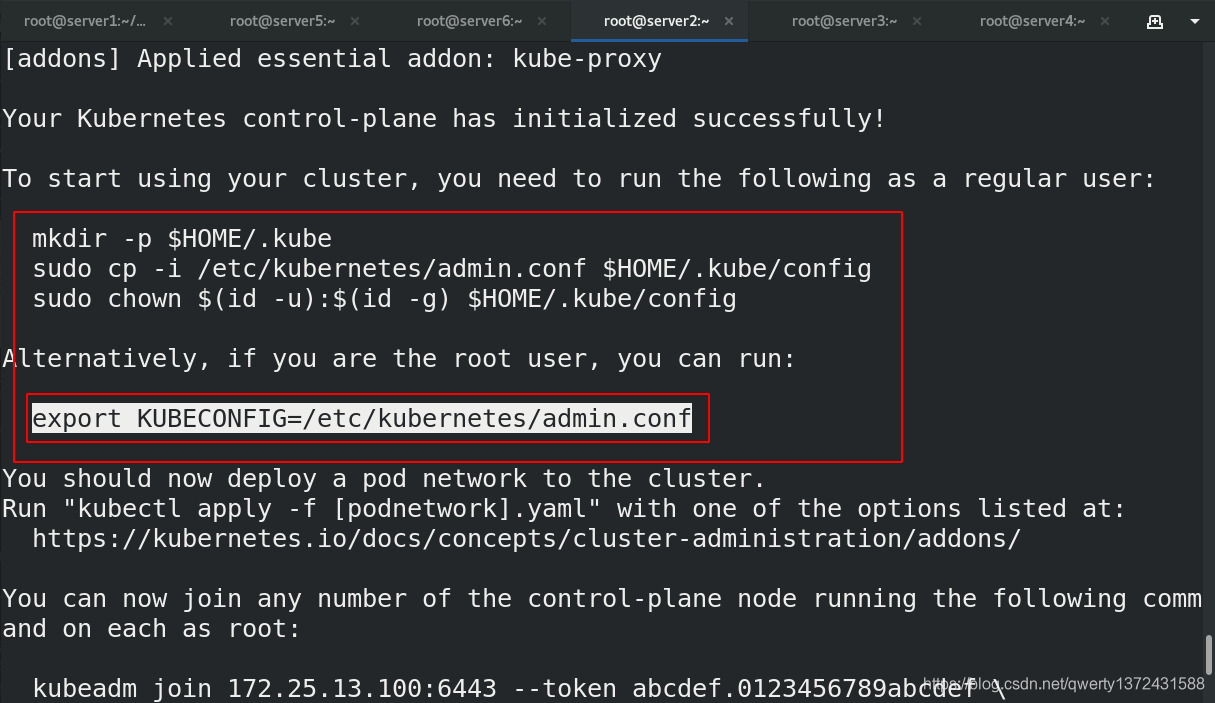

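[root@server2 ~]# kubeadm init --config kubeadm-init.yaml --upload-certs ##initialize the cluster; the output contains two join commands, one that joins a node as a control-plane (master) node and one that joins it as a worker. All three machines are masters and must share certificates, hence --upload-certs.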

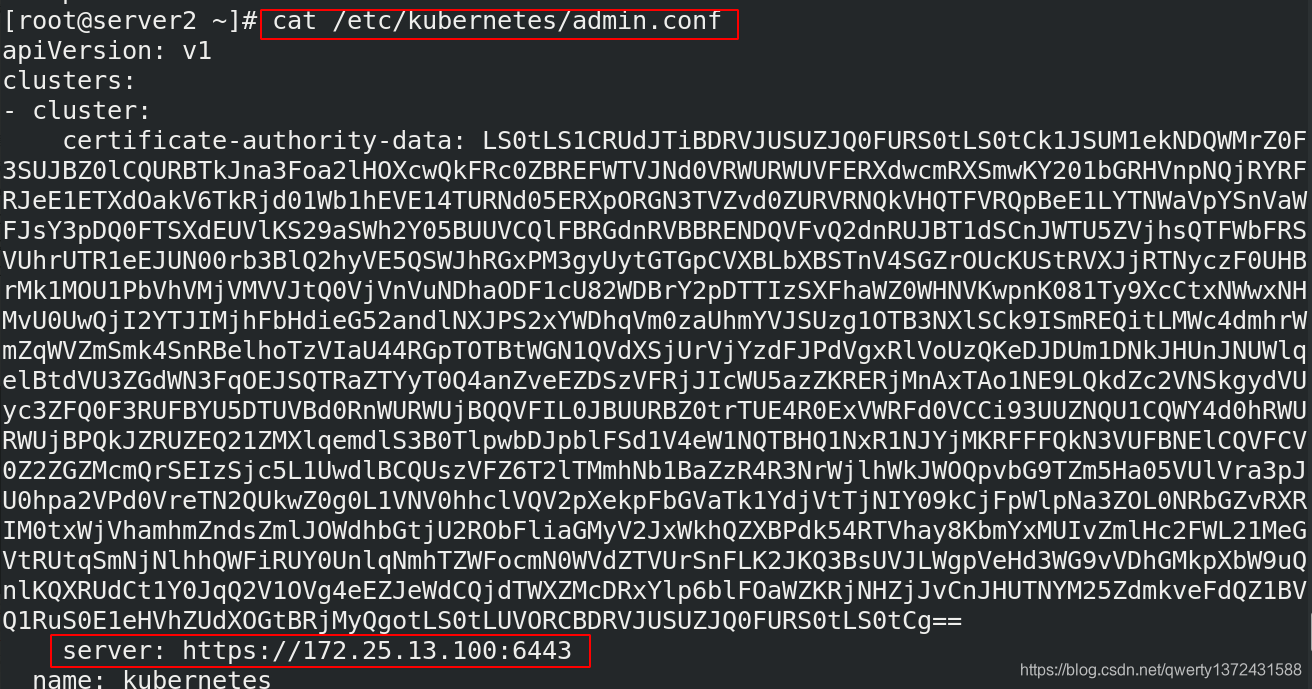

[root@server2 ~]# export KUBECONFIG=/etc/kubernetes/admin.conf ##load the admin credentials, otherwise kubectl fails with the error below (method 1, for the root user; the export can be added to .bashrc)

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

[root@server2 ~]# vim .bashrc ##add the environment variable; do the same on every master node that joins later

[root@server2 ~]# cat .bashrc | grep KUBE

export KUBECONFIG=/etc/kubernetes/admin.conf

[root@server2 ~]# source .bashrc

##(method 2, for non-root users)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

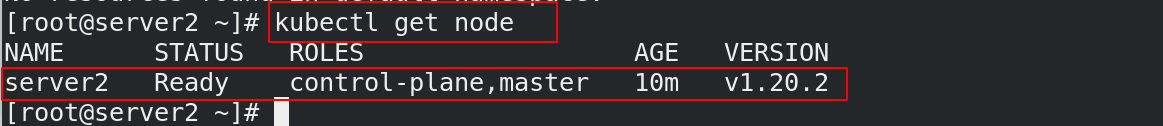

[root@server2 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

server2 Ready control-plane,master 10m v1.20.2

[root@server2 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc ##enable kubectl bash completion

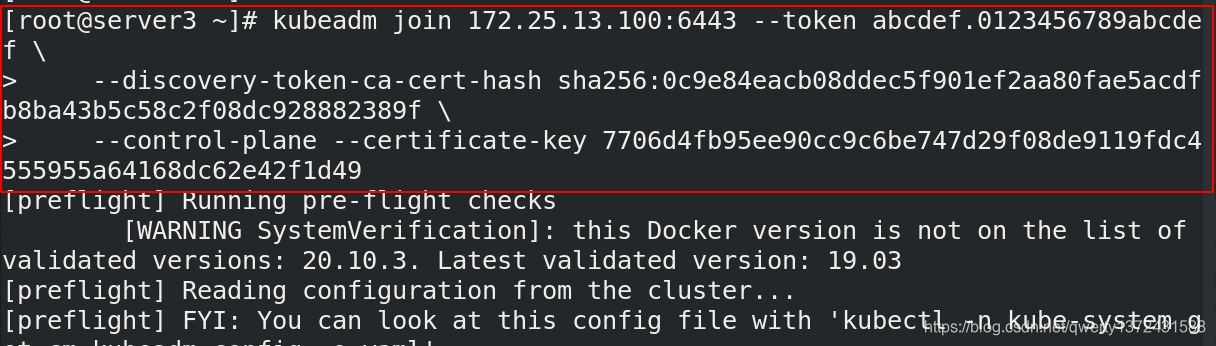

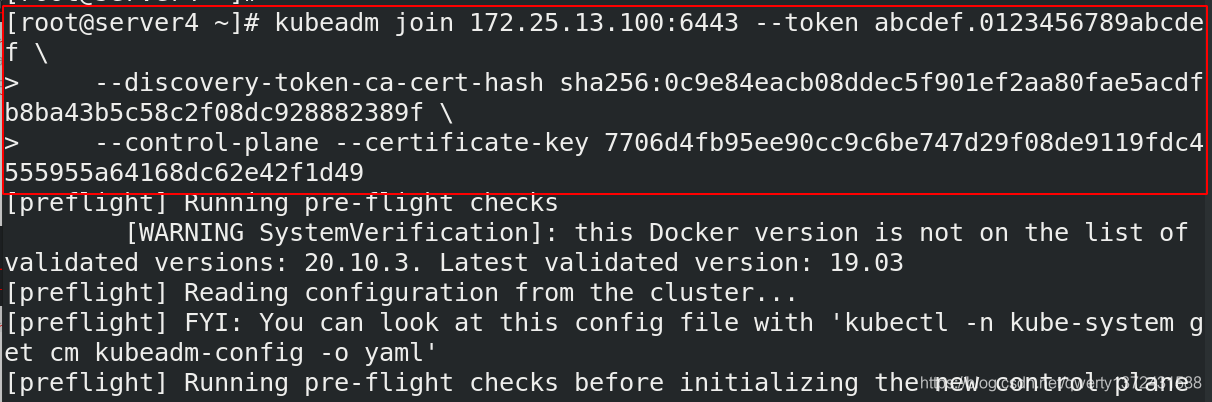

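4.2.5 Node expansion

##server3 and server4 join the cluster as masters, using the control-plane join command printed by kubeadm init

##if a node fails to join, run kubeadm reset again, or check whether /var/lib/etcd contains leftover files and clear them out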
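The actual join command appears only in the screenshot. Its general shape can be sketched as follows, assuming the VIP endpoint 172.25.13.100:6443, the token from kubeadm-init.yaml and the CA hash that the worker join uses later in this walkthrough; the certificate key is printed by `kubeadm init --upload-certs` and is left as a placeholder. The helper `control_plane_join_cmd` is hypothetical and only prints the command.

```shell
# control_plane_join_cmd ENDPOINT TOKEN CA_HASH CERT_KEY
# Print the kubeadm command that joins an additional control-plane node.
control_plane_join_cmd() {
    printf 'kubeadm join %s --token %s \\\n' "$1" "$2"
    printf '    --discovery-token-ca-cert-hash %s \\\n' "$3"
    printf '    --control-plane --certificate-key %s\n' "$4"
}

control_plane_join_cmd 172.25.13.100:6443 abcdef.0123456789abcdef \
    sha256:073e8e7a21f50e2e6bcc46079d7253c22c36f87a9375a4398367c89c39b3cfac \
    '<certificate-key printed by kubeadm init>'
```

The only difference from a worker join is the last line: `--control-plane` together with `--certificate-key` lets the new node download the shared certificates.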

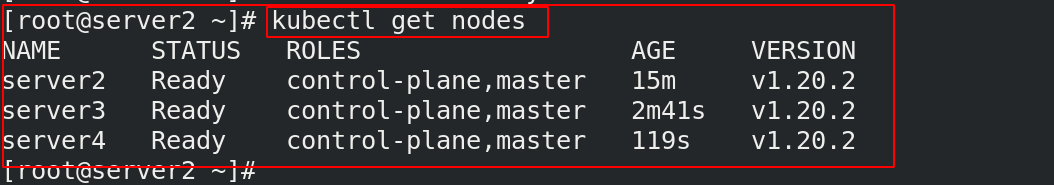

[root@server2 ~]# kubectl get nodes ##all nodes are Ready

NAME STATUS ROLES AGE VERSION

server2 Ready control-plane,master 15m v1.20.2

server3 Ready control-plane,master 2m41s v1.20.2

server4 Ready control-plane,master 119s v1.20.2

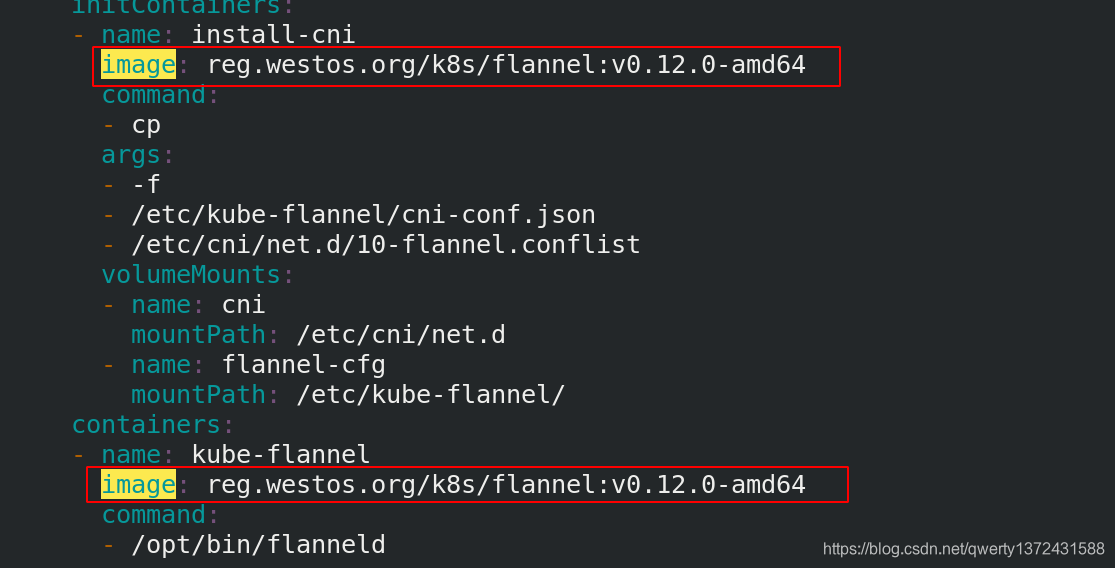

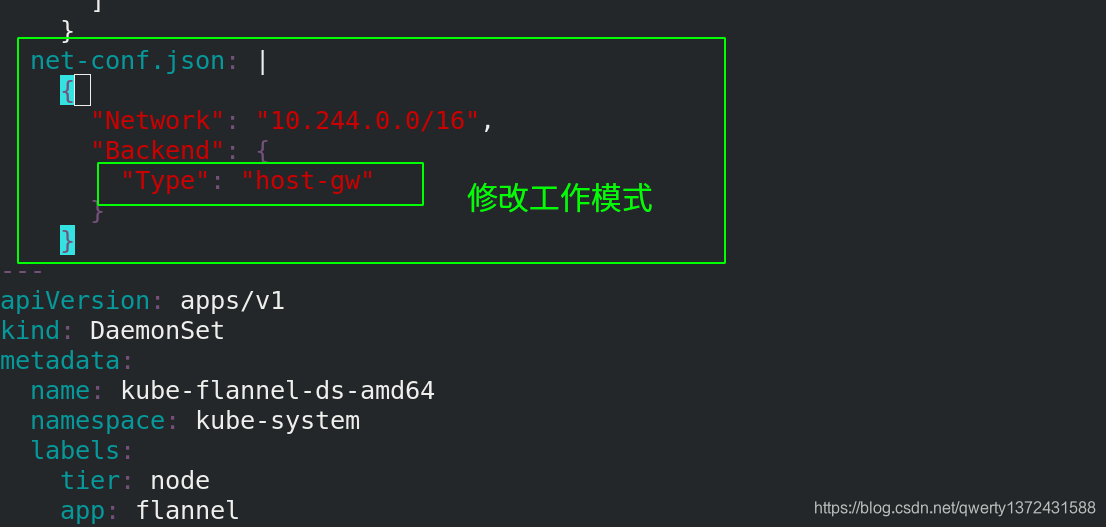

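4.2.6 Install the network add-on (flannel)

[root@server2 ~]# vim kube-flannel.yml

[root@server2 ~]# cat kube-flannel.yml ##this file can be downloaded from the internet; change the image path to the local registry and switch the backend type from vxlan to host-gw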

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
    - min: 0
      max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
  - kind: ServiceAccount
    name: flannel
    namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "host-gw"
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: reg.westos.org/k8s/flannel:v0.12.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: reg.westos.org/k8s/flannel:v0.12.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

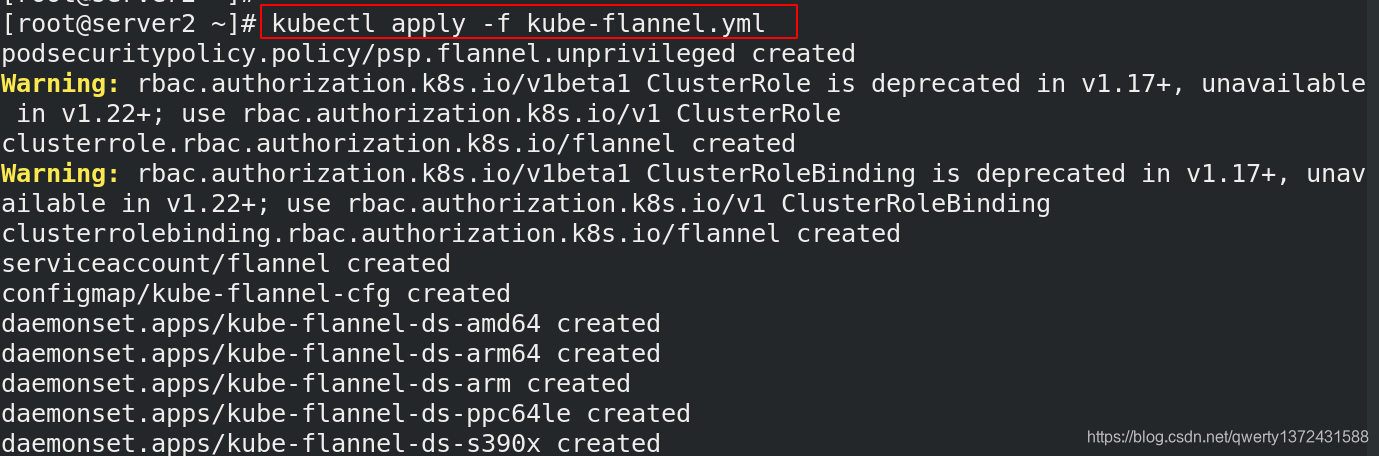

[root@server2 ~]# kubectl apply -f kube-flannel.yml ##deploy flannel
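The flannel "Network" value has to agree with the podSubnet given to kubeadm (both are 10.244.0.0/16 in this walkthrough), and the host-gw backend additionally expects all nodes to sit on the same layer-2 network. A small sketch for checking the first condition, assuming the two files use the key spellings shown above; the helper names are hypothetical.

```shell
# pod_subnet FILE: print the podSubnet value from a kubeadm config file
pod_subnet() {
    awk '$1 == "podSubnet:" {print $2}' "$1"
}

# flannel_network FILE: print the "Network" value from net-conf.json
# inside the flannel manifest
flannel_network() {
    sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# subnets_match KUBEADM_FILE FLANNEL_FILE: succeed only if both values
# were found and are identical
subnets_match() {
    a=$(pod_subnet "$1")
    b=$(flannel_network "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Usage (file names as used in this walkthrough):
# subnets_match kubeadm-init.yaml kube-flannel.yml || echo "podSubnet and flannel Network differ"
```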

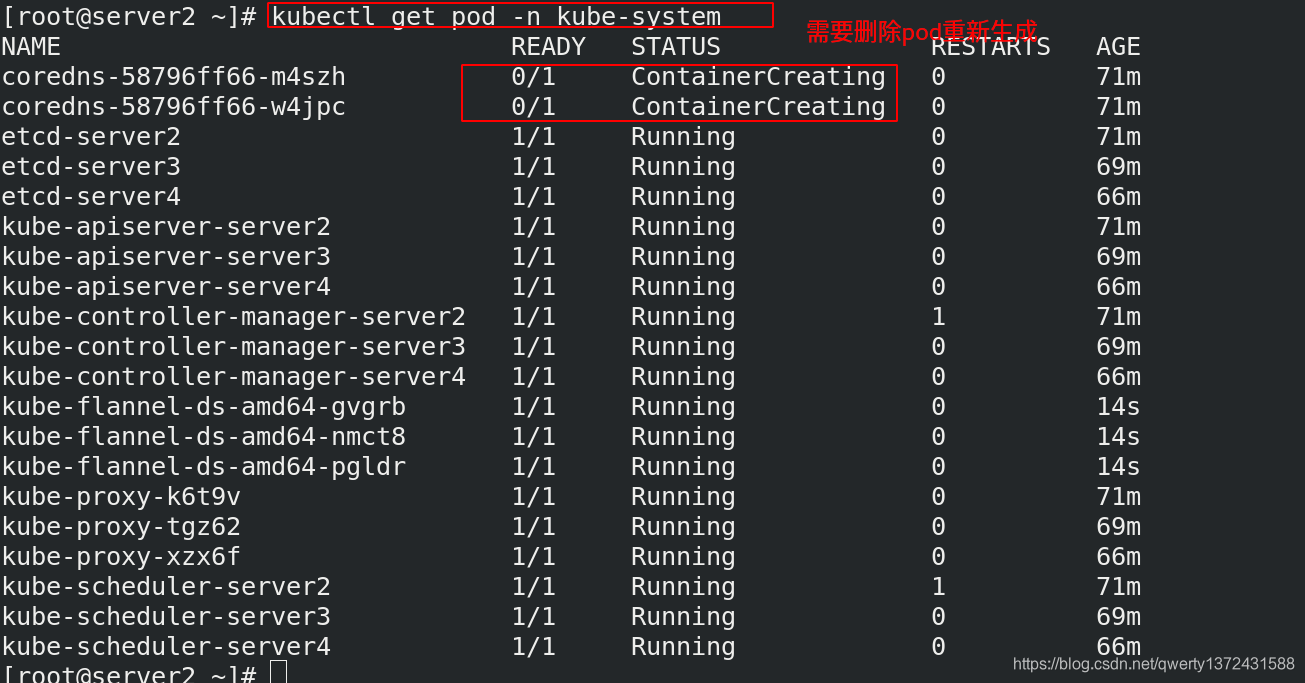

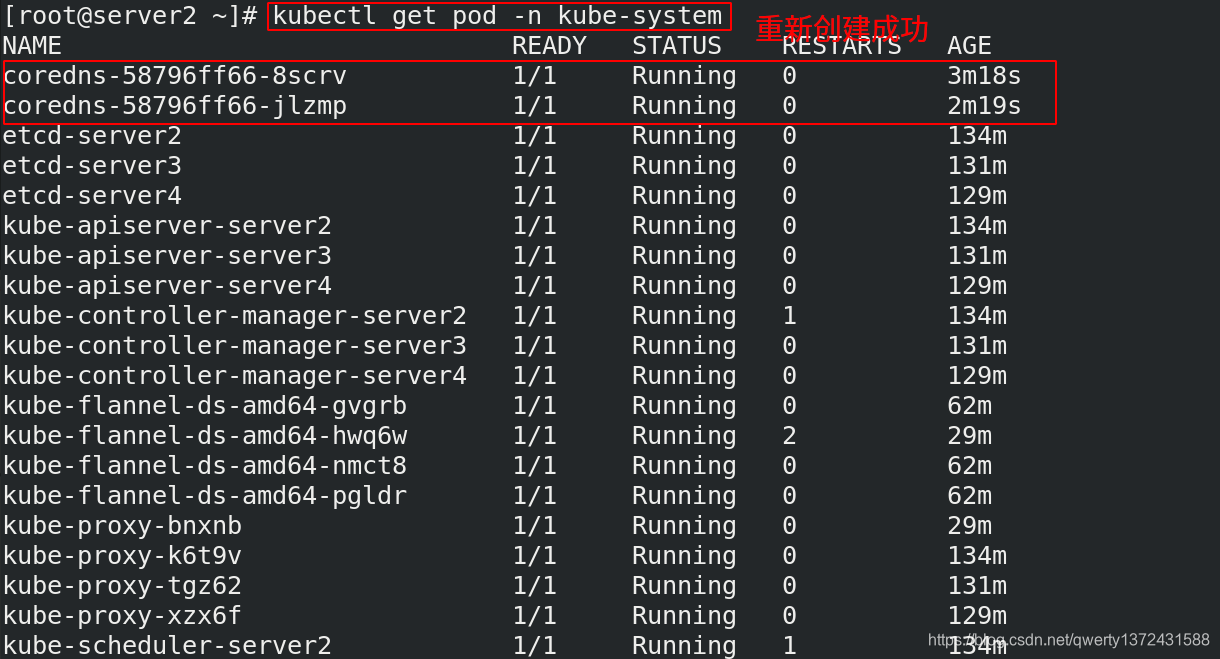

[root@server2 ~]# kubectl -n kube-system get pod | grep coredns | awk '{system("kubectl -n kube-system delete pod "$1"")}' ##delete the coredns pods so that they are recreated (rescheduling can be slow)

[root@server2 ~]# kubectl -n kube-system delete pod coredns-58796ff66-bmztk --force ##force-delete a pod that cannot be removed normally
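The grep/awk pipeline above selects the CoreDNS pods by name. The same selection can be written as a small filter that only prints the names, which makes it easy to inspect the list before deleting anything. This is a sketch; the helper `coredns_pods` is hypothetical.

```shell
# coredns_pods: read `kubectl -n kube-system get pod` output on stdin
# and print the names of the CoreDNS pods, one per line.
coredns_pods() {
    # skip the header line, keep rows whose name starts with "coredns-"
    awk 'NR > 1 && $1 ~ /^coredns-/ {print $1}'
}

# Usage (commented out because it needs a running cluster):
# kubectl -n kube-system get pod | coredns_pods | xargs -r kubectl -n kube-system delete pod
# Alternative, assuming the default k8s-app=kube-dns label that kubeadm puts on CoreDNS:
# kubectl -n kube-system delete pod -l k8s-app=kube-dns
```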

4.3 k8s configuration (worker node; requires docker and k8s)

4.3.1 Preliminary configuration

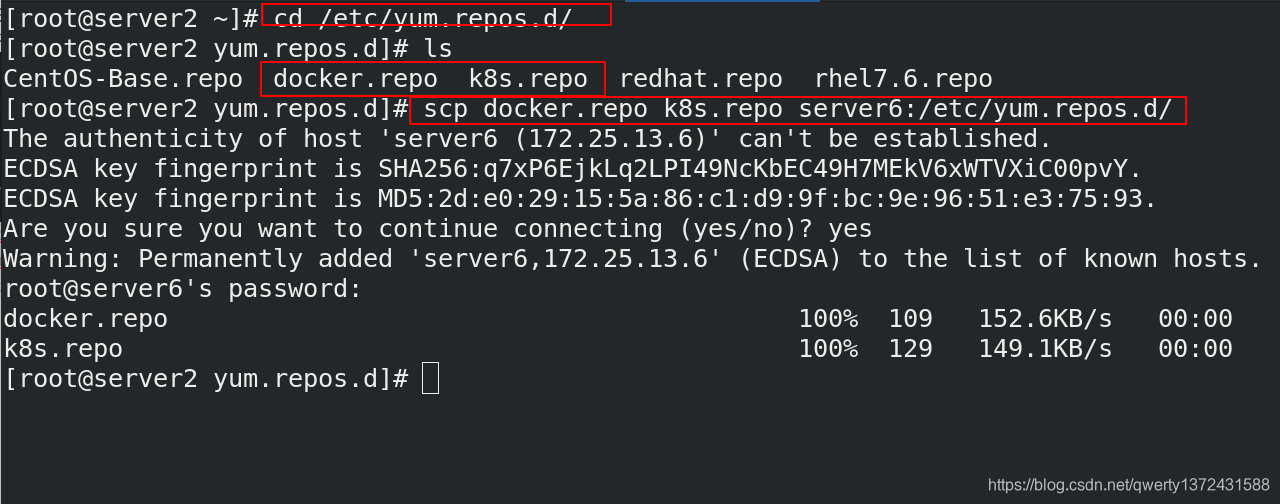

[root@server2 ~]# cd /etc/yum.repos.d/

[root@server2 yum.repos.d]# ls

CentOS-Base.repo docker.repo k8s.repo redhat.repo rhel7.6.repo

[root@server2 yum.repos.d]# scp docker.repo k8s.repo server6:/etc/yum.repos.d/

[root@server6 yum.repos.d]# cat k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

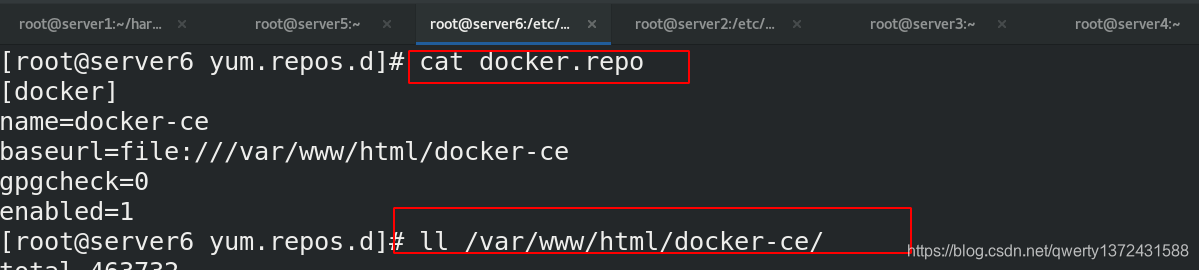

[root@server6 yum.repos.d]# cat docker.repo ##the modified repository file

[docker]

name=docker-ce

baseurl=file:///var/www/html/docker-ce

gpgcheck=0

enabled=1

[root@server6 yum.repos.d]# ll /var/www/html/docker-ce/ ##the pub/docs/docker/docker-ce directory

total 463732

-rwxr-xr-x 1 root root 24250280 Mar 6 09:28 containerd.io-1.2.10-3.2.el7.x86_64.rpm

-rwxr-xr-x 1 root root 24416076 Mar 6 09:28 containerd.io-1.2.13-3.1.el7.x86_64.rpm

-rwxr-xr-x 1 root root 26675948 Mar 6 09:28 containerd.io-1.2.13-3.2.el7.x86_64.rpm

-rwxr-xr-x 1 root root 30374084 Mar 6 09:28 containerd.io-1.3.7-3.1.el7.x86_64.rpm

-rwxr-xr-x 1 root root 38144 Mar 6 09:28 container-selinux-2.77-1.el7.noarch.rpm

-rwxr-xr-x 1 root root 22238716 Mar 6 09:28 docker-ce-18.09.9-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 25278520 Mar 6 09:28 docker-ce-19.03.11-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 25285728 Mar 6 09:28 docker-ce-19.03.12-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 25268380 Mar 6 09:28 docker-ce-19.03.13-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 25671976 Mar 6 09:28 docker-ce-19.03.5-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 25697324 Mar 6 09:28 docker-ce-19.03.8-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 16409108 Mar 6 09:28 docker-ce-cli-18.09.9-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 40054796 Mar 6 09:28 docker-ce-cli-19.03.11-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 40044364 Mar 6 09:28 docker-ce-cli-19.03.12-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 40247476 Mar 6 09:28 docker-ce-cli-19.03.13-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 41396672 Mar 6 09:28 docker-ce-cli-19.03.5-3.el7.x86_64.rpm

-rwxr-xr-x 1 root root 41468684 Mar 6 09:28 docker-ce-cli-19.03.8-3.el7.x86_64.rpm

drwxr-xr-x 2 root root 4096 Mar 6 09:28 repodata

[root@server6 yum.repos.d]# yum repolist ##load the repositories

Loaded plugins: product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

repo id repo name status

docker docker-ce 17

kubernetes Kubernetes 633

rhel7.6 rhel7.6 5,152

repolist: 5,802

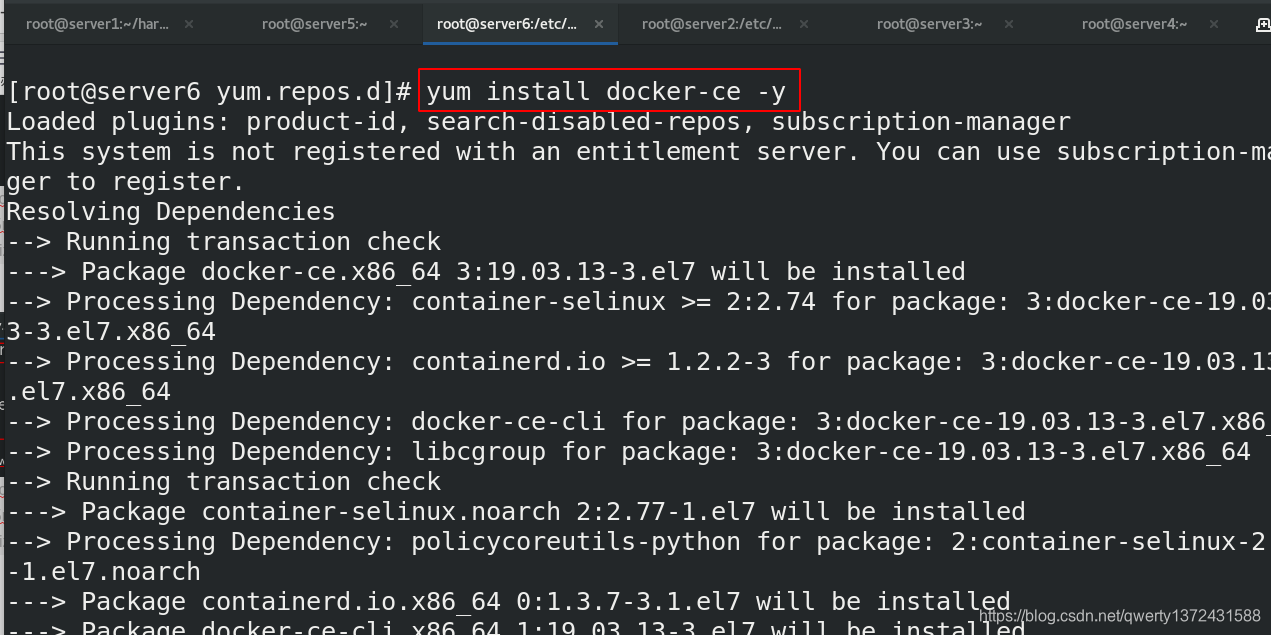

4.3.2 Install docker and copy the required files

## 2. Install docker and copy the required files

[root@server6 yum.repos.d]# yum install docker-ce -y

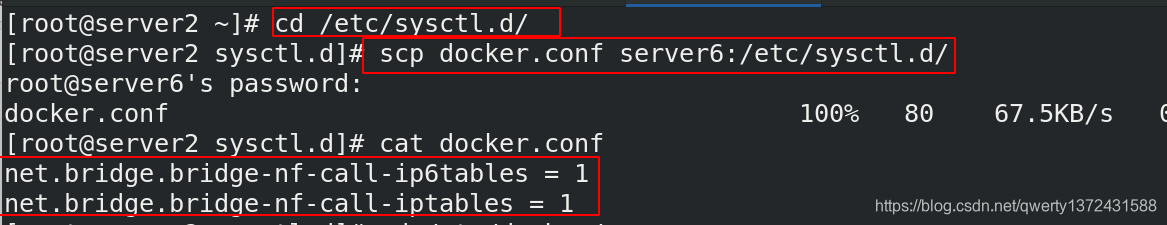

[root@server2 ~]# cd /etc/sysctl.d/

[root@server2 sysctl.d]# scp docker.conf server6:/etc/sysctl.d/

[root@server2 sysctl.d]# cat docker.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

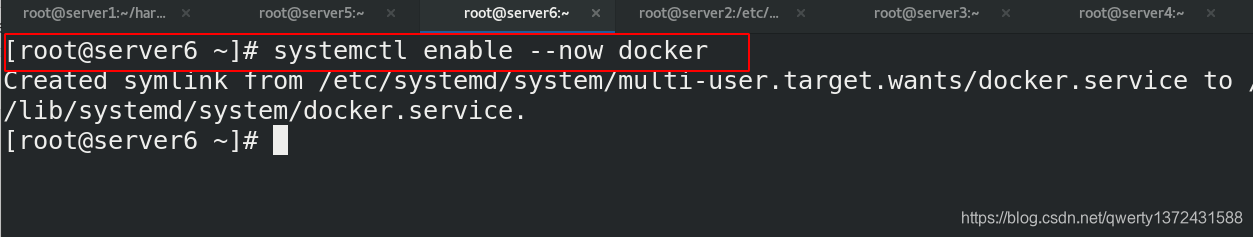

[root@server6 ~]# systemctl enable --now docker ##start docker

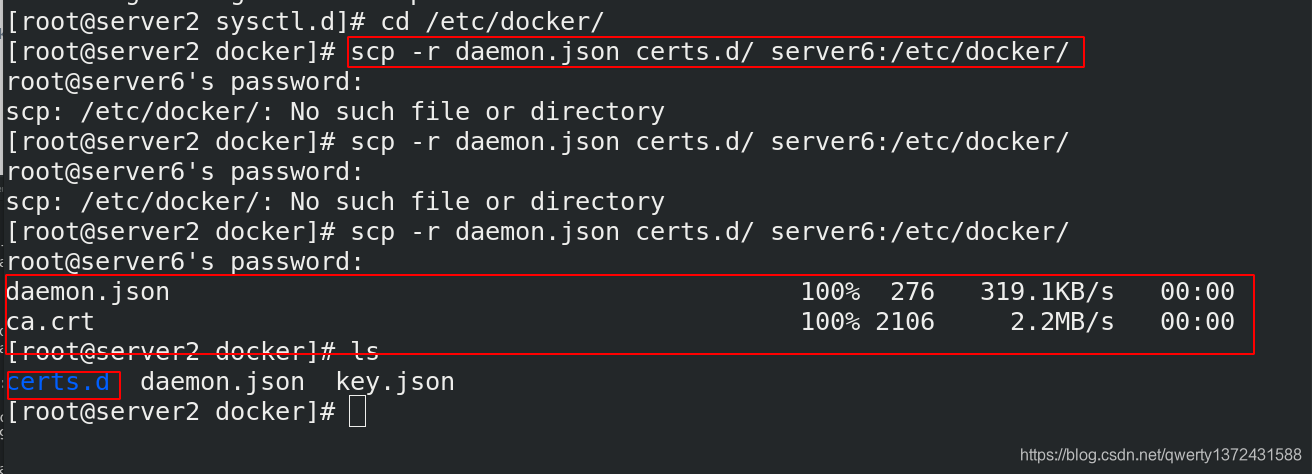

[root@server2 sysctl.d]# cd /etc/docker/

[root@server2 docker]# ls

certs.d daemon.json key.json

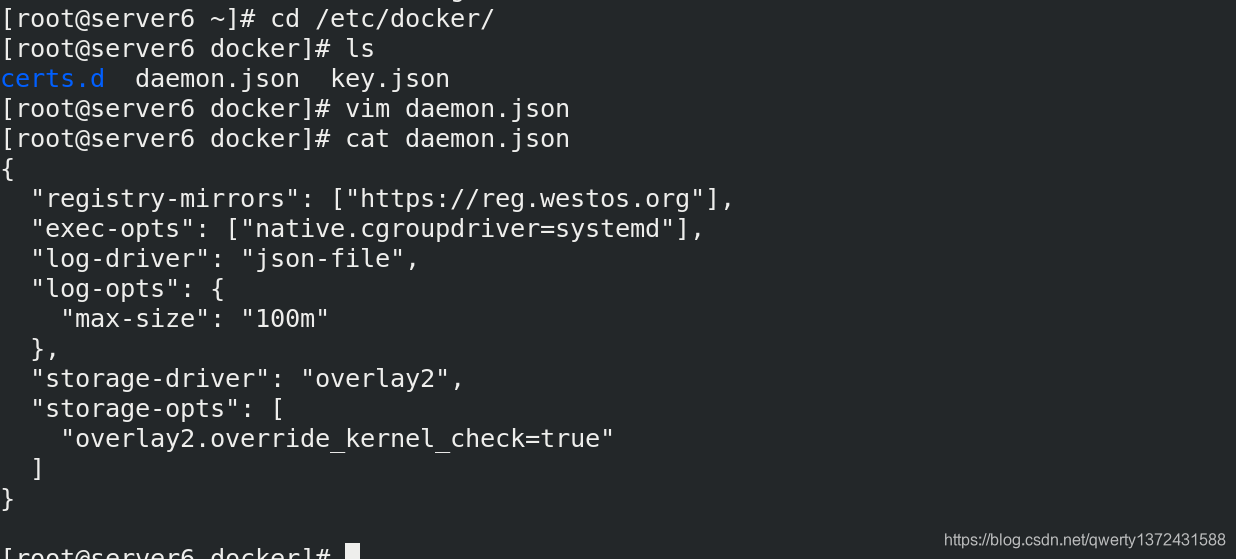

[root@server2 docker]# scp -r daemon.json certs.d/ server6:/etc/docker/ ##copy the configuration and the certificates

4.3.3 Configure name resolution

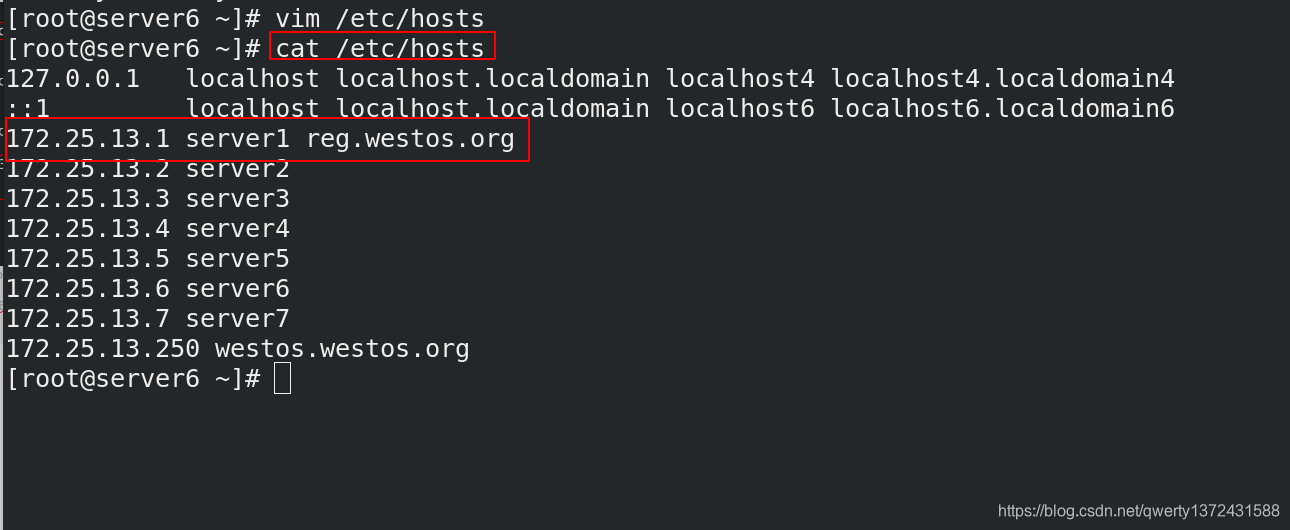

[root@server6 ~]# vim /etc/hosts

[root@server6 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.13.1 server1 reg.westos.org ##name resolution for the registry

172.25.13.2 server2

172.25.13.3 server3

172.25.13.4 server4

172.25.13.5 server5

172.25.13.6 server6

172.25.13.7 server7

172.25.13.250 westos.westos.org

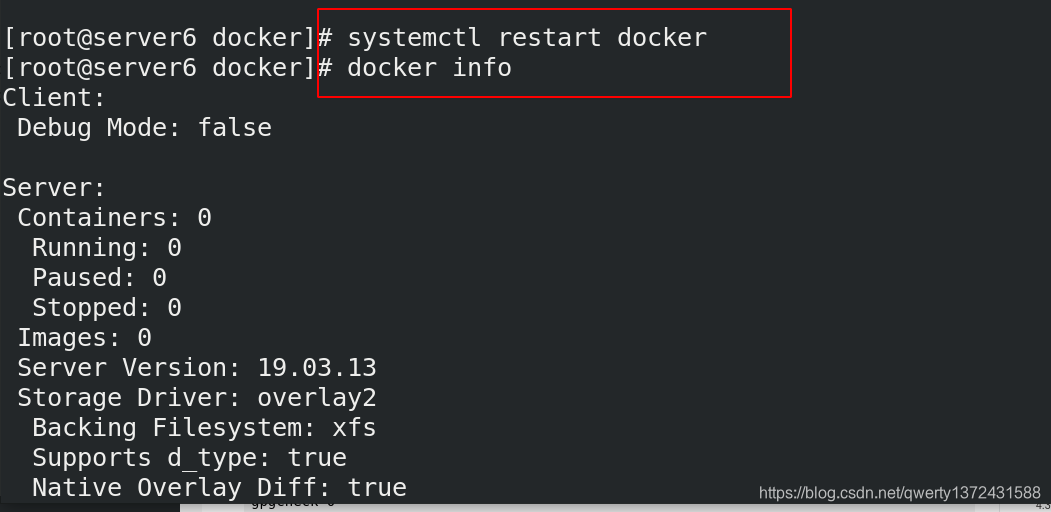

[root@server6 docker]# systemctl restart docker

[root@server6 docker]# docker info

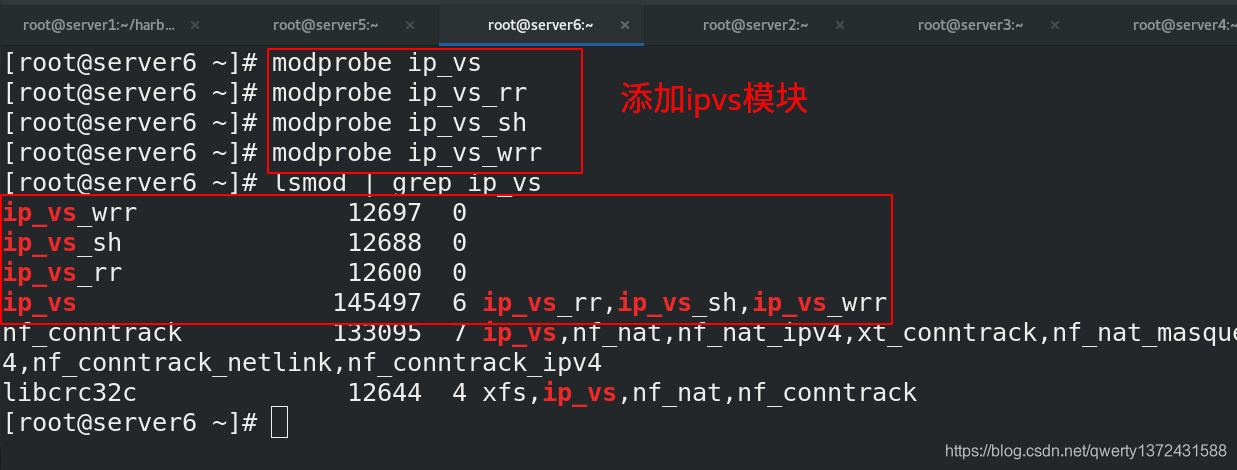

4.3.4 Install ipvsadm

[root@server6 ~]# yum install -y ipvsadm ##install ipvsadm (the ip_vs kernel modules are also required)

[root@server6 ~]# modprobe ip_vs ##load the ip_vs module

[root@server6 ~]# modprobe ip_vs_rr

[root@server6 ~]# modprobe ip_vs_sh

[root@server6 ~]# modprobe ip_vs_wrr

[root@server6 ~]# lsmod | grep ip_vs

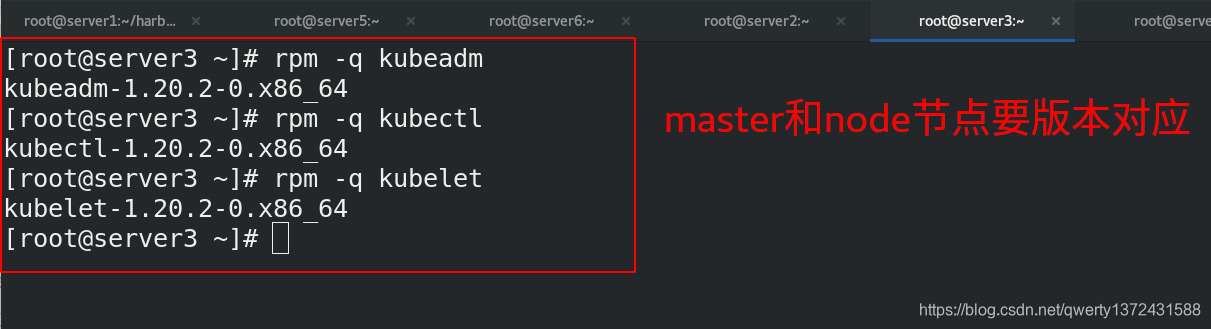

4.3.5 节点配置kubeadm,kubectl,kubelet

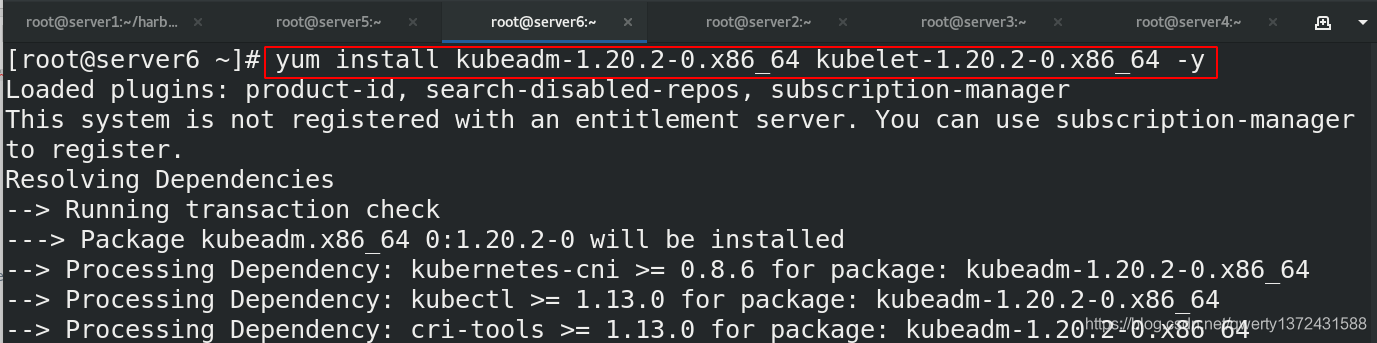

[root@server6 ~]# yum install kubeadm-1.20.2-0.x86_64 kubelet-1.20.2-0.x86_64 -y ##安装k8s组件

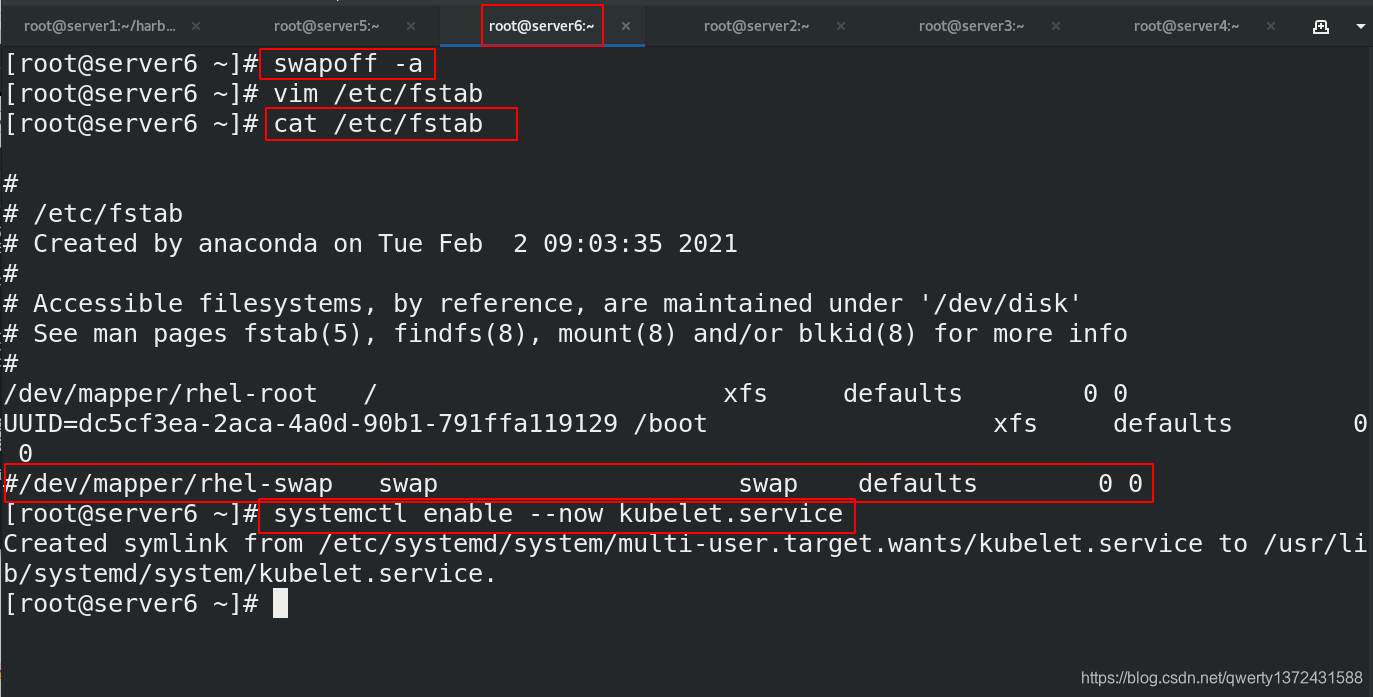

##disable the swap partition

[root@server6 ~]# swapoff -a

[root@server6 ~]# vim /etc/fstab

[root@server6 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Feb 2 09:03:35 2021

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/rhel-root / xfs defaults 0 0

UUID=dc5cf3ea-2aca-4a0d-90b1-791ffa119129 /boot xfs defaults 0 0

#/dev/mapper/rhel-swap swap swap defaults 0 0
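Instead of editing /etc/fstab by hand, the swap entry can be commented out with a filter. A minimal sketch, assuming the standard fstab layout in which the third field is the filesystem type; the helper `comment_swap_entries` is hypothetical.

```shell
# comment_swap_entries: read fstab content on stdin and comment out every
# active line whose filesystem type (third field) is swap.
# Lines that are already comments pass through unchanged.
comment_swap_entries() {
    awk '!/^[[:space:]]*#/ && $3 == "swap" {print "#" $0; next} {print}'
}

# Usage (needs root; keeps a backup first):
# cp /etc/fstab /etc/fstab.bak && comment_swap_entries < /etc/fstab.bak > /etc/fstab
```

`swapoff -a` only disables swap until the next boot, which is why the fstab entry has to be disabled as well.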

##start the kubelet service

[root@server6 ~]# systemctl enable --now kubelet.service

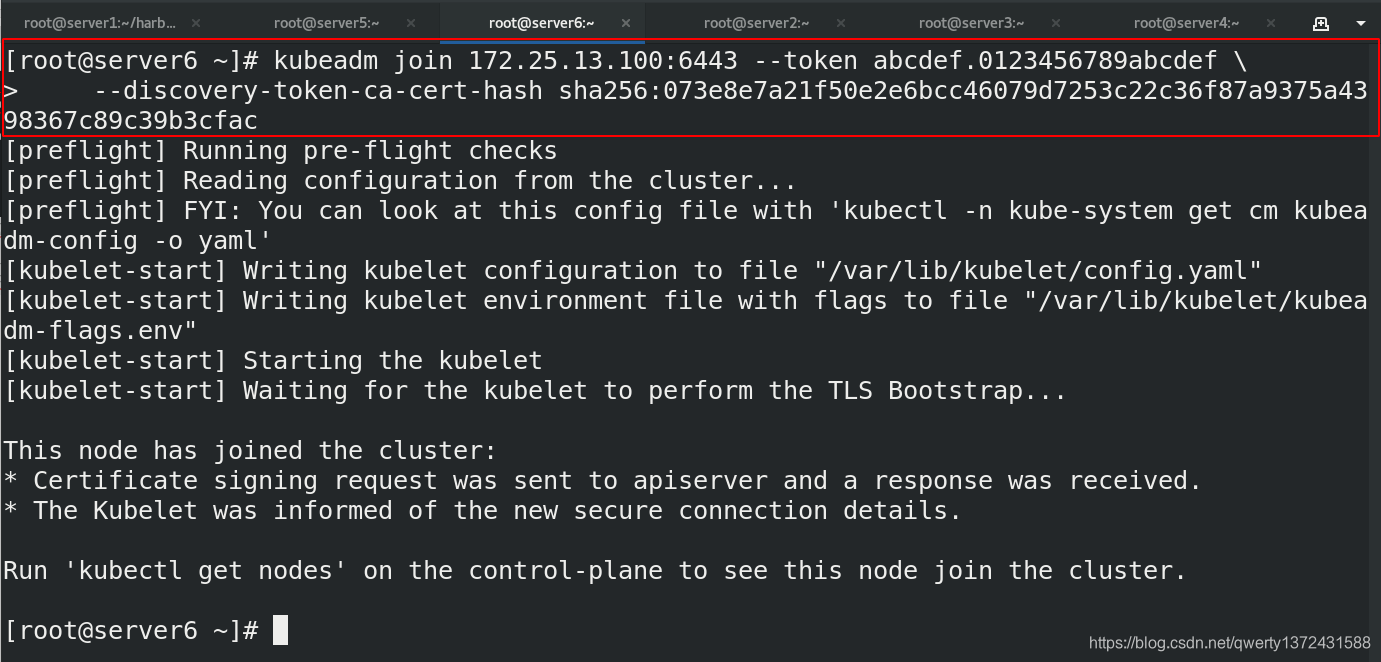

4.3.6 Node expansion

[root@server6 ~]# kubeadm join 172.25.13.100:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:073e8e7a21f50e2e6bcc46079d7253c22c36f87a9375a4398367c89c39b3cfac

##the command that joins the node to the cluster

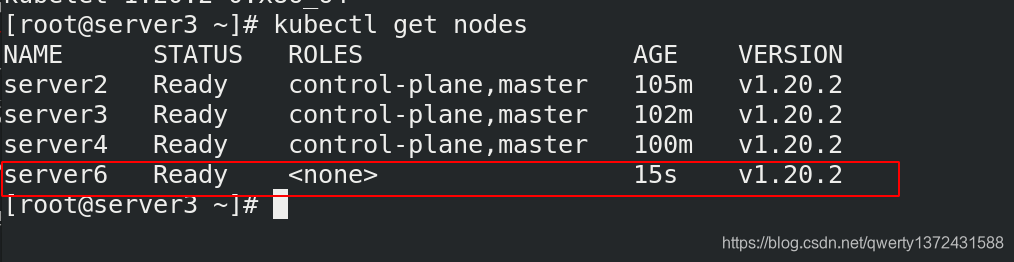

[root@server3 ~]# kubectl get nodes ##check on a master that the node was added

NAME STATUS ROLES AGE VERSION

server2 Ready control-plane,master 105m v1.20.2

server3 Ready control-plane,master 102m v1.20.2

server4 Ready control-plane,master 100m v1.20.2

server6 Ready <none> 15s v1.20.2

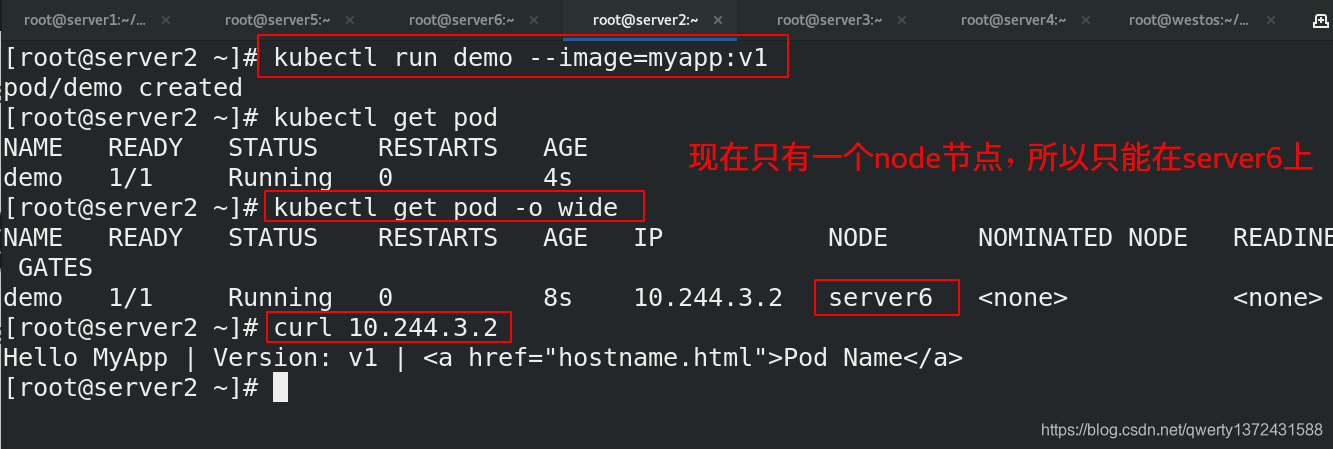

4.4 Test

[root@server2 ~]# kubectl run demo --image=myapp:v1

pod/demo created

[root@server2 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 0 4s

[root@server2 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demo 1/1 Running 0 8s 10.244.3.2 server6 <none> <none>

[root@server2 ~]# curl 10.244.3.2

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

5. 使用pacemaker搭建k8s的高可用(haproxy的高可用)

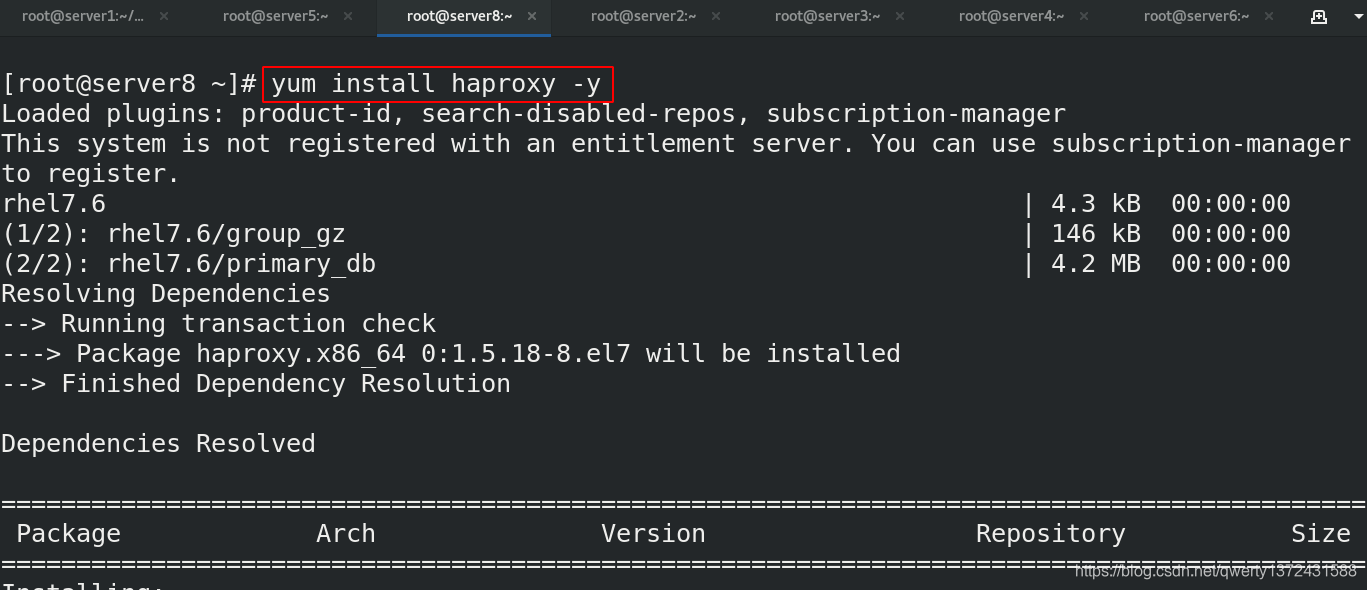

5.1 安装haproxy

## 1. 安装haproxy

[root@server8 ~]# yum install haproxy -y ##新开虚拟机安装haproxy

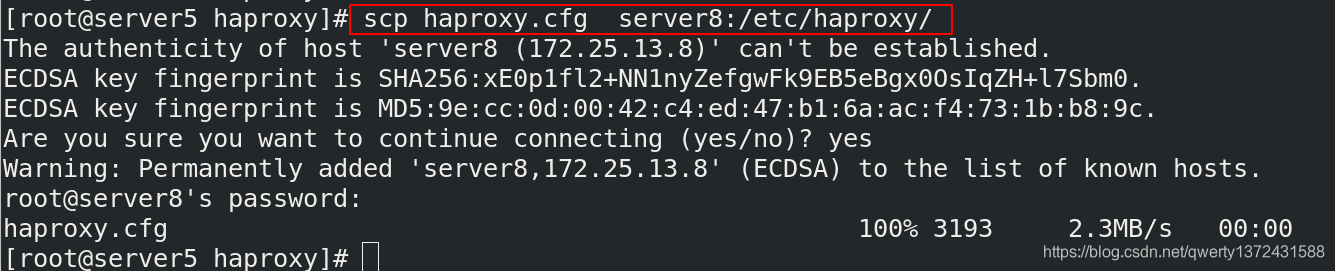

[root@server5 haproxy]# scp haproxy.cfg server8:/etc/haproxy/ ##将之前server5配置的haproxy文件发送,并作出相应修改(一定要做解析)

5.2 安装pacemaker

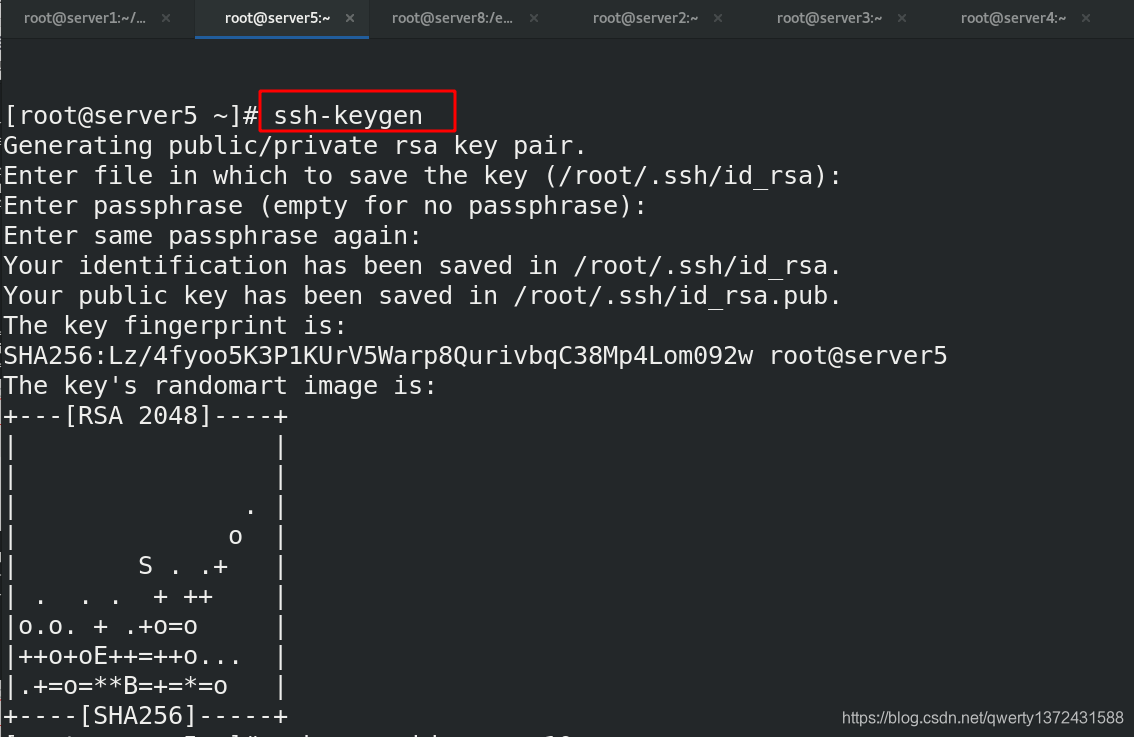

## 1. 免密

[root@server5 ~]# ssh-keygen ##做免密

[root@server5 ~]# ssh-copy-id server8 ##做免密

## 2.安装pacemaker

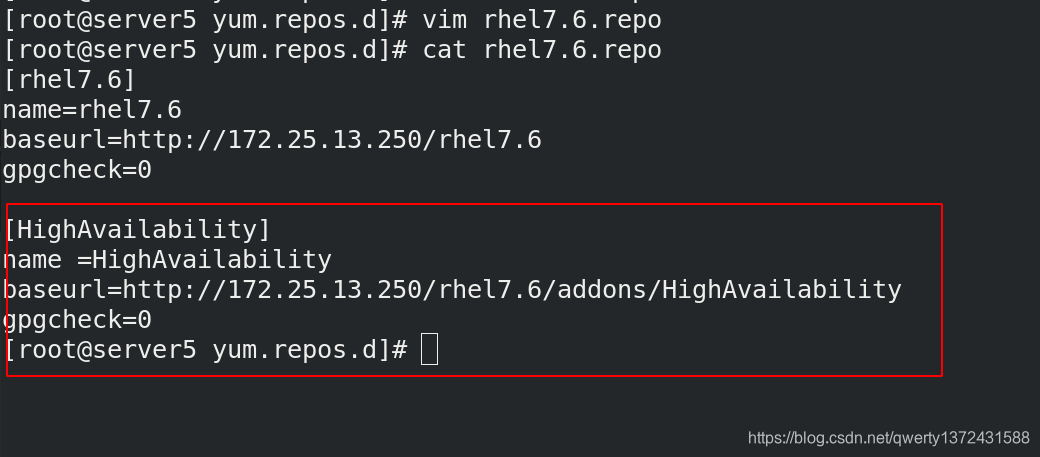

[root@server5 yum.repos.d]# vim rhel7.6.repo

[root@server5 yum.repos.d]# cat rhel7.6.repo

[rhel7.6]

name=rhel7.6

baseurl=http://172.25.13.250/rhel7.6

gpgcheck=0

[HighAvailability] ##high-availability add-on

name =HighAvailability

baseurl=http://172.25.13.250/rhel7.6/addons/HighAvailability

gpgcheck=0

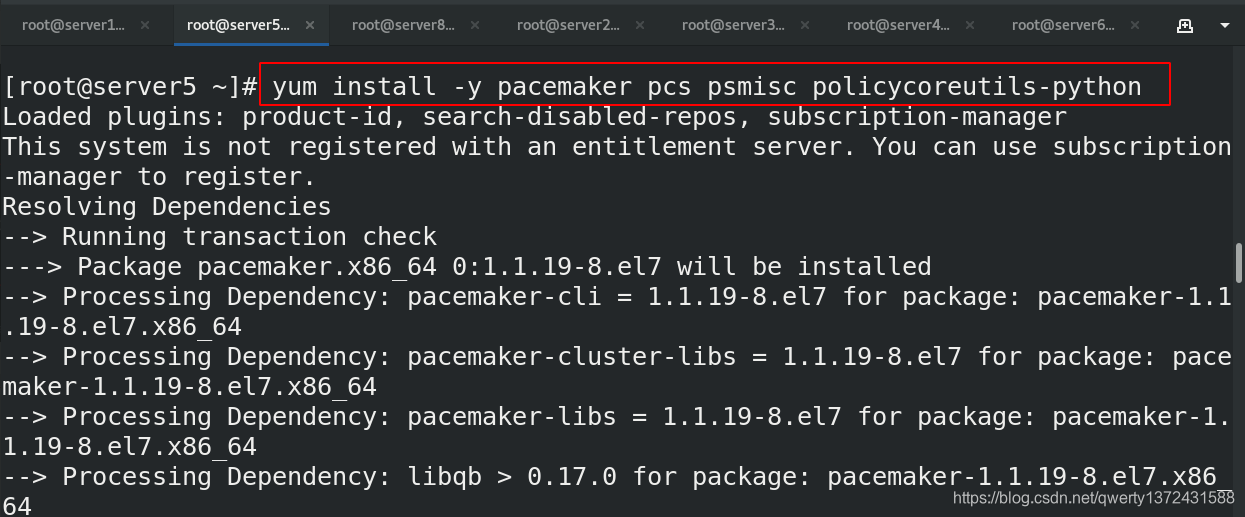

[root@server5 ~]# yum install -y pacemaker pcs psmisc policycoreutils-python ##安装pacemaker

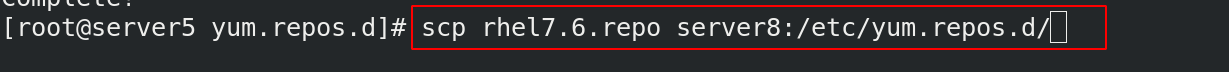

[root@server5 yum.repos.d]# scp rhel7.6.repo server8:/etc/yum.repos.d/ ##拷贝一份仓库文件

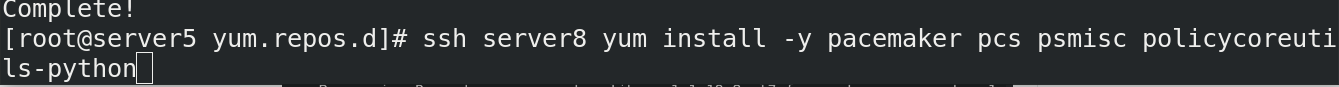

[root@server5 yum.repos.d]# ssh server8 yum install -y pacemaker pcs psmisc policycoreutils-python ##server8也配置同样的pacemaker

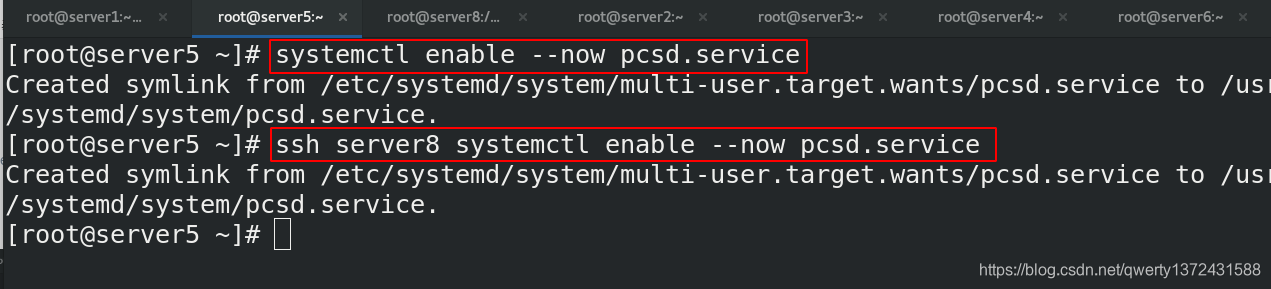

## 3.启动pcs服务

[root@server5 ~]# systemctl enable --now pcsd.service

[root@server5 ~]# ssh server8 systemctl enable --now pcsd.service ##启动server8 服务

5.3 配置pacemaker

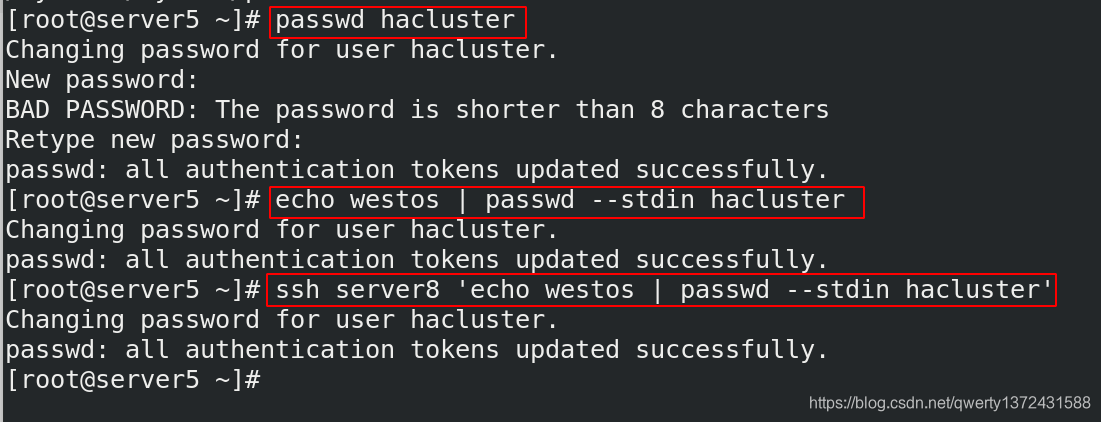

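## 1. Set a password for the hacluster user

[root@server5 ~]# passwd hacluster ##the hacluster user is created when the packages are installed; set its password (the same one on both nodes)

[root@server5 ~]# echo westos | passwd --stdin hacluster

[root@server5 ~]# ssh server8 'echo westos | passwd --stdin hacluster'

[root@server5 ~]# cat /etc/shadow ##confirm that a password is set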

[root@server8 ~]# cat /etc/shadow

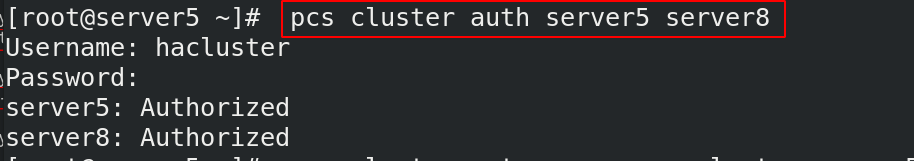

## 2. Authenticate the two nodes

[root@server5 ~]# pcs cluster auth server5 server8

Username: hacluster ##the user whose password was set in the previous step

Password:

server5: Authorized

server8: Authorized

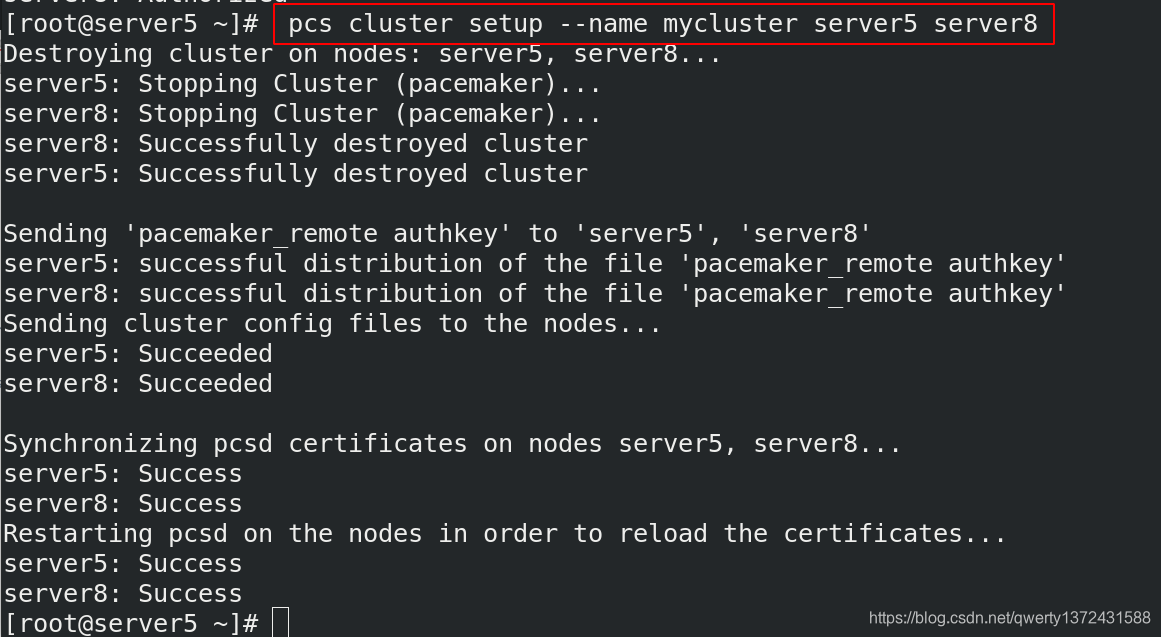

## 3. Create the cluster

[root@server5 ~]# pcs cluster setup --name mycluster server5 server8 ##the cluster is named mycluster

## 4. Start the cluster and enable it at boot

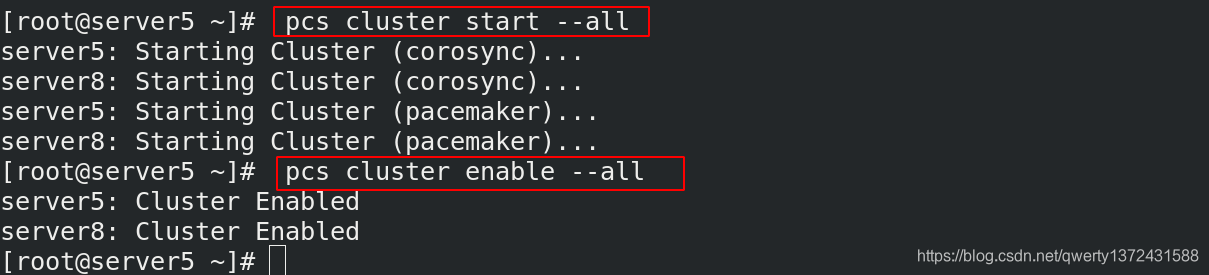

[root@server5 ~]# pcs cluster start --all

server5: Starting Cluster (corosync)... ##corosync provides the cluster heartbeat

server8: Starting Cluster (corosync)... ##pacemaker is the resource manager

server5: Starting Cluster (pacemaker)...

server8: Starting Cluster (pacemaker)...

[root@server5 ~]# pcs cluster enable --all

server5: Cluster Enabled

server8: Cluster Enabled

5.4 Verification (configure stonith)

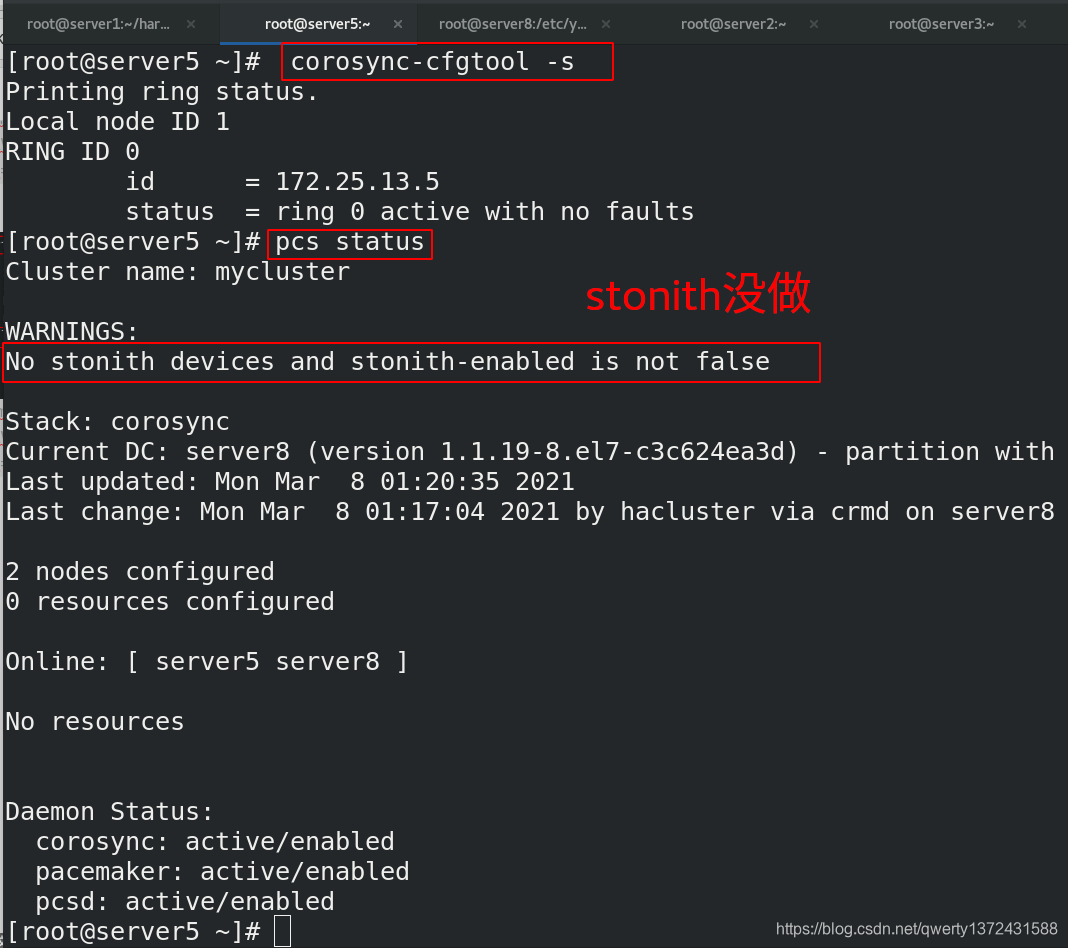

[root@server5 ~]# corosync-cfgtool -s

Printing ring status.

Local node ID 1

RING ID 0

id = 172.25.13.5

status = ring 0 active with no faults

[root@server5 ~]# pcs status ##check the status

Cluster name: mycluster

WARNINGS:

No stonith devices and stonith-enabled is not false

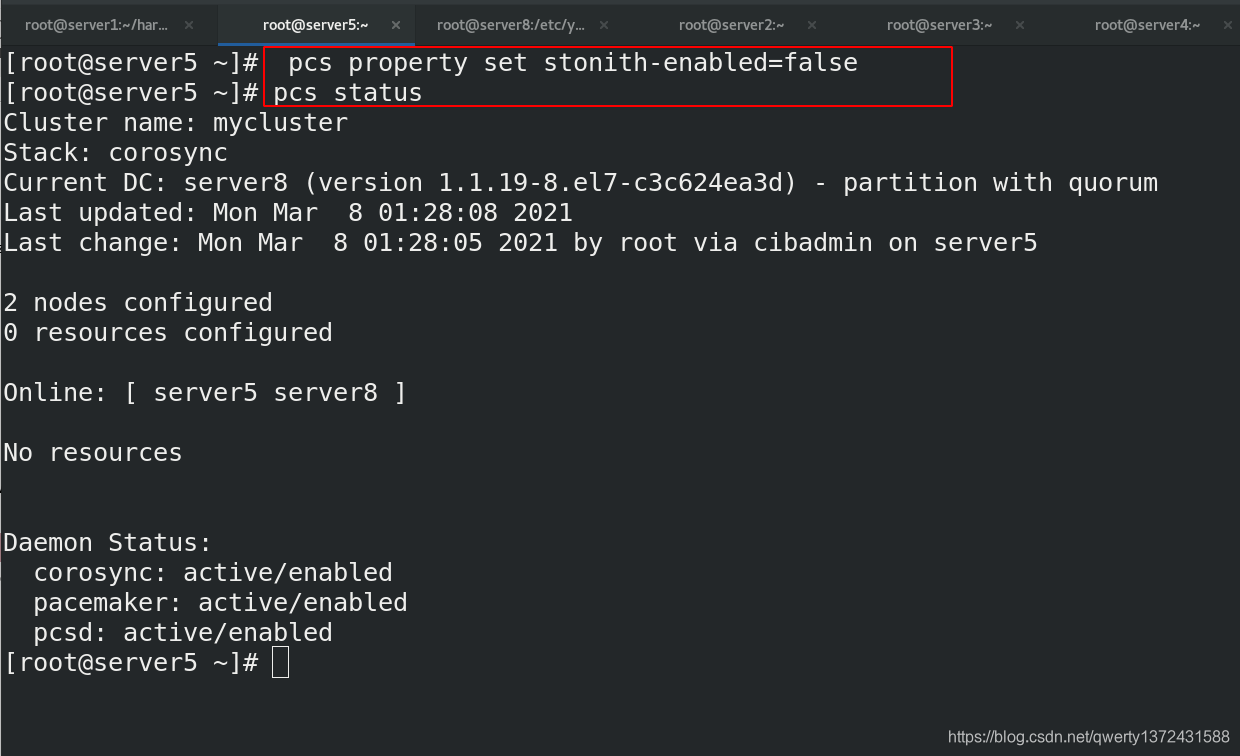

[root@server5 ~]# pcs property set stonith-enabled=false ##设置stonith为False

[root@server5 ~]# pcs status ##状态没有警告

[root@server5 ~]# crm_verify -LV ##验证成功

5.5 配置资源

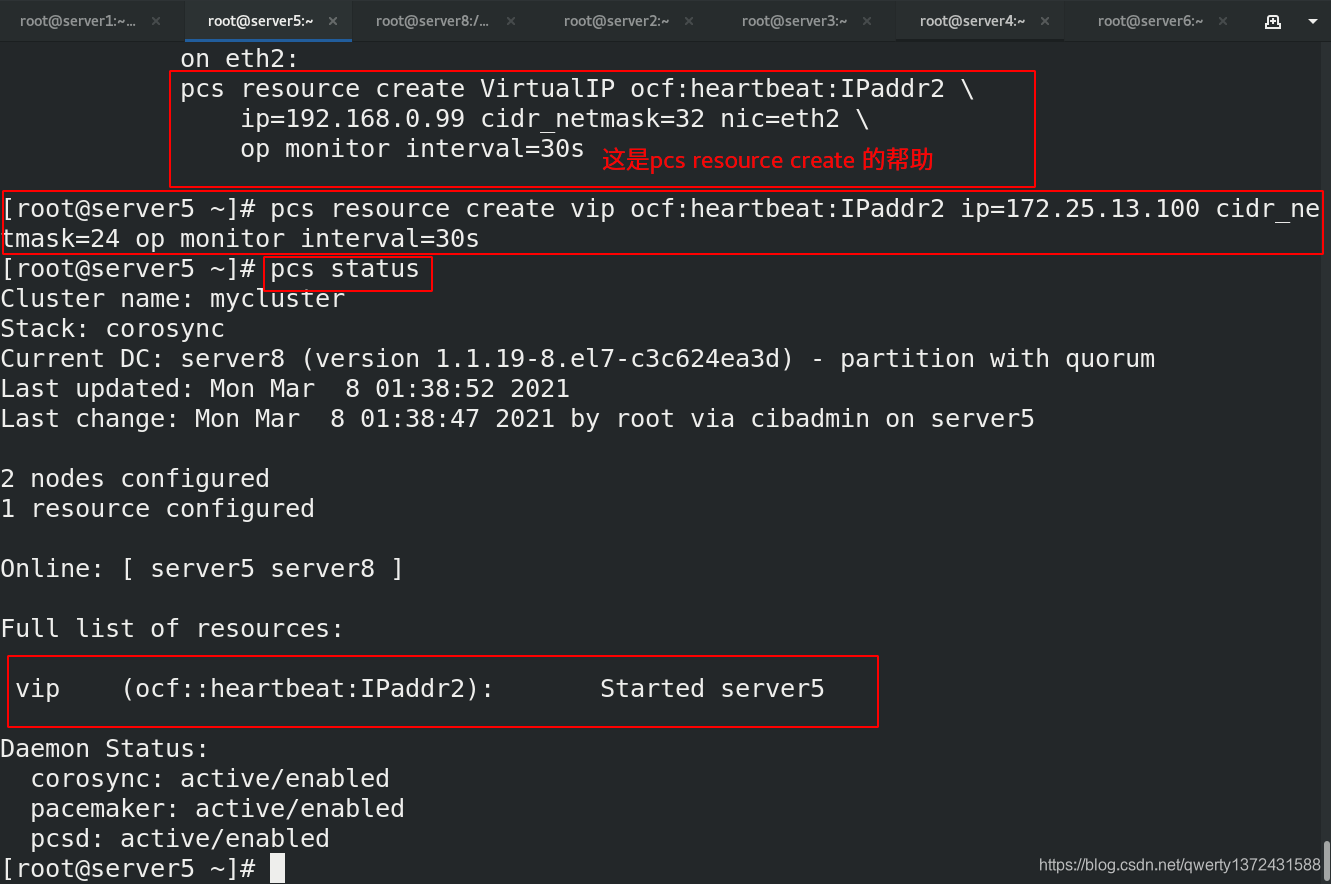

5.5.1 配置vip资源

## 1. 配置vip资源

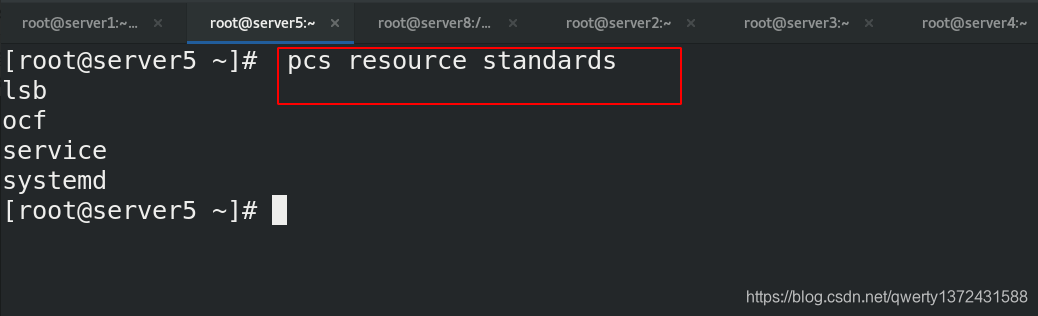

[root@server5 ~]# pcs resource standards ##list the available resource standards

lsb

ocf

service

systemd

[root@server5 ~]# pcs resource create --help ##show the help for creating resources

[root@server5 ~]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.13.100 cidr_netmask=24 op monitor interval=30s ##create the VIP resource (op monitor defines the monitoring operation)

[root@server5 ~]# pcs status ##check that the resource was created

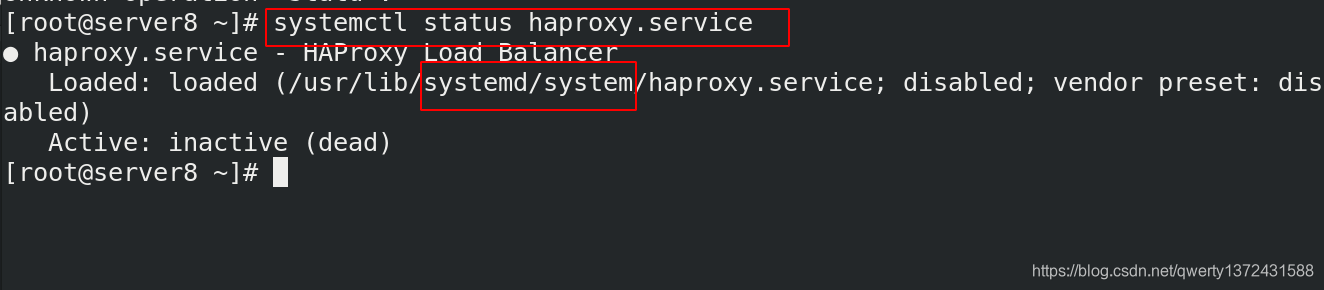

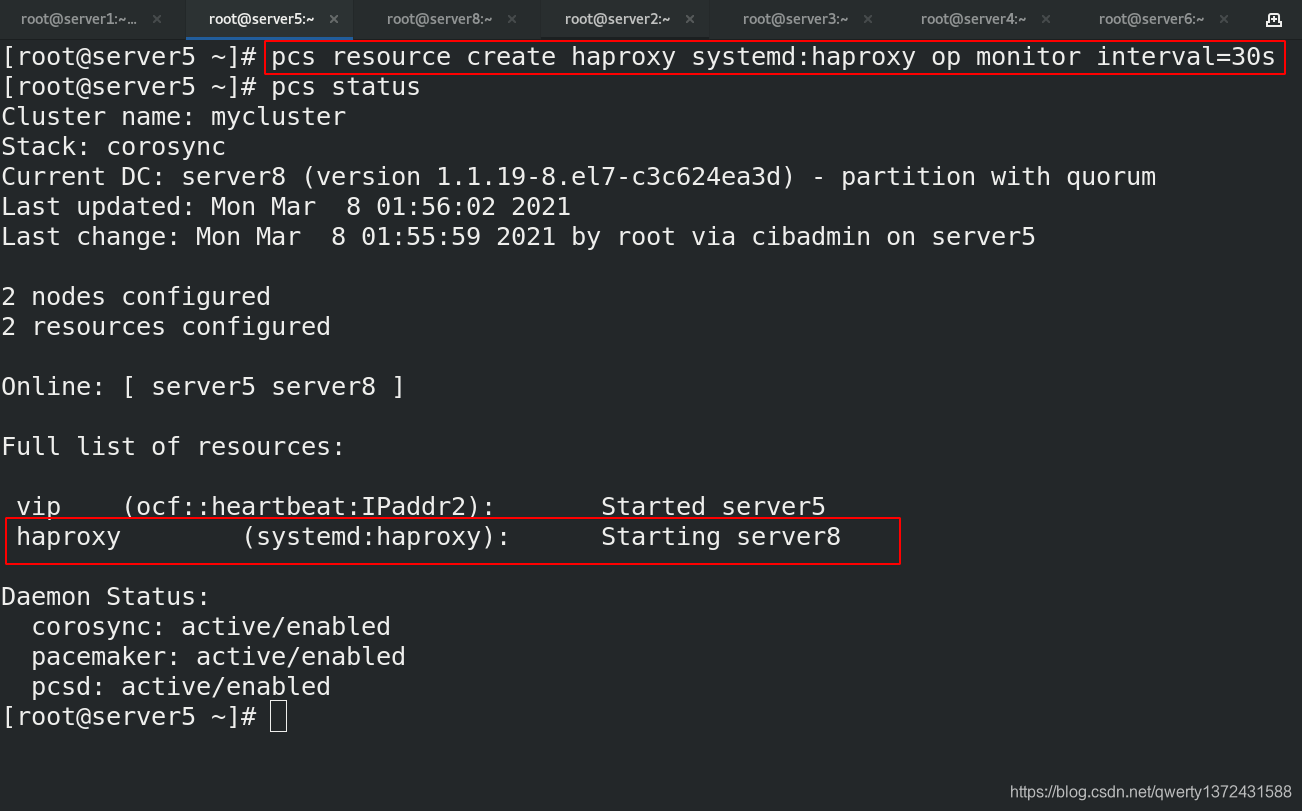

5.5.2 Configure the haproxy service resource

[root@server5 ~]# pcs resource create haproxy systemd:haproxy op monitor interval=30s ##add the haproxy service as a resource

[root@server5 ~]# pcs status

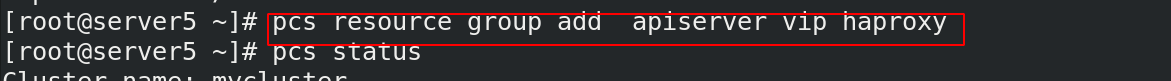

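5.5.3 Put the resources in one group

[root@server5 ~]# pcs resource group add apiserver vip haproxy ##add both resources to the group named apiserver; members start in the listed order, so the VIP comes up before haproxy

5.6 Test failover (active/standby)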

[root@server5 ~]# pcs node standby

[root@server5 ~]# pcs status
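After `pcs node standby`, the resources in the apiserver group are expected to move to the other node. One way to confirm this without reading the whole status output is to extract the node a resource runs on. The sketch below assumes that `pcs status` prints each resource as `<name> (<agent>): Started <node>`, which is how the pcs version shipped with RHEL 7 typically formats it; the helper `resource_node` is hypothetical.

```shell
# resource_node NAME: read `pcs status` output on stdin and print the node
# on which resource NAME is reported as Started (prints nothing otherwise).
resource_node() {
    # NF >= 3 guards against short or empty lines before $(NF-1) is read
    awk -v res="$1" 'NF >= 3 && $1 == res && $(NF-1) == "Started" {print $NF}'
}

# Usage (commented out because it needs the running cluster):
# pcs status | resource_node vip        # expected to name the surviving node
# pcs node unstandby                    # bring the standby node back afterwards
```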