Preparation

- 1. Kafka is written in Scala and Java, so it needs a JDK.

- 2. Kafka depends on ZooKeeper (ZK).

- 3. Prepare the directories

- mkdir -p /export/servers/

- mkdir -p /export/software/

- mkdir -p /export/data/

- mkdir -p /export/logs/

- 4. Download

- http://archive.apache.org/dist/kafka/

- https://www.apache.org/dyn/closer.cgi?path=/kafka/1.0.0/kafka_2.11-1.0.0.tgz

- Because Kafka is written in Scala, a separate Kafka build is published for each supported Scala version. This guide uses the Scala 2.11 build.
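
The directory and download steps above can be scripted. Below is a minimal bash sketch: `prepare_dirs` and `kafka_archive_url` are hypothetical helper names (not part of Kafka), and the URL pattern `<kafka version>/kafka_<scala version>-<kafka version>.tgz` is inferred from the archive link above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the four working directories used in this guide
# under a base path (defaults to /export).
prepare_dirs() {
  local base="${1:-/export}"
  mkdir -p "$base/servers" "$base/software" "$base/data" "$base/logs"
}

# Hypothetical helper: build the archive download URL.
# Kafka builds are named kafka_<scala version>-<kafka version>.tgz.
kafka_archive_url() {
  local scala_version="$1" kafka_version="$2"
  echo "http://archive.apache.org/dist/kafka/${kafka_version}/kafka_${scala_version}-${kafka_version}.tgz"
}
```

For example, `prepare_dirs && wget -P /export/software/ "$(kafka_archive_url 2.11 1.0.0)"` would create the directories and download the Scala 2.11 build used here into /export/software/.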

Upload, extract, and rename

- tar -zxvf kafka_2.11-1.0.0.tgz -C /export/servers/

- cd /export/servers/

- mv kafka_2.11-1.0.0 kafka
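
The extract-and-rename step can be wrapped in one function. This is a sketch that assumes the tarball's top-level folder has the same name as the archive minus `.tgz` (as with kafka_2.11-1.0.0.tgz); `install_kafka` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract a Kafka tarball and rename the versioned
# folder (e.g. kafka_2.11-1.0.0) to a fixed name such as "kafka".
# Usage: install_kafka <tgz> [dest dir] [new name]
install_kafka() {
  local tgz="$1" dest="${2:-/export/servers}" name="${3:-kafka}"
  # Assumes the top-level folder in the archive is the file name minus .tgz.
  local top
  top="$(basename "$tgz" .tgz)"
  # Refuse to overwrite an existing installation.
  if [ -e "$dest/$name" ]; then
    echo "install_kafka: $dest/$name already exists" >&2
    return 1
  fi
  mkdir -p "$dest"
  tar -zxf "$tgz" -C "$dest" && mv "$dest/$top" "$dest/$name"
}
```

`install_kafka /export/software/kafka_2.11-1.0.0.tgz` then does the same as the three commands above. Renaming to a fixed `kafka` folder keeps KAFKA_HOME unchanged if you later swap in another version.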

Set the environment variables and apply them immediately

- vim /etc/profile

- Add the following lines

- export KAFKA_HOME=/export/servers/kafka

- export PATH=$PATH:$KAFKA_HOME/bin

- source /etc/profile
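
Editing /etc/profile by hand makes it easy to add the same lines twice. A hedged alternative is to append them only when no KAFKA_HOME export is there yet; `add_kafka_env` is a hypothetical helper, and writing /etc/profile requires root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the KAFKA_HOME and PATH lines to a profile file
# only if it does not already export KAFKA_HOME, so re-running is harmless.
add_kafka_env() {
  local profile="${1:-/etc/profile}" kafka_home="${2:-/export/servers/kafka}"
  if ! grep -q '^export KAFKA_HOME=' "$profile" 2>/dev/null; then
    {
      echo "export KAFKA_HOME=$kafka_home"
      # Single quotes keep $PATH and $KAFKA_HOME literal in the file;
      # they are expanded later, when the profile is sourced.
      echo 'export PATH=$PATH:$KAFKA_HOME/bin'
    } >> "$profile"
  fi
}
```

Run `add_kafka_env`, then `source /etc/profile` as above.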

Distribute to the other machines

- On node01, run:

scp -r /export/servers/kafka node02:/export/servers

scp -r /export/servers/kafka node03:/export/servers

scp /etc/profile node02:/etc/profile

scp /etc/profile node03:/etc/profile

- Then, on node02 and node03, run:

source /etc/profile
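
With more nodes, the scp commands are easier to maintain as a loop. The sketch below assumes passwordless SSH from node01 to the other nodes; `run` and `distribute_kafka` are hypothetical helpers, and setting DRY_RUN=1 only prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: copy the Kafka directory and /etc/profile from this
# machine (node01) to every host passed as an argument.
distribute_kafka() {
  local host
  for host in "$@"; do
    run scp -r /export/servers/kafka "$host:/export/servers"
    run scp /etc/profile "$host:/etc/profile"
  done
}
```

`DRY_RUN=1 distribute_kafka node02 node03` previews the four scp commands above; drop DRY_RUN to actually copy, then run `source /etc/profile` on each target node.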

Edit the configuration file

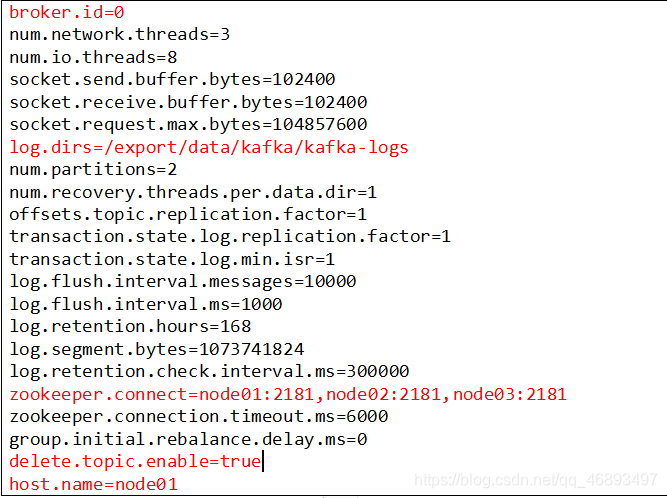

node01

vim /export/servers/kafka/config/server.properties

- Delete all existing content (the default file is mostly comments)

:%d

- Press i to enter insert mode and enter the following:

broker.id=0

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/export/data/kafka/kafka-logs

num.partitions=2

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.flush.interval.messages=10000

log.flush.interval.ms=1000

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=node01:2181,node02:2181,node03:2181

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

delete.topic.enable=true

host.name=node01

node02

- vim /export/servers/kafka/config/server.properties

- Delete all existing content (the default file is mostly comments)

- :%d

- Press i to enter insert mode and enter the following:

broker.id=1

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/export/data/kafka/kafka-logs

num.partitions=2

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.flush.interval.messages=10000

log.flush.interval.ms=1000

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=node01:2181,node02:2181,node03:2181

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

delete.topic.enable=true

host.name=node02

node03

- vim /export/servers/kafka/config/server.properties

- Delete all existing content (the default file is mostly comments)

- :%d

- Press i to enter insert mode and enter the following:

broker.id=2

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/export/data/kafka/kafka-logs

num.partitions=2

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.flush.interval.messages=10000

log.flush.interval.ms=1000

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=node01:2181,node02:2181,node03:2181

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

delete.topic.enable=true

host.name=node03
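
The three files above are identical except for broker.id and host.name, so they can be generated from one template instead of typed three times. A minimal sketch; `gen_server_properties` is a hypothetical helper, and every value is copied from the configs above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the server.properties used in this guide.
# Only broker.id and host.name differ between node01, node02 and node03.
# Usage: gen_server_properties <broker.id> <host.name> <output file>
gen_server_properties() {
  local id="$1" host="$2" out="$3"
  cat > "$out" <<EOF
broker.id=${id}
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/export/data/kafka/kafka-logs
num.partitions=2
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.flush.interval.messages=10000
log.flush.interval.ms=1000
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=node01:2181,node02:2181,node03:2181
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
delete.topic.enable=true
host.name=${host}
EOF
}
```

On node02, for example: `gen_server_properties 1 node02 /export/servers/kafka/config/server.properties`. Each broker in the cluster needs its own unique broker.id.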

Start the cluster

- 1. Important: start ZK on all three machines first, because Kafka depends on ZK.

/export/servers/zookeeper/bin/zkServer.sh start

- 2. Then start Kafka on all three machines

nohup /export/servers/kafka/bin/kafka-server-start.sh /export/servers/kafka/config/server.properties >/dev/null 2>&1 &

- 3. Run jps on all three machines; each one should show a Kafka process.

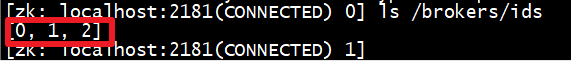

- 4. You can also verify in ZK; ls /brokers/ids should list broker IDs 0, 1 and 2:

/export/servers/zookeeper/bin/zkCli.sh

ls /brokers/ids

quit

- Done!

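- 5. To stop a broker, you can find its PID with jps and kill -9 it, although this skips Kafka's clean shutdown

- Alternatively, use /export/servers/kafka/bin/kafka-server-stop.sh, which stops the broker gracefully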
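
Starting, stopping and checking every node by hand gets tedious. The following sketch is meant to run from node01 and assumes passwordless SSH to all three nodes, bash as the remote shell, and JAVA_HOME exported in /etc/profile (a non-interactive SSH session does not read /etc/profile on its own). `cluster_ctl`, `count_broker_ids`, `run`, `NODES` and `DRY_RUN` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical cluster-control sketch, run from node01.
# Assumes passwordless SSH, bash on every node, and JAVA_HOME in /etc/profile.
NODES="${NODES:-node01 node02 node03}"   # hypothetical host list
ZK_BIN=/export/servers/zookeeper/bin
KAFKA_DIR=/export/servers/kafka

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: cluster_ctl start|stop
cluster_ctl() {
  local host
  case "$1" in
    start)
      # ZooKeeper first on every node, because Kafka depends on it.
      for host in $NODES; do
        run ssh "$host" "source /etc/profile; $ZK_BIN/zkServer.sh start"
      done
      # Then Kafka on every node, detached from the SSH session.
      for host in $NODES; do
        run ssh "$host" "source /etc/profile; nohup $KAFKA_DIR/bin/kafka-server-start.sh $KAFKA_DIR/config/server.properties </dev/null >/dev/null 2>&1 &"
      done
      ;;
    stop)
      # kafka-server-stop.sh lets each broker shut down cleanly.
      for host in $NODES; do
        run ssh "$host" "source /etc/profile; $KAFKA_DIR/bin/kafka-server-stop.sh"
      done
      ;;
    *)
      echo "usage: cluster_ctl start|stop" >&2
      return 1
      ;;
  esac
}

# Count the IDs in a line such as "[0, 1, 2]", the form in which
# `ls /brokers/ids` prints the registered brokers.
count_broker_ids() {
  local ids
  # Drop brackets and spaces: "[0, 1, 2]" -> "0,1,2".
  ids="$(printf '%s' "$1" | sed 's/[][ ]//g')"
  if [ -z "$ids" ]; then
    echo 0
    return
  fi
  printf '%s\n' "$ids" | tr ',' '\n' | wc -l | tr -d ' '
}
```

After `cluster_ctl start`, something like `count_broker_ids "$(zookeeper-shell.sh node01:2181 ls /brokers/ids | tail -n 1)"` should print 3 once all brokers have registered, assuming the last output line is the ID list. If a broker fails to start because ZK has not formed a quorum yet, check `zkServer.sh status` on each node and start Kafka again.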