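When training a deep learning model, the dataset is usually split into a training set and a validation set. Keras offers two ways to evaluate model performance during training:

- using an automatically split validation set

- using a manually split validation set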

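I. Automatic split

Keras can set aside part of the dataset as a validation set and evaluate the model on it at the end of every epoch. Concretely, when calling model.fit() to train the model, the validation_split argument specifies the fraction of the data to hold out for validation.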

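    import numpy as np
    from keras.models import Sequential
    from keras.layers import Dense
    from sklearn.datasets import load_iris

    iris = load_iris()
    iris_X = iris.data
    iris_y = iris.target

    # Iris is sorted by class, so shuffle X and y with one shared permutation
    # before validation_split takes the tail of the arrays.
    np.random.seed(0)
    order = np.random.permutation(len(iris_y))
    iris_X, iris_y = iris_X[order], iris_y[order]

    # Fix the random seed for reproducibility. This seeds NumPy only;
    # the backend (e.g. TensorFlow) may need to be seeded separately.
    np.random.seed(7)

    # Create the model: 4 input features, 3 output classes
    model = Sequential()
    model.add(Dense(8, input_dim=4, activation='relu'))
    model.add(Dense(6, activation='relu'))
    model.add(Dense(3, activation='softmax'))  # one unit per Iris class

    # Compile the model
    model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

    # Fit the model, holding out the last 20% of the samples for validation
    model.fit(iris_X, iris_y, validation_split=0.20, epochs=80, batch_size=10)

validation_split: a float between 0 and 1 that gives the fraction of the training data to hold out as the validation set. The held-out samples take no part in training; at the end of each epoch the model's metrics, such as loss and accuracy, are evaluated on them.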

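Note that the validation set is carved out before any shuffling: Keras takes the last samples of the arrays passed to fit(). If the data is ordered (Iris, for example, is sorted by class), shuffle it by hand before setting validation_split; otherwise the validation set may not be representative of all classes.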
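To see why the order matters, the sketch below mimics that tail slice with plain NumPy. It does not call Keras; `tail_fraction` is a hypothetical helper written for this illustration, and the labels are an assumed layout of 150 samples sorted into three blocks of 50, as in Iris.

```python
import numpy as np


def tail_fraction(y, validation_split):
    """Return the last `validation_split` share of y.

    Illustrative stand-in for how validation_split picks samples from
    the end of the arrays; this is not a Keras function.
    """
    split_at = int(len(y) * (1.0 - validation_split))
    return y[split_at:]


# Assumed layout: 150 labels sorted by class, 50 per class (as in Iris).
y_sorted = np.repeat([0, 1, 2], 50)

# Without shuffling, the held-out tail comes entirely from the last class block.
val_sorted = tail_fraction(y_sorted, 0.20)
print("classes in unshuffled tail:", np.unique(val_sorted))

# Shuffle with one permutation; the same index array would also be applied to X.
rng = np.random.default_rng(0)
order = rng.permutation(len(y_sorted))
val_shuffled = tail_fraction(y_sorted[order], 0.20)
print("class counts in shuffled tail:", np.bincount(val_shuffled, minlength=3))
```

Using a single index array for both X and y keeps features and labels aligned, which is less fragile than shuffling each array separately with the same seed.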

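II. Manual split

Keras also lets you supply the validation set yourself when training a model.

For example, split the dataset with train_test_split() from sklearn, then pass the held-out part to Keras through the validation_data argument of model.fit().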

    import numpy as np
    from keras.models import Sequential
    from keras.layers import Dense
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    iris = load_iris()
    iris_X = iris.data
    iris_y = iris.target

    # Hold out 20% of the samples as the validation set
    X_train, X_val, y_train, y_val = train_test_split(iris_X, iris_y, test_size=0.20, random_state=7)

    np.random.seed(7)

    # Create the model
    model = Sequential()
    model.add(Dense(8, input_dim=4, activation='relu'))
    model.add(Dense(6, activation='relu'))
    # softmax yields class probabilities, as the cross-entropy loss expects
    model.add(Dense(3, activation='softmax'))

    # Compile the model
    model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

    # Fit the model, validating on the held-out split after every epoch
    model.fit(X_train, y_train, validation_data=(X_val, y_val), epochs=100, batch_size=10)

III. K-fold cross validation

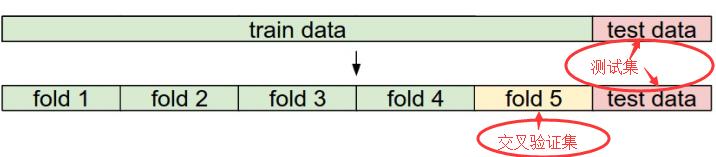

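The automatic and manual splits above boil down to three steps: train, validate, test. In both schemes the held-out data never takes part in training, so part of the data is wasted. To make fuller use of the data, K-fold cross validation can be used instead.

K-fold cross validation divides the dataset into k parts: k-1 parts are used for training and the remaining part for validation. This is repeated for k rounds, holding out a different part each time, which yields k evaluation results. Their average is taken as the overall performance of the algorithm (k is usually 5 or 10).

- Pros: gives a more robust estimate of how the model performs on unseen data.

- Cons: computationally expensive, since the model is trained k times. It may therefore be impractical when the dataset is large, the model is complex, or compute is limited, which is often the case when training deep learning models.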
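The partitioning step described above can be sketched with plain NumPy. This is a hand-rolled illustration, not the library's implementation; `kfold_indices` is a hypothetical helper, and the sample count of 150 is an assumption chosen to match Iris.

```python
import numpy as np


def kfold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) index arrays for k-fold cross validation.

    Hand-rolled for illustration only; sklearn.model_selection.KFold is
    the library counterpart.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    folds = np.array_split(order, k)  # k nearly equal parts
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx


splits = list(kfold_indices(n_samples=150, k=5))
for train_idx, val_idx in splits:
    print(len(train_idx), len(val_idx))
```

Every sample lands in the validation part of exactly one round and in the training part of the other k-1 rounds, which is why no data is left unused.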

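sklearn.model_selection provides KFold as well as variants such as RepeatedKFold, LeaveOneOut, LeavePOut, ShuffleSplit, StratifiedKFold, GroupKFold and TimeSeriesSplit.

The example below uses StratifiedKFold, which performs stratified sampling: each class keeps approximately the same proportion in every fold as it has in the original dataset.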
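Before the full example, a small check of that property may help. The sketch assumes toy labels with the same balance as Iris (3 classes, 50 samples each) and placeholder features, since only the labels drive the split.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Assumed toy labels: 3 classes with 50 samples each, the same balance as Iris.
y = np.repeat([0, 1, 2], 50)
X = np.zeros((len(y), 1))  # placeholder features; only the labels matter here

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=7)
fold_counts = [np.bincount(y[val_idx], minlength=3) for _, val_idx in skf.split(X, y)]
for counts in fold_counts:
    print(counts)  # class counts in the validation part of each fold
```

With balanced classes and 5 folds, each validation part should hold 10 samples of every class, mirroring the 1:1:1 ratio of the full label array.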

    import matplotlib.pyplot as plt
    import numpy as np
    from keras.models import Sequential
    from keras.layers import Dense
    from sklearn.datasets import load_iris
    from sklearn.linear_model import LinearRegression
    from sklearn.model_selection import StratifiedKFold


    def model1(train_X, train_y, test_X, test_y):
        """Network with two hidden layers; returns [loss, accuracy] on the held-out fold."""
        model = Sequential()
        model.add(Dense(8, input_dim=4, activation='relu'))
        model.add(Dense(6, activation='relu'))
        model.add(Dense(3, activation='softmax'))
        model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
        model.fit(train_X, train_y, epochs=200, batch_size=20, verbose=0)
        scores = model.evaluate(test_X, test_y, verbose=0)
        return scores


    def model2(train_X, train_y, test_X, test_y):
        """Single softmax layer; returns [loss, accuracy] on the held-out fold."""
        model = Sequential()
        model.add(Dense(3, input_dim=4, activation='softmax'))
        model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
        model.fit(train_X, train_y, epochs=200, batch_size=20, verbose=0)
        scores = model.evaluate(test_X, test_y, verbose=0)
        return scores


    def model3(train_X, train_y, test_X, test_y):
        """Linear regression on the class index; score() returns R^2, not accuracy."""
        model = LinearRegression()
        model.fit(train_X, train_y)
        score = model.score(test_X, test_y)
        return score


    if __name__ == "__main__":
        iris = load_iris()
        iris_X = iris.data
        iris_y = iris.target

        method1_acc = []
        method2_acc = []
        method3_r2 = []

        # Fix the random seed for reproducibility
        seed = 7
        np.random.seed(seed)

        # Define 5-fold stratified cross validation
        n_splits = 5
        kfold = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
        for train, test in kfold.split(iris_X, iris_y):
            score1 = model1(iris_X[train], iris_y[train], iris_X[test], iris_y[test])
            score2 = model2(iris_X[train], iris_y[train], iris_X[test], iris_y[test])
            score3 = model3(iris_X[train], iris_y[train], iris_X[test], iris_y[test])
            method1_acc.append(score1[1])  # index 1 is accuracy, index 0 is loss
            method2_acc.append(score2[1])
            method3_r2.append(score3)

        # Plot the score of each method on each fold
        folds = list(range(1, n_splits + 1))
        plt.title('Result Analysis')
        plt.plot(folds, method1_acc, color='green', label='method1 accuracy')
        plt.plot(folds, method2_acc, color='skyblue', label='method2 accuracy')
        plt.plot(folds, method3_r2, color='blue', label='method3 R^2')
        plt.legend()  # show the legend
        plt.xlabel('fold')
        plt.ylabel('score')
        plt.show()
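The script plots the per-fold scores, while the description of K-fold above calls for averaging them. A minimal sketch of that last step follows; `summarize` is a hypothetical helper, and the numbers are placeholders for illustration only, not results from the script.

```python
import numpy as np


def summarize(scores):
    """Return (mean, standard deviation) of a list of per-fold scores."""
    scores = np.asarray(scores, dtype=float)
    return scores.mean(), scores.std()


# Placeholder numbers for illustration only, not results from the script above.
example_scores = [0.90, 1.00, 0.80]
mean, std = summarize(example_scores)
print(f"{mean:.3f} +/- {std:.3f}")
```

In the script, calling `summarize(method1_acc)` after the loop would give one number per method to compare; the standard deviation indicates how much the score varies from fold to fold.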

Code:

https://github.com/yscoder-github/zhihu/blob/master/share_code/k_fold_demo.py