1.3 label_map.pbtxt文件

xx_label_map.pbtxt文件中的内容如下:

item {id: 1name: 'Abyssinian'}item {id: 2name: 'american_bulldog'}item {id: 3name: 'american_pit_bull_terrier'}

1.4 创建tf_record文件

先创建一个create_xx_tf_record.py文件,单独用来处理训练数据。可以直接从object_detection工程下的create_pacal_tf_record.py(如果是每个图片只有一个分类,可以使用create_pet_tf_record.py)复制而来。

修改起始参数配置:

- data_dir: 数据目录,包含了图片和标注的目录

- output_dir:输出目录,图片转换为tf_record之后存储的位置

- label_map_path:上面提到的xx_label_map.pbtxt

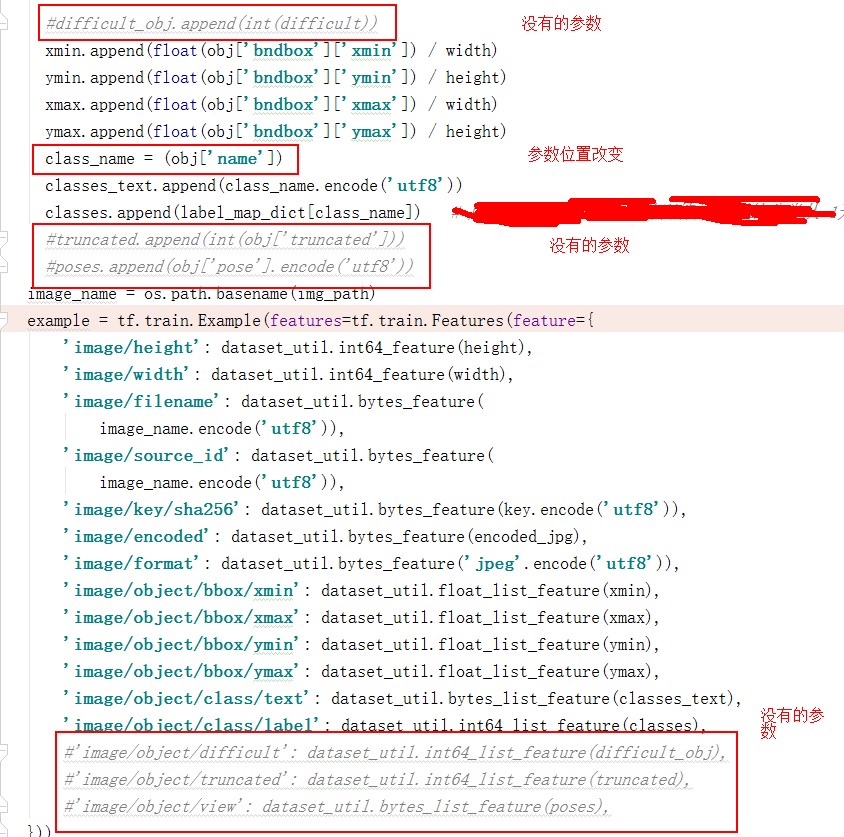

修改dict_to_tf_example

参考你的标准xml文件,有些地方需要修改。

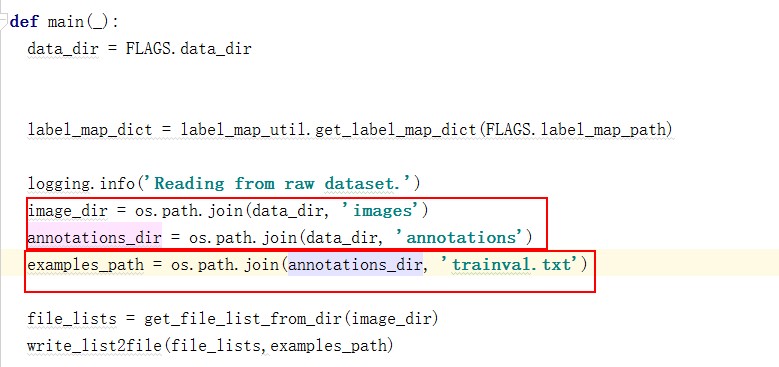

修改main

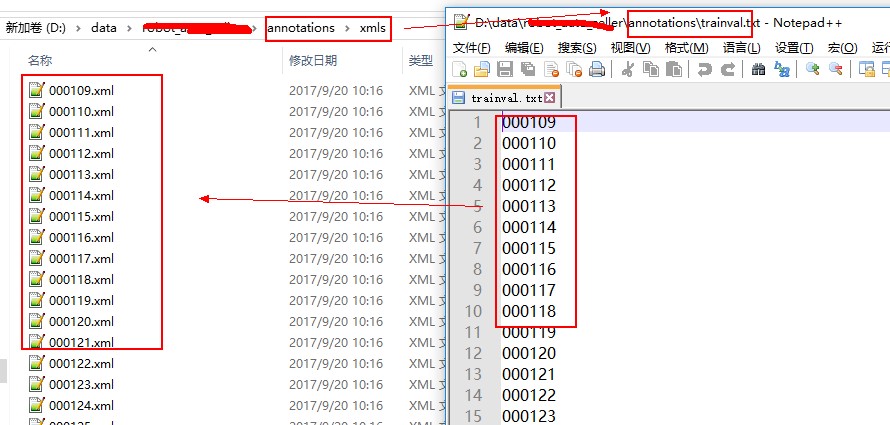

确保你的标注文件,图片目录对应的目录。标注文件目录下是否存在 trainval.txt文件是否存在,这个需要自己生成。我生成的列表(注意:没有带后缀)为:

执行完之后会在对应目录下生成 tf_record文件。

我的文件:

# Copyright 2017 The TensorFlow Authors. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ==============================================================================

r"""Convert raw PASCAL dataset to TFRecord for object_detection.

Example usage:

python object_detection/dataset_tools/create_pascal_tf_record.py \

--data_dir=/home/user/VOCdevkit \

--year=VOC2012 \

--output_path=/home/user/pascal.record

"""

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import hashlib

import io

import logging

import os

from lxml import etree

import PIL.Image

import tensorflow as tf

from object_detection.utils import dataset_util

from object_detection.utils import label_map_util

flags = tf.app.flags

flags.DEFINE_string('data_dir', '/media/chenyu/6046436546433AD4', 'Root directory to raw PASCAL VOC dataset.')

flags.DEFINE_string('set', 'train', 'Convert training set, validation set or '

'merged set.')

flags.DEFINE_string('annotations_dir', 'Annotations',

'(Relative) path to annotations directory.')

flags.DEFINE_string('year', 'VOC2007', 'Desired challenge year.')

flags.DEFINE_string('output_path', '/home/chenyu/Documents/models/research/object_detection/date/train/pascal_train.record', 'Path to output TFRecord')

flags.DEFINE_string('label_map_path', '/home/chenyu/Documents/models/research/object_detection/date/pascal_label_map.pbtxt',

'Path to label map proto')

flags.DEFINE_boolean('ignore_difficult_instances', False, 'Whether to ignore '

'difficult instances')

FLAGS = flags.FLAGS

SETS = ['train', 'val', 'trainval', 'test']

YEARS = ['VOC2007', 'VOC2012', 'merged']

def dict_to_tf_example(data,

dataset_directory,

label_map_dict,

ignore_difficult_instances=False,

image_subdirectory='JPEGImages'):

"""Convert XML derived dict to tf.Example proto.

Notice that this function normalizes the bounding box coordinates provided

by the raw data.

Args:

data: dict holding PASCAL XML fields for a single image (obtained by

running dataset_util.recursive_parse_xml_to_dict)

dataset_directory: Path to root directory holding PASCAL dataset

label_map_dict: A map from string label names to integers ids.

ignore_difficult_instances: Whether to skip difficult instances in the

dataset (default: False).

image_subdirectory: String specifying subdirectory within the

PASCAL dataset directory holding the actual image data.

Returns:

example: The converted tf.Example.

Raises:

ValueError: if the image pointed to by data['filename'] is not a valid JPEG

"""#data['folder'],

img_path = os.path.join('VOC2007',image_subdirectory, data['filename'][:-4]+'.jpg') #此处修改

full_path = os.path.join(dataset_directory, img_path)

with tf.gfile.GFile(full_path, 'rb') as fid:

encoded_jpg = fid.read()

encoded_jpg_io = io.BytesIO(encoded_jpg)

image = PIL.Image.open(encoded_jpg_io)

if image.format != 'JPEG':

raise ValueError('Image format not JPEG')

key = hashlib.sha256(encoded_jpg).hexdigest()

width = int(data['size']['width'])

height = int(data['size']['height'])

xmin = []

ymin = []

xmax = []

ymax = []

classes = []

classes_text = []

# truncated = []

#poses = []

difficult_obj = []

if 'object' in data:

for obj in data['object']:

difficult = bool(int(obj['difficult']))

if ignore_difficult_instances and difficult:

continue

difficult_obj.append(int(difficult))

xmin.append(float(obj['bndbox']['xmin']) / width)

ymin.append(float(obj['bndbox']['ymin']) / height)

xmax.append(float(obj['bndbox']['xmax']) / width)

ymax.append(float(obj['bndbox']['ymax']) / height)

classes_text.append(obj['name'].encode('utf8'))

classes.append(label_map_dict[obj['name']])

# truncated.append(int(obj['truncated'])) #此处修改

#poses.append(obj['pose'].encode('utf8')) #此处修改

example = tf.train.Example(features=tf.train.Features(feature={

'image/height': dataset_util.int64_feature(height),

'image/width': dataset_util.int64_feature(width),

'image/filename': dataset_util.bytes_feature(

data['filename'].encode('utf8')),

'image/source_id': dataset_util.bytes_feature(

data['filename'].encode('utf8')),

'image/key/sha256': dataset_util.bytes_feature(key.encode('utf8')),

'image/encoded': dataset_util.bytes_feature(encoded_jpg),

'image/format': dataset_util.bytes_feature('jpeg'.encode('utf8')),

'image/object/bbox/xmin': dataset_util.float_list_feature(xmin),

'image/object/bbox/xmax': dataset_util.float_list_feature(xmax),

'image/object/bbox/ymin': dataset_util.float_list_feature(ymin),

'image/object/bbox/ymax': dataset_util.float_list_feature(ymax),

'image/object/class/text': dataset_util.bytes_list_feature(classes_text),

'image/object/class/label': dataset_util.int64_list_feature(classes),

'image/object/difficult': dataset_util.int64_list_feature(difficult_obj),

# 'image/object/truncated': dataset_util.int64_list_feature(truncated), #此处修改

# 'image/object/view': dataset_util.bytes_list_feature(poses), #此处修改

}))

return example

def main(_):

if FLAGS.set not in SETS:

raise ValueError('set must be in : {}'.format(SETS))

if FLAGS.year not in YEARS:

raise ValueError('year must be in : {}'.format(YEARS))

data_dir = FLAGS.data_dir

years = ['VOC2007', 'VOC2012']

if FLAGS.year != 'merged':

years = [FLAGS.year]

writer = tf.python_io.TFRecordWriter(FLAGS.output_path)

label_map_dict = label_map_util.get_label_map_dict(FLAGS.label_map_path)

for year in years:

logging.info('Reading from PASCAL %s dataset.', year)

examples_path = os.path.join(data_dir,year, 'ImageSets', 'Main',

FLAGS.set + '.txt') #此处修改

annotations_dir = os.path.join(data_dir, year, FLAGS.annotations_dir)

examples_list = dataset_util.read_examples_list(examples_path)

for idx, example in enumerate(examples_list):

if idx % 100 == 0:

logging.info('On image %d of %d', idx, len(examples_list))

path = os.path.join(annotations_dir, example + '.xml')

print(path)

with tf.gfile.GFile(path, 'r') as fid:

xml_str = fid.read()

xml = etree.fromstring(xml_str.encode('utf-8')) #此处修改

# xml = etree.fromstring(xml_str)

data = dataset_util.recursive_parse_xml_to_dict(xml)['annotation']

tf_example = dict_to_tf_example(data, FLAGS.data_dir, label_map_dict,

FLAGS.ignore_difficult_instances)

writer.write(tf_example.SerializeToString())

writer.close()

if __name__ == '__main__':

tf.app.run()