1. Integrating the Redis Template with Spring

1.1 pom.xml: Maven dependencies that an SSM (Spring, Spring MVC, MyBatis) project can include directly

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

</plugins>

</build>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<spring.version>4.1.7.RELEASE</spring.version>

<jackson.version>2.5.0</jackson.version>

</properties>

<dependencies>

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>2.9.0</version>

</dependency>

<dependency>

<groupId>org.springframework.data</groupId>

<artifactId>spring-data-redis</artifactId>

<version>1.6.0.RELEASE</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.3.2</version>

</dependency>

<dependency>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

<version>1.2</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

<scope>test</scope>

</dependency>

<!-- spring -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-core</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-beans</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-context</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-tx</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-web</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-webmvc</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-jdbc</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-test</artifactId>

<version>${spring.version}</version>

<scope>test</scope>

</dependency>

<!-- MyBatis -->

<!-- MyBatis-Spring integration -->

<dependency>

<groupId>org.mybatis</groupId>

<artifactId>mybatis-spring</artifactId>

<version>1.3.2</version>

</dependency>

<!-- MySQL driver -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.6</version>

</dependency>

<!-- data source -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.0.12</version>

</dependency>

<dependency>

<groupId>org.aspectj</groupId>

<artifactId>aspectjweaver</artifactId>

<version>1.8.4</version>

</dependency>

<!-- log4j -->

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

</dependency>

<!-- servlet -->

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>servlet-api</artifactId>

<version>3.0-alpha-1</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>jstl</artifactId>

<version>1.2</version>

</dependency>

<!-- json -->

<dependency>

<groupId>org.codehaus.jackson</groupId>

<artifactId>jackson-mapper-asl</artifactId>

<version>1.9.13</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.3</version>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-annotations</artifactId>

<version>${jackson.version}</version>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-core</artifactId>

<version>${jackson.version}</version>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

<version>${jackson.version}</version>

</dependency>

<!-- file upload -->

<dependency>

<groupId>commons-io</groupId>

<artifactId>commons-io</artifactId>

<version>2.4</version>

</dependency>

<dependency>

<groupId>commons-fileupload</groupId>

<artifactId>commons-fileupload</artifactId>

<version>1.2.2</version>

</dependency>

<dependency>

<groupId>org.mybatis</groupId>

<artifactId>mybatis</artifactId>

<version>3.4.5</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>1.7.21</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.21</version>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>22.0</version>

</dependency>

</dependencies>

1.2 application.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:p="http://www.springframework.org/schema/p"

xmlns:context="http://www.springframework.org/schema/context"

xmlns:cache="http://www.springframework.org/schema/cache"

xmlns:tx="http://www.springframework.org/schema/tx"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-4.1.xsd

http://www.springframework.org/schema/context

http://www.springframework.org/schema/context/spring-context-4.1.xsd

http://www.springframework.org/schema/cache

http://www.springframework.org/schema/cache/spring-cache-4.1.xsd

http://www.springframework.org/schema/tx

http://www.springframework.org/schema/tx/spring-tx.xsd">

<context:component-scan base-package="cn.tx"></context:component-scan>

<!-- Redis configuration -->

<bean id="poolConfig" class="redis.clients.jedis.JedisPoolConfig">

<property name="maxIdle" value="300" />

<property name="maxWaitMillis" value="300" />

<property name="testOnBorrow" value="true" />

</bean>

<bean id="JedisConnectionFactory"

class="org.springframework.data.redis.connection.jedis.JedisConnectionFactory"

p:host-name="192.168.0.209"

p:port="6379"

p:pool-config-ref="poolConfig"/>

<bean id="redisTemplate" class="org.springframework.data.redis.core.RedisTemplate">

<property name="connectionFactory" ref="JedisConnectionFactory" />

</bean>

</beans>

1.3 Utility class

package cn.tx.service;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.data.redis.core.*;

import org.springframework.stereotype.Service;

import java.util.*;

import java.util.concurrent.TimeUnit;

@Service

public class RedisCacheUtil<T>{

@Autowired

@Qualifier("redisTemplate")

public RedisTemplate redisTemplate;

/**
 * Cache a basic object such as an Integer, a String, or an entity.
 * @param key   cache key
 * @param value value to cache
 * @return the ValueOperations handle used for the write
 */

public <T> ValueOperations<String,T> setCacheObject(String key, T value)

{

ValueOperations<String,T> operation = redisTemplate.opsForValue();

operation.set(key,value);

return operation;

}

public <T> ValueOperations<String,T> setCacheObject(String key, T value, long time)

{

ValueOperations<String,T> operation = redisTemplate.opsForValue();

operation.set(key, value, time, TimeUnit.SECONDS);

return operation;

}

/**
 * Read a cached basic object.
 * @param key cache key
 * @return the value stored under the key
 */

public <T> T getCacheObject(String key/*,ValueOperations<String,T> operation*/)

{

ValueOperations<String,T> operation = redisTemplate.opsForValue();

return operation.get(key);

}

/**
 * Cache a List.
 * @param key      cache key
 * @param dataList list to cache
 * @return the ListOperations handle used for the write
 */

public <T> ListOperations<String, T> setCacheList(String key, List<T> dataList)

{

ListOperations listOperation = redisTemplate.opsForList();

if(null != dataList)

{

int size = dataList.size();

for(int i = 0; i < size ; i ++)

{

listOperation.rightPush(key,dataList.get(i));

}

}

return listOperation;

}

/**
 * Read a cached List.
 * @param key cache key
 * @return the list stored under the key
 */
public <T> List<T> getCacheList(String key)
{
    ListOperations<String,T> listOperation = redisTemplate.opsForList();
    // range(key, 0, -1) reads the whole list without removing anything;
    // popping each element with leftPop would empty the list on every read.
    List<T> dataList = listOperation.range(key, 0, -1);
    return dataList != null ? dataList : new ArrayList<T>();
}

/**
 * Cache a Set.
 * @param key     cache key
 * @param dataSet set to cache
 * @return the BoundSetOperations handle used for the write
 */

public <T> BoundSetOperations<String,T> setCacheSet(String key, Set<T> dataSet)

{

BoundSetOperations<String,T> setOperation = redisTemplate.boundSetOps(key);

/*T[] t = (T[]) dataSet.toArray();

setOperation.add(t);*/

Iterator<T> it = dataSet.iterator();

while(it.hasNext())

{

setOperation.add(it.next());

}

return setOperation;

}

/**
 * Read a cached Set.
 * @param key cache key
 * @return the set stored under the key
 */
public Set<T> getCacheSet(String key)
{
    BoundSetOperations<String,T> operation = redisTemplate.boundSetOps(key);
    // members() returns every element and leaves the set in place;
    // pop() would remove a random element on each call.
    Set<T> dataSet = operation.members();
    return dataSet != null ? dataSet : new HashSet<T>();
}

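/**
 * Cache a Map with String keys as a Redis hash.
 * @param key     cache key
 * @param dataMap map to cache
 * @return the HashOperations handle used for the write
 */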

public <T> HashOperations<String,String,T> setCacheMap(String key,Map<String,T> dataMap)

{

HashOperations hashOperations = redisTemplate.opsForHash();

if(null != dataMap)

{

for (Map.Entry<String, T> entry : dataMap.entrySet()) {

/*System.out.println("Key = " + entry.getKey() + ", Value = " + entry.getValue()); */

hashOperations.put(key,entry.getKey(),entry.getValue());

}

}

return hashOperations;

}

/**
 * Read a cached Map with String keys.
 * @param key cache key
 * @return all entries of the hash stored under the key
 */

public <T> Map<String,T> getCacheMap(String key/*,HashOperations<String,String,T> hashOperation*/)

{

Map<String, T> map = redisTemplate.opsForHash().entries(key);

/*Map<String, T> map = hashOperation.entries(key);*/

return map;

}

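/**
 * Cache a Map with Integer keys as a Redis hash.
 * @param key     cache key
 * @param dataMap map to cache
 * @return the HashOperations handle used for the write
 */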

public <T> HashOperations<String,Integer,T> setCacheIntegerMap(String key, Map<Integer,T> dataMap)

{

HashOperations hashOperations = redisTemplate.opsForHash();

if(null != dataMap)

{

for (Map.Entry<Integer, T> entry : dataMap.entrySet()) {

/*System.out.println("Key = " + entry.getKey() + ", Value = " + entry.getValue()); */

hashOperations.put(key,entry.getKey(),entry.getValue());

}

}

return hashOperations;

}

/**
 * Read a cached Map with Integer keys.
 * @param key cache key
 * @return all entries of the hash stored under the key
 */

public <T> Map<Integer,T> getCacheIntegerMap(String key/*,HashOperations<String,String,T> hashOperation*/)

{

Map<Integer, T> map = redisTemplate.opsForHash().entries(key);

/*Map<String, T> map = hashOperation.entries(key);*/

return map;

}

}

1.4 Model

package cn.tx.model;

import java.io.Serializable;

public class Account implements Serializable {

private int id;

private String name;

public Account() {

}

public Account(int id, String name) {

this.id = id;

this.name = name;

}

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

@Override

public String toString() {

return "Account{" +

"id=" + id +

", name='" + name + '\'' +

'}';

}

}
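Account implements Serializable because a RedisTemplate with no serializer configured, as in the Spring configuration in 1.2, falls back to JDK serialization for keys and values. The sketch below reproduces that round trip with plain JDK streams only. DemoAccount and JdkSerializationDemo are hypothetical names introduced for illustration; they are not part of the project.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

// Hypothetical stand-in for Account, kept local so the sketch is self-contained.
class DemoAccount implements Serializable {
    private static final long serialVersionUID = 1L;
    final int id;
    final String name;

    DemoAccount(int id, String name) {
        this.id = id;
        this.name = name;
    }
}

class JdkSerializationDemo {
    // Turn an object into bytes, as a JDK-based serializer does before writing to Redis.
    static byte[] toBytes(Serializable value) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Rebuild the object from the stored bytes.
    static Object fromBytes(byte[] bytes) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        byte[] stored = toBytes(new DemoAccount(1, "liangge"));
        DemoAccount restored = (DemoAccount) fromBytes(stored);
        System.out.println(restored.id + " " + restored.name);
    }
}
```

A class that does not implement Serializable would fail in writeObject, which is the same failure a JDK-serializing template would report when asked to cache it.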

1.5 Test class

package cn.tx.service;

import cn.tx.model.Account;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.test.context.ContextConfiguration;

import org.springframework.test.context.junit4.SpringJUnit4ClassRunner;

import java.util.ArrayList;

import java.util.List;

@RunWith(SpringJUnit4ClassRunner.class)

@ContextConfiguration(locations = "classpath:application.xml")

public class AccountServiceTest {

@Autowired

RedisCacheUtil redisCacheUtil;

@Test

public void selectAll(){

List<Account> accounts = new ArrayList<>();

for (int i = 0; i < 10; i++) {

Account a = new Account();

a.setId(i);

a.setName("liangge");

accounts.add(a);

}

redisCacheUtil.setCacheObject("key1", accounts);

List<Account> key1 = (List<Account>) redisCacheUtil.getCacheObject("key1");

System.out.println(key1);

}

}

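2. Persistence

Redis is fast because it keeps all of its data in memory. To keep that data across a restart, it has to be written from memory to disk; this process is called persistence.

Redis supports two persistence modes, RDB and AOF. Either can be used on its own, or the two can be combined.

2.1 RDB persistence

RDB persistence works by snapshotting: when certain conditions are met, Redis automatically takes a snapshot of the in-memory data and writes it to disk.

Caveat:

With RDB, if Redis exits abnormally, every change made after the last snapshot is lost. Developers therefore need to combine automatic snapshot conditions to suit the application, so that the potential data loss stays within an acceptable range. If the data is too important to tolerate any loss, consider AOF persistence instead.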
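The automatic snapshot conditions are written in redis.conf as `save <seconds> <changes>` lines, and a snapshot is taken as soon as any one of them is satisfied. The following is a minimal Java sketch of that check, not Redis's actual implementation. The class names and the three sample rules (900/1, 300/10, 60/10000) are illustrative assumptions.

```java
import java.util.Arrays;
import java.util.List;

// One "save <seconds> <changes>" rule.
class SnapshotRule {
    final long seconds;
    final long changes;

    SnapshotRule(long seconds, long changes) {
        this.seconds = seconds;
        this.changes = changes;
    }

    // A rule fires when enough time has passed AND enough keys have changed.
    boolean isSatisfied(long secondsSinceLastSave, long changesSinceLastSave) {
        return secondsSinceLastSave > seconds && changesSinceLastSave >= changes;
    }
}

class SnapshotPolicySketch {
    // Sample rules, assumed for illustration only.
    static final List<SnapshotRule> SAMPLE_RULES = Arrays.asList(
            new SnapshotRule(900, 1),
            new SnapshotRule(300, 10),
            new SnapshotRule(60, 10000));

    // A snapshot is due when at least one rule is satisfied.
    static boolean snapshotDue(List<SnapshotRule> rules,
                               long secondsSinceLastSave,
                               long changesSinceLastSave) {
        for (SnapshotRule rule : rules) {
            if (rule.isSatisfied(secondsSinceLastSave, changesSinceLastSave)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(snapshotDue(SAMPLE_RULES, 61, 10000));
        System.out.println(snapshotDue(SAMPLE_RULES, 61, 9999));
    }
}
```

Tighter rules shrink the window of changes that can be lost, at the cost of more frequent snapshots.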

2.2 AOF persistence

AOF (append only file) persistence is disabled by default. Enable it with the appendonly parameter:

appendonly yes

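Once AOF is enabled, every command that modifies data in Redis is appended to the AOF file on disk. The AOF file is stored in the same directory as the RDB file, which is set by the dir parameter. The default file name is appendonly.aof and can be changed with the appendfilename parameter, for example: appendfilename appendonly.aof.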
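The append-then-replay idea behind AOF can be sketched in a few lines of Java. This is a toy model under stated assumptions: it logs one plain-text command per line, whereas the real AOF file stores commands in the Redis protocol format, and the class AofSketch and its methods are hypothetical names. Keys containing spaces are not supported by this simplified format.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.HashMap;
import java.util.Map;

class AofSketch {
    private final Path logFile;
    private final Map<String, String> data = new HashMap<>();

    // Opening the store replays any existing log, like Redis loading the AOF on startup.
    AofSketch(Path logFile) {
        this.logFile = logFile;
        if (Files.exists(logFile)) {
            replay();
        }
    }

    // Helper that creates an empty temporary log file for experiments.
    static Path newTempLog() {
        try {
            return Files.createTempFile("aof-sketch", ".log");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    void set(String key, String value) {
        append("SET " + key + " " + value);
        data.put(key, value);
    }

    void del(String key) {
        append("DEL " + key);
        data.remove(key);
    }

    String get(String key) {
        return data.get(key);
    }

    // Every modifying command is appended to the end of the log file.
    private void append(String command) {
        try {
            Files.write(logFile, (command + "\n").getBytes(StandardCharsets.UTF_8),
                    StandardOpenOption.CREATE, StandardOpenOption.APPEND);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Re-apply the logged commands in order to rebuild the in-memory state.
    private void replay() {
        try {
            for (String line : Files.readAllLines(logFile, StandardCharsets.UTF_8)) {
                String[] parts = line.split(" ", 3);
                if (parts.length == 3 && parts[0].equals("SET")) {
                    data.put(parts[1], parts[2]);
                } else if (parts.length == 2 && parts[0].equals("DEL")) {
                    data.remove(parts[1]);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path log = newTempLog();
        AofSketch store = new AofSketch(log);
        store.set("name", "zhangsan");
        AofSketch restarted = new AofSketch(log);
        System.out.println(restarted.get("name"));
    }
}
```

Because only modifying commands are logged, reads cost nothing extra, and the state after a restart is whatever the log replays to.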

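3. Master-Replica Replication

3.1 What is master-replica replication

Persistence ensures that data survives a restart of the Redis service: on startup, Redis reloads the persisted data from disk into memory. If the server's disk fails, however, the data can still be lost. Replicating the data to another server avoids this single point of failure.

3.2 Master-replica configuration

3.2.1 Master configuration

No special configuration is required.

3.2.2 Replica configuration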

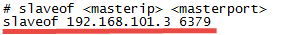

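Edit redis.conf on the replica server and add a line of the form: slaveof <master-ip> <master-port>

The configuration above tells this replica that its master is 192.168.0.209 on port 6379.

Partial resynchronization:

After a replica connects to the master, it sends a PSYNC command carrying the master's runid (an identifier string generated randomly by the master) and an offset (its position in the replication stream; if the master's and replica's offsets differ, their data is out of sync). The master checks whether both are valid. The runid works like an identity check that tells the master whether this replica was last synchronized with it. If the runid does not match, a full synchronization is performed. If it matches, the replica has synchronized with this master before, and only the data after the offset is sent.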
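The decision the master makes on PSYNC can be sketched as follows. This is a simplified model, not Redis source code: the class and parameter names are hypothetical, and treating an offset as valid only when it still falls inside the master's replication backlog is how this sketch interprets "offset is valid".

```java
class PsyncSketch {
    enum SyncMode { FULL, PARTIAL }

    // masterRunId:            the master's own runid
    // backlogStart/backlogEnd: range of offsets the master can still replay (assumed model)
    // replicaKnownRunId:      the runid the replica remembers from its previous master
    // replicaOffset:          how far the replica had replicated
    static SyncMode decide(String masterRunId, long backlogStart, long backlogEnd,
                           String replicaKnownRunId, long replicaOffset) {
        // Unknown or different master: the replica's data cannot be trusted, resync everything.
        if (!masterRunId.equals(replicaKnownRunId)) {
            return SyncMode.FULL;
        }
        // Same master, but the missing data is no longer available: resync everything.
        if (replicaOffset < backlogStart || replicaOffset > backlogEnd) {
            return SyncMode.FULL;
        }
        // Same master and the gap can be replayed: send only the data after the offset.
        return SyncMode.PARTIAL;
    }

    public static void main(String[] args) {
        System.out.println(decide("abc", 100, 500, "abc", 300));
        System.out.println(decide("abc", 100, 500, "xyz", 300));
    }
}
```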

三、redis集群

3.1 ruby环境

redis集群管理工具redis-trib.rb依赖ruby环境,首先需要安装ruby环境:

安装ruby

yum install ruby

gem install redis

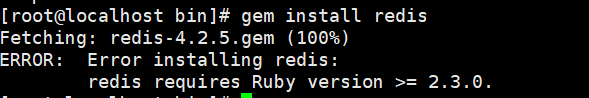

如果此处报错,说明redis4.0的版本需要ruby的版本较高,故此我们更新ruby版本:

执行下面命令:

(1)gpg --keyserver hkp://keys.gnupg.net --recv-keys 409B6B1796C275462A1703113804BB82D39DC0E3 7D2BAF1CF37B13E2069D6956105BD0E739499BDB

(2)curl -sSL https://get.rvm.io | bash -s stable

(3)source /etc/profile.d/rvm.sh

(4)rvm list known

(5)rvm install 2.6

(6)rvm use 2.6.3 default

(7)安装完毕后重新执行:gem install redis

3.2 创建集群

这里在同一台服务器用不同的端口表示不同的redis服务器,如下:

主节点:192.168.0.209:7001 192.168.0.209:7002 192.168.0.209:7003

从节点:192.168.0.209:7004 192.168.0.209:7005 192.168.0.209:7006

在/下创建redis-cluster目录,其下创建7001、7002。。7006目录,如下:

创建文件夹命令

mkdir -p /redis-cluster/700{1…6}/{logs,data} && cd /redis-cluster

生成配置文件命令(一起复制,不要单行复制)

IP=`ip a|grep 'inet' |grep -v '127.0.0.1'|grep -v 'inet6'|awk '{print $2}'|awk -F'/' '{print $1}'`

for i in {1..6}
do
cat > 700${i}/redis.conf <<EOF
daemonize yes
port 700${i}
cluster-enabled yes
cluster-config-file cluster-nodes-700${i}.conf
cluster-node-timeout 15000
appendonly yes
bind ${IP}
protected-mode no
dbfilename dump-700${i}.rdb
logfile /redis-cluster/700${i}/logs/redis.log
pidfile /redis-cluster/700${i}/data/redis.pid
dir /redis-cluster/700${i}/data
appendfilename "appendonly-700${i}.aof"
EOF
done
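As a cross-check of what the loop writes, the per-port template can be expressed as a small Java function. This is only an illustrative sketch: ClusterConfSketch and render are hypothetical names, and the bind address is passed in by the caller instead of being detected from the network interfaces.

```java
class ClusterConfSketch {
    // Render the redis.conf text for one cluster node on the given port.
    static String render(int port, String bindIp) {
        String base = "/redis-cluster/" + port;
        return String.join("\n",
                "daemonize yes",
                "port " + port,
                "cluster-enabled yes",
                "cluster-config-file cluster-nodes-" + port + ".conf",
                "cluster-node-timeout 15000",
                "appendonly yes",
                "bind " + bindIp,
                "protected-mode no",
                "dbfilename dump-" + port + ".rdb",
                "logfile " + base + "/logs/redis.log",
                "pidfile " + base + "/data/redis.pid",
                "dir " + base + "/data",
                "appendfilename \"appendonly-" + port + ".aof\"") + "\n";
    }

    public static void main(String[] args) {
        // Print the six configurations instead of writing them to disk.
        for (int port = 7001; port <= 7006; port++) {
            System.out.println("# " + port);
            System.out.print(render(port, "192.168.0.209"));
        }
    }
}
```

Each node gets its own port, data directory, and file names, so the six instances never share a cluster state file, RDB file, or AOF file.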

Start the Redis service on each node

(1) Copy the script below and save it as cluster.sh in the /redis-cluster directory:

#!/bin/bash
# by seven
# date 2019-04-09
# Redis management script
# start, stop, status
# Manages a single Redis instance by port: start it, shut it down, check its state
# e.g.: ./cluster.sh start 7001
#       ./cluster.sh stop 7001
# # # # # # # # #

# Parameters
IP=`ip a|grep 'inet' |grep -v '127.0.0.1'|grep -v 'inet6'|awk '{print $2}'|awk -F'/' '{print $1}'`
port=$2

if [ $# -ne 2 ];then
    echo "Warning! Two arguments are required: <action> <port>"
    exit 1
fi

if [ -z "${port}" ];then
    echo "Warning! Please specify a port"
    exit 1
fi

# start: launch the instance unless a redis process for this port is already running
start(){
    IS_PORT_EXISTS=$( ps -ef | grep redis | grep ${port} | grep -v grep | wc -l )
    if [ $IS_PORT_EXISTS -ge 1 ];then
        echo "Warning! Port ${port} is already in use!"
        exit 1
    else
        /redis-cluster/redis-server /redis-cluster/${port}/redis.conf
    fi
}

# stop: shut the instance down through redis-cli
stop(){
    IS_PORT_EXISTS=$( ps -ef | grep redis | grep ${port} | grep -v grep | wc -l )
    if [ $IS_PORT_EXISTS -lt 1 ];then
        echo "Warning! No redis process found for port ${port}!"
        exit 1
    else
        /redis-cluster/redis-cli -h ${IP} -p ${port} shutdown
    fi
}

# status: run the cluster check against this node
status(){
    /redis-cluster/redis-trib.rb check ${IP}:${port}
}

case $1 in
    start)
        start
        ;;
    stop)
        stop
        ;;
    status)
        status
        ;;
    ?|help)
        echo "Usage: $0 {start|status|stop|help|?} <port>"
        ;;
    *)
        echo "Usage: $0 {start|status|stop|help|?} <port>"
        ;;
esac

(2) Copy the client, the server, and the cluster script from the src directory of the Redis source tree into /redis-cluster:

cp /usr/local/tools/redis-test/redis-4.0.14/src/redis-cli /redis-cluster/redis-cli

cp /usr/local/tools/redis-test/redis-4.0.14/src/redis-server /redis-cluster/redis-server

cp /usr/local/tools/redis-test/redis-4.0.14/src/redis-trib.rb /redis-cluster/redis-trib.rb

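At this point the /redis-cluster directory looks as shown in the figure:

Make cluster.sh executable:

chmod 777 cluster.sh

Run the script once per port to start the six Redis processes (7001 is shown here; repeat for 7002 through 7006):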

./cluster.sh start 7001

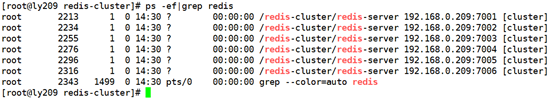

Check the running Redis processes:

Run the cluster creation command

redis-trib.rb is a Ruby script, so it depends on the Ruby environment.

./redis-trib.rb create --replicas 1 192.168.0.209:7001 192.168.0.209:7002 192.168.0.209:7003 192.168.0.209:7004 192.168.0.209:7005 192.168.0.209:7006

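Notes:

A Redis cluster needs at least 3 master nodes. With one replica per master, that makes 6 nodes in total.

--replicas 1 means each master node gets one replica.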
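The arithmetic behind these two notes can be written down as a short Java sketch. It models the rule stated above and is not taken from redis-trib.rb itself; ClusterLayoutSketch and masterCount are hypothetical names.

```java
class ClusterLayoutSketch {
    // Number of masters obtained from totalNodes when each master gets replicasPerMaster replicas.
    static int masterCount(int totalNodes, int replicasPerMaster) {
        if (totalNodes <= 0 || replicasPerMaster < 0) {
            throw new IllegalArgumentException("node and replica counts must be non-negative");
        }
        // Each master plus its replicas forms one group.
        int masters = totalNodes / (replicasPerMaster + 1);
        if (masters < 3) {
            throw new IllegalArgumentException(
                    "a cluster needs at least 3 masters, but this layout yields " + masters);
        }
        return masters;
    }

    public static void main(String[] args) {
        // Six nodes with one replica each, as in the create command above.
        System.out.println(masterCount(6, 1));
    }
}
```

With --replicas 1, four or five nodes are not enough, because they yield only two masters.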

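Note:

If the command fails with the following error:

[ERR] Node XXXXXX is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0

the fix is to delete the generated cluster configuration file (nodes.conf by default; cluster-nodes-700N.conf with the configuration generated above). If that does not help, the nodes being added still carry information from an old cluster, so delete the Redis persistence files (for example appendonly.aof and dump.rdb, or the per-port file names configured above) and then restart Redis.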

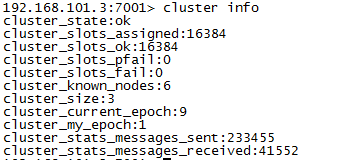

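Querying cluster information

Once the cluster has been created, log in to any Redis node to inspect the nodes in the cluster.

Connect with the client in cluster mode:

./redis-cli -c -h 192.168.0.209 -p 7001

Here -c connects to Redis in cluster mode, -h sets the IP address, and -p sets the port.

cluster nodes: lists the nodes in the cluster

cluster info: shows the cluster state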
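Connecting in cluster mode matters because each key belongs to one of 16384 hash slots, and each master serves only a subset of them, so a command may have to be redirected to another node. The sketch below computes a key's slot the way the Redis Cluster specification describes it: CRC16 (XMODEM variant) of the key modulo 16384, hashing only the part between the first { and the following } when that part is non-empty. KeySlotSketch is a hypothetical name, and the code is an illustration, not a replacement for the client library.

```java
import java.nio.charset.StandardCharsets;

class KeySlotSketch {
    // CRC16, XMODEM variant: polynomial 0x1021, initial value 0, no reflection.
    static int crc16(byte[] bytes) {
        int crc = 0;
        for (byte b : bytes) {
            crc ^= (b & 0xFF) << 8;
            for (int bit = 0; bit < 8; bit++) {
                if ((crc & 0x8000) != 0) {
                    crc = (crc << 1) ^ 0x1021;
                } else {
                    crc <<= 1;
                }
                crc &= 0xFFFF;
            }
        }
        return crc;
    }

    // Slot of a key; a non-empty {tag} inside the key is hashed instead of the whole key.
    static int slot(String key) {
        int open = key.indexOf('{');
        if (open >= 0) {
            int close = key.indexOf('}', open + 1);
            if (close > open + 1) {
                key = key.substring(open + 1, close);
            }
        }
        return crc16(key.getBytes(StandardCharsets.UTF_8)) % 16384;
    }

    public static void main(String[] args) {
        System.out.println("slot of \"name\": " + slot("name"));
    }
}
```

Keys that share a hash tag, such as {user1000}.following and {user1000}.followers, land in the same slot and therefore on the same node.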

JedisCluster

Test code

import java.util.HashSet;
import java.util.Set;

import org.junit.Test;

import redis.clients.jedis.HostAndPort;
import redis.clients.jedis.JedisCluster;
import redis.clients.jedis.JedisPoolConfig;

public class JedisClusterTest {

    // Connect to the Redis cluster
    @Test
    public void testJedisCluster() throws Exception {
        JedisPoolConfig config = new JedisPoolConfig();
        // Maximum number of connections
        config.setMaxTotal(30);
        // Maximum number of idle connections
        config.setMaxIdle(2);

        // Cluster nodes
        Set<HostAndPort> jedisClusterNode = new HashSet<HostAndPort>();
        jedisClusterNode.add(new HostAndPort("192.168.0.209", 7001));
        jedisClusterNode.add(new HostAndPort("192.168.0.209", 7002));
        jedisClusterNode.add(new HostAndPort("192.168.0.209", 7003));
        jedisClusterNode.add(new HostAndPort("192.168.0.209", 7004));
        jedisClusterNode.add(new HostAndPort("192.168.0.209", 7005));
        jedisClusterNode.add(new HostAndPort("192.168.0.209", 7006));

        // Pass the pool config so the limits above take effect,
        // and close the cluster client when the test is done.
        try (JedisCluster jc = new JedisCluster(jedisClusterNode, config)) {
            jc.set("name", "zhangsan");
            String value = jc.get("name");
            System.out.println(value);
        }
    }
}