**Submitting an MR job to YARN to run wordcount (wc)**

1. First, create two input files:

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ vi a.log    # create the first file with the content below, then save it

ruoze

jepson

www.ruozedata.com

dashu adai fanren

1

a

b

c

a

b

c

ruoze

jepon

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ vi b.txt    # then create the second file and save it

a

b

d

e

f

ruoze

1

1

3

5
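If you would rather not type the sample data in vi, here is a minimal sketch that writes the same two files non-interactively with shell heredocs. It assumes a bash-compatible shell and that you run it in the directory you want the files in (the Hadoop install directory in this walkthrough).

```shell
# Non-interactive alternative to editing a.log and b.txt in vi.
# The quoted 'EOF' delimiter stops the shell from expanding anything
# inside the heredoc, so the lines are written exactly as shown.
cat > a.log <<'EOF'
ruoze
jepson
www.ruozedata.com
dashu adai fanren
1
a
b
c
a
b
c
ruoze
jepon
EOF

cat > b.txt <<'EOF'
a
b
d
e
f
ruoze
1
1
3
5
EOF
```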

2. Create the nested directory /wordcount/input at the HDFS root (-p creates any missing parent directories):

hdfs dfs -mkdir -p /wordcount/input

3. Upload a.log and b.txt to that HDFS directory:

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -put a.log /wordcount/input

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -put b.txt /wordcount/input
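Steps 2 and 3 can also be folded into one small helper. This is a sketch, assuming the hdfs client is on PATH; upload_wordcount_input is a hypothetical name. It relies on hdfs dfs -put accepting several local sources before the destination, and on -f overwriting files that already exist there.

```shell
# Hypothetical helper: create the HDFS input directory and upload both
# sample files in one go, then list the directory as a quick check.
upload_wordcount_input() {
  local dest=/wordcount/input
  hdfs dfs -mkdir -p "$dest" || return 1
  # -f overwrites a.log / b.txt if they were uploaded before.
  hdfs dfs -put -f a.log b.txt "$dest" || return 1
  hdfs dfs -ls "$dest"
}
```

Usage: run upload_wordcount_input from the directory that holds a.log and b.txt.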

4. Locate the MapReduce examples jar with `find ./ -name '*examples*.jar'`. The jar we need is:

./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.7.0.jar

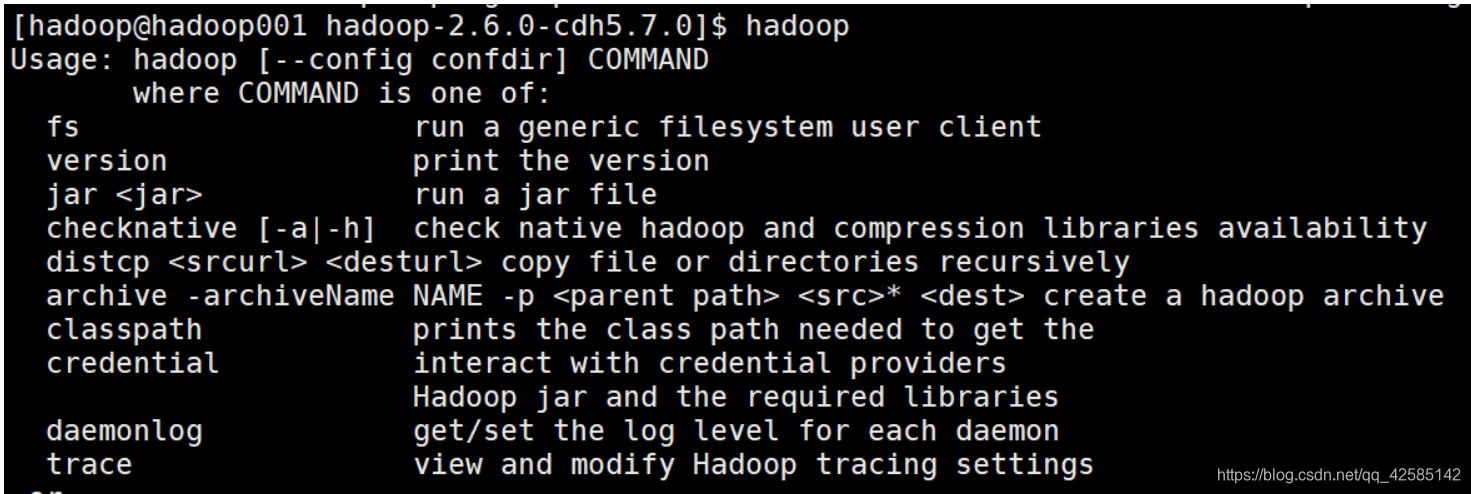
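The find pattern can also match source jars, so it can be handy to capture just the runnable jar in a variable. A minimal sketch, assuming a standard CDH tarball layout; find_examples_jar is a hypothetical helper, and if several non-source copies exist it simply keeps the first match.

```shell
# Hypothetical helper: print the path of the MapReduce examples jar
# under a Hadoop install directory (default: the current directory).
# Jars whose names contain "sources" are skipped.
find_examples_jar() {
  find "${1:-.}" -name 'hadoop-mapreduce-examples-*.jar' ! -name '*sources*' | head -n 1
}
```

Usage: EXAMPLES_JAR=$(find_examples_jar .) run from hadoop-2.6.0-cdh5.7.0 should yield the path shown above.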

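5. Next, check the command help:

Type hadoop and press Enter, then scroll down the usage text; you will find a jar command, which runs a jar file.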

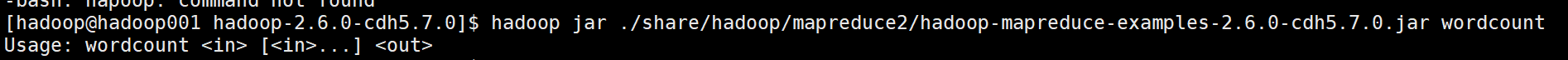
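The jar command takes the jar path, then the example program name (wordcount) and its input and output paths. Because the job fails when the output directory already exists, a small wrapper can check for it first. This is a sketch, assuming hadoop and hdfs are on PATH; run_wordcount is a hypothetical name, and hdfs dfs -test -e returns 0 when the path exists.

```shell
# Hypothetical wrapper around "hadoop jar" for the bundled wordcount
# example: refuse to start if the output path is already present.
run_wordcount() {
  local jar=$1 input=$2 output=$3
  if hdfs dfs -test -e "$output"; then
    echo "output path $output already exists; delete it or choose a new one" >&2
    return 1
  fi
  hadoop jar "$jar" wordcount "$input" "$output"
}
```

Usage, equivalent to the command in step 7: run_wordcount ./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.7.0.jar /wordcount/input /wordcount/output1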

6. Enter the command:

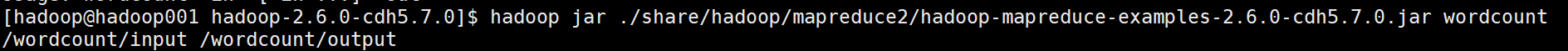

7. Then enter the wordcount command (note: the output directory output1 must not exist beforehand; if it does, the job fails):

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hadoop jar \

./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.7.0.jar \

wordcount /wordcount/input /wordcount/output1

8. View the results:

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -cat /wordcount/output1/part-r-00000

19/02/16 22:05:46 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

1 3

3 1

5 1

a 3

adai 1

b 3

c 2

d 1

dashu 1

e 1

f 1

fanren 1

jepon 1

jepson 1

ruoze 3

www.ruozedata.com 1
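To sanity-check these counts, here is a minimal sketch that reproduces them locally with standard Unix tools; local_wordcount is a hypothetical helper and assumes bash, tr, sort, uniq and awk. It splits on whitespace like the WordCount example, sorts bytewise (LC_ALL=C) as Hadoop orders its text keys, and separates word and count with a tab, which is what Hadoop writes (the listing above shows it as a space).

```shell
# Hypothetical local cross-check of the MapReduce wordcount output.
local_wordcount() {
  cat "$@" \
    | tr -s '[:space:]' '\n' \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

Usage: local_wordcount a.log b.txt should print the same 16 lines. Once part-r-00000 has been downloaded with the hdfs dfs -get command below, diff <(local_wordcount a.log b.txt) part-r-00000 should print nothing.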

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -get /wordcount/output1/part-r-00000 ./

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ cat part-r-00000

1 3

3 1

5 1

a 3

adai 1

b 3

c 2

d 1

dashu 1

e 1

f 1

fanren 1

jepon 1

jepson 1

ruoze 3

www.ruozedata.com 1
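The downloaded part-r-00000 is an ordinary text file with one word and its count per line, separated by a tab, so the usual Unix tools work on it. As one example, a sketch that lists the most frequent words; top_words is a hypothetical helper, and ties are broken in byte order so the result is deterministic.

```shell
# Hypothetical helper: print the N most frequent words (default 5)
# from a wordcount result file of "word<TAB>count" lines.
top_words() {
  local file=$1 n=${2:-5}
  # Sort by count (numeric, descending), then by word (ascending).
  LC_ALL=C sort -t "$(printf '\t')" -k2,2nr -k1,1 "$file" | head -n "$n"
}
```

Usage: top_words part-r-00000 3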