Preface

This section covers several deep convolutional neural networks:

- AlexNet

- VGG

- NiN

- GoogLeNet

1. AlexNet

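- Proposed by Alex Krizhevsky in 2012

- Won the ImageNet 2012 image recognition challenge by a large margin

- Has 5 convolutional layers, 2 fully connected hidden layers, and 1 fully connected output layer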

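Convolutional layers

- The first layer uses an 11 x 11 convolution window

- The second layer shrinks the window to 5 x 5

- All later layers use 3 x 3 windows

- The first, second, and fifth convolutional layers are each followed by a max-pooling layer with a 3 x 3 window and stride 2.

Fully connected layers

- Two hidden layers, each with 4096 outputs

The activation function is ReLU.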
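The feature-map sizes can be checked by hand with the usual output-size formula, out = floor((n + 2 * padding - kernel) / stride) + 1. Below is a small pure-Python sketch of that arithmetic. It assumes a 224 x 224 input, as in the AlexNet code in this section, and floor rounding, which MXNet's Conv2D and MaxPool2D use by default. The helper `out_size` and the `alexnet_layers` table are hypothetical and exist only for illustration. The sketch also counts the parameters of the first 4096-unit Dense layer, which shows why the fully connected layers hold most of AlexNet's parameters.

```python
# Output height/width of a convolution or pooling layer (floor rounding):
# out = (n + 2 * padding - kernel) // stride + 1
def out_size(n, kernel, stride=1, padding=0):
    return (n + 2 * padding - kernel) // stride + 1


# Hypothetical table: (name, kernel, stride, padding) for the layers of the
# AlexNet code in this section that can change the height and width
alexnet_layers = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]

size = 224  # height (= width) of the input image
sizes = {}
for name, kernel, stride, padding in alexnet_layers:
    size = out_size(size, kernel, stride, padding)
    sizes[name] = size
    print(f"{name}: {size} x {size}")

# The last conv layer has 256 output channels, so the first Dense layer
# receives 256 * size * size inputs after flattening
flat_features = 256 * sizes["pool3"] ** 2
# Weights plus biases of the first Dense(4096) layer
dense1_params = flat_features * 4096 + 4096
print("inputs to first Dense layer:", flat_features)
print("parameters in first Dense layer:", dense1_params)
```

The printed sizes (54, 26, 26, 12, 12, 12, 12, 5) should match the height and width reported by the shape-inspection loop in the MXNet code.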

A simple implementation:

import os
import sys

import d2lzh as d2l
from mxnet import gluon, init, nd
from mxnet.gluon import data as gdata, nn

"""A simple implementation of AlexNet"""

# AlexNet model
net = nn.Sequential()
# Use a large 11 x 11 window to capture objects, with stride 4 to sharply reduce the output height and width.
# The number of output channels is also much larger than in LeNet.
net.add(nn.Conv2D(96, kernel_size=11, strides=4, activation='relu'),
        nn.MaxPool2D(pool_size=3, strides=2),
        # Shrink the convolution window, use padding 2 so the output height and width equal the input's,
        # and increase the number of output channels
        nn.Conv2D(256, kernel_size=5, padding=2, activation='relu'),
        nn.MaxPool2D(pool_size=3, strides=2),
        # Three consecutive convolutional layers with an even smaller window. All but the last one
        # further increase the number of output channels.
        # No pooling follows the first two, so they do not reduce the height and width.
        nn.Conv2D(384, kernel_size=3, padding=1, activation='relu'),
        nn.Conv2D(384, kernel_size=3, padding=1, activation='relu'),
        nn.Conv2D(256, kernel_size=3, padding=1, activation='relu'),
        nn.MaxPool2D(pool_size=3, strides=2),
        # The fully connected layers have several times more outputs than in LeNet.
        # Dropout layers are used to mitigate overfitting.
        nn.Dense(4096, activation="relu"), nn.Dropout(0.5),
        nn.Dense(4096, activation="relu"), nn.Dropout(0.5),
        # Output layer. Fashion-MNIST is used here, so there are 10 classes instead of the paper's 1000.
        nn.Dense(10))

# Build a single-channel sample with height and width 224 to inspect each layer's output shape.
X = nd.random.uniform(shape=(1, 1, 224, 224))
net.initialize()
for layer in net:
    X = layer(X)
    print(layer.name, 'output shape:\t', X.shape)

# Read the data
def load_data_fashion_mnist(batch_size, resize=None,
                            root=os.path.join('~', '.mxnet', 'datasets', 'fashion-mnist')):
    root = os.path.expanduser(root)  # expand the user path '~'
    transformer = []
    if resize:  # enlarge the images to the 224 x 224 height and width used by AlexNet
        transformer += [gdata.vision.transforms.Resize(resize)]
    transformer += [gdata.vision.transforms.ToTensor()]
    transformer = gdata.vision.transforms.Compose(transformer)
    mnist_train = gdata.vision.FashionMNIST(root=root, train=True)
    mnist_test = gdata.vision.FashionMNIST(root=root, train=False)
    # No extra worker processes on Windows, 4 elsewhere
    num_workers = 0 if sys.platform.startswith('win32') else 4
    train_iter = gdata.DataLoader(mnist_train.transform_first(transformer),
                                  batch_size, shuffle=True, num_workers=num_workers)
    test_iter = gdata.DataLoader(mnist_test.transform_first(transformer),
                                 batch_size, shuffle=False, num_workers=num_workers)
    return train_iter, test_iter

batch_size = 128  # if you get an "out of memory" error, reduce batch_size or resize
train_iter, test_iter = load_data_fashion_mnist(batch_size, resize=224)

# Training
lr, num_epochs, ctx = 0.01, 5, d2l.try_gpu()
net.initialize(force_reinit=True, ctx=ctx, init=init.Xavier())
trainer = gluon.Trainer(net.collect_params(), 'sgd', {'learning_rate': lr})
d2l.train_ch5(net, train_iter, test_iter, batch_size, trainer, ctx, num_epochs)

2. VGG

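- Proposed by the Visual Geometry Group at the University of Oxford

- Builds deep models by repeating simple basic blocks

- Each block stacks several identical convolutional layers with 3 x 3 windows and padding 1, followed by a max-pooling layer with a 2 x 2 window and stride 2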
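Every 3 x 3 convolution with padding 1 keeps the height and width unchanged, so within each block only the pooling layer changes the spatial size, and it halves it. The following pure-Python sketch does that bookkeeping. It reuses the `conv_arch` tuple and the `ratio = 4` narrowing from the VGG code in this section. Everything else is illustrative. The sketch shows how five blocks turn a 224 x 224 input into 7 x 7. It also shows where the name VGG-11 comes from: 8 convolutional layers plus 3 fully connected layers.

```python
# VGG-11 configuration used in this section: (conv layers, output channels) per block
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

size = 224  # input height (= width)
for num_convs, num_channels in conv_arch:
    # 3x3 convs with padding 1 keep the size: (n + 2 - 3) // 1 + 1 == n
    # the 2x2 max pool with stride 2 then halves it: (n - 2) // 2 + 1
    size = (size - 2) // 2 + 1
    print(f"{num_convs} conv(s) -> {num_channels} x {size} x {size}")

# Layers with weights: all conv layers plus the 3 Dense layers
num_conv_layers = sum(num_convs for num_convs, _ in conv_arch)
num_weight_layers = num_conv_layers + 3
flat_features = conv_arch[-1][1] * size * size  # inputs to the first Dense layer
print("conv layers:", num_conv_layers, "weight layers:", num_weight_layers)
print("inputs to first Dense layer:", flat_features)

# Narrower variant used for training: every channel count divided by ratio
ratio = 4
small_conv_arch = [(num_convs, num_channels // ratio) for num_convs, num_channels in conv_arch]
print(small_conv_arch)
```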

import d2lzh as d2l
from mxnet import gluon, init, nd
from mxnet.gluon import nn

"""Implementing VGG"""

# Basic block: num_convs sets the number of conv layers, num_channels the number of output channels
def vgg_block(num_convs, num_channels):
    blk = nn.Sequential()
    for _ in range(num_convs):
        blk.add(nn.Conv2D(num_channels, kernel_size=3, padding=1, activation='relu'))
    blk.add(nn.MaxPool2D(pool_size=2, strides=2))
    return blk

# VGG network; with the conv_arch below it is VGG-11
def vgg(conv_arch):
    net = nn.Sequential()
    # Convolutional part
    for (num_convs, num_channels) in conv_arch:
        net.add(vgg_block(num_convs, num_channels))
    # Fully connected part
    net.add(nn.Dense(4096, activation='relu'), nn.Dropout(0.5),
            nn.Dense(4096, activation='relu'), nn.Dropout(0.5),
            nn.Dense(10))
    return net

conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))
net = vgg(conv_arch)

# Build a single-channel sample with height and width 224 to inspect each block's output shape.
net.initialize()
X = nd.random.uniform(shape=(1, 1, 224, 224))
for blk in net:
    X = blk(X)
    print(blk.name, 'output shape:\t', X.shape)

# Data and training
# Divide every channel count by ratio to get a narrower network that trains faster
ratio = 4
small_conv_arch = [(pair[0], pair[1] // ratio) for pair in conv_arch]
net = vgg(small_conv_arch)

lr, num_epochs, batch_size, ctx = 0.05, 5, 128, d2l.try_gpu()
net.initialize(ctx=ctx, init=init.Xavier())
trainer = gluon.Trainer(net.collect_params(), 'sgd', {'learning_rate': lr})
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=224)
d2l.train_ch5(net, train_iter, test_iter, batch_size, trainer, ctx, num_epochs)

3. NiN

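Network in Network

- Builds a deep network by chaining small networks, each made of a convolutional layer followed by "fully connected" layers (implemented as 1 x 1 convolutions)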
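The "fully connected" layers inside a NiN block are 1 x 1 convolutions. At every pixel, a 1 x 1 convolution applies the same weights to that pixel's vector of channel values, which is exactly a dense layer applied separately at each position. The small pure-Python sketch below checks this equivalence with made-up numbers. The functions `conv1x1` and `dense` are hypothetical helpers, not Gluon APIs, and the ReLU that NiN applies afterwards is omitted.

```python
def conv1x1(x, weight, bias):
    """1 x 1 convolution on x[c][i][j] (C_in x H x W); weight[o][c] mixes channels."""
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    return [
        [
            [sum(weight[o][c] * x[c][i][j] for c in range(c_in)) + bias[o] for j in range(w)]
            for i in range(h)
        ]
        for o in range(len(weight))
    ]


def dense(v, weight, bias):
    """Fully connected layer on one feature vector v (length C_in)."""
    return [sum(w_o[c] * v[c] for c in range(len(v))) + b_o for w_o, b_o in zip(weight, bias)]


# Made-up input: 2 channels, each 2 x 2; 3 output channels
x = [[[1, 2], [3, 4]],
     [[5, 6], [7, 8]]]
weight = [[1, 0], [0, 1], [1, -1]]
bias = [0, 0, 10]

y = conv1x1(x, weight, bias)
for i in range(2):
    for j in range(2):
        pixel = [x[c][i][j] for c in range(2)]     # channel vector at (i, j)
        out = [y[o][i][j] for o in range(3)]       # 1x1 conv output at (i, j)
        assert out == dense(pixel, weight, bias)   # same as a dense layer on that pixel
print(y)
```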

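Another difference from AlexNet:

- NiN removes AlexNet's final 3 fully connected layers

- Instead, NiN uses a NiN block whose number of output channels equals the number of label classes

- It then applies a global average pooling layer, which averages all elements in each channel, and uses the result directly for classification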
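Global average pooling replaces AlexNet's final fully connected layers. In NiN, each of the 10 output channels of the last NiN block is averaged over its height and width, giving one score per class, and the layer has no weights of its own. The minimal pure-Python sketch below uses a hypothetical 3-class, 2 x 2 feature map. The helper `global_avg_pool` is illustrative only. In the Gluon code, the same step is `nn.GlobalAvgPool2D()` followed by `nn.Flatten()`.

```python
def global_avg_pool(x):
    """(C, H, W) nested lists -> list of C channel means."""
    return [sum(sum(row) for row in channel) / (len(channel) * len(channel[0])) for channel in x]


# Hypothetical output of the last NiN block: 3 channels (one per class), each 2 x 2
feature_maps = [
    [[1.0, 3.0], [5.0, 7.0]],
    [[0.0, 0.0], [0.0, 4.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]

scores = global_avg_pool(feature_maps)  # one score per class, no weights involved
predicted_class = max(range(len(scores)), key=lambda k: scores[k])
print(scores)           # [4.0, 1.0, 2.0]
print(predicted_class)  # 0
```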

import d2lzh as d2l
from mxnet import gluon, init, nd
from mxnet.gluon import nn

"""Implementing NiN"""

# Basic block: one ordinary convolutional layer followed by two 1 x 1 convolutional layers
def nin_block(num_channels, kernel_size, strides, padding):
    blk = nn.Sequential()
    blk.add(nn.Conv2D(num_channels, kernel_size, strides, padding, activation='relu'),
            nn.Conv2D(num_channels, kernel_size=1, activation='relu'),
            nn.Conv2D(num_channels, kernel_size=1, activation='relu'))
    return blk

# NiN
net = nn.Sequential()
net.add(nin_block(96, kernel_size=11, strides=4, padding=0),
        nn.MaxPool2D(pool_size=3, strides=2),
        nin_block(256, kernel_size=5, strides=1, padding=2),
        nn.MaxPool2D(pool_size=3, strides=2),
        nin_block(384, kernel_size=3, strides=1, padding=1),
        nn.MaxPool2D(pool_size=3, strides=2), nn.Dropout(0.5),
        # There are 10 label classes
        nin_block(10, kernel_size=3, strides=1, padding=1),
        # Global average pooling automatically sets its window to the input's height and width
        nn.GlobalAvgPool2D(),
        # Convert the 4-D output into a 2-D output of shape (batch size, 10)
        nn.Flatten())

# Build a data sample to inspect each layer's output shape.
X = nd.random.uniform(shape=(1, 1, 224, 224))
net.initialize()
for layer in net:
    X = layer(X)
    print(layer.name, 'output shape:\t', X.shape)

# Data and training
lr, num_epochs, batch_size, ctx = 0.1, 5, 128, d2l.try_gpu()
net.initialize(force_reinit=True, ctx=ctx, init=init.Xavier())
trainer = gluon.Trainer(net.collect_params(), 'sgd', {'learning_rate': lr})
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=224)
d2l.train_ch5(net, train_iter, test_iter, batch_size, trainer, ctx, num_epochs)

4. GoogLeNet

Appeared in the 2014 ImageNet image recognition challenge

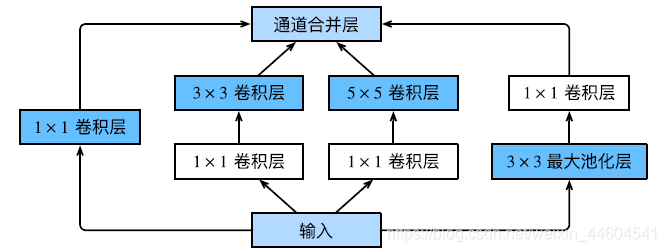

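The basic convolutional block: the Inception block

- Equivalent to a subnetwork with 4 parallel paths

- Extracts information in parallel through convolutional layers with different window shapes and a max-pooling layer

- Uses 1 x 1 convolutional layers to reduce the number of channels, which lowers model complexity

- GoogLeNet chains several carefully designed Inception blocks together with other layers

- The ratio in which channels are allocated to the paths of each Inception block was obtained through extensive experiments on the ImageNet dataset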
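The four paths are concatenated along the channel dimension. An Inception block therefore outputs c1 + c2[1] + c3[1] + c4 channels, which is how the channel counts in the comments of the GoogLeNet code are obtained. The sketch below also compares weight counts, ignoring biases, for path 3 of the first Inception block in module 3. That path's input has 192 channels, coming from module 2. The comparison is between a direct 5 x 5 convolution and the 1 x 1 reduction followed by a 5 x 5 convolution. The helper names are hypothetical, and this is plain arithmetic rather than a Gluon API.

```python
def inception_out_channels(c1, c2, c3, c4):
    """Channels after concatenating the four paths; c2 and c3 are (reduce, output) pairs."""
    return c1 + c2[1] + c3[1] + c4


def conv_weights(c_in, c_out, k):
    """Number of weights (biases ignored) in a k x k convolution."""
    return c_in * c_out * k * k


# Channel counts of some blocks in modules 3 and 5 of the GoogLeNet code
print(inception_out_channels(64, (96, 128), (16, 32), 32))      # module 3, block 1: 256
print(inception_out_channels(128, (128, 192), (32, 96), 64))    # module 3, block 2: 480
print(inception_out_channels(384, (192, 384), (48, 128), 128))  # module 5, block 2: 1024

# Path 3 of module 3, block 1: 192 input channels (output of module 2), 32 output channels
direct = conv_weights(192, 32, 5)                                # a single 5x5 conv
bottleneck = conv_weights(192, 16, 1) + conv_weights(16, 32, 5)  # 1x1 down to 16, then 5x5
print(direct, bottleneck, round(direct / bottleneck, 1))
```

In this example, the 1 x 1 reduction cuts the path's weights by roughly a factor of ten. That is the complexity saving the bullet list refers to.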

import d2lzh as d2l
from mxnet import gluon, init, nd
from mxnet.gluon import nn

"""Implementing GoogLeNet"""

# Basic block: Inception
class Inception(nn.Block):
    # c1 - c4 are the numbers of output channels of the layers in each path
    def __init__(self, c1, c2, c3, c4, **kwargs):
        super(Inception, self).__init__(**kwargs)
        # Path 1: a single 1 x 1 convolutional layer
        self.p1_1 = nn.Conv2D(c1, kernel_size=1, activation='relu')
        # Path 2: a 1 x 1 convolutional layer followed by a 3 x 3 convolutional layer
        self.p2_1 = nn.Conv2D(c2[0], kernel_size=1, activation='relu')
        self.p2_2 = nn.Conv2D(c2[1], kernel_size=3, padding=1, activation='relu')
        # Path 3: a 1 x 1 convolutional layer followed by a 5 x 5 convolutional layer
        self.p3_1 = nn.Conv2D(c3[0], kernel_size=1, activation='relu')
        self.p3_2 = nn.Conv2D(c3[1], kernel_size=5, padding=2, activation='relu')
        # Path 4: a 3 x 3 max-pooling layer followed by a 1 x 1 convolutional layer
        self.p4_1 = nn.MaxPool2D(pool_size=3, strides=1, padding=1)
        self.p4_2 = nn.Conv2D(c4, kernel_size=1, activation='relu')

    def forward(self, x):
        p1 = self.p1_1(x)
        p2 = self.p2_2(self.p2_1(x))
        p3 = self.p3_2(self.p3_1(x))
        p4 = self.p4_2(self.p4_1(x))
        return nd.concat(p1, p2, p3, p4, dim=1)  # concatenate the outputs along the channel dimension

# GoogLeNet model
# Module 1 uses a 64-channel 7 x 7 convolutional layer.
b1 = nn.Sequential()
b1.add(nn.Conv2D(64, kernel_size=7, strides=2, padding=3, activation='relu'),
       nn.MaxPool2D(pool_size=3, strides=2, padding=1))

# Module 2 uses 2 convolutional layers: a 64-channel 1 x 1 convolutional layer, then a 3 x 3
# convolutional layer that triples the number of channels. This corresponds to the second path of the Inception block.
b2 = nn.Sequential()
b2.add(nn.Conv2D(64, kernel_size=1, activation='relu'),
       nn.Conv2D(192, kernel_size=3, padding=1, activation='relu'),
       nn.MaxPool2D(pool_size=3, strides=2, padding=1))

# Module 3 chains 2 complete Inception blocks.
# The first Inception block has 64+128+32+32=256 output channels.
# The second Inception block increases the output channels to 128+192+96+64=480.
b3 = nn.Sequential()
b3.add(Inception(64, (96, 128), (16, 32), 32),
       Inception(128, (128, 192), (32, 96), 64),
       nn.MaxPool2D(pool_size=3, strides=2, padding=1))

# Module 4 chains 5 Inception blocks with 192+208+48+64=512, 160+224+64+64=512,
# 128+256+64+64=512, 112+288+64+64=528, and 256+320+128+128=832 output channels.
b4 = nn.Sequential()
b4.add(Inception(192, (96, 208), (16, 48), 64),
       Inception(160, (112, 224), (24, 64), 64),
       Inception(128, (128, 256), (24, 64), 64),
       Inception(112, (144, 288), (32, 64), 64),
       Inception(256, (160, 320), (32, 128), 128),
       nn.MaxPool2D(pool_size=3, strides=2, padding=1))

# Module 5 has two Inception blocks with 256+320+128+128=832 and 384+384+128+128=1024 output channels.
# It is followed by the output layer; like NiN, it uses global average pooling to reduce each channel's height and width to 1.
# Finally, the output is turned into a 2-D array and fed into a fully connected layer with as many outputs as label classes.
b5 = nn.Sequential()
b5.add(Inception(256, (160, 320), (32, 128), 128),
       Inception(384, (192, 384), (48, 128), 128),
       nn.GlobalAvgPool2D())

# nn.Dense flattens its 4-D input to 2-D by default
net = nn.Sequential()
net.add(b1, b2, b3, b4, b5, nn.Dense(10))

# Build a data sample to inspect each module's output shape
# (96 x 96 instead of 224 x 224 keeps training on Fashion-MNIST short)
X = nd.random.uniform(shape=(1, 1, 96, 96))
net.initialize()
for layer in net:
    X = layer(X)
    print(layer.name, 'output shape:\t', X.shape)

# Data and training
lr, num_epochs, batch_size, ctx = 0.1, 5, 128, d2l.try_gpu()
net.initialize(force_reinit=True, ctx=ctx, init=init.Xavier())
trainer = gluon.Trainer(net.collect_params(), 'sgd', {'learning_rate': lr})
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=96)
d2l.train_ch5(net, train_iter, test_iter, batch_size, trainer, ctx, num_epochs)

Conclusion

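This section gave a brief overview of these deep convolutional neural networks.

Their design rationale and further extensions are left for future study.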