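Chapter 4: nginx Configuration Example - Reverse Proxy

4.1 Reverse Proxy Example 1

Goal: use an nginx reverse proxy so that a request for www.123.com is forwarded directly to 127.0.0.1:8080.

4.1.1 Experiment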

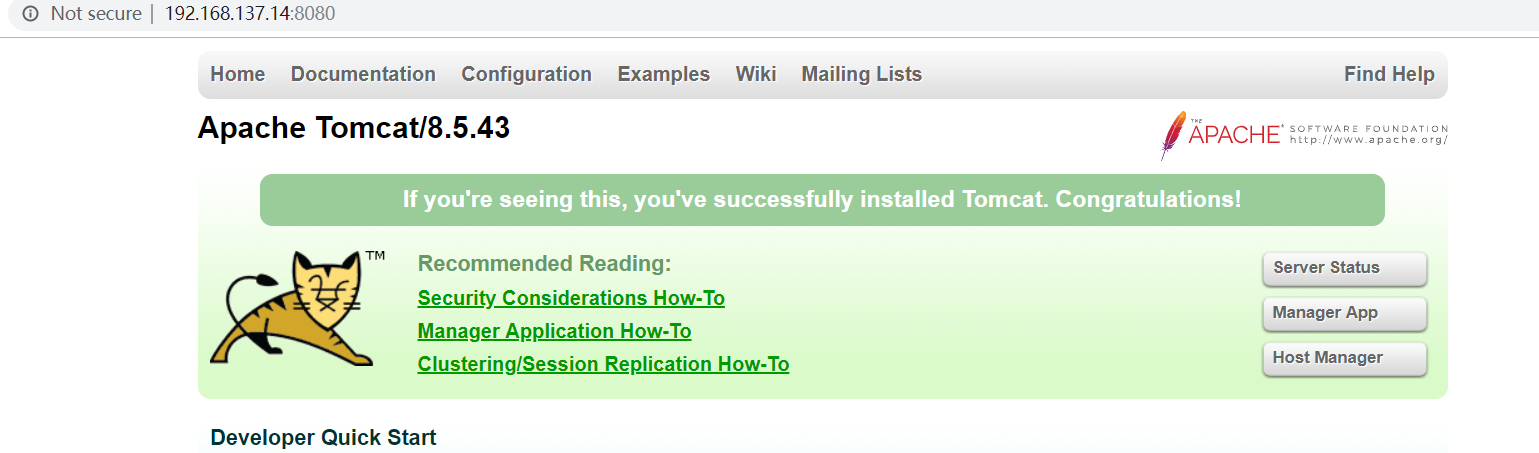

1) Start a Tomcat container. Entering 127.0.0.1:8080 in the browser's address bar should then show the Tomcat welcome page.

```
[root@hadoop-104 ~]# docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
tomcat latest 238e6d7313e3 9 months ago 506MB
[root@hadoop-104 ~]# docker run -d -p 8080:8080 tomcat
08c7bd6f8d662a6a0a651b4bf40936bbc078b46bd8acb3111c743f14032c302a
[root@hadoop-104 ~]# docker ps -l
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
08c7bd6f8d66 tomcat "catalina.sh run" 15 seconds ago Up 11 seconds 0.0.0.0:8080->8080/tcp lucid_boyd
[root@hadoop-104 ~]#

# Check the host's IP address
[root@hadoop-104 ~]# hostname -i
192.168.137.14

# Check that Tomcat is reachable
[root@hadoop-104 ~]# curl http://192.168.137.14:8080/
<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="UTF-8" />
<title>Apache Tomcat/8.5.43</title>
<link href="favicon.ico" rel="icon" type="image/x-icon" />
<link href="favicon.ico" rel="shortcut icon" type="image/x-icon" />
<link href="tomcat.css" rel="stylesheet" type="text/css" />
</head>
<body>
...
</body>
</html>
```

Access it from a browser on an external machine:

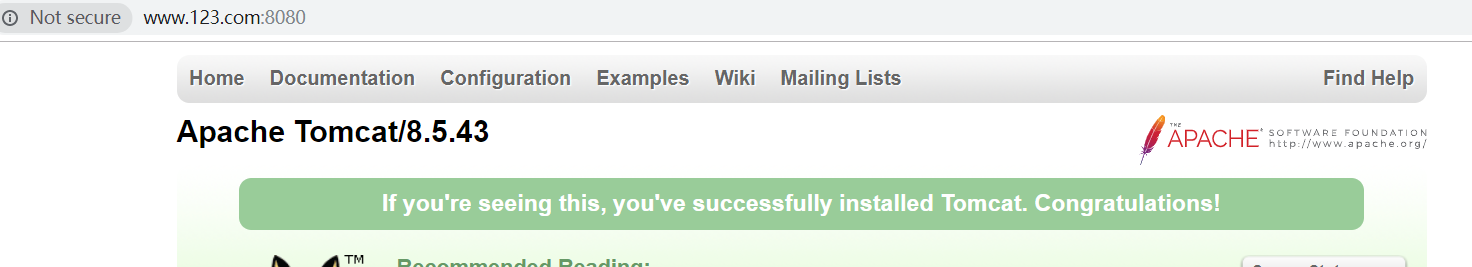

2)通过修改本地 host 文件,将 www.123.com 映射到192.168.137.14

配置完成之后,我们便可以通过 www.123.com:8080 访问到第一步出现的 Tomcat 初始界面。那么如何只需要输入 www.123.com 便可以跳转到 Tomcat 初始界面呢?便用到 nginx的反向代理。

192.168.137.14 www.123.com

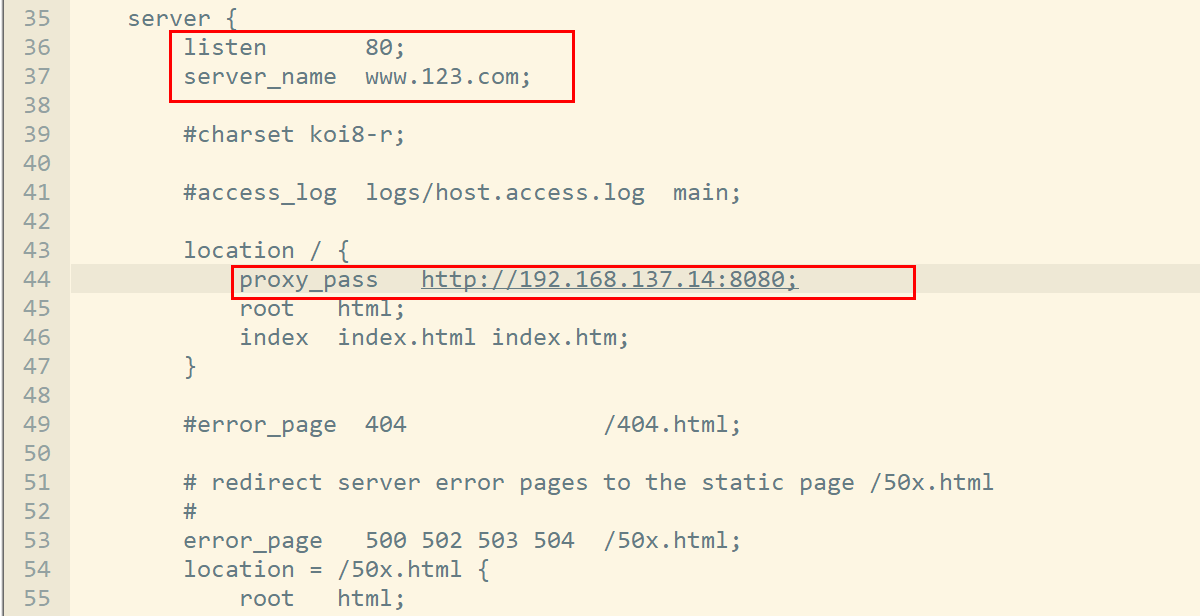
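On a Linux or macOS client the mapping above goes into /etc/hosts (on Windows the file is C:\Windows\System32\drivers\etc\hosts). The sketch below, in Bash like the rest of this chapter's commands, appends the line only if that mapping is not already present, so running it twice is harmless. The function name add_hosts_entry is hypothetical, and the file path is a parameter so it can be tried on a scratch copy before touching the real file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add "IP hostname" to a hosts file unless that mapping already exists.
add_hosts_entry() {
    local file="$1" ip="$2" name="$3"
    # awk exits 0 if a line has the IP in field 1 and the name in a later field.
    # Commented-out lines start with "#", so their first field never equals the IP.
    if awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0    # already mapped, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on the real file (needs root):
# add_hosts_entry /etc/hosts 192.168.137.14 www.123.com
```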

3) Add the following configuration to the nginx.conf file:

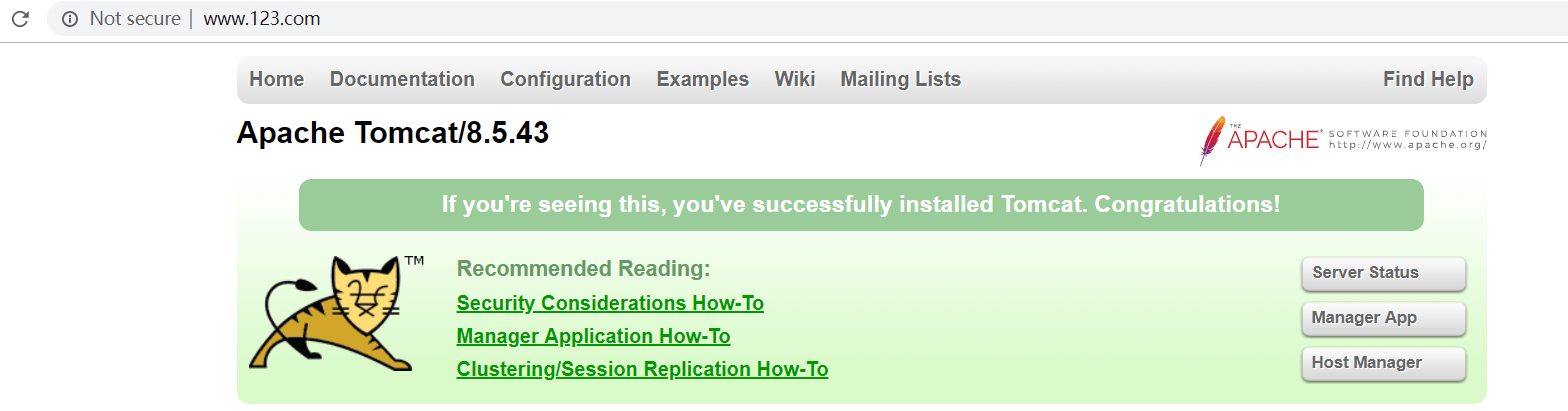

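With this configuration nginx listens on port 80 for the domain www.123.com. A URL without a port number defaults to port 80, so a request for www.123.com is forwarded to 127.0.0.1:8080 (nginx runs on the same host as the Tomcat container, so the loopback address reaches it). Entering www.123.com in the browser should now show the Tomcat welcome page.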
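The configuration itself is only shown in the screenshot. As a rough text equivalent, assuming only the three settings described above (listen on 80, server name www.123.com, forward to 127.0.0.1:8080), the hypothetical helper below writes such a server block to a file; the screenshot may contain additional directives.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal reverse-proxy server block to the given file.
# Reconstructed from the prose description, not copied from the screenshot.
write_proxy_conf() {
    local out="$1"
    cat > "$out" <<'EOF'
server {
    listen 80;
    server_name www.123.com;

    location / {
        # Forward every request to the Tomcat container published on port 8080.
        proxy_pass http://127.0.0.1:8080;
    }
}
EOF
}
```

For example, write_proxy_conf /etc/nginx/conf.d/www.123.com.conf, then nginx -t to check the syntax and nginx -s reload to apply it. The conf.d path is an assumption that only holds for packaged nginx builds whose nginx.conf includes conf.d/*.conf; with a source-built nginx, paste the block into the http {} section of nginx.conf instead.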

If the page loads on the Linux host itself but not from an external machine, check whether the firewall is running; if it is, stop it and try again.

```
# Check the firewall status
[root@hadoop-104 nginx]# service firewalld status ;
Redirecting to /bin/systemctl status ; firewalld.service
Unit \xef\xbc\x9b.service could not be found.
● firewalld.service - firewalld - dynamic firewall daemon
Loaded: loaded (/usr/lib/systemd/system/firewalld.service; enabled; vendor preset: enabled)
Active: active (running) since Sun 2020-04-19 01:52:58 EDT; 2h 49min ago
Docs: man:firewalld(1)
Main PID: 5944 (firewalld)
CGroup: /system.slice/firewalld.service
└─5944 /usr/bin/python -Es /usr/sbin/firewalld --nofork --nopid

# Stop the firewall
[root@hadoop-104 nginx]# service firewalld stop ;
Redirecting to /bin/systemctl stop ; firewalld.service
Failed to stop \xef\xbc\x9b.service: Unit \xef\xbc\x9b.service not loaded.

# Check the firewall status again
[root@hadoop-104 nginx]# service firewalld status ;
Redirecting to /bin/systemctl status ; firewalld.service
Unit \xef\xbc\x9b.service could not be found.
● firewalld.service - firewalld - dynamic firewall daemon
Loaded: loaded (/usr/lib/systemd/system/firewalld.service; enabled; vendor preset: enabled)
Active: inactive (dead) since Sun 2020-04-19 04:42:10 EDT; 6s ago
Docs: man:firewalld(1)
Process: 5944 ExecStart=/usr/sbin/firewalld --nofork --nopid $FIREWALLD_ARGS (code=exited, status=0/SUCCESS)
Main PID: 5944 (code=exited, status=0/SUCCESS)
[root@hadoop-104 nginx]#
```

The "Unit \xef\xbc\x9b.service" messages come from a full-width semicolon (；) typed after the command, which systemctl treated as an extra unit name. They are harmless here (the second status check shows firewalld did stop); typing systemctl status firewalld and systemctl stop firewalld avoids them.
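Stopping firewalld removes all filtering until it is started again. A narrower alternative, sketched below under the assumption that firewalld is the active firewall, opens only the TCP ports this chapter needs with firewall-cmd --permanent --add-port and then reloads the rules. The function open_ports is hypothetical; setting FIREWALL_CMD=echo prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: open the given TCP ports in firewalld instead of stopping it.
open_ports() {
    local cmd="${FIREWALL_CMD:-firewall-cmd}" port
    for port in "$@"; do
        # --permanent stores the rule in the saved configuration...
        "$cmd" --permanent --add-port="${port}/tcp" || return 1
    done
    # ...and --reload applies the saved configuration to the running firewall.
    "$cmd" --reload
}

# Preview:   FIREWALL_CMD=echo open_ports 80 8080
# Apply:     open_ports 80 8080
```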

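4.2 Reverse Proxy Example 2

Goal: use an nginx reverse proxy to forward requests to services on different ports according to the request path. nginx listens on port 9001:

- http://192.168.137.14:9001/edu/ is forwarded to 192.168.137.14:8001
- http://192.168.137.14:9001/vod/ is forwarded to 192.168.137.14:8002

4.2.1 Experiment

Step 1: Prepare the test data

Under /opt/, create two folders, edu and vod, with the following structure: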

```
[root@hadoop-104 opt]# pwd
/opt
# Directory structure
[root@hadoop-104 opt]# ll -R edu vod
edu:
total 4
-rw-r--r--. 1 root root 523 Apr 19 09:59 a.jsp
drwxr-xr-x. 2 root root 21 Apr 19 07:01 webapps

edu/webapps:
total 4
-rw-r--r--. 1 root root 346 Apr 19 07:04 web.xml

vod:
total 4
-rw-r--r--. 1 root root 525 Apr 19 09:59 b.jsp
drwxr-xr-x. 2 root root 21 Apr 19 07:01 webapps

vod/webapps:
total 4
-rw-r--r--. 1 root root 346 Apr 19 07:04 web.xml
[root@hadoop-104 opt]#
```

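The web.xml file is identical in both directories. (Tomcat normally reads a web application's deployment descriptor from WEB-INF/web.xml, so in this layout the file is most likely ignored; the JSP pages still work because Tomcat's default configuration already handles *.jsp.)

```xml
<?xml version="1.0" encoding="UTF-8"?>
<web-app xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xmlns="http://java.sun.com/xml/ns/javaee"
    xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd"
    id="WebApp_ID" version="2.5">
    <display-name>test</display-name>
</web-app>
```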

a.jsp in the edu directory:

```jsp
<%@ page language="java" contentType="text/html; charset=UTF-8" pageEncoding="UTF-8"%>
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
<title>Insert title here</title>
</head>
<body>
-----------welcome------------
<%="hello world!!! i am 8081"%>
<br>
<br>
<% System.out.println("=============docker tomcat self");%>
</body>
</html>
```

b.jsp in the vod directory:

```jsp
<%@ page language="java" contentType="text/html; charset=UTF-8" pageEncoding="UTF-8"%>
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
<title>Insert title here</title>
</head>
<body>
-----------welcome------------
<%="hello world!!! i am 8082. "%>
<br>
<br>
<% System.out.println("=============docker tomcat self");%>
</body>
</html>
```

Step 2: Prepare two Tomcat instances, one on port 8001 and one on port 8002

Create the containers, mounting the local /opt/edu and /opt/vod directories as volumes at /usr/local/tomcat/webapps/edu and /usr/local/tomcat/webapps/vod in the respective Tomcat containers:

```
[root@hadoop-104 opt]# docker run -d --name tomcat_8001 -v /opt/edu:/usr/local/tomcat/webapps/edu -p 8001:8080 tomcat
5b34385bec1e531ee94ab5b5346dffb596a0e4e655797380b4f7695afe27d5c3
[root@hadoop-104 opt]# docker run -d --name tomcat_8002 -v /opt/vod:/usr/local/tomcat/webapps/vod -p 8002:8080 tomcat
db9f6a62e7816a26ab9033889bcaf0d66aaada78d20657f7d4d9a67f6a471910
```

List the two containers that were created:

```
[root@hadoop-104 opt]# docker ps -l -n 2
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
db9f6a62e781 tomcat "catalina.sh run" 15 seconds ago Up 14 seconds 0.0.0.0:8002->8080/tcp tomcat_8002
5b34385bec1e tomcat "catalina.sh run" 20 seconds ago Up 19 seconds 0.0.0.0:8001->8080/tcp tomcat_8001
[root@hadoop-104 opt]#
```
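The two docker run commands differ only in container name, host directory, context path and host port. A small Bash wrapper (hypothetical, not part of the original setup) keeps them consistent; DOCKER=echo previews the command without creating anything.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: start a Tomcat container with one mounted web application.
# Arguments: container name, host directory, context path, host port.
run_tomcat() {
    local name="$1" host_dir="$2" context="$3" host_port="$4"
    "${DOCKER:-docker}" run -d --name "$name" \
        -v "${host_dir}:/usr/local/tomcat/webapps/${context}" \
        -p "${host_port}:8080" tomcat
}

# Equivalent to the two commands above:
# run_tomcat tomcat_8001 /opt/edu edu 8001
# run_tomcat tomcat_8002 /opt/vod vod 8002
```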

Open a shell in each of tomcat_8001 and tomcat_8002 and check the contents of /usr/local/tomcat/webapps. In tomcat_8001:

```
[root@hadoop-104 opt]# docker exec -it tomcat_8001 /bin/bash
root@5b34385bec1e:/usr/local/tomcat# cd webapps/
root@5b34385bec1e:/usr/local/tomcat/webapps# ls -l
total 8
drwxr-xr-x. 3 root root 4096 Jul 18 2019 ROOT
drwxr-xr-x. 14 root root 4096 Jul 18 2019 docs
drwxr-xr-x. 3 root root 34 Apr 19 13:59 edu
drwxr-xr-x. 6 root root 83 Jul 18 2019 examples
drwxr-xr-x. 5 root root 87 Jul 18 2019 host-manager
drwxr-xr-x. 5 root root 103 Jul 18 2019 manager
root@5b34385bec1e:/usr/local/tomcat/webapps# ls -l -R edu/
edu/:
total 4
-rw-r--r--. 1 root root 523 Apr 19 13:59 a.jsp
drwxr-xr-x. 2 root root 21 Apr 19 11:01 webapps

edu/webapps:
total 4
-rw-r--r--. 1 root root 346 Apr 19 11:04 web.xml
root@5b34385bec1e:/usr/local/tomcat/webapps#
```

Open a shell in tomcat_8002 and check its directory structure:

```
[root@hadoop-104 opt]# docker exec -it tomcat_8002 /bin/bash
root@db9f6a62e781:/usr/local/tomcat# cd webapps/
root@db9f6a62e781:/usr/local/tomcat/webapps# ls -l
total 8
drwxr-xr-x. 3 root root 4096 Jul 18 2019 ROOT
drwxr-xr-x. 14 root root 4096 Jul 18 2019 docs
drwxr-xr-x. 6 root root 83 Jul 18 2019 examples
drwxr-xr-x. 5 root root 87 Jul 18 2019 host-manager
drwxr-xr-x. 5 root root 103 Jul 18 2019 manager
drwxr-xr-x. 3 root root 34 Apr 19 13:59 vod
root@db9f6a62e781:/usr/local/tomcat/webapps# ls -l -R vod/
vod/:
total 4
-rw-r--r--. 1 root root 525 Apr 19 13:59 b.jsp
drwxr-xr-x. 2 root root 21 Apr 19 11:01 webapps

vod/webapps:
total 4
-rw-r--r--. 1 root root 346 Apr 19 11:04 web.xml
root@db9f6a62e781:/usr/local/tomcat/webapps#
```

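Step 3: Edit the nginx configuration file

Add the following server {} block inside the http block:

```nginx
server {
    listen 9001;
    server_name 192.168.137.14;

    # Anything not matched by the regex locations below goes to the Tomcat on 8080
    location / {
        proxy_pass http://192.168.137.14:8080;
        root html;
        index index.html index.htm;
    }

    # URIs containing /edu/ go to tomcat_8001
    location ~ /edu/ {
        proxy_pass http://192.168.137.14:8001;
        index index.html index.htm;
    }

    # URIs containing /vod/ go to tomcat_8002
    location ~ /vod/ {
        proxy_pass http://192.168.137.14:8002;
        index index.html index.htm;
    }
}
```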

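The location directive

This directive matches the request URI. Its syntax uses the following modifiers:

- = : used before a URI that contains no regular expression; the request string must match the URI exactly. If it matches, nginx stops searching and handles the request immediately.
- ~ : the URI is a regular expression, matched case-sensitively.
- ~* : the URI is a regular expression, matched case-insensitively.
- ^~ : used before a URI that contains no regular expression; once nginx finds the location whose URI best (longest) matches the request string and that location carries ^~, it handles the request with it immediately and does not check the regular-expression locations.

Note: if the URI contains a regular expression, it must be marked with ~ or ~*.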
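To make the matching order concrete for the 9001 server configured in Step 3, here is a simplified Bash model (the function route_uri is hypothetical and only covers that one configuration). nginx first finds the longest matching prefix location (here /), then tries the regex locations in configuration order and uses the first one that matches; only if none matches does it fall back to the prefix location. Because ~ /edu/ is an unanchored regex, it also matches a URI such as /static/edu/x.png.

```bash
#!/usr/bin/env bash
# Hypothetical model of location selection for the 9001 server block
# (one prefix location "/" plus two regex locations, no "=" or "^~").
route_uri() {
    local uri="$1"
    # Regex locations are tried in config order; [[ =~ ]] is an unanchored,
    # case-sensitive ERE match, like "location ~".
    if [[ $uri =~ /edu/ ]]; then
        echo "192.168.137.14:8001"
    elif [[ $uri =~ /vod/ ]]; then
        echo "192.168.137.14:8002"
    else
        # No regex matched: use the prefix location "/", which matches every URI.
        echo "192.168.137.14:8080"
    fi
}
```

If only URIs that start with /edu/ should reach port 8001, a prefix location (location /edu/) or an anchored regex (location ~ ^/edu/) would be stricter.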

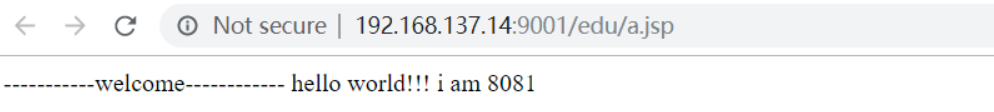

Step 4: Test

Visit: http://192.168.137.14:9001/edu/a.jsp

Visit: http://192.168.137.14:9001/vod/b.jsp

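Chapter 5: nginx Configuration Example - Load Balancing

Goal: configure load balancing so that requests to http://192.168.137.14/edu/a.jsp are spread across the Tomcat instances on ports 8001 and 8002.

5.1 Experiment

1) First, prepare two Tomcat instances running at the same time

Create the /opt/test directory, then create the files /opt/test/a.jsp and /opt/test/webapps/web.xml.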

/opt/test/a.jsp:

```jsp
<%@ page language="java" contentType="text/html; charset=UTF-8" pageEncoding="UTF-8"%>
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
<title>Insert title here</title>
</head>
<body>
8080 !!!
</body>
</html>
```

/opt/test/webapps/web.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<web-app xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xmlns="http://java.sun.com/xml/ns/javaee"
    xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd"
    id="WebApp_ID" version="2.5">
    <display-name>test</display-name>
</web-app>
```

Create the tomcat_8001 and tomcat_8002 containers, mounting the /opt/test volume at /usr/local/tomcat/webapps/test. Container names must be unique, so if the tomcat_8001 and tomcat_8002 containers from Chapter 4 still exist, remove them first (for example with docker rm -f tomcat_8001 tomcat_8002).

```
[root@hadoop-104 test]# docker run -d --name tomcat_8001 -v /opt/test:/usr/local/tomcat/webapps/test -p 8001:8080 tomcat
b7a0c6d3e9ca241a3d9b0dee972ab7837a0aefbaa0226219ae1c03eecb08beb4
[root@hadoop-104 test]# docker run -d --name tomcat_8002 -v /opt/test:/usr/local/tomcat/webapps/test -p 8002:8080 tomcat
4ecaec8c00da9b3cf207b7cd1e5c10ca711523476c132924bebe123bf0c767bc
```

Open a shell in tomcat_8001, copy test to edu, and change 8080 in a.jsp to 8081:

```
[root@hadoop-104 test]# docker exec -it tomcat_8001 /bin/bash
root@b7a0c6d3e9ca:/usr/local/tomcat# cd webapps/
root@b7a0c6d3e9ca:/usr/local/tomcat/webapps# ls
ROOT docs examples host-manager manager test
root@b7a0c6d3e9ca:/usr/local/tomcat/webapps# cp -rfp test edu
# Replace 8080 with 8081
root@b7a0c6d3e9ca:/usr/local/tomcat/webapps# sed -i "s/8080/8081/g" edu/a.jsp
root@b7a0c6d3e9ca:/usr/local/tomcat/webapps# cat edu/a.jsp
<%@ page language="java" contentType="text/html; charset=UTF-8" pageEncoding="UTF-8"%>
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
<title>Insert title here</title>
</head>
<body>
8081 !!!
</body>
</html>
root@b7a0c6d3e9ca:/usr/local/tomcat/webapps#
```

Open a shell in tomcat_8002, copy test to edu, and change 8080 in a.jsp to 8082:

```
[root@hadoop-104 ~]# docker exec -it tomcat_8002 /bin/bash
root@4ecaec8c00da:/usr/local/tomcat# cd webapps/
root@4ecaec8c00da:/usr/local/tomcat/webapps# ls
ROOT docs examples host-manager manager test
root@4ecaec8c00da:/usr/local/tomcat/webapps# cp -rfp test edu
# Replace 8080 with 8082
root@4ecaec8c00da:/usr/local/tomcat/webapps# sed -i "s/8080/8082/g" edu/a.jsp
root@4ecaec8c00da:/usr/local/tomcat/webapps# cat edu/a.jsp
<%@ page language="java" contentType="text/html; charset=UTF-8" pageEncoding="UTF-8"%>
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
<title>Insert title here</title>
</head>
<body>
8082 !!!
</body>
</html>
root@4ecaec8c00da:/usr/local/tomcat/webapps#
```

2) Configure nginx.conf

```nginx
# Backend pool: requests are distributed across both Tomcat containers
upstream myserver {
    server 192.168.137.14:8001;
    server 192.168.137.14:8002;
}

server {
    listen 80;
    server_name 192.168.137.14;

    location / {
        proxy_pass http://myserver;
        root html;
        index index.html index.htm;
    }
}
```

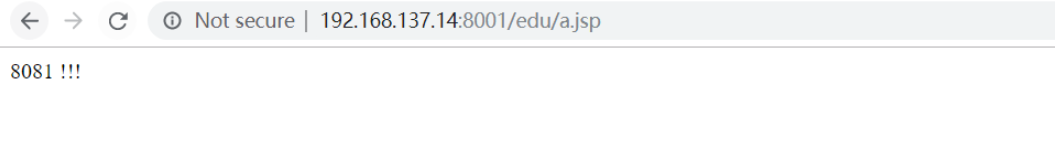

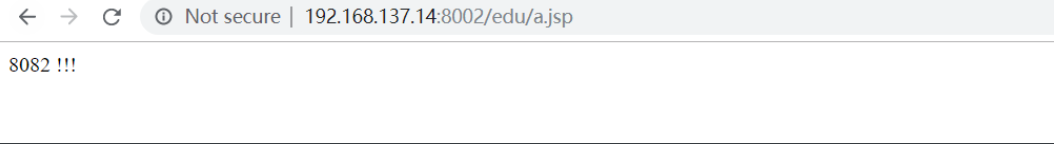

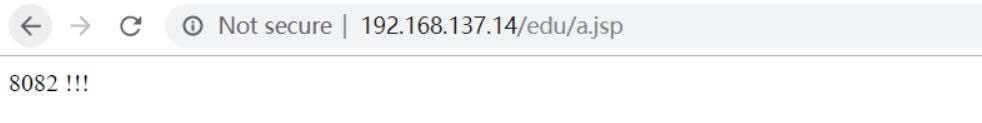

3) Test

Test: http://192.168.137.14:8001/edu/a.jsp

Test: http://192.168.137.14:8002/edu/a.jsp

Test: http://192.168.137.14/edu/a.jsp. As you keep refreshing, the content should change, alternating between 8081 and 8082.

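With the explosive growth of information on the Internet, load balancing is no longer an unfamiliar topic. As the name suggests, load balancing spreads the load across different service units, keeping the service available while keeping responses fast enough to give users a good experience. Rapidly growing traffic and data volumes have given rise to all kinds of load-balancing products. Many dedicated hardware load balancers offer good features but are expensive, which has made load-balancing software very popular; nginx is one example. On Linux, Nginx, LVS, HAProxy and others can provide load balancing, and Nginx offers several distribution methods (strategies):

- Round robin (default)

  Requests are assigned to the backend servers one by one in turn. If a backend server goes down, it is removed from the rotation automatically.

- weight

  weight is the server's weight and defaults to 1; the higher the weight, the more clients are assigned to that server. It sets the round-robin probability: weight is proportional to the share of requests, which is useful when backend servers have uneven performance. For example:

  ```nginx
  upstream server_pool {
      server 192.168.5.21 weight=10;
      server 192.168.5.22 weight=10;
  }
  ```

- ip_hash

  Each request is assigned according to a hash of the client IP, so each visitor consistently reaches the same backend server, which can solve session problems. For example:

  ```nginx
  upstream server_pool {
      ip_hash;
      server 192.168.5.21:80;
      server 192.168.5.22:80;
  }
  ```

- fair (third party)

  Requests are assigned according to backend response time, with faster servers preferred. This strategy comes from a third-party module and is not built into nginx. For example:

  ```nginx
  upstream server_pool {
      server 192.168.5.21:80;
      server 192.168.5.22:80;
      fair;
  }
  ```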
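The built-in weight and ip_hash strategies can be modeled in a few lines of Bash. nginx's weighted round robin uses a "smooth" scheme that interleaves servers instead of sending a burst of requests to the heaviest one, and nginx's documentation states that ip_hash uses the first three octets of an IPv4 address as the key. The sketch below follows those ideas in simplified form (no failed servers, and ip_hash ignores weights); the function names are hypothetical, and although the hash constants mirror nginx's ip_hash module, treat the exact server chosen as illustrative.

```bash
#!/usr/bin/env bash
# Smooth weighted round-robin: on every request each server's current weight grows by
# its configured weight, the largest current weight wins (ties go to the first server
# listed), and the winner's current weight is reduced by the total of all weights.
# Arguments: number of requests, then servers as "name:weight".
swrr() {
    local n="$1"; shift
    local -a names=() weights=() current=()
    local spec i r best total=0
    for spec in "$@"; do
        names+=("${spec%%:*}")
        weights+=("${spec##*:}")
        current+=(0)
        total=$(( total + ${spec##*:} ))
    done
    for (( r = 0; r < n; r++ )); do
        for i in "${!names[@]}"; do
            current[i]=$(( current[i] + weights[i] ))
        done
        best=0
        for i in "${!names[@]}"; do
            if (( current[i] > current[best] )); then best=$i; fi
        done
        current[best]=$(( current[best] - total ))
        echo "${names[best]}"
    done
}

# ip_hash sketch: only the first three octets are hashed, so every client
# in the same /24 network maps to the same server. Equal weights assumed.
ip_hash_pick() {
    local ip="$1"; shift
    local -a octets
    local -a servers=("$@")
    local hash=89 i
    IFS=. read -r -a octets <<< "$ip"
    for i in 0 1 2; do
        hash=$(( (hash * 113 + octets[i]) % 6271 ))
    done
    echo "${servers[hash % ${#servers[@]}]}"
}
```

For example, swrr 7 a:5 b:1 c:1 yields a, a, b, a, c, a, a: server a still gets 5 of every 7 requests, but b and c are spread between them. With the equal weights of the weight example above (10 and 10), the two servers simply alternate.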

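Chapter 6: nginx Configuration Example - Separating Static and Dynamic Content

Put simply, nginx static/dynamic separation means handling dynamic and static requests separately. It should not be understood as merely storing dynamic pages and static pages in physically separate places; strictly speaking, it separates dynamic requests from static requests, so that nginx serves static pages and Tomcat handles dynamic pages. From an implementation standpoint there are roughly two approaches:

- Move the static files to a separate domain name on separate servers; this is currently the mainstream, recommended approach.
- Publish dynamic and static files together and let nginx split the requests.

Different requests are forwarded by matching different file suffixes (or path prefixes) in location blocks. The expires directive sets how long browsers may cache a resource, reducing requests and traffic between the browser and the server. Expires assigns an expiration time to a resource: until it expires, the browser can use its cached copy without checking with the server, so no extra traffic is generated. This suits resources that rarely change (for frequently updated files, caching with Expires is not recommended). Here it is set to 3d, so for 3 days the browser serves this URL from its cache. After that, the browser sends a conditional request that compares the file's last-modified time with the server's copy: if the file has not changed, the server returns status 304 and the cached copy is reused; if it has changed, the file is downloaded again with status 200.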
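With expires 3d, nginx sends an Expires header together with a Cache-Control: max-age value expressed in seconds. The hypothetical helper below converts the common duration suffixes (s, m, h, d, w, or a bare number of seconds) into that value, which makes it easy to check what max-age a setting should produce. It is a sketch and does not cover every unit nginx accepts (for example ms, M and y).

```bash
#!/usr/bin/env bash
# Hypothetical helper: nginx-style duration (3d, 12h, 30m, 90s, 90) -> seconds.
expires_to_max_age() {
    local value="$1" num unit
    num="${value%[smhdw]}"          # strip a trailing unit letter, if any
    unit="${value##*[0-9]}"         # keep only what follows the last digit
    case "$unit" in
        s|"") echo "$num" ;;
        m) echo $(( num * 60 )) ;;
        h) echo $(( num * 3600 )) ;;
        d) echo $(( num * 86400 )) ;;
        w) echo $(( num * 604800 )) ;;
        *) return 1 ;;              # unit not handled by this sketch
    esac
}

# expires_to_max_age 3d prints 259200, i.e. "Cache-Control: max-age=259200".
```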

6.1 Experiment

1) Prepare the project resources

Under /opt/edu, create a data directory containing two folders, www and images, to hold the static resources:

```
[root@hadoop-104 edu]# mkdir -p data/{images,www}
```

Create /opt/edu/data/www/index.html:

```html
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
<title>Static/Dynamic Separation</title>
</head>
<body>
<h2>Testing static/dynamic separation</h2>
</body>
</html>
```

Put an image into the /opt/edu/data/images directory (timg.jpg in this example).

The complete /opt/edu directory structure is:

```
[root@hadoop-104 edu]# find ./
./
./webapps
./webapps/web.xml
./a.jsp
./data
./data/images
./data/images/timg.jpg
./data/www
./data/www/index.html
[root@hadoop-104 edu]#
```

Create the Docker container tomcat_8001:

```
[root@hadoop-104 edu]# docker run -d --name tomcat_8001 -v /opt/edu:/usr/local/tomcat/webapps/edu -p 8001:8080 tomcat
1eaba92b56f0987f8c79cc84043b87a6dc2c7ae22607c46c169c1621cac6a9c2
```

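2) Configure nginx

In the nginx installation directory, open the conf/nginx.conf configuration file and configure it as follows:

```nginx
upstream myserver {
    server 192.168.137.14:8001;
    server 192.168.137.14:8002;
}

server {
    listen 80;
    server_name 192.168.137.14;

    # Dynamic requests (e.g. /edu/a.jsp) go to the Tomcat pool
    location / {
        proxy_pass http://myserver;
        root html;
        index index.html index.htm;
    }

    # Static pages: /www/... is served from /opt/edu/data/www/...
    location /www/ {
        root /opt/edu/data;
        index index.html index.htm;
    }

    # Static images: /images/... is served from /opt/edu/data/images/...
    location /images/ {
        root /opt/edu/data;
        autoindex on;    # list the directory contents
        expires 3d;      # let browsers cache images for 3 days, as described above
    }
}
```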
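The /www/ and /images/ locations above both use root, which appends the whole request URI (location prefix included) to the directory; alias would instead replace the matched prefix with the directory. The hypothetical Bash helpers below compute the file path each directive resolves to, which is handy for checking that a file sits where nginx will look for it.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: file path nginx opens for a request URI.
# root:  directory + full URI (the location prefix is kept).
root_path() {
    local root="${1%/}" uri="$2"
    echo "${root}${uri}"
}

# alias: the matched location prefix is replaced by the alias directory.
alias_path() {
    local location="$1" alias_dir="${2%/}" uri="$3"
    echo "${alias_dir}/${uri#"$location"}"
}
```

For this chapter's layout, root /opt/edu/data under location /images/ and alias /opt/edu/data/images/ point at the same file, /opt/edu/data/images/timg.jpg.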

3) Test

Static request: http://192.168.137.14/images/

Static request: http://192.168.137.14/www/index.html

Dynamic request: http://192.168.137.14/edu/a.jsp

Chapter 7: nginx Internals and Tuning Parameters

1. master and worker

Listing the nginx processes shows that there are two kinds of process: master and worker.

```
[root@master ~]# ps -ef|grep nginx
root 9494 1 0 03:20 ? 00:00:00 nginx: master process nginx
nginx 9495 9494 0 03:20 ? 00:00:00 nginx: worker process
[root@master ~]#
```

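The following is the master/worker architecture model:

2. How workers work

3. Advantages of one master with multiple workers

(1) nginx -s reload can be used for hot deployment: the configuration is reloaded without stopping the service.

(2) Each worker is an independent process. If one worker runs into a problem, the other workers are unaffected and keep competing for and handling requests, so the service is not interrupted.

4. How many workers to configure

Setting the number of workers equal to the number of CPUs (cores) on the server is the most suitable choice.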
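A small sketch, assuming a Linux host where nproc (GNU coreutils) is available, that prints a worker_processes line matching the CPU count; the function name is hypothetical. nginx can also do this itself with worker_processes auto;.

```bash
#!/usr/bin/env bash
# Hypothetical helper: worker_processes directive sized to the available CPUs.
worker_processes_line() {
    local cpus
    cpus="$(nproc)"     # number of processing units available to this process
    printf 'worker_processes %s;\n' "$cpus"
}
```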

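5. Connections: worker_connections

- Question 1: how many of a worker's connections does sending one request occupy?

  Answer: 2 or 4. By the usual rule of thumb, a request for a static resource uses 2 (the client's request and nginx's response), while a reverse-proxied request uses 4 (two more for the exchange with the backend server).

- Question 2: nginx has one master and four workers, and each worker supports at most 1024 connections. What is the maximum number of concurrent requests?

  For ordinary static access: (worker_connections * worker_processes) / 2 = (1024 * 4) / 2 = 2048.

  When nginx acts as an HTTP reverse proxy: (worker_connections * worker_processes) / 4 = (1024 * 4) / 4 = 1024.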
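The arithmetic above as a Bash function. The name max_concurrency is hypothetical, and the divisors 2 and 4 are this chapter's rule of thumb rather than values nginx enforces.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rough maximum concurrency estimate.
# per_request: 2 for static files, 4 for reverse proxying (rule of thumb).
max_concurrency() {
    local worker_connections="$1" worker_processes="$2" per_request="$3"
    echo $(( worker_connections * worker_processes / per_request ))
}

# max_concurrency 1024 4 2   -> static files
# max_concurrency 1024 4 4   -> reverse proxy
```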

Chapter 8: Building a High-Availability Cluster with nginx

8.1 Keepalived + Nginx High-Availability Cluster (Master/Backup Mode)

1. IP address plan

| host | service | role |
|---|---|---|
| 192.168.137.14 | docker tomcat | tomcat |
| 192.168.137.15 | nginx+keepalived | master |
| 192.168.137.16 | nginx+keepalived | backup |
| 192.168.137.50 | - | virtual IP (VIP) |

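2. Install nginx and keepalived

Install nginx and keepalived on both 192.168.137.15 and 192.168.137.16.

Install nginx (on CentOS 7 the nginx package comes from the EPEL repository, so run yum -y install epel-release first if yum cannot find it):

```
yum -y install nginx
```

Install keepalived:

```
yum -y install keepalived
```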

3. Edit the configuration

/etc/keepalived/keepalived.conf on 192.168.137.15:

```
global_defs {
    notification_email {
        [email protected]
        [email protected]
        [email protected]
    }
    notification_email_from [email protected]
    smtp_server 192.168.137.15
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

vrrp_script chk_http_port {
    script "/usr/local/src/nginx_check.sh"
    interval 2    # interval between runs of the check script (seconds)
    weight 2
}

vrrp_instance VI_1 {
    state MASTER    # on the backup server, change MASTER to BACKUP
    interface eth0
    virtual_router_id 51    # must be the same on master and backup
    priority 100    # master and backup use different priorities: higher on the master, lower on the backup
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.137.50
    }
    # attach the check script; without this block chk_http_port is never run
    track_script {
        chk_http_port
    }
}
```

/etc/keepalived/keepalived.conf on 192.168.137.16:

```
global_defs {
    notification_email {
        [email protected]
        [email protected]
        [email protected]
    }
    notification_email_from [email protected]
    smtp_server 192.168.137.15
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

vrrp_script chk_http_port {
    script "/usr/local/src/nginx_check.sh"
    interval 2    # interval between runs of the check script (seconds)
    weight 2
}

vrrp_instance VI_1 {
    state BACKUP    # this is the backup server, so MASTER is changed to BACKUP
    interface eth0
    virtual_router_id 51    # must be the same on master and backup
    priority 90    # master and backup use different priorities: higher on the master, lower on the backup
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.137.50
    }
    # attach the check script; without this block chk_http_port is never run
    track_script {
        chk_http_port
    }
}
```
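The weight 2 in vrrp_script only matters once the script is attached to the instance with a track_script block. keepalived then adds a positive weight to the priority while the script succeeds (exit status 0), and applies a negative weight while it fails. The sketch below models that rule; effective_priority is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical model of keepalived's priority adjustment by a tracked script.
# script_ok: 1 if the script exits 0, otherwise 0.
effective_priority() {
    local priority="$1" weight="$2" script_ok="$3"
    if (( weight > 0 && script_ok )); then
        echo $(( priority + weight ))      # bonus while the check passes
    elif (( weight < 0 && ! script_ok )); then
        echo $(( priority + weight ))      # penalty while the check fails
    else
        echo "$priority"
    fi
}
```

With these settings the master sits at 102 (or 100 if its check fails) and the backup at 92 (or 90), so a failing check alone never drops the master below the backup. Failover in this setup therefore relies on nginx_check.sh running killall keepalived, which stops the master's VRRP advertisements entirely.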

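On both 192.168.137.15 and 192.168.137.16, create the file nginx_check.sh in the /usr/local/src directory:

```bash
#!/bin/bash
# Health check run by keepalived (vrrp_script chk_http_port) every 2 seconds.
# Count the running nginx processes.
A=$(ps -C nginx --no-headers | wc -l)
if [ "${A}" -eq 0 ]; then
    # nginx is down: try to restart it.
    systemctl start nginx
    sleep 2
    var=$(ps -C nginx --no-headers | wc -l)
    # If nginx still is not running, stop keepalived so the VIP moves to the backup.
    if [ "${var}" -eq 0 ]; then
        killall keepalived
    fi
fi
```

Make the script executable on both hosts (chmod +x /usr/local/src/nginx_check.sh). killall is provided by the psmisc package, which may need to be installed on a minimal system.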

On 192.168.137.15, edit /usr/share/nginx/html/index.html and add the IP address marker "192.168.137.15":

```html
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx! 192.168.137.15</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

On 192.168.137.16, edit /usr/share/nginx/html/index.html and add the IP address marker "192.168.137.16":

```html
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx! 192.168.137.16</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

4. Create the Tomcat container

Following the experiment in Chapter 5, create the Tomcat container on 192.168.137.14:

```
[root@tomcat ~]# docker run -d --name tomcat_8001 -v /opt/edu:/usr/local/tomcat/webapps/edu -p 8001:8080 tomcat
b80ac12ae19c3784ffc9f08c698b73ac9bcd3bc60e1b7f98dd7c6e9f9212049c
[root@tomcat ~]#
```

On both 192.168.137.15 and 192.168.137.16, add the following to the http block of /etc/nginx/nginx.conf:

```nginx
upstream myserver {
    server 192.168.137.14:8001;
    server 192.168.137.14:8002;
}

server {
    listen 9001;
    server_name 192.168.137.15;    # on 192.168.137.16, change this to 192.168.137.16

    location / {
        proxy_pass http://myserver;
        root html;
        index index.html index.htm;
    }
}
```

5. Start nginx and keepalived on both servers

```
# Start nginx
nginx
# Start keepalived
systemctl start keepalived.service
```

6. Final test

(1) Enter the virtual IP address 192.168.137.50 in the browser's address bar. On the master, ip addr shows that the VIP is bound:

```
[root@master ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:ec:8a:c5 brd ff:ff:ff:ff:ff:ff
    inet 192.168.137.15/24 brd 192.168.137.255 scope global eth0
       valid_lft forever preferred_lft forever
# The virtual IP is now bound on the master
    inet 192.168.137.50/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:feec:8ac5/64 scope link
       valid_lft forever preferred_lft forever
[root@master ~]#
```

(2) Stop nginx and keepalived on the master (192.168.137.15), then enter 192.168.137.50 again:

```
[root@master ~]# nginx -s stop
[root@master ~]# systemctl stop keepalived.service
[root@master ~]#
```

Check whether the VIP is now bound on the backup:

```
[root@backup html]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:65:e3:5e brd ff:ff:ff:ff:ff:ff
    inet 192.168.137.16/24 brd 192.168.137.255 scope global eth0
       valid_lft forever preferred_lft forever
# After the master was stopped, the virtual IP moved to the backup
    inet 192.168.137.50/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe65:e35e/64 scope link
       valid_lft forever preferred_lft forever
[root@backup html]#
```
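Rather than reading ip addr output by eye, a check like the one below reports whether a node currently holds the VIP. It is a sketch with a hypothetical name that reads ip addr output on standard input; matching "inet <address>/" as a fixed string keeps 192.168.137.5 from matching 192.168.137.50.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if "ip addr" output on stdin contains the given IPv4 address.
has_vip() {
    local vip="$1"
    grep -Fq "inet ${vip}/"
}

# Usage on a node:
# ip -4 addr show eth0 | has_vip 192.168.137.50 && echo "this node holds the VIP"
```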

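Access http://192.168.137.50/ again; the page should now show 192.168.137.16.

(3) Start nginx and keepalived on the master (192.168.137.15) again.

Access http://192.168.137.50/ again and the page shows 192.168.137.15 once more. Because the master was configured with a higher priority than the backup, it preempts the VIP again after it recovers.

(4) Visit: http://192.168.137.50:9001/

(5) Visit: http://192.168.137.50:9001/edu/a.jsp. Even after the master node goes down, this page remains reachable.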

8.2 Keepalived + Nginx High-Availability Cluster (Dual-Master Mode)