VGGNet是牛津大学计算机视觉组和Google DeepMind 公司的研究员一起研发的深度卷积神经网络。VGGNet探索了卷积神经网络的深度与性能之间的关系,通过反复堆叠3x3的小型卷积核和2x2的最大池化层,VGGNet成功地构筑了16~19层深的卷积神经网络,并取得了ILSVRC2014比赛分类项目的第二名和定位项目的第一名。

其网络结构和思路主要展示在论文

Very Deep Convolutional Networks for Large-Scale Image Recognition

一、VGGNet网络结构

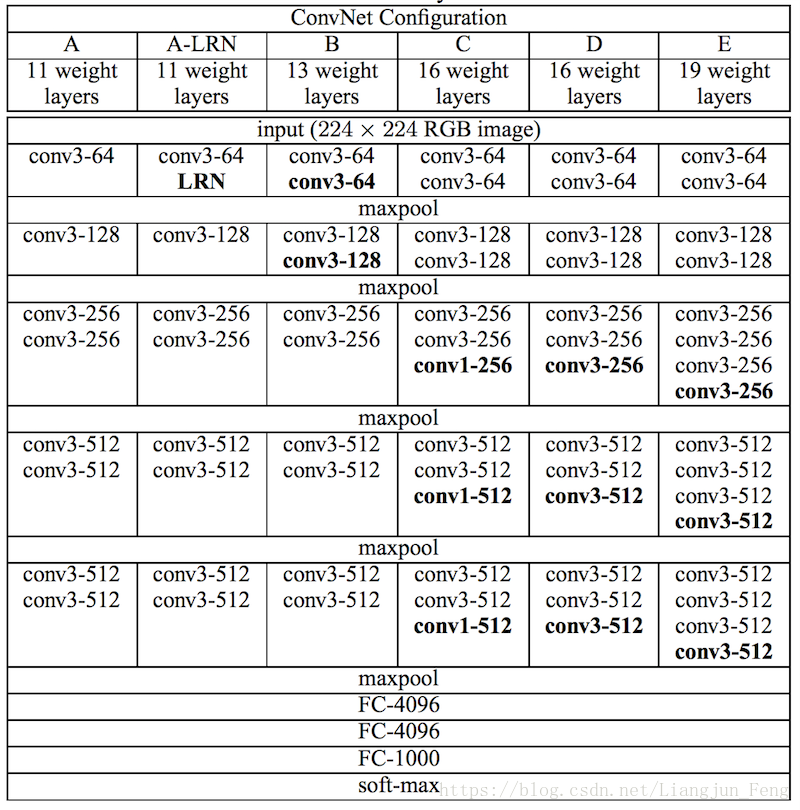

VGGNet论文中全部使用了3x3的卷积核和2x2的池化核,通过不断加深网络结构来提升性能,下图为VGGNet各级别的网络结构图

从A到E每一级网络逐渐变深,但是网络的参数量并没有增长很多,这是因为参数量主要都消耗在最后3个全连接层。前面的卷积部分虽然很深,但是消耗的参数量并不大,不过训练比较耗时的部分依然是卷积,因为其计算量比较大。这其中的D, E也就是我们常说的VGGNet-16和VGGNet-19,C很有意思,相比于B多了几个1x1的卷积层,1x1卷积的主要意义在于线性变换,而输入的通道数和输出通道数不变,没有发生降维

VGGNet 拥有5段卷积,每一段内有2~3个卷积层,每一段内有2~3个卷积层,同时每段尾部会连接一个最大池化层用来缩小图片尺寸。每段内的卷积核数量一样,越靠后的段的卷积核数量越多:64-128-256-512-512。其中经常出现多个完全一样的3x3的卷积层堆叠在一起的情况,这是一个非常有用的设计,两个3x3的卷积层串联相当于1个5x5的卷积层,即一个像素会跟周围5x5的像素产生关联,可以说感受野大小为5x5。而三个3x3的卷积层串联的效果则相当于1个7x7的卷积层。除此之外,3个串联的3x3的卷积层拥有比一个7x7的卷积层更多的非线性变化,使得CNN对特征的学习能力更强

二、VGGNet中用到的技巧

VGGNet在训练时有一个小的技巧,先训练级别A的简单网络,再复用A网络的权重来初始化后面的几个复杂模型,这样训练收敛速度更快。在预测时,VGG采用Multi-Scale的方法,将图像scale到一个尺寸Q,并将图片输入到卷积网络计算。然后在最后一个卷积层使用划窗的方式进行分类预测,将不同窗口的分类结果平均,再将不同尺寸Q的结果平均后得到最后结果,这样可以提高图片的利用率并提升预测准确率。同时在训练中,VGGNet还使用了Multi-Scale的方法做数据增强,将原始图像缩放到不同尺寸S,然后再随机裁剪244x244的图片,这样能增加很多数据量,对于防止过拟合有很不错的效果

作者在对比各级网络时总结出以下几个观点

(1)LRN层作用不大

(2)越深的网络效果越好

(3)1x1的卷积也是有效果的,但是没有3x3的卷积效果好,大一些的卷积核可以学习更大的空间特征

三、VGGNet的tensorflow实现

用到的数据集比较大,可以在评论留邮箱,会发送给你

import tensorflow as tf

import os

import numpy as np

from PIL import Image

import pandas as pd

from sklearn import preprocessing

import cv2

from sklearn.model_selection import train_test_split

def load_Img(imgDir):

imgs = os.listdir(imgDir)

imgs = np.ravel(pd.DataFrame(imgs).sort_values(by=0).values)

imgNum = len(imgs)

data = np.empty((imgNum,image_size,image_size,3),dtype="float32")

for i in range (imgNum):

img = Image.open(imgDir+"/"+imgs[i])

arr = np.asarray(img,dtype="float32")

arr = cv2.resize(arr,(image_size,image_size))

if len(arr.shape) == 2:

temp = np.empty((image_size,image_size,3))

temp[:,:,0] = arr

temp[:,:,1] = arr

temp[:,:,2] = arr

arr = temp

data[i,:,:,:] = arr

return data

def make_label(labelFile):

label_list = pd.read_csv(labelFile,sep = '\t',header = None)

label_list = label_list.sort_values(by=0)

le = preprocessing.LabelEncoder()

for item in [1]:

label_list[item] = le.fit_transform(label_list[item])

label = label_list[1].values

onehot = preprocessing.OneHotEncoder(sparse = False)

label_onehot = onehot.fit_transform(np.mat(label).T)

return label_onehot

def conv_op(input_op,name,kh,kw,n_out,dh,dw,p):

n_in = input_op.get_shape()[-1].value

with tf.name_scope(name) as scope:

kernel = tf.get_variable(scope+'W',

shape=[kh,kw,n_in,n_out],

dtype=tf.float32,

initializer=tf.contrib.layers.xavier_initializer_conv2d())

# kernel = tf.Variable(tf.truncated_normal([kh,kw,n_in,n_out],

# dtype=tf.float32,

# stddev=0.01),

# name=scope+'W')

conv = tf.nn.conv2d(input_op,kernel,(1,dh,dw,1),padding='SAME')

bias_init_val = tf.constant(0,shape=[n_out],dtype=tf.float32)

biases = tf.Variable(bias_init_val,trainable=True,name='b')

z = tf.nn.bias_add(conv,biases)

activation = tf.nn.relu(z,name=scope)

p += [kernel,biases]

return activation

def fc_op(input_op,name,n_out,p):

n_in = input_op.get_shape()[-1].value

with tf.name_scope(name) as scope:

# kernel = tf.get_variable(scope+'w',

# shape = [n_in,n_out],

# dtype = tf.float32,

# initializer = tf.contrib.layers.xavier_initializer())

kernel = tf.Variable(tf.truncated_normal([n_in,n_out],

dtype=tf.float32,

stddev=0.01),

name=scope+'W')

biases = tf.Variable(tf.constant(0.1,shape=[n_out],dtype=tf.float32),name='b')

activation = tf.nn.sigmoid(tf.matmul(input_op,kernel)+biases,name = 'ac')

# activation = tf.nn.relu_layer(input_op,kernel,biases,name=scope)

p += [kernel,biases]

return activation

def mpool_op(input_op,name,kh,kw,dh,dw):

return tf.nn.max_pool(input_op,

ksize=[1,kh,kw,1],

strides=[1,dh,dw,1],

padding='SAME',

name=name)

def inference_op(input_op,y,keep_prob):

p = []

conv1_1 = conv_op(input_op,name='conv1_1',kh=3,kw=3,n_out=64,dh=1,dw=1,p=p)

conv1_2 = conv_op(conv1_1,name='conv1_2',kh=3,kw=3,n_out=64,dh=1,dw=1,p=p)

pool1 = mpool_op(conv1_2,name='pool1',kh=2,kw=2,dw=2,dh=2)

conv2_1 = conv_op(pool1,name='conv2_1',kh=3,kw=3,n_out=128,dh=1,dw=1,p=p)

conv2_2 = conv_op(conv2_1,name='conv2_2',kh=3,kw=3,n_out=128,dh=1,dw=1,p=p)

pool2 = mpool_op(conv2_2,name='pool2',kh=2,kw=2,dw=2,dh=2)

conv3_1 = conv_op(pool2,name='conv3_1',kh=3,kw=3,n_out=256,dh=1,dw=1,p=p)

conv3_2 = conv_op(conv3_1,name='conv3_2',kh=3,kw=3,n_out=256,dh=1,dw=1,p=p)

conv3_3 = conv_op(conv3_2,name='conv3_3',kh=3,kw=3,n_out=256,dh=1,dw=1,p=p)

pool3 = mpool_op(conv3_3,name='pool3',kh=2,kw=2,dh=2,dw=2)

conv4_1 = conv_op(pool3,name='conv4_1',kh=3,kw=3,n_out=512,dh=1,dw=1,p=p)

conv4_2 = conv_op(conv4_1,name='conv4_2',kh=3,kw=3,n_out=512,dh=1,dw=1,p=p)

conv4_3 = conv_op(conv4_2,name='conv4_3',kh=3,kw=3,n_out=512,dh=1,dw=1,p=p)

pool4 = mpool_op(conv4_3,name='pool4',kh=2,kw=2,dh=2,dw=2)

conv5_1 = conv_op(pool4,name='conv5_1',kh=3,kw=3,n_out=512,dh=1,dw=1,p=p)

conv5_2 = conv_op(conv5_1,name='conv5_2',kh=3,kw=3,n_out=512,dh=1,dw=1,p=p)

conv5_3 = conv_op(conv5_2,name='conv5_3',kh=3,kw=3,n_out=512,dh=1,dw=1,p=p)

pool5 = mpool_op(conv5_3,name='pool5',kh=2,kw=2,dh=2,dw=2)

shp = pool5.get_shape()

flattened_shape = shp[1].value*shp[2].value*shp[3].value

resh1 = tf.reshape(pool5,[-1,flattened_shape],name='resh1')

fc6 = fc_op(resh1,name='fc6',n_out=4096,p=p)

fc6_drop = tf.nn.dropout(fc6,keep_prob,name='fc6_drop')

fc7 = fc_op(fc6_drop,name='fc7',n_out=4096,p=p)

fc7_drop = tf.nn.dropout(fc7,keep_prob,name='fc7_drop')

fc8 = fc_op(fc7_drop,name='fc8',n_out=190,p=p)

y_conv = tf.nn.softmax(fc8)

cross_entropy = tf.reduce_mean(-tf.reduce_sum(y * tf.log(tf.clip_by_value(y_conv, 1e-10, 1.0)),reduction_indices=[1]))

train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

correct_prediction = tf.equal(tf.arg_max(y_conv,1),tf.arg_max(y,1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction,tf.float32))

return accuracy,train_step,cross_entropy,fc8,p

def run_benchmark():

imgDir = '/Users/zhuxiaoxiansheng/Desktop/DatasetA_train_20180813/train'

labelFile = '/Users/zhuxiaoxiansheng/Desktop/DatasetA_train_20180813/train.txt'

data = load_Img(imgDir)

data = data/255.0

label = make_label(labelFile)

traindata,testdata,trainlabel,testlabel = train_test_split(data,label,test_size=100,random_state = 2018)

print(traindata.shape,testdata.shape)

with tf.Graph().as_default():

os.environ["CUDA_VISIBLE_DEVICES"] = '0' #指定第一块GPU可用

config = tf.ConfigProto()

config.gpu_options.per_process_gpu_memory_fraction = 1.0

my_graph = tf.Graph()

sess = tf.InteractiveSession(graph=my_graph,config=config)

keep_prob = tf.placeholder(tf.float32)

input_op = tf.placeholder(tf.float32,[None,image_size*image_size*3])

input_op = tf.reshape(input_op,[-1,image_size,image_size,3])

y = tf.placeholder(tf.float32,[None,190])

accuracy,train_step,cross_entropy,fc8,p = inference_op(input_op,y,keep_prob)

init = tf.global_variables_initializer()

sess.run(init)

for i in range(num_batches):

rand_index = np.random.choice(38121,size=(batch_size))

train_step.run(feed_dict={input_op:traindata[rand_index],y:trainlabel[rand_index],keep_prob:0.8})

if i%100 == 0:

rand_index = np.random.choice(38121,size=(100))

train_accuracy = accuracy.eval(feed_dict={input_op:traindata[rand_index],y:trainlabel[rand_index],keep_prob:1.0})

print('step %d, training accuracy %g'%(i,train_accuracy))

print(fc8.eval(feed_dict={input_op:traindata[rand_index],y:trainlabel[rand_index],keep_prob:1.0}))

print(cross_entropy.eval(feed_dict={input_op:traindata[rand_index],y:trainlabel[rand_index],keep_prob:1.0}))

print("test accuracy %g"%accuracy.eval(feed_dict={input_op:testdata,y:testlabel,keep_prob:1.0}))

image_size = 224

batch_size = 64

num_batches = 10000

run_benchmark()