import urllib.request

import jieba
import matplotlib.pyplot as plt
import pandas as pd
from imageio import imread
from lxml import etree
from wordcloud import WordCloud

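def getpage(url):
    """Download a page and return its body decoded as UTF-8."""
    req = urllib.request.Request(url)
    # Browser-like User-Agent: some sites reject urllib's default "Python-urllib/3.x" agent
    req.add_header("User-Agent", "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36")
    # "with" closes the HTTP response once the body has been read
    with urllib.request.urlopen(req) as resp:
        data = resp.read().decode('utf-8')
    return data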
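# Illustrative sketch (hypothetical helper, not part of the original script):
# it builds the same kind of Request object that getpage() sends, but without
# opening a network connection, so the custom User-Agent header can be
# inspected offline. Note that urllib stores header names via str.capitalize(),
# so "User-Agent" is looked up as "User-agent".
import urllib.request


def build_request(url, user_agent):
    """Return a GET Request carrying a custom User-Agent header."""
    req = urllib.request.Request(url)
    req.add_header("User-Agent", user_agent)
    return req

# Usage:
#   req = build_request("https://s.weibo.com/top/summary?cate=realtimehot", "Mozilla/5.0")
#   req.get_header("User-agent")  # the User-Agent value passed in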

def getdata(data):
    """Return the hot-search titles: the text of each <a href> inside <td class="td-02">."""
    html = etree.HTML(data)
    top_search = html.xpath('//td[@class="td-02"]/a[@href]/text()')
    return top_search
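# Illustrative sketch of what the XPath in getdata() selects. SAMPLE_HTML is a
# hypothetical, heavily simplified stand-in for the Weibo hot-search table;
# the real page markup may differ and may change over time. Only <a> elements
# that are direct children of a cell whose class is exactly "td-02" and that
# carry an href attribute contribute their text.
from lxml import etree

SAMPLE_HTML = """
<table>
  <tr><td class="td-01">1</td>
      <td class="td-02"><a href="/weibo?q=a">春节档电影票房</a><span>123</span></td></tr>
  <tr><td class="td-01">2</td>
      <td class="td-02"><a href="/weibo?q=b">天气预报</a></td></tr>
  <tr><td class="td-02"><a>no href, skipped</a></td></tr>
</table>
"""


def extract_titles(html_text):
    """Apply the same XPath as getdata() to an HTML string."""
    tree = etree.HTML(html_text)
    return tree.xpath('//td[@class="td-02"]/a[@href]/text()')

# Usage:
#   extract_titles(SAMPLE_HTML)  # the two linked titles; the <span> and the href-less <a> are ignored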

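def cut_words(top_search):
    """Segment each title with jieba and return all tokens as one flat list."""
    top_cut = []
    for top in top_search:
        top_cut.extend(jieba.cut(top))  # jieba.cut yields tokens lazily
    return top_cut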
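# Illustrative sketch (hypothetical helper): the stop-word filtering and
# frequency counting done in the __main__ block, written with
# collections.Counter instead of pandas. Unlike the original, it also drops
# whitespace-only tokens such as "\n", which a single " " entry in the stop
# list would not catch. In the script the tokens come from cut_words().
# A Counter is a dict subclass, so it could be passed to WordCloud.fit_words().
from collections import Counter


def count_words(words, stop_words):
    """Count tokens, skipping stop words and whitespace-only tokens."""
    stop = set(stop_words)  # set membership tests are O(1)
    return Counter(w for w in words if w.strip() and w not in stop)

# Usage:
#   count_words(["天气", "的", " ", "天气", "预报"], ["的", " "]).most_common(1)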

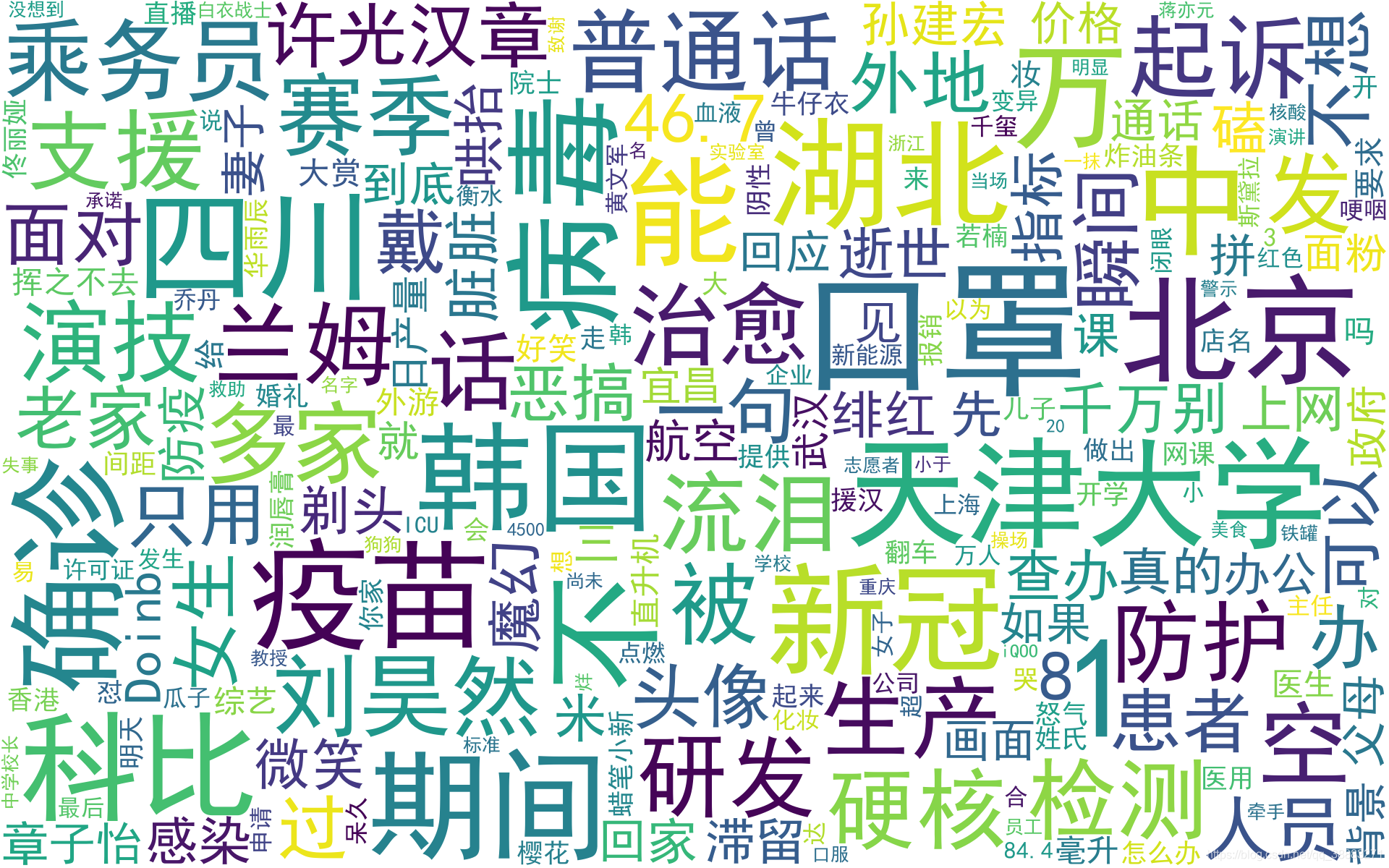

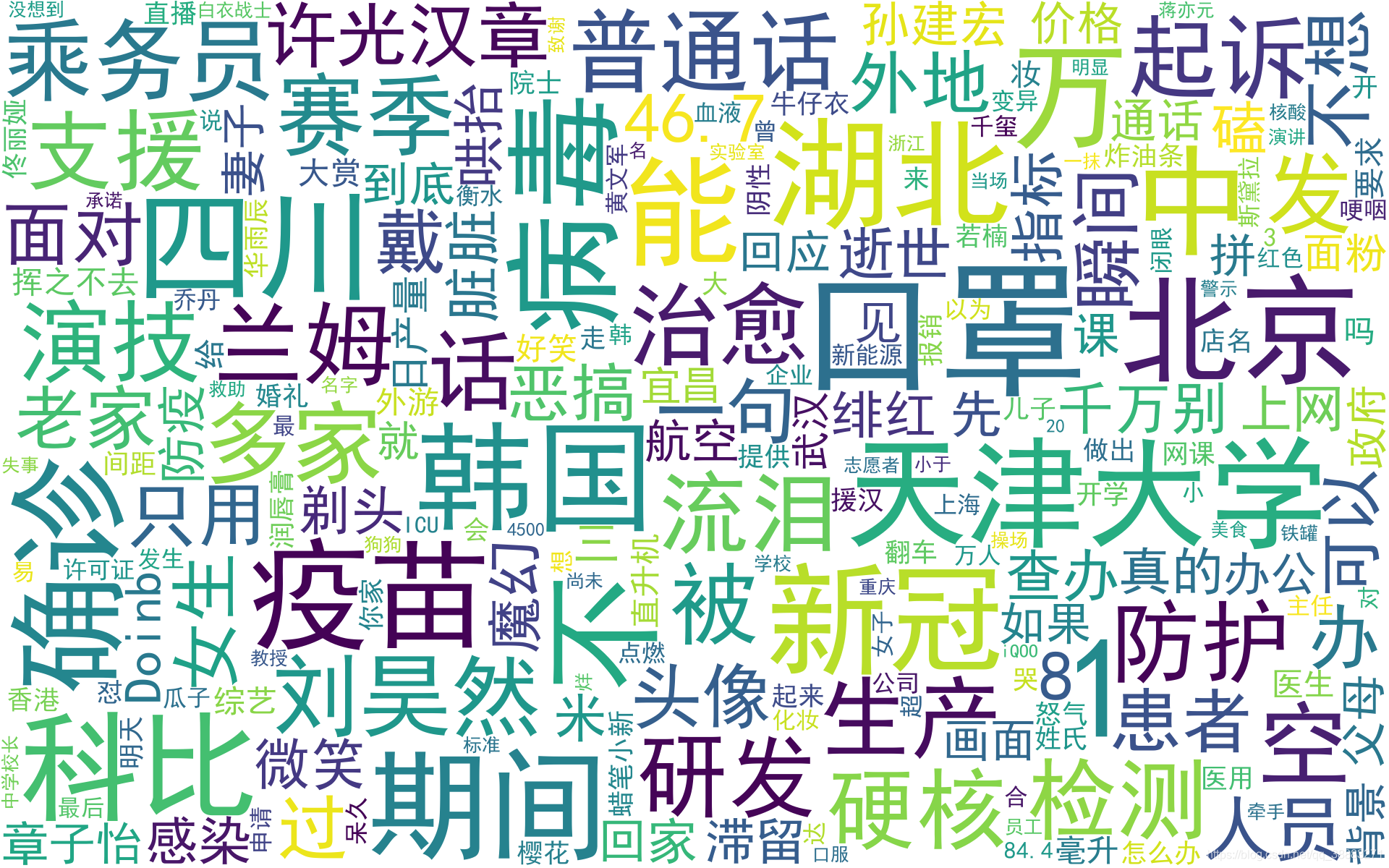

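# Illustrative sketch of how a WordCloud mask is interpreted: according to the
# wordcloud documentation, pure-white (255) mask pixels are "masked out" and
# words are drawn only on the other pixels. flower.jpg plays this role in the
# script; circle_mask() is a hypothetical stand-in built with numpy, so no
# image file is needed.
import numpy as np


def circle_mask(size):
    """Return a size x size uint8 array: 0 inside a centred circle, 255 outside."""
    y, x = np.ogrid[:size, :size]
    centre = (size - 1) / 2
    outside = (x - centre) ** 2 + (y - centre) ** 2 > (size / 2) ** 2
    return np.where(outside, 255, 0).astype(np.uint8)

# Usage (assumes the wordcloud package):
#   WordCloud(mask=circle_mask(400), background_color="white")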
if __name__ == "__main__":
    # Weibo real-time hot-search list
    url = "https://s.weibo.com/top/summary?cate=realtimehot"
    top_search = getdata(getpage(url))  # list of hot-search titles
    all_words = cut_words(top_search)   # jieba tokens from all titles

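    # Stop words: particles and single characters that carry little meaning on their own
    stop = ['的', '你', '了', '将', '为', '例', ' ', '多', '再', '有', '是', '等', '天', '次']
    # Drop stop words, then count how often each remaining token appears
    words_cut = [word for word in all_words if word not in stop]
    word_count = pd.Series(words_cut).value_counts()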

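    # Mask image (hard-coded Windows path; adjust for your machine).
    # Pure-white areas of the mask stay empty; words fill the rest.
    back_ground = imread("E:\\python\\flower.jpg")
    wc = WordCloud(
        font_path="C:\\Windows\\Fonts\\simhei.ttf",  # a CJK-capable font is needed to render Chinese
        background_color="white",
        max_words=1000,
        mask=back_ground,
        max_font_size=200,
        random_state=50,  # fixed seed for a reproducible layout and colouring
    )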

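    # fit_words() takes a word -> frequency mapping and returns the WordCloud itself
    wc.fit_words(word_count.to_dict())
    plt.figure()
    plt.imshow(wc)
    plt.axis("off")  # hide axis ticks and frame
    plt.show()
    wc.to_file("ciyun.png")  # also save the rendered cloud as a PNG file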