I've been learning web scraping recently and wrote a few small projects. Scraping the data of Maoyan's Top 100 movies is one of them.

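First, analyze the page. The board has 10 pages in total, and switching between a couple of them shows that the URL is built roughly like this:

https://maoyan.com/board/4?offset=(page index × 10)

where the page index counts from 0, so the offsets are 0, 10, 20, ..., 90.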
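The mapping from page number to offset can be checked with a minimal sketch. It assumes 1-based page numbers for readability (page n starts at offset (n − 1) × 10) and builds the query string with the standard library's `urllib.parse.urlencode`; the helper name `board_page_url` is hypothetical, not part of the original code.

```python
from urllib.parse import urlencode

BASE_URL = 'https://maoyan.com/board/4'  # board URL observed above
PAGE_SIZE = 10                            # movies listed per page


def board_page_url(page):
    """Hypothetical helper: URL of 1-based page `page` of the Top 100 board."""
    if not 1 <= page <= 10:
        raise ValueError('the board has 10 pages')
    return BASE_URL + '?' + urlencode({'offset': (page - 1) * PAGE_SIZE})


for page in (1, 2, 10):
    print(board_page_url(page))
```

This prints the URLs with `offset=0`, `offset=10` and `offset=90`, matching the pattern above.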

Next, write a function that builds the list of page URLs:

```python
def get_pages_url_list():
    pages_url_list = []
    for i in range(10):
        url = 'https://maoyan.com/board/4?offset=%d' % (i * 10)
        pages_url_list.append(url)
    return pages_url_list
```

This gives us the list of page URLs:

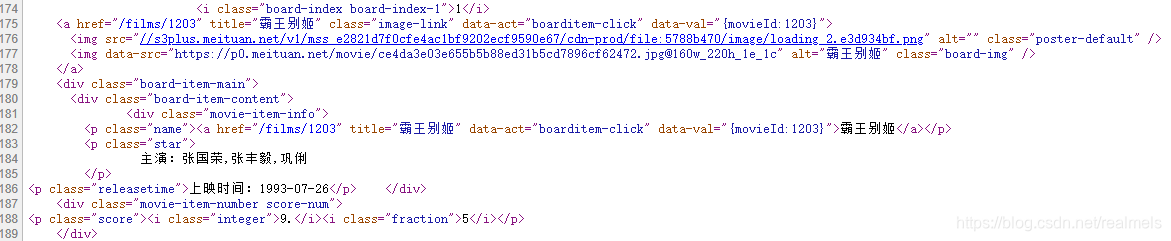

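Next, look at the page itself. All of the information we want is present in the HTML source, so it can be filtered out directly with regular expressions.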
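To see how such a pattern behaves, here is a minimal sketch that runs the title regex from the function below on a hand-written HTML fragment. The fragment is hypothetical: it is shaped to fit the pattern, not copied from Maoyan's page, whose markup may differ or change. The non-greedy `(.*?)` group stops at the first closing quote, so each `<a>` tag yields exactly one title.

```python
import re

# Title pattern from movie_name(), with data-val made non-greedy so the
# match cannot run past the end of the tag.
TITLE_RE = re.compile(
    r'<a href="/films/.*?" title="(.*?)" class="image-link" '
    r'data-act="boarditem-click" data-val=".*?">'
)

# Hypothetical sample markup, written to fit the pattern above.
sample_html = (
    '<dd><a href="/films/1" title="Movie A" class="image-link" '
    'data-act="boarditem-click" data-val="{movieId:1}"></a></dd>\n'
    '<dd><a href="/films/2" title="Movie B" class="image-link" '
    'data-act="boarditem-click" data-val="{movieId:2}"></a></dd>'
)

titles = TITLE_RE.findall(sample_html)
print(titles)  # ['Movie A', 'Movie B']
```

Because the pattern has one capturing group, `findall` returns just the captured titles rather than the whole matched tags.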

I wrote one function for each kind of information.

Getting the movie titles:

```python
import re

import requests

pages_url = get_pages_url_list()


def movie_name():
    namelist = []
    for pages in pages_url:
        try:
            html = requests.get(pages).text  # no headers yet
        except requests.RequestException:
            continue  # skip a page that fails to download
        reg = re.compile(r'<a href="/films/.*?" title="(.*?)" class="image-link" data-act="boarditem-click" data-val=".*?">')
        namelist.extend(re.findall(reg, html))
    return namelist
```

Printing the result shows nothing at all: the function returns an empty list.

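A quick look at the source that came back shows that Maoyan has an anti-scraping mechanism.

So the request needs to carry headers.

Open the Network tab in Chrome's DevTools and refresh the page: everything we need is in the request headers.

Copy those headers over: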

```python
# Headers copied from Chrome DevTools. The Cookie belongs to one browser
# session and will eventually expire.
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0'
                  '.3987.116 Safari/537.36',
    'Cookie': '__mta=252583478.1582162970276.1582251329773.1582251337605.36; uuid_n_v=v1; uuid=49C96250538211EAA'
              '1F029AD4767FB60F69E29B324164685A575DBC49D9F7D7B; _csrf=22354046805a970e2742e52e9f2f0f6742e9c2f0b66'
              '79d37bfa4d63b3f042b0d; _lxsdk_cuid=1706042fa27b9-01be66e73d352-313f68-100200-1706042fa28c8; _lxsdk'
              '=49C96250538211EAA1F029AD4767FB60F69E29B324164685A575DBC49D9F7D7B; mojo-uuid=0dc740302bb9b347859b6'
              '5d4fe0e70e4; Hm_lvt_703e94591e87be68cc8da0da7cbd0be2=1582162967,1582167037,1582167046; mojo-sessio'
              'n-id={"id":"63862b9f8ff58455ee319c623bc3ba4d","time":1582250701907}; __mta=252583478.158216297027'
              '6.1582182174928.1582250732005.29; mojo-trace-id=16; Hm_lpvt_703e94591e87be68cc8da0da7cbd0be2=1582'
              '251337; _lxsdk_s=170657db4a6-42f-ab4-e3a%7C%7C22',
}
```

Pass them along with `requests.get(pages, headers=headers)` and the page comes back with the data.
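Why this helps: unless told otherwise, `requests` identifies itself with a `python-requests/<version>` User-Agent, which is easy for a site to flag, while the copied headers make the request look like the browser session. As a sketch of the same idea using only the standard library, `urllib.request.Request` attaches headers without sending anything, so you can inspect what would go out. The shortened header values are placeholders, and exactly which headers Maoyan checks is an assumption you would have to verify.

```python
from urllib.request import Request

# Placeholder values; in practice, paste the ones copied from DevTools.
browser_headers = {
    'User-Agent': ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                   'AppleWebKit/537.36 (KHTML, like Gecko) '
                   'Chrome/80.0.3987.116 Safari/537.36'),
    'Cookie': 'uuid_n_v=v1',  # hypothetical, heavily shortened cookie string
}

req = Request('https://maoyan.com/board/4?offset=0', headers=browser_headers)

# urllib normalizes header names with str.capitalize(), hence 'User-agent'.
print(req.get_header('User-agent'))
print(req.get_header('Cookie'))
# Actually sending it would be: urllib.request.urlopen(req, timeout=10).read()
```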

The complete code:

```python
import re

import requests

# Headers copied from Chrome DevTools. The Cookie belongs to one browser
# session and will eventually expire; paste in your own.
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0'
                  '.3987.116 Safari/537.36',
    'Cookie': '__mta=252583478.1582162970276.1582251329773.1582251337605.36; uuid_n_v=v1; uuid=49C96250538211EAA'
              '1F029AD4767FB60F69E29B324164685A575DBC49D9F7D7B; _csrf=22354046805a970e2742e52e9f2f0f6742e9c2f0b66'
              '79d37bfa4d63b3f042b0d; _lxsdk_cuid=1706042fa27b9-01be66e73d352-313f68-100200-1706042fa28c8; _lxsdk'
              '=49C96250538211EAA1F029AD4767FB60F69E29B324164685A575DBC49D9F7D7B; mojo-uuid=0dc740302bb9b347859b6'
              '5d4fe0e70e4; Hm_lvt_703e94591e87be68cc8da0da7cbd0be2=1582162967,1582167037,1582167046; mojo-sessio'
              'n-id={"id":"63862b9f8ff58455ee319c623bc3ba4d","time":1582250701907}; __mta=252583478.158216297027'
              '6.1582182174928.1582250732005.29; mojo-trace-id=16; Hm_lpvt_703e94591e87be68cc8da0da7cbd0be2=1582'
              '251337; _lxsdk_s=170657db4a6-42f-ab4-e3a%7C%7C22',
}


def get_pages_url_list():
    """URLs of the 10 board pages (offset = 0, 10, ..., 90)."""
    pages_url_list = []
    for i in range(10):
        url = 'https://maoyan.com/board/4?offset=%d' % (i * 10)
        pages_url_list.append(url)
    return pages_url_list


pages_url = get_pages_url_list()


def get_html(url):
    """Download one page with the browser headers; return '' if it fails."""
    try:
        return requests.get(url, headers=headers, timeout=10).text
    except requests.RequestException:
        return ''


def movie_name():
    """Titles, from the title attribute of each poster link."""
    reg = re.compile(r'<a href="/films/.*?" title="(.*?)" class="image-link" data-act="boarditem-click" data-val=".*?">')
    namelist = []
    for pages in pages_url:
        namelist.extend(reg.findall(get_html(pages)))
    return namelist


def movie_actor():
    """Cast lines starting with '主演'; the colon may be ASCII or full-width."""
    reg = re.compile(r'主演[:：].*')
    actorlist = []
    for pages in pages_url:
        actorlist.extend(res.strip() for res in reg.findall(get_html(pages)))
    return actorlist


def movie_time():
    """Release-time text from <p class="releasetime">."""
    reg = re.compile(r'<p class="releasetime">(.*?)</p>')
    timelist = []
    for pages in pages_url:
        timelist.extend(reg.findall(get_html(pages)))
    return timelist


def movie_score():
    """Scores; the integer and fraction parts sit in separate <i> tags."""
    reg = re.compile(r'<p class="score"><i class="integer">(.*?)</i><i class="fraction">(.*?)</i></p>')
    scorelist = []
    for pages in pages_url:
        for integer, fraction in reg.findall(get_html(pages)):
            scorelist.append(integer + fraction)  # e.g. '9.' + '5' -> '9.5'
    return scorelist


def movie_photo():
    """Poster URLs with the '@160w_220h_1e_1c' size suffix cut off."""
    reg = re.compile(r'<img data-src="(.*?)@160w_220h_1e_1c" alt=".*?" class="board-img" />')
    photolist = []
    for pages in pages_url:
        photolist.extend(reg.findall(get_html(pages)))
    return photolist


def main():
    rows = zip(movie_name(), movie_actor(), movie_time(), movie_score(), movie_photo())
    result = []
    # zip() stops at the shortest list instead of raising IndexError
    for name, actor, release, score, photo in rows:
        result.append('%s\t\t%s\t\t%s\t\tScore: %s\t\tImage: %s' % (name, actor, release, score, photo))
    return result


if __name__ == '__main__':
    # The with-block closes the file even if something fails midway
    with open('maoyan_top100.txt', 'w', encoding='utf-8') as file:
        for num, item in enumerate(main(), start=1):
            file.write('%d\t\t%s\n' % (num, item))
            print('Written:\t%s' % item)
```
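One drawback of this structure is that each of the five functions downloads all 10 pages again, so a full run makes 50 requests, and `main()` relies on five separate lists lining up position by position. A hedged alternative is to parse every field from one page's HTML in a single pass, so each page is fetched once (for example with `requests.get(url, headers=headers)`) and handed to the parser. The sketch below reuses this post's patterns; `parse_board_page` is a hypothetical name, the assumption that each movie sits in its own `<dd>...</dd>` block is not shown in this post, and the sample HTML is invented to fit the patterns rather than copied from Maoyan.

```python
import re

# Assumption: each movie is wrapped in its own <dd>...</dd> block.
DD_RE = re.compile(r'<dd>(.*?)</dd>', re.S)
NAME_RE = re.compile(r'title="(.*?)" class="image-link"')
ACTOR_RE = re.compile(r'主演[:：](.*)')  # ASCII or full-width colon
TIME_RE = re.compile(r'<p class="releasetime">(.*?)</p>')
SCORE_RE = re.compile(r'<i class="integer">(.*?)</i><i class="fraction">(.*?)</i>')
PHOTO_RE = re.compile(r'<img data-src="(.*?)@160w_220h_1e_1c"')


def first(pattern, text):
    """First captured group of pattern in text, stripped, or None."""
    m = pattern.search(text)
    return m.group(1).strip() if m else None


def parse_board_page(html):
    """Hypothetical single-pass parser: one dict per movie block."""
    movies = []
    for block in DD_RE.findall(html):
        score = SCORE_RE.search(block)
        movies.append({
            'name': first(NAME_RE, block),
            'actors': first(ACTOR_RE, block),
            'release': first(TIME_RE, block),
            # Score is split across two <i> tags: '9.' + '5' -> '9.5'
            'score': score.group(1) + score.group(2) if score else None,
            'photo': first(PHOTO_RE, block),
        })
    return movies


# Invented sample block, shaped to fit the patterns above.
sample = '''<dd>
<a href="/films/1" title="Movie A" class="image-link" data-act="boarditem-click" data-val="{movieId:1}">
<img data-src="https://example.com/a.jpg@160w_220h_1e_1c" alt="Movie A" class="board-img" /></a>
<p class="star">
    主演：Actor X,Actor Y
</p>
<p class="releasetime">1993-01-01</p>
<p class="score"><i class="integer">9.</i><i class="fraction">5</i></p>
</dd>'''

print(parse_board_page(sample))
```

Since every field is looked up inside the same block, a movie with a missing field gets `None` for that field instead of shifting all the following rows out of alignment.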