I. Project overview

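The sampleUffMNIST sample shows how to run inference on a TensorFlow model with TensorRT. The MNIST TensorFlow model is first converted to a UFF file, which stores the neural network as a graph. With TensorRT, you can use the UFF converter to turn a pretrained TensorFlow model into a UFF file, and the UFF parser then reads that file as input. In this sample, NvUffParser parses the UFF file to create the inference engine for the network. The sample is written in C++ and demonstrates TensorRT acceleration.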

II. How the sample works

This sample uses a TensorFlow model that has already been trained on the MNIST dataset. It performs the following steps:

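- Import the UFF file, which was converted from the pretrained TensorFlow model.

- Create the UFF parser.

- Use the UFF parser to register the input and output layers. Registering the input layer also requires the dimensions and ordering of the input tensor.

- Build the engine.

- Run inference 10 times (once for each digit from 0 to 9) and display the average inference time.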

III. TensorRT API layers and operations

The following layers are used in this sample:

- Activation layer

- Convolution layer

- FullyConnected layer

- Pooling layer

- Scale layer

- Shuffle layer: implements a reshape and transpose operator for tensors

IV. Running the sample

For the runtime environment used by this sample, see the blog post below.

(The Jetson Nano image is the one used in the post 《JETSON-Nano刷机运行deepstream4.0的demo》: https://blog.csdn.net/shajiayu1/article/details/102669346)

- cd /usr/src/tensorrt/samples/sampleUffMNIST

- sudo make

- cd /usr/src/tensorrt/bin

- ./sample_uff_mnist

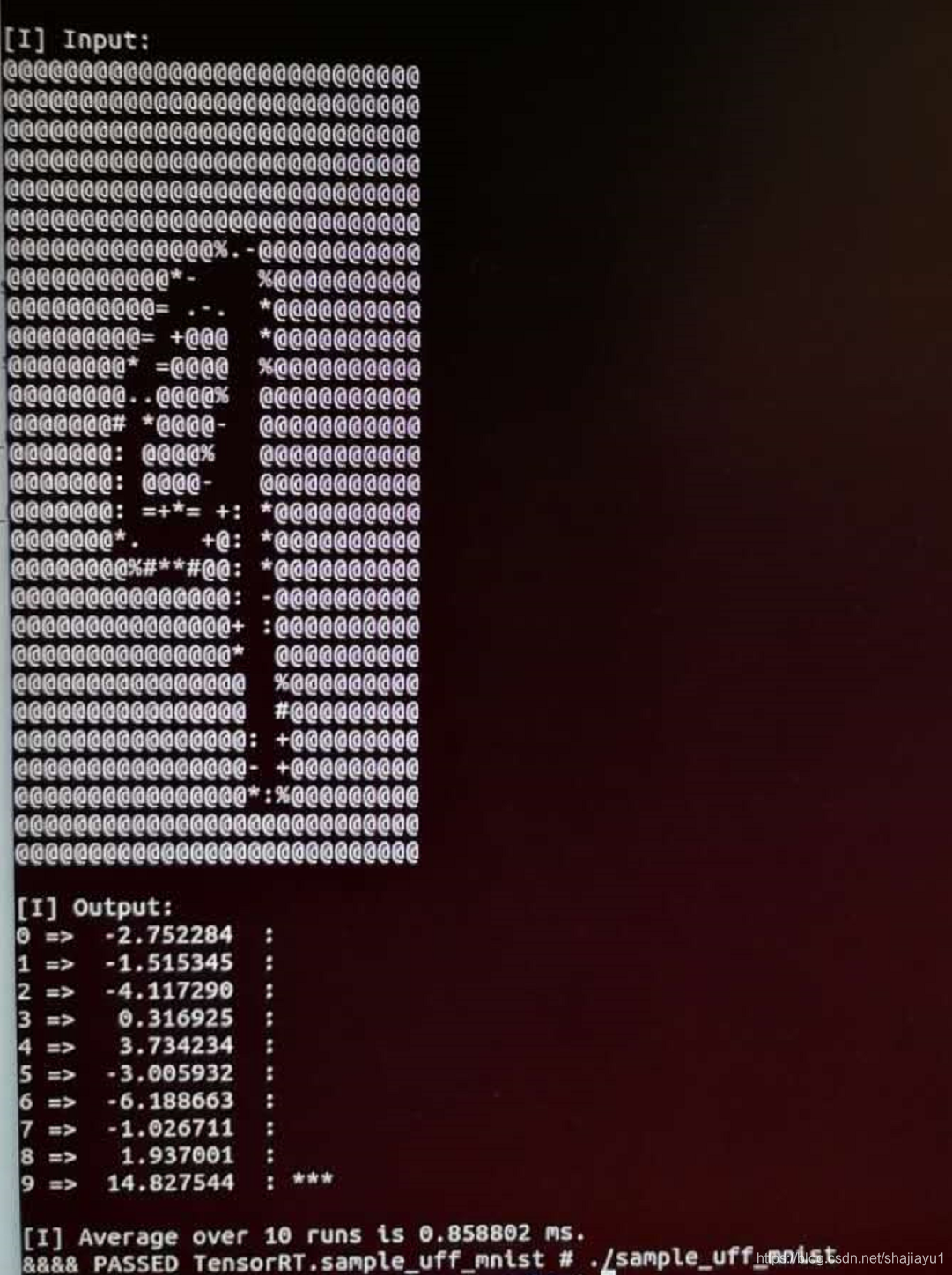

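As the figure above shows, the input image is the digit 9 and the inference result is also 9. Over the 10 runs, the average inference time was 0.85 ms.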

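The main function is analysed below. Broadly, it consists of three parts:

1. Build the engine

2. Run inference

3. Release resources

Note that the code analysed here is not the set of files shipped in the Nano image but the TensorRT 5.1 code on GitHub, which is more cleanly organized.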

https://github.com/NVIDIA/TensorRT/tree/release/5.1/samples/opensource/sampleUffMNIST

int main(int argc, char** argv)

{

samplesCommon::Args args;

bool argsOK = samplesCommon::parseArgs(args, argc, argv);

if (!argsOK)

{

gLogError << "Invalid arguments" << std::endl;

printHelpInfo();

return EXIT_FAILURE;

}

if (args.help)

{

printHelpInfo();

return EXIT_SUCCESS;

}

auto sampleTest = gLogger.defineTest(gSampleName, argc, argv);

gLogger.reportTestStart(sampleTest);

samplesCommon::UffSampleParams params = initializeSampleParams(args); // initialize the parameters

SampleUffMNIST sample(params); // create the sample object

gLogInfo << "Building and running a GPU inference engine for Uff MNIST" << std::endl;

if (!sample.build()) // build the engine

{

return gLogger.reportFail(sampleTest);

}

if (!sample.infer()) // run inference

{

return gLogger.reportFail(sampleTest);

}

if (!sample.teardown()) // release resources

{

return gLogger.reportFail(sampleTest);

}

return gLogger.reportPass(sampleTest);

}

The rest of the code is as follows:

class SampleUffMNIST

{

template <typename T>

using SampleUniquePtr = std::unique_ptr<T, samplesCommon::InferDeleter>;

public:

SampleUffMNIST(const samplesCommon::UffSampleParams& params)

: mParams(params)

{

}

//!

//! \brief Builds the network engine

//!

bool build();

//!

//! \brief Runs the TensorRT inference engine for this sample

//!

bool infer();

//!

//! \brief Used to clean up any state created in the sample class

//!

bool teardown();

private:

//!

//! \brief Parses a Uff model for MNIST and creates a TensorRT network

//!

void constructNetwork(

SampleUniquePtr<nvuffparser::IUffParser>& parser, SampleUniquePtr<nvinfer1::INetworkDefinition>& network);

//!

//! \brief Reads the input and mean data, preprocesses, and stores the result

//! in a managed buffer

//!

bool processInput(

const samplesCommon::BufferManager& buffers, const std::string& inputTensorName, int inputFileIdx) const;

//!

//! \brief Verifies that the output is correct and prints it

//!

bool verifyOutput(

const samplesCommon::BufferManager& buffers, const std::string& outputTensorName, int groundTruthDigit) const;

std::shared_ptr<nvinfer1::ICudaEngine> mEngine{nullptr}; //!< The TensorRT engine used to run the network

samplesCommon::UffSampleParams mParams;

nvinfer1::Dims mInputDims;

const int kDIGITS{10};

};
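
The SampleUniquePtr alias above pairs std::unique_ptr with a custom deleter, and mEngine does the same with std::shared_ptr, because TensorRT 5.x objects are released by calling destroy() rather than with delete. The pattern can be sketched without TensorRT as follows. FakeEngine and DestroyDeleter are hypothetical stand-ins, and the sketch assumes that samplesCommon::InferDeleter behaves like DestroyDeleter does here.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for samplesCommon::InferDeleter.
// Assumption: the real deleter also just calls destroy() on the object.
struct DestroyDeleter
{
    template <typename T>
    void operator()(T* obj) const
    {
        if (obj)
        {
            obj->destroy();
        }
    }
};

// Hypothetical object that, like a TensorRT 5.x interface, is released via destroy().
struct FakeEngine
{
    explicit FakeEngine(int& counter)
        : destroyed(counter)
    {
    }

    void destroy()
    {
        ++destroyed; // record the call before the object goes away
        delete this;
    }

    int& destroyed;
};

template <typename T>
using UniquePtr = std::unique_ptr<T, DestroyDeleter>;

// Creates one unique_ptr-owned and one shared_ptr-owned FakeEngine,
// lets both go out of scope, and returns how many times destroy() ran.
int destroyCountAfterScope()
{
    int count = 0;
    {
        UniquePtr<FakeEngine> owned(new FakeEngine(count));
        std::shared_ptr<FakeEngine> shared(new FakeEngine(count), DestroyDeleter());
    }
    return count;
}
```

Calling destroyCountAfterScope() returns 2: both smart pointers invoke destroy() when they leave scope, so none of the early return paths in build() or infer() needs manual cleanup.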

//!

//! \brief Creates the network, configures the builder and creates the network engine

//!

//! \details This function creates the MNIST network by parsing the Uff model

//! and builds the engine that will be used to run MNIST (mEngine)

//!

//! \return Returns true if the engine was created successfully and false otherwise

//!

bool SampleUffMNIST::build()

{

// Create the builder

auto builder = SampleUniquePtr<nvinfer1::IBuilder>(nvinfer1::createInferBuilder(gLogger.getTRTLogger()));

if (!builder)

{

return false;

}

// Use the builder to create the network

auto network = SampleUniquePtr<nvinfer1::INetworkDefinition>(builder->createNetwork());

if (!network)

{

return false;

}

// Create the UFF parser

auto parser = SampleUniquePtr<nvuffparser::IUffParser>(nvuffparser::createUffParser());

if (!parser)

{

return false;

}

// Populate the network from the UFF model

constructNetwork(parser, network);

builder->setMaxBatchSize(mParams.batchSize); // configure the builder

builder->setMaxWorkspaceSize(16_MB);

builder->allowGPUFallback(true);

builder->setFp16Mode(mParams.fp16);

builder->setInt8Mode(mParams.int8);

samplesCommon::enableDLA(builder.get(), mParams.dlaCore);

// Build the engine

mEngine = std::shared_ptr<nvinfer1::ICudaEngine>(builder->buildCudaEngine(*network), samplesCommon::InferDeleter());

if (!mEngine)

{

return false;

}

assert(network->getNbInputs() == 1);

mInputDims = network->getInput(0)->getDimensions();

assert(mInputDims.nbDims == 3);

return true;

}

//!

//! \brief Uses a Uff parser to create the MNIST Network and marks the output layers

//!

//! \param network Pointer to the network that will be populated with the MNIST network

//!

//! \param builder Pointer to the engine builder

//! Note: this is the key function for building the network layers

void SampleUffMNIST::constructNetwork(

SampleUniquePtr<nvuffparser::IUffParser>& parser, SampleUniquePtr<nvinfer1::INetworkDefinition>& network)

{

// There should only be one input and one output tensor

assert(mParams.inputTensorNames.size() == 1);

assert(mParams.outputTensorNames.size() == 1);

// Register tensorflow input

// Register the input layer, together with the input dimensions and ordering

parser->registerInput(

mParams.inputTensorNames[0].c_str(), nvinfer1::Dims3(1, 28, 28), nvuffparser::UffInputOrder::kNCHW);

// Register the output layer

parser->registerOutput(mParams.outputTensorNames[0].c_str());

// Parse the UFF file to create the neural network

parser->parse(mParams.uffFileName.c_str(), *network, nvinfer1::DataType::kFLOAT);

if (mParams.int8)

{

samplesCommon::setAllTensorScales(network.get(), 127.0f, 127.0f);

}

}
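
registerInput above passes Dims3(1, 28, 28) together with UffInputOrder::kNCHW, that is, one channel of 28 x 28 pixels in channel-first order. To illustrate what the ordering means for the memory layout, here is a minimal sketch that computes the flat offset of one element in channel-first (CHW) and channel-last (HWC) storage. Shape, offsetCHW and offsetHWC are hypothetical names for this illustration and are not part of TensorRT.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper type: the per-image shape of a tensor.
struct Shape
{
    int channels;
    int height;
    int width;
};

// Flat offset of element (c, y, x) when the data is stored channel-first (CHW).
std::size_t offsetCHW(const Shape& s, int c, int y, int x)
{
    assert(c >= 0 && c < s.channels && y >= 0 && y < s.height && x >= 0 && x < s.width);
    return (static_cast<std::size_t>(c) * s.height + y) * s.width + x;
}

// Flat offset of the same element when the data is stored channel-last (HWC).
std::size_t offsetHWC(const Shape& s, int c, int y, int x)
{
    assert(c >= 0 && c < s.channels && y >= 0 && y < s.height && x >= 0 && x < s.width);
    return (static_cast<std::size_t>(y) * s.width + x) * s.channels + c;
}
```

For the single-channel MNIST input the two layouts coincide, which is why processInput can fill the host buffer with a plain row-major loop. With more than one channel the offsets differ, so the order passed at registration has to match how the input data is actually laid out.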

//!

//! \brief Reads the input data, preprocesses, and stores the result in a managed buffer

//!

bool SampleUffMNIST::processInput(

const samplesCommon::BufferManager& buffers, const std::string& inputTensorName, int inputFileIdx) const

{

const int inputH = mInputDims.d[1];

const int inputW = mInputDims.d[2];

std::vector<uint8_t> fileData(inputH * inputW);

// Read the PGM image file

readPGMFile(locateFile(std::to_string(inputFileIdx) + ".pgm", mParams.dataDirs), fileData.data(), inputH, inputW);

// Print ASCII representation of digit

gLogInfo << "Input:\n";

for (int i = 0; i < inputH * inputW; i++)

{

gLogInfo << (" .:-=+*#%@"[fileData[i] / 26]) << (((i + 1) % inputW) ? "" : "\n");

}

gLogInfo << std::endl;

float* hostInputBuffer = static_cast<float*>(buffers.getHostBuffer(inputTensorName));

// Scale the pixels to [0, 1], invert them, and store them in the host input buffer

for (int i = 0; i < inputH * inputW; i++)

{

hostInputBuffer[i] = 1.0 - float(fileData[i]) / 255.0;

}

return true;

}
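
The two loops in processInput can be tried out in isolation. The sketch below reproduces them on a plain std::vector: one function maps each 8-bit pixel to 1.0 - pixel / 255 (so 0 becomes 1.0 and 255 becomes 0.0), and the other renders the pixels with the same 10-character ramp used for the log output. normalizeInverted and toAscii are hypothetical names chosen for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Maps each 8-bit pixel to a float in [0, 1] and inverts it,
// mirroring the expression 1.0 - pixel / 255.0 used in the sample.
std::vector<float> normalizeInverted(const std::vector<std::uint8_t>& pixels)
{
    std::vector<float> out(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i)
    {
        out[i] = 1.0f - static_cast<float>(pixels[i]) / 255.0f;
    }
    return out;
}

// Renders the pixels as ASCII art, one text row per image row.
// pixel / 26 yields an index from 0 to 9 into the 10-character ramp.
std::string toAscii(const std::vector<std::uint8_t>& pixels, int width)
{
    assert(width > 0);
    static const char ramp[] = " .:-=+*#%@";
    std::string art;
    for (std::size_t i = 0; i < pixels.size(); ++i)
    {
        art += ramp[pixels[i] / 26];
        if ((i + 1) % static_cast<std::size_t>(width) == 0)
        {
            art += '\n';
        }
    }
    return art;
}
```

Dividing by 26 works because the largest pixel value, 255, gives index 9, the last character of the ramp, so the lookup never runs past the end of the string.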

//!

//! \brief Verifies that the inference output is correct

//!

bool SampleUffMNIST::verifyOutput( // check the output

const samplesCommon::BufferManager& buffers, const std::string& outputTensorName, int groundTruthDigit) const

{

const float* prob = static_cast<const float*>(buffers.getHostBuffer(outputTensorName));

gLogInfo << "Output:\n";

float val{0.0f};

int idx{0};

// Determine index with highest output value

for (int i = 0; i < kDIGITS; i++)

{

if (val < prob[i])

{

val = prob[i];

idx = i;

}

}

// Print output values for each index

for (int j = 0; j < kDIGITS; j++)

{

gLogInfo << j << "=> " << setw(10) << prob[j] << "\t : ";

// Emphasize index with highest output value

if (j == idx)

{

gLogInfo << "***";

}

gLogInfo << "\n";

}

gLogInfo << std::endl;

return (idx == groundTruthDigit);

}
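
The core of verifyOutput is an argmax over the 10 output values. A minimal standalone sketch of the same idea using std::max_element is shown below; argmax is a hypothetical helper name, not something the sample defines.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <vector>

// Returns the index of the largest score (the first one if there are ties).
int argmax(const std::vector<float>& scores)
{
    assert(!scores.empty());
    const auto best = std::max_element(scores.begin(), scores.end());
    return static_cast<int>(std::distance(scores.begin(), best));
}
```

One difference is worth noting: the loop in the sample starts from val = 0.0f, so it reports index 0 whenever no output is greater than zero. That is harmless if the outputs are probabilities, but it would be wrong for raw scores that can all be negative, a case the std::max_element version handles.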

//!

//! \brief Runs the TensorRT inference engine for this sample

//!

//! \details This function is the main execution function of the sample.

//! It allocates the buffer, sets inputs, executes the engine, and verifies the output.

//!

bool SampleUffMNIST::infer() // run inference

{

// Create RAII buffer manager object

samplesCommon::BufferManager buffers(mEngine, mParams.batchSize); // create the host and device buffers

auto context = SampleUniquePtr<nvinfer1::IExecutionContext>(mEngine->createExecutionContext());

if (!context)

{

return false;

}

bool outputCorrect = true;

float total = 0;

// Try to infer each digit 0-9

for (int digit = 0; digit < kDIGITS; digit++) // run each digit from 0 to 9 in turn

{

if (!processInput(buffers, mParams.inputTensorNames[0], digit))

{

return false;

}

// Copy data from host input buffers to device input buffers

buffers.copyInputToDevice(); // copy the input data from CPU to GPU

const auto t_start = std::chrono::high_resolution_clock::now();

// Execute the inference work

if (!context->execute(mParams.batchSize, buffers.getDeviceBindings().data()))

{

return false;

}

const auto t_end = std::chrono::high_resolution_clock::now();

const float ms = std::chrono::duration<float, std::milli>(t_end - t_start).count();

total += ms;

// Copy data from device output buffers to host output buffers

buffers.copyOutputToHost(); // copy the results from GPU to CPU

// Check and print the output of the inference

outputCorrect &= verifyOutput(buffers, mParams.outputTensorNames[0], digit); // check whether the result is correct

}

total /= kDIGITS;

gLogInfo << "Average over " << kDIGITS << " runs is " << total << " ms." << std::endl; // print the average time over the 10 runs

return outputCorrect;

}
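
The timing logic in infer() wraps only the execute() call, so the reported average excludes the host-to-device and device-to-host copies. The same measurement pattern can be sketched on its own as follows. averageMillis is a hypothetical helper, and the sketch uses std::chrono::steady_clock, a swapped-in choice that differs from the high_resolution_clock used in the sample.

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Runs work() the given number of times and returns the mean wall-clock
// duration of a single call in milliseconds.
float averageMillis(const std::function<void()>& work, int runs)
{
    assert(runs > 0);
    float total = 0.0f;
    for (int i = 0; i < runs; ++i)
    {
        const auto start = std::chrono::steady_clock::now();
        work(); // only this call is timed, like execute() in the sample
        const auto end = std::chrono::steady_clock::now();
        total += std::chrono::duration<float, std::milli>(end - start).count();
    }
    return total / static_cast<float>(runs);
}
```

Timing with a host clock like this is only meaningful when the timed call is synchronous and has finished its work by the time it returns.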

//!

//! \brief Used to clean up any state created in the sample class

//!

bool SampleUffMNIST::teardown()

{

nvuffparser::shutdownProtobufLibrary();

return true;

}

//!

//! \brief Initializes members of the params struct

//! using the command line args

//!

samplesCommon::UffSampleParams initializeSampleParams(const samplesCommon::Args& args) // initialize the parameters

{

samplesCommon::UffSampleParams params;

if (args.dataDirs.empty()) //!< Use default directories if user hasn't provided paths

{

params.dataDirs.push_back("data/mnist/");

params.dataDirs.push_back("data/samples/mnist/");

}

else //!< Use the data directory provided by the user

{

params.dataDirs = args.dataDirs;

}

params.uffFileName = locateFile("lenet5.uff", params.dataDirs);

params.inputTensorNames.push_back("in"); // add "in" to inputTensorNames

params.batchSize = 1;

params.outputTensorNames.push_back("out"); // add "out" to outputTensorNames

params.dlaCore = args.useDLACore;

params.int8 = args.runInInt8;

params.fp16 = args.runInFp16;

return params;

}
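
initializeSampleParams relies on locateFile to find lenet5.uff in one of the data directories. The idea of trying each directory in turn can be sketched as below. findInDirs is a hypothetical helper written for this illustration, not the sample's implementation, and the real locateFile may do more, for example searching additional locations or reporting an error when nothing is found.

```cpp
#include <cassert>
#include <fstream>
#include <string>
#include <vector>

// Returns the first "dir + name" path that can be opened for reading,
// or an empty string if the file is not found in any directory.
// Assumption: each directory string already ends with a path separator,
// as the default entries in the sample do ("data/mnist/").
std::string findInDirs(const std::string& name, const std::vector<std::string>& dirs)
{
    assert(!name.empty());
    for (const std::string& dir : dirs)
    {
        const std::string candidate = dir + name;
        std::ifstream probe(candidate, std::ios::binary);
        if (probe.is_open())
        {
            return candidate;
        }
    }
    return std::string();
}
```

A call such as findInDirs("lenet5.uff", {"data/mnist/", "data/samples/mnist/"}) mirrors the default search order set up above: directories are checked in the order they were added, and the first match wins.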

//!

//! \brief Prints the help information for running this sample

//!

void printHelpInfo()

{

std::cout << "Usage: ./sample_uff_mnist [-h or --help] [-d or "

"--datadir=<path to data directory>] [--useDLACore=<int>]\n";

std::cout << "--help Display help information\n";

std::cout << "--datadir Specify path to a data directory, overriding "

"the default. This option can be used multiple times to add "

"multiple directories. If no data directories are given, the "

"default is to use (data/samples/mnist/, data/mnist/)"

<< std::endl;

std::cout << "--useDLACore=N Specify a DLA engine for layers that support "

"DLA. Value can range from 0 to n-1, where n is the number of "

"DLA engines on the platform."

<< std::endl;

std::cout << "--int8 Run in Int8 mode.\n";

std::cout << "--fp16 Run in FP16 mode." << std::endl;

}