every blog every motto: Happiness is a way station between too much and too little.

0. Preface

A hands-on deep neural network with dropout.

1. Code

1. Import modules

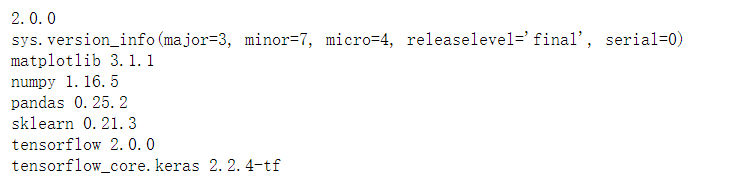

import matplotlib as mpl

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

2. Load the data

fashion_mnist = keras.datasets.fashion_mnist

# print(fashion_mnist)

(x_train_all,y_train_all),(x_test,y_test) = fashion_mnist.load_data()

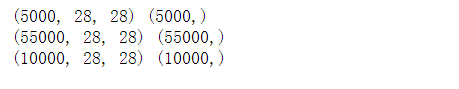

x_valid,x_train = x_train_all[:5000],x_train_all[5000:]

y_valid,y_train = y_train_all[:5000],y_train_all[5000:]

# Print the shapes

print(x_valid.shape,y_valid.shape)

print(x_train.shape,y_train.shape)

print(x_test.shape,y_test.shape)
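
The slicing above holds out the first 5000 training images for validation and keeps the rest for training. A minimal sketch of the resulting shapes, assuming a placeholder array with the Fashion-MNIST training shape (`fake_images`, `fake_valid` and `fake_train` are illustrative names, not part of the original code):

```python
import numpy as np

# Placeholder with the Fashion-MNIST training shape: 60000 grayscale 28x28 images
fake_images = np.empty((60000, 28, 28), dtype=np.uint8)

# Same slicing as above: first 5000 for validation, remaining 55000 for training
fake_valid, fake_train = fake_images[:5000], fake_images[5000:]

print(fake_valid.shape, fake_train.shape)
```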

3. Normalize the data

# Standardize the data

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

# x_train: [None,28,28] -> [None*784,1] for scaling -> back to [None,28,28]

x_train_scaled = scaler.fit_transform(x_train.astype(np.float32).reshape(-1,1)).reshape(-1,28,28)

x_valid_scaled = scaler.transform(x_valid.astype(np.float32).reshape(-1,1)).reshape(-1,28,28)

x_test_scaled = scaler.transform(x_test.astype(np.float32).reshape(-1,1)).reshape(-1,28,28)

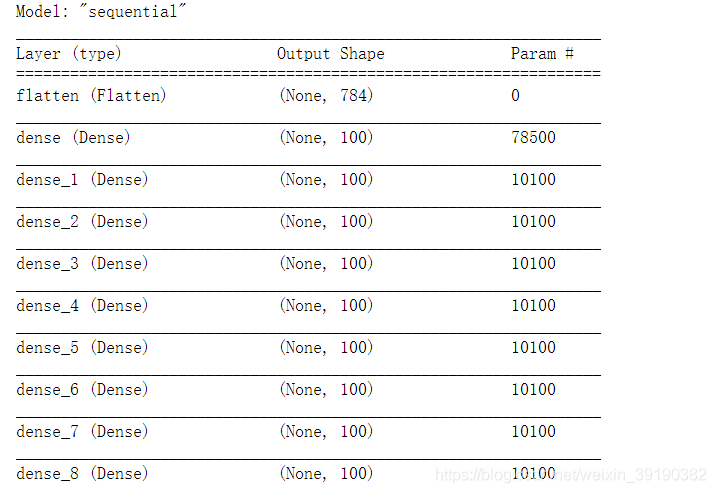
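
Because every array is reshaped to a single column with `reshape(-1,1)` before scaling, `StandardScaler` learns one global mean and one standard deviation over all training pixels, and the validation and test sets reuse those training statistics. A minimal NumPy sketch of the same arithmetic, assuming random stand-in images (`fake_train` and `fake_valid` are illustrative names, not part of the original code):

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in data: random "images" with pixel values in [0, 255]
fake_train = rng.integers(0, 256, size=(100, 28, 28)).astype(np.float32)
fake_valid = rng.integers(0, 256, size=(20, 28, 28)).astype(np.float32)

# One global mean and standard deviation, computed on the training set only
mean = fake_train.mean()
std = fake_train.std()

# The validation set is scaled with the training statistics, never its own
train_scaled = (fake_train - mean) / std
valid_scaled = (fake_valid - mean) / std

print(train_scaled.shape, train_scaled.mean(), train_scaled.std())
```

The scaled training set has mean close to 0 and standard deviation close to 1; the validation set is only approximately standardized, which is expected.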

4. Build the model

Note: dropout has been added.

# tf.keras.models.Sequential()

# Build the model

# Deep neural network

model = keras.models.Sequential()

# Flatten the input

model.add(keras.layers.Flatten(input_shape=[28,28]))

# 20 hidden layers

for _ in range(20):
    model.add(keras.layers.Dense(100,activation="selu")) # selu is self-normalizing

model.add(keras.layers.AlphaDropout(rate=0.5))

# AlphaDropout: 1. keeps the mean and variance unchanged 2. so the self-normalizing property is preserved

# model.add(keras.layers.Dropout(rate=0.5))

# Output layer

model.add(keras.layers.Dense(10,activation="softmax"))

# Compile the model

model.compile(loss='sparse_categorical_crossentropy',optimizer='sgd',metrics=['accuracy'])

model.summary()
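
The parameter total that `model.summary()` reports can be checked by hand: each Dense layer has one weight per input-output pair plus one bias per output, while Flatten and the dropout layers have no trainable parameters. A small sketch of that arithmetic (`layer_sizes` and `dense_params` are illustrative names):

```python
# Layer widths of the model above: 784 inputs, 20 hidden layers of 100 units, 10 outputs
layer_sizes = [28 * 28] + [100] * 20 + [10]

def dense_params(n_in, n_out):
    # A Dense layer has an n_in x n_out weight matrix plus n_out biases
    return n_in * n_out + n_out

per_layer = [dense_params(a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
total_params = sum(per_layer)

print(per_layer[0], per_layer[1], per_layer[-1], total_params)
```

The first hidden layer contributes 78500 parameters, each of the other 19 hidden layers 10100, and the output layer 1010, for 271410 in total.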

5. Training

# Callbacks: TensorBoard (takes a directory), EarlyStopping, ModelCheckpoint (takes a file name)

logdir = os.path.join("dnn-selu-callbacks")

print(logdir)

if not os.path.exists(logdir):
    os.mkdir(logdir)

# Model file name

output_model_file = os.path.join(logdir,"fashion_mnist_model.h5")

callbacks = [
    keras.callbacks.TensorBoard(logdir),
    keras.callbacks.ModelCheckpoint(output_model_file,save_best_only=True),
    keras.callbacks.EarlyStopping(patience=5,min_delta=1e-3),
]

# Start training

history = model.fit(x_train_scaled,y_train,epochs=10,validation_data=(x_valid_scaled,y_valid),callbacks=callbacks)

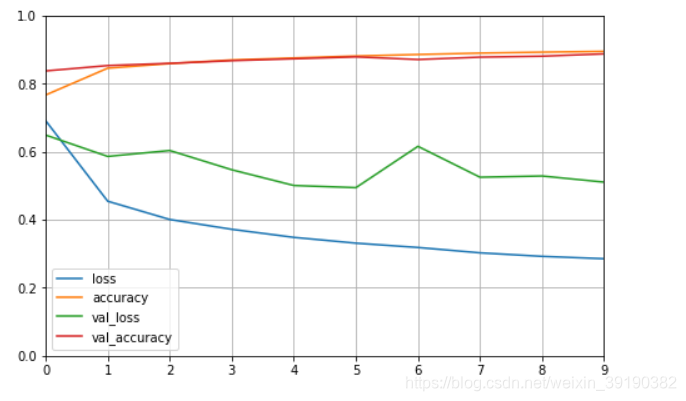
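
To make `patience=5` and `min_delta=1e-3` concrete: training stops once the monitored value (the validation loss by default) has gone 5 epochs in a row without improving by more than 0.001. The following is a simplified pure-Python sketch of that rule, not the Keras implementation; `early_stopping_epoch` and the loss values are made up for illustration:

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=1e-3):
    """Return the epoch index at which training would stop, or None if it never stops."""
    best = float("inf")
    wait = 0  # number of consecutive epochs without a sufficient improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            # Improved by more than min_delta: remember it and reset the counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# Hypothetical validation losses: a quick drop, then a long plateau
losses = [1.0, 0.8, 0.7999, 0.7998, 0.7997, 0.7996, 0.7995, 0.5]
stop_epoch = early_stopping_epoch(losses)
print(stop_epoch)
```

In this made-up run the tiny improvements on the plateau are all smaller than `min_delta`, so training would halt at epoch index 6 and the later drop to 0.5 is never reached.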

6. Learning curves

# Plot the training history

def plot_learning_curves(history):
    pd.DataFrame(history.history).plot(figsize=(8,5))
    plt.grid(True)
    plt.gca().set_ylim(0,1)
    plt.show()

plot_learning_curves(history)

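# Why the loss drops slowly at the start:

# 1. There are many parameters and they are not yet sufficiently trained

# 2. Vanishing gradients -> the chain rule multiplies many small factors across the layers

# Remedy: selu mitigates vanishing gradients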

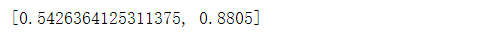
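
The self-normalizing behaviour of selu mentioned above can be illustrated with a forward pass through 20 random layers in NumPy. This is only a sketch under stated assumptions: standard-normal inputs, weights drawn with standard deviation 1/sqrt(fan_in) (LeCun-normal style, chosen here for illustration), no biases and no training. It looks at activations only and does not measure gradients.

```python
import numpy as np

# selu constants from the self-normalizing networks paper
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805

def selu(x):
    # scale * x for x > 0, scale * alpha * (exp(x) - 1) otherwise
    return SCALE * np.where(x > 0, x, ALPHA * np.expm1(np.minimum(x, 0.0)))

rng = np.random.default_rng(0)
x = rng.standard_normal((1000, 100))  # batch of 1000 samples, 100 features

for _ in range(20):
    # Assumed initialization: zero-mean weights with std = 1/sqrt(fan_in)
    w = rng.standard_normal((100, 100)) / np.sqrt(100)
    x = selu(x @ w)

print(x.mean(), x.var())
```

Under these assumptions the activations after 20 layers should still have mean near 0 and variance near 1, rather than collapsing toward zero or blowing up.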

7. Evaluate on the test set

model.evaluate(x_test_scaled,y_test)

8. Question

model.add(keras.layers.AlphaDropout(rate=0.5))

model.add(keras.layers.Dropout(rate=0.5))

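Note: AlphaDropout 1. keeps the mean and variance unchanged 2. so the self-normalizing property is preserved as well.

In plain words: for a selu network, AlphaDropout is the better choice!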
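
The difference between the two layers can be checked numerically. The sketch below re-implements both in NumPy for illustration only (it is not the Keras code): ordinary dropout zeroes units and rescales the survivors, while alpha dropout sets dropped units to selu's negative saturation value and then applies an affine correction `a * x + b`. The input is assumed to be standard normal, and the function names are illustrative.

```python
import numpy as np

ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805

def dropout(x, rate, rng):
    # Ordinary (inverted) dropout: zero out units, rescale the rest by 1 / (1 - rate)
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)

def alpha_dropout(x, rate, rng):
    keep = rng.random(x.shape) >= rate
    alpha_p = -ALPHA * SCALE  # value given to dropped units (selu's negative saturation)
    q = 1.0 - rate            # keep probability
    # Affine correction chosen so that mean 0 / variance 1 inputs stay that way
    a = (q + alpha_p ** 2 * q * rate) ** -0.5
    b = -a * alpha_p * rate
    return a * np.where(keep, x, alpha_p) + b

rng = np.random.default_rng(0)
x = rng.standard_normal(200_000)  # assumed input: mean 0, variance 1

plain = dropout(x, 0.5, rng)
alpha = alpha_dropout(x, 0.5, rng)

print(plain.mean(), plain.var())
print(alpha.mean(), alpha.var())
```

With `rate=0.5`, ordinary dropout keeps the mean near 0 but roughly doubles the variance, whereas alpha dropout keeps both the mean near 0 and the variance near 1, which is what a selu network relies on.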