0. Prerequisites

0.1 Preparing the virtual machines

0.1.1 Create the hadoop user and edit /etc/hosts

useradd hadoop

passwd hadoop

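Edit /etc/sudoers (visudo is the safer way to do this, because it checks the syntax before saving) and add the line hadoop ALL=(ALL) ALL

On every virtual machine, edit /etc/hosts and add the following entries.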

192.168.174.128 master

192.168.174.122 slave1

192.168.174.120 slave2
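Because the same three entries have to be added on every virtual machine, it can help to script the edit so that running it twice does not create duplicates. The following is a minimal sketch, not part of the original setup; the function name add_host_entry and its optional third argument (a hosts file path, useful for trying it on a copy) are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Append an "IP hostname" entry to a hosts file only if the hostname is absent.
# The third argument defaults to /etc/hosts; pass a copy to experiment safely.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Match the hostname as a whole whitespace-delimited field on a non-comment line.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        echo "skip: ${name} already present in ${file}"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
        echo "added: ${ip} ${name}"
    fi
}

# Usage (as root, on every VM):
#   add_host_entry 192.168.174.128 master
#   add_host_entry 192.168.174.122 slave1
#   add_host_entry 192.168.174.120 slave2
```

Writing to /etc/hosts itself requires root, so the usage lines would be run from a root shell or a script started with sudo.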

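0.1.2 Check the permissions of /etc/hosts

The hadoop user only needs to read /etc/hosts. Mode 644 (the usual default) is enough, so there is no need to make the file world-writable.

sudo chmod 644 /etc/hosts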

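0.2 Install jdk1.8.0_221

Hadoop requires a JDK, so install one before installing Hadoop. This guide uses jdk1.8.0_221; download it to the CentOS machine yourself.

0.2.1 Extract the archive

sudo mkdir -p /usr/lib/jvm

sudo tar -zxvf jdk1.8.0_221.tar.gz -C /usr/lib/jvm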

0.2.2 Edit /etc/profile

sudo vim /etc/profile

Add the following lines:

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_221

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH
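A mismatch between the directory the JDK was extracted into and the JAVA_HOME value is an easy mistake to make, so a quick check after running source /etc/profile can save time later. This is a small sketch under that assumption; check_java_home is a hypothetical helper name, not something shipped with the JDK or Hadoop.

```shell
#!/usr/bin/env bash
# Report whether a directory looks like a usable JAVA_HOME.
# With no argument, the current value of $JAVA_HOME is checked.
check_java_home() {
    local home="${1:-${JAVA_HOME:-}}"
    if [ -z "$home" ]; then
        echo "JAVA_HOME is not set"
        return 1
    fi
    if [ ! -x "${home}/bin/java" ]; then
        echo "no executable found at ${home}/bin/java"
        return 1
    fi
    echo "JAVA_HOME looks valid: ${home}"
}

# Usage:
#   source /etc/profile
#   check_java_home && java -version
```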

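0.3 Disable the firewall

systemctl stop firewalld # stop the firewall

systemctl disable firewalld # do not start it at boot

vim /etc/selinux/config # change SELINUX=enforcing to SELINUX=disabled (takes effect after a reboot)

firewall-cmd --state # check the firewall status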

1 Download hadoop-2.7.7.tar.gz

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz

2 Extract the Hadoop archive into /usr/local

sudo tar -xzvf hadoop-2.7.7.tar.gz -C /usr/local

sudo mv /usr/local/hadoop-2.7.7 /usr/local/hadoop

sudo chown -R hadoop:hadoop /usr/local/hadoop

3 Edit the configuration files

Files to edit:

- In the /usr/local/hadoop/etc/hadoop directory:

hadoop-env.sh

core-site.xml

hdfs-site.xml

mapred-site.xml

yarn-site.xml

slaves

1). hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_221

export HADOOP_HOME=/usr/local/hadoop

2). core-site.xml

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/tmp/</value>

</property>

</configuration>
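A misspelled property name in a site file is silently ignored by Hadoop, so it is worth reading the values back after editing. The sketch below is a simple line-oriented lookup written for files laid out like the ones in this guide; it is not a real XML parser, and get_property is a hypothetical helper name. Once Hadoop is on the PATH, hdfs getconf -confKey fs.defaultFS is the more reliable check.

```shell
#!/usr/bin/env bash
# Print the <value> that follows a given <name> in a Hadoop *-site.xml file.
# Assumes each <name> and <value> element sits on a single line.
get_property() {
    local key="$1" file="$2"
    awk -v key="$key" '
        /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
        /<value>/ {
            v = $0; gsub(/.*<value>|<\/value>.*/, "", v)
            if (n == key) { print v; exit }
        }
    ' "$file"
}

# Usage:
#   get_property fs.defaultFS /usr/local/hadoop/etc/hadoop/core-site.xml
```

An empty result means the key was not found, which usually points to a typo in the property name.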

3). hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.http-address</name>

<value>master:50070</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>slave1:50090</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/usr/local/hadoop/tmp/dfs/namenode_dir</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/usr/local/hadoop/tmp/dfs/datanode_dir</value>

</property>

</configuration>
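The dfs.replication value only makes sense if the cluster has at least that many DataNodes; with fewer, blocks stay under-replicated. The following sketch compares the two numbers, assuming one DataNode host per non-empty line in the slaves file. The function name check_replication is made up for this example.

```shell
#!/usr/bin/env bash
# Compare a replication factor with the number of hosts in a slaves file.
check_replication() {
    local replication="$1" slaves_file="$2" datanodes
    # Count non-empty lines: one DataNode host per line.
    datanodes=$(grep -c '[^[:space:]]' "$slaves_file" || true)
    if [ "$replication" -gt "$datanodes" ]; then
        echo "dfs.replication=${replication} exceeds ${datanodes} DataNode(s)"
        return 1
    fi
    echo "ok: dfs.replication=${replication}, DataNodes=${datanodes}"
}

# Usage:
#   check_replication 2 /usr/local/hadoop/etc/hadoop/slaves
```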

4). mapred-site.xml

Hadoop 2.7 ships only mapred-site.xml.template, so copy it to mapred-site.xml first. The file only needs to tell MapReduce to run on YARN:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

5). yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

6). slaves

slave1

slave2
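Every name in the slaves file has to resolve on the master, otherwise the start scripts cannot reach that host. As a rough sketch, the function below checks that each listed host also appears in a hosts file. The name check_slaves_in_hosts and the optional second argument are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Verify that every host listed in a slaves file has an entry in a hosts file.
# The hosts file defaults to /etc/hosts.
check_slaves_in_hosts() {
    local slaves="$1" hosts="${2:-/etc/hosts}" missing=0 host
    # The extra test keeps a final line that lacks a trailing newline.
    while read -r host || [ -n "$host" ]; do
        [ -z "$host" ] && continue
        if ! grep -Eq "^[^#]*[[:space:]]${host}([[:space:]]|\$)" "$hosts"; then
            echo "missing from ${hosts}: ${host}"
            missing=1
        fi
    done < "$slaves"
    return "$missing"
}

# Usage:
#   check_slaves_in_hosts /usr/local/hadoop/etc/hadoop/slaves
```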

- Edit /etc/profile and add the following settings

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

4 Passwordless SSH

Run this command on every host:

ssh-keygen -t rsa

Run the following on the master host:

cd ~/.ssh

cp id_rsa.pub authorized_keys

ssh-copy-id hadoop@slave1

ssh-copy-id hadoop@slave2

Run these commands on every host:

chmod 700 ~/.ssh

chmod 600 ~/.ssh/authorized_keys
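Before starting the cluster it is worth confirming that the master can log in to each host without a password prompt, since the Hadoop start scripts rely on it. The sketch below uses the standard OpenSSH options BatchMode and ConnectTimeout so that a host still asking for a password fails quickly instead of hanging. The function name check_passwordless_ssh and the SSH_CMD override (handy for dry runs) are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Try a non-interactive login as hadoop on each host given as an argument.
# SSH_CMD can be overridden to substitute another command for ssh.
check_passwordless_ssh() {
    local ssh_cmd="${SSH_CMD:-ssh}" failed=0 host
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "hadoop@${host}" true 2>/dev/null; then
            echo "ok: ${host}"
        else
            echo "FAILED: ${host}"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (on master, as the hadoop user):
#   check_passwordless_ssh master slave1 slave2
```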

5 Copy the configured Hadoop directory from master to the other hosts

scp -r /usr/local/hadoop hadoop@slave1:~/

scp -r /usr/local/hadoop hadoop@slave2:~/

On each slave host, move the hadoop directory into /usr/local/ (for example, sudo mv ~/hadoop /usr/local/) and edit /etc/profile as described above.

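6 Format the NameNode

hdfs namenode -format

Run this once, on master only. Do not format more than once. If formatting went wrong, delete the /usr/local/hadoop/tmp/dfs/namenode_dir/current directory and format again.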
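Since formatting twice is a common cause of trouble, a small guard can check whether the NameNode directory already holds a formatted image before the format command is run. This sketch relies on the fact that a formatted NameNode directory contains a current/VERSION file; the function name namenode_is_formatted is made up for illustration, and the default path is the dfs.namenode.name.dir value used in this guide.

```shell
#!/usr/bin/env bash
# Succeed if the given NameNode directory has already been formatted.
namenode_is_formatted() {
    local name_dir="${1:-/usr/local/hadoop/tmp/dfs/namenode_dir}"
    # A formatted NameNode directory contains current/VERSION.
    [ -f "${name_dir}/current/VERSION" ]
}

# Usage (on master):
#   if namenode_is_formatted; then
#       echo "NameNode already formatted, skipping"
#   else
#       hdfs namenode -format
#   fi
```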

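Common causes of errors:

- Formatting more than once

- Firewall not disabled

- Permissions on /etc/hosts not set for the hadoop user

- Mistakes in the configuration files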

7 Start Hadoop

start-all.sh

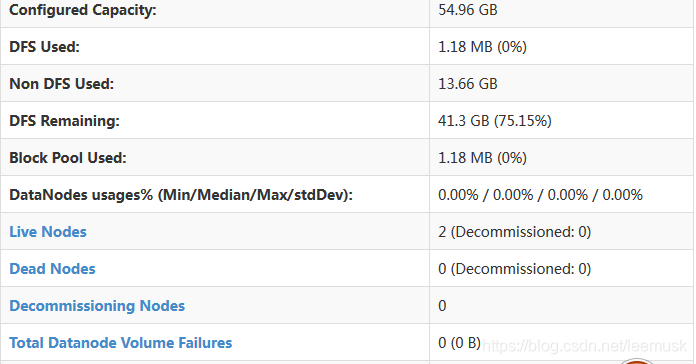
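After start-all.sh returns, the jps tool from the JDK lists the Java processes running on a host, which is a quick way to see whether the expected daemons came up. The sketch below reads jps output on standard input and reports any expected daemon that is absent. The function name missing_daemons is hypothetical, and the daemon lists in the usage comments are what the configuration in this guide would be expected to produce, not captured output.

```shell
#!/usr/bin/env bash
# Read `jps` output on stdin and report expected daemons that are not listed.
# Each jps line has the form "<pid> <ClassName>".
missing_daemons() {
    local listing daemon rc=0
    listing="$(cat)"
    for daemon in "$@"; do
        # -x matches the whole name, so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$listing" | awk '{print $2}' | grep -qx "$daemon"; then
            echo "not running: ${daemon}"
            rc=1
        fi
    done
    return "$rc"
}

# Usage:
#   jps | missing_daemons NameNode ResourceManager                  # on master
#   jps | missing_daemons DataNode NodeManager SecondaryNameNode    # on slave1
#   jps | missing_daemons DataNode NodeManager                      # on slave2
```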

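8 Check the web pages to confirm the setup works

With the settings above, the NameNode web UI is at http://master:50070. If the page loads and lists the DataNodes as live, the configuration succeeded!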