第四章:HDFS搭建客户端并测试

4.1 测试连接虚拟机

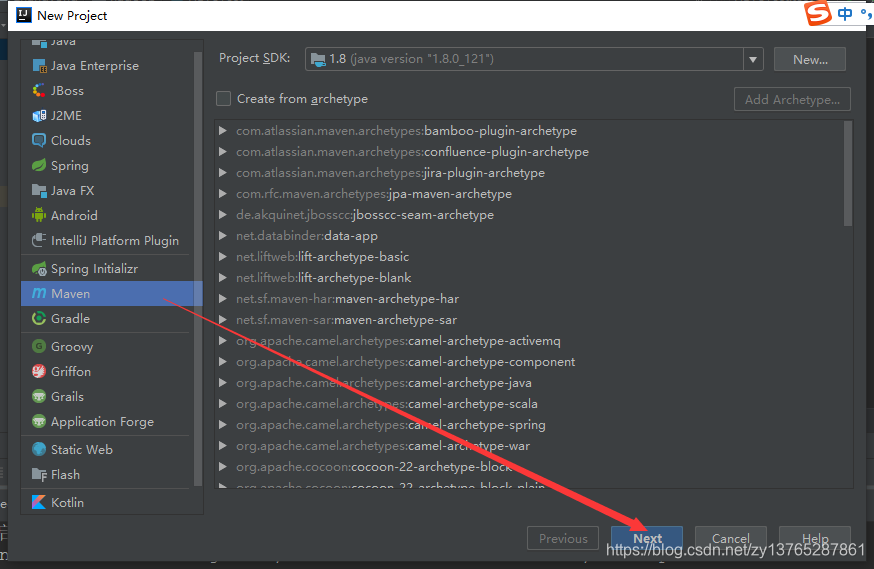

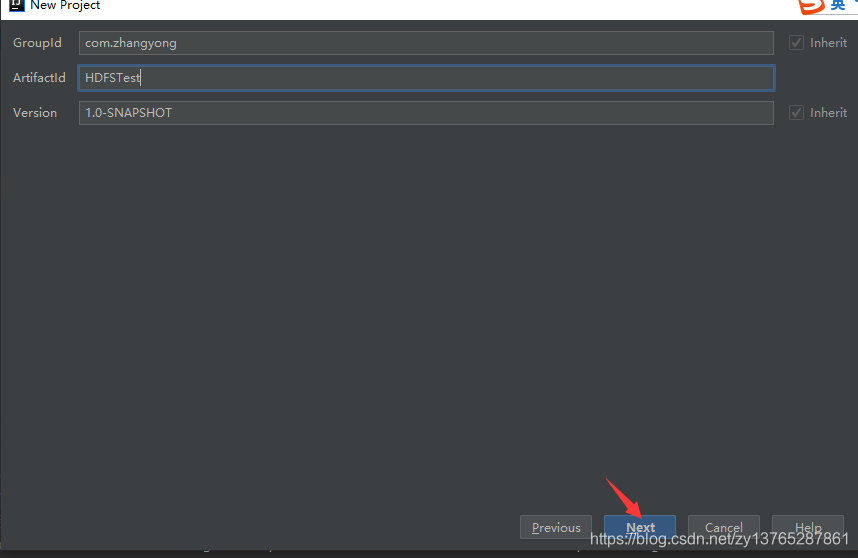

第一步:用IDEA创建Maven形式的Java项目

第二步:添加Maven依赖

在pom.xml添加HDFS的坐标,有些坐标用不了,但是我们后面的项目要用,可以全部先加进来

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-common</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-client</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-server-resourcemanager</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-jobclient</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-common</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>net.minidev</groupId>

<artifactId>json-smart</artifactId>

<version>2.3</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.12.1</version>

</dependency>

<dependency>

<groupId>org.anarres.lzo</groupId>

<artifactId>lzo-hadoop</artifactId>

<version>1.0.6</version>

</dependency>

</dependencies>

第三步:在resources目录下加入日志文件

log4j.properties

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

第四步:测试java代码

public class HDFSTest {

/**

* 测试java连接Hadoop的HDFS

* @throws URISyntaxException

* @throws IOException

*/

@Test

public void test() throws URISyntaxException, IOException {

//虚拟机连接名,必须在本地配置域名,不然只能IP地址访问

String hdfs = "hdfs://hadoop101:9000";

// 1 获取文件系统

Configuration cfg = new Configuration ();

FileSystem fs = FileSystem.get (new URI (hdfs), cfg);

System.out.println (cfg);

System.out.println (fs);

System.out.println("HDFS开启了!!!");

}

}

第五步:运行得到结果(表示成功)