大数据(bigData)

- 数据量级大,处理GB/TB/PB级别数据(存储、分析)

- 时效性,需要在一定的时间范围内计算出结果(几个小时以内)

- 数据多维度(多样性),存在形式:传感器采集信息、web运行日志、用户的行为数据。

- 数据可疑性,数据要有价值。需要对采集的数据做数据清洗、降噪

大数据解决问题?

- 存储

打破单机存储瓶颈(数量有限,数据不安全),读写效率低下(顺序化读写)。大数据提出以分布式存储做为大数据存储和指导思想,通过构建集群,实现对硬件做水平扩展提升系统存储能力。目前为止常用海量数据存储的解决方案:Hadoop HDFS、FastDFS/GlusterFS(传统文件系统)、MongoDB GridFS、S3等

- 计算

单机计算所能计算的数据量有限,而且所需时间无法控制。大数据提出一种新的思维模式,讲计算拆分成n个小计算任务,然后将计算任务分发给集群中各个计算节点,由各个计算几点合作完成计算,我们将该种计算模式称为分布式计算。目前主流的计算模式:离线计算、近实时计算、在线实时计算等。其中离线计算以Hadoop的MapReduce为代表、近实时计算以Spark内存计算为代表、在线实时计算以Storm、KafkaStream、SparkStream为代表。

总结: 以上均是以分布式的计算思维去解决问题,因为垂直提升硬件成本高,一般企业优先选择做分布式水平扩展。

Hadoop 诞生

Hadoop由 Apache Software Foundation 公司于 2005 年秋天作为Lucene的子项目Nutch的一部分正式引入。它受到最先由 Google Lab 开发的 Map/Reduce 和 Google File System(GFS) 的启发。人们重构Nutch项目中的存储模块和计算模块。2006 年Yahoo团队加入Nutch工程尝试将Nutch存储模块和计算模块剥离因此就产生Hadoop1.x版本。2010年yahoo重构了hadoop的计算模块,进一步优化Hadoop中MapReduce计算框架的管理模型,后续被称为Hadoop-2.x版本。hadoop-1.x和hadoop-2.x版本最大的区别是计算框架重构。因为Hadoop-2.x引入了Yarn作为hadoop2计算框架的资源调度器,使得MapReduce框架扩展性更好,运行管理更加可靠。

- HDFS 存储

- MapReduce 计算 (MR2 引入YARN资源管理器)

大数据生态圈

hadoop生态-2006(物资文明):

hdfshadoop的分布式文件存储系统mapreduce指的是hadoop的分布式计算hbase基于hdfs的一款nosql数据库flume分布式日志采集系统kafka分布式消息队列hive一款做数据仓库ETL(Extract-Transform-Load)工具,可以将用户编写的SQL翻译成MapReduce程序。- Storm 一款流计算框架,实现数据在线实时处理。

- Mahout:象夫(骑大象的人),基于MapReduce实现一套算法库,通常用于机器学习和人工智能。

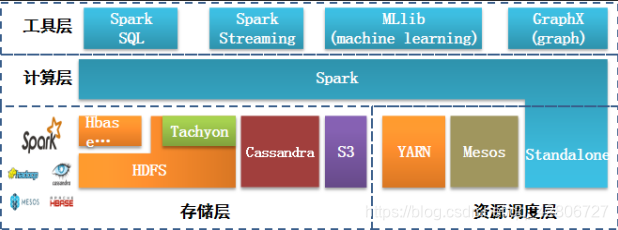

计算层面-2010年(精神文明)

Spark Core:吸取MapReduce框架设计的经验,有加州伯克利实验室使用Scala语言设计出了一个基于内存计算的分布式计算框架,计算性能几乎是hadoop的MapReduce的10~100倍。Spark SQL:使用SQL语句实现Spark的统计计算逻辑,增强交互性。Spark Stream:Spark流计算框架。- Spark MLib :基于Spark计算实现的机器学习语言库。

- Spark GraphX:图形化关系存储

大数据应用场景?

- 交通管理

- 电商系统(日志分析)

- 互联网医疗

- 金融信息

- 国家电网

Hadoop Distribute FileSystem

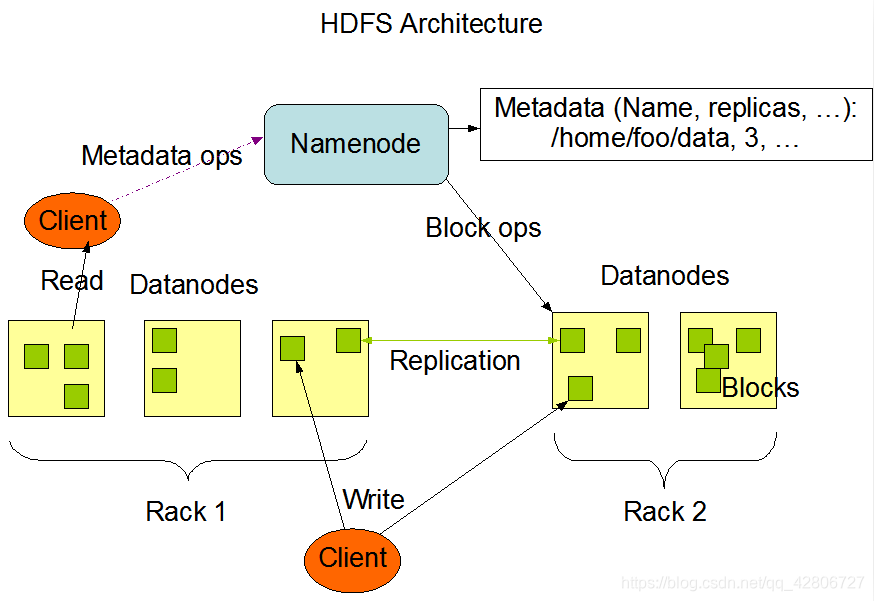

HDFS 架构

The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on commodity hardware(商用硬件).Hardware failure is the norm rather than the exception. An HDFS instance may consist of hundreds or thousands of server machines, each storing part of the file system’s data. The fact that there are a huge number of components and that each component has a non-trivial probability of failure means that some component of HDFS is always non-functional. Therefore, detection of faults and quick, automatic recovery from them is a core architectural goal of HDFS.

- Namenode和DataNode

HDFS has a master/slave architecture. An HDFS cluster consists of a single NameNode, a master server that manages the file system namespace and regulates access to files by clients. In addition, there are a number of DataNodes, usually one per node in the cluster, which manage storage attached to the nodes that they run on. HDFS exposes a file system namespace and allows user data to be stored in files. Internally, a file is split into one or more blocks and these blocks are stored in a set of DataNodes. The NameNode executes file system namespace operations like opening, closing, and renaming files and directories. It also determines the mapping of blocks to DataNodes. The DataNodes are responsible for serving read and write requests from the file system’s clients. The DataNodes also perform block creation, deletion, and replication upon instruction from the NameNode.

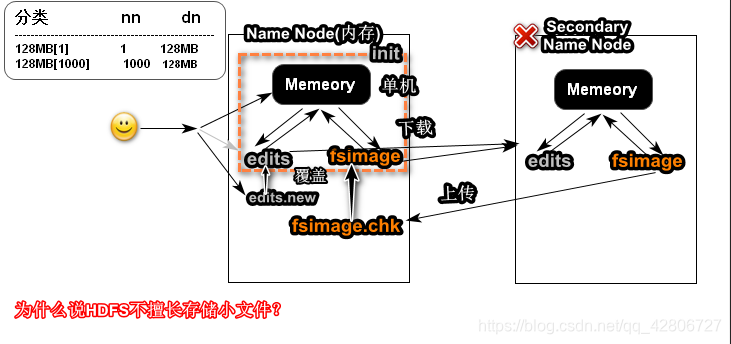

namenode:命名节点,用于管理集群中元数据(描述了数据块到datanode的映射关系以及块的副本信息)以及DataNode。控制数据块副本数(副本因子)可以配置dfs.replication参数,如果是单机模式需要配置1,其次nenodenode的client访问入口fs.defaultFS。在第一次搭建hdfs的时候需要执行hdfs namenode -foramt作用就是为namenode创建元数据初始化文件fsimage.

dataNode:存储block数据,响应客户端对block读写请求,执行namenode指令,同时向namenode汇报自身的状态信息。

block:文件的切割单位默认是128MB,可以通过配置dfs.blocksize

Rack: 机架,用于优化存储和计算,标识datanode所在物理主机的位置信息,可以通过hdfs dfsadmin -printTopology

- namenode & secondarynamenode

阅读:http://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-hdfs/HdfsDesign.html

Hadoop HDFS(分布式存储)搭建

- 配置主机名和IP的映射关系

[root@CentOS ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.29.128 CentOS

- 关闭系统防火墙

[root@CentOS ~]# clear

[root@CentOS ~]# service iptables stop

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Flushing firewall rules: [ OK ]

iptables: Unloading modules: [ OK ]

[root@CentOS ~]# chkconfig iptables off

[root@CentOS ~]# chkconfig --list | grep iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off

[root@CentOS ~]#

- 配置本机SSH免密码登录

[root@CentOS ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

de:83:c8:81:77:d7:db:f2:79:da:97:8b:36:d5:78:01 root@CentOS

The key's randomart image is:

+--[ RSA 2048]----+

| |

| E |

| . |

| . . . |

| . o S . . .o|

| o = + o..o|

| o o o o o..|

| . =.+o|

| ..=++|

+-----------------+

[root@CentOS ~]# ssh-copy-id CentOS

root@centos's password: ****

Now try logging into the machine, with "ssh 'CentOS'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting

[root@CentOS ~]# ssh CentOS

Last login: Mon Oct 15 19:47:46 2018 from 192.168.29.1

[root@CentOS ~]# exit

logout

Connection to CentOS closed.

- 配置JAVA开发环境

[root@CentOS ~]# rpm -ivh jdk-8u171-linux-x64.rpm

Preparing... ########################################### [100%]

1:jdk1.8 ########################################### [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

[root@CentOS ~]# vi .bashrc

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

[root@CentOS ~]# source .bashrc

[root@CentOS ~]# jps

1674 Jps

- 配置安装hadoop hdfs

[root@CentOS ~]# tar -zxf hadoop-2.6.0_x64.tar.gz -C /usr/

HADOOP_HOME=/usr/hadoop-2.6.0

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

export HADOOP_HOME

[root@CentOS ~]# source .bashrc

[root@CentOS ~]# hadoop classpath

/usr/hadoop-2.6.0/etc/hadoop:/usr/hadoop-2.6.0/share/hadoop/common/lib/*:/usr/hadoop-2.6.0/share/hadoop/common/*:/usr/hadoop-2.6.0/share/hadoop/hdfs:/usr/hadoop-2.6.0/share/hadoop/hdfs/lib/*:/usr/hadoop-2.6.0/share/hadoop/hdfs/*:/usr/hadoop-2.6.0/share/hadoop/yarn/lib/*:/usr/hadoop-2.6.0/share/hadoop/yarn/*:/usr/hadoop-2.6.0/share/hadoop/mapreduce/lib/*:/usr/hadoop-2.6.0/share/hadoop/mapreduce/*:/usr/hadoop-2.6.0/contrib/capacity-scheduler/*.jar

- 修改core-site.xml文件

[root@CentOS ~]# vi /usr/hadoop-2.6.0/etc/hadoop/core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://CentOS:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/hadoop-2.6.0/hadoop-${user.name}</value>

</property>

- 修改hdfs-site.xml

[root@CentOS ~]# vi /usr/hadoop-2.6.0/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

- 修改slaves文本文件

[root@CentOS ~]# vi /usr/hadoop-2.6.0/etc/hadoop/slaves

CentOS

- 初始化HDFS

[root@CentOS ~]# hdfs namenode -format

...

18/10/15 20:06:03 INFO namenode.NNConf: ACLs enabled? false

18/10/15 20:06:03 INFO namenode.NNConf: XAttrs enabled? true

18/10/15 20:06:03 INFO namenode.NNConf: Maximum size of an xattr: 16384

18/10/15 20:06:03 INFO namenode.FSImage: Allocated new BlockPoolId: BP-665637298-192.168.29.128-1539605163213

`18/10/15 20:06:03 INFO common.Storage: Storage directory /usr/hadoop-2.6.0/hadoop-root/dfs/name has been successfully formatted.`

18/10/15 20:06:03 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/10/15 20:06:03 INFO util.ExitUtil: Exiting with status 0

18/10/15 20:06:03 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at CentOS/192.168.29.128

************************************************************/

[root@CentOS ~]# ls /usr/hadoop-2.6.0/

bin `hadoop-root` lib LICENSE.txt README.txt share

etc include libexec NOTICE.txt sbin

只需要在第一次启动HDFS的时候执行,以后重启无需执行该命令

- 启动HDFS服务

[root@CentOS ~]# start-dfs.sh

Starting namenodes on [CentOS]

CentOS: starting namenode, logging to /usr/hadoop-2.6.0/logs/hadoop-root-namenode-CentOS.out

CentOS: starting datanode, logging to /usr/hadoop-2.6.0/logs/hadoop-root-datanode-CentOS.out

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

RSA key fingerprint is 7c:95:67:06:a7:d0:fc:bc:fc:4d:f2:93:c2:bf:e9:31.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (RSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /usr/hadoop-2.6.0/logs/hadoop-root-secondarynamenode-CentOS.out

[root@CentOS ~]# jps

2132 SecondaryNameNode

1892 NameNode

2234 Jps

1998 DataNode

用户可以通过浏览器访问:http://192.168.29.128:50070

HDFS Shell

[root@CentOS ~]# hadoop fs -help | hdfs dfs -help

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-copyFromLocal [-f] [-p] [-l] <localsrc> ... <dst>]

[-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-cp [-f] [-p | -p[topax]] <src> ... <dst>]

[-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-help [cmd ...]]

[-ls [-d] [-h] [-R] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] <localsrc> ... <dst>]

[-rm [-f] [-r|-R] [-skipTrash] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-tail [-f] <file>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-usage [cmd ...]]

[root@CentOS ~]# hadoop fs -copyFromLocal /root/hadoop-2.6.0_x64.tar.gz /

[root@CentOS ~]# hdfs dfs -copyToLocal /hadoop-2.6.0_x64.tar.gz ~/

参考:http://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/FileSystemShell.html

Java API 操作HDFS

-

Windows开发环境搭建

- 解压hadoop的安装包到C:/

- 配置HADOOP_HOME环境变量

- 拷贝

winutils.exe和hadoop.dll文件到hadoop安装目录的bin目录下 - windows上配置CentOS主机名和IP的映射关系

- 重启IDEA,以便系统识别

HADOOP_HOME环境变量

-

导入Maven依赖

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

</dependency>

- 关闭hdfs权限

org.apache.hadoop.security.AccessControlException: Permission denied: user=HIAPAD, access=WRITE, inode="/":root:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkFsPermission(FSPermissionChecker.java:271)

...

配置hdfs-site.xml(方案1)

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

修改JVM启动参数配置-DHADOOP_USER_NAME=root(方案2)

java xxx -DHADOOP_USER_NAME=root

执行chmod修改目录读写权限

[root@CentOS ~]# hdfs dfs -chmod -R 777 /

- java API 案例

private FileSystem fileSystem;

private Configuration conf;

@Before

public void before() throws IOException {

conf=new Configuration();

conf.set("fs.defaultFS","hdfs://CentOS:9000");

conf.set("dfs.replication","1");

conf.set("fs.trash.interval","1");

fileSystem=FileSystem.get(conf);

assertNotNull(fileSystem);

}

@Test

public void testUpload01() throws IOException {

InputStream is=new FileInputStream("C:\\Users\\HIAPAD\\Desktop\\买家须知.txt");

Path path = new Path("/bb.txt");

OutputStream os=fileSystem.create(path);

IOUtils.copyBytes(is,os,1024,true);

/*byte[] bytes=new byte[1024];

while (true){

int n=is.read(bytes);

if(n==-1) break;

os.write(bytes,0,n);

}

os.close();

is.close();*/

}

@Test

public void testUpload02() throws IOException {

Path src=new Path("file:///C:\\Users\\HIAPAD\\Desktop\\买家须知.txt");

Path dist = new Path("/dd.txt");

fileSystem.copyFromLocalFile(src,dist);

}

@Test

public void testDownload01() throws IOException {

Path dist=new Path("file:///C:\\Users\\HIAPAD\\Desktop\\11.txt");

Path src = new Path("/dd.txt");

fileSystem.copyToLocalFile(src,dist);

//fileSystem.copyToLocalFile(false,src,dist,true);

}

@Test

public void testDownload02() throws IOException {

OutputStream os=new FileOutputStream("C:\\Users\\HIAPAD\\Desktop\\22.txt");

InputStream is = fileSystem.open(new Path("/dd.txt"));

IOUtils.copyBytes(is,os,1024,true);

}

@Test

public void testDelete() throws IOException {

Path path = new Path("/dd.txt");

fileSystem.delete(path,true);

}

@Test

public void testListFiles() throws IOException {

Path path = new Path("/");

RemoteIterator<LocatedFileStatus> files = fileSystem.listFiles(path, true);

while(files.hasNext()){

LocatedFileStatus file = files.next();

System.out.println(file.getPath()+" "+file.getLen()+" "+file.isFile());

}

}

@Test

public void testDeleteWithTrash() throws IOException {

Trash trash=new Trash(conf);

trash.moveToTrash(new Path("/aa.txt"));

}

@After

public void after() throws IOException {

fileSystem.close();

}

下一篇:Hadoop之MapReduce