Once the Hadoop environment variables are configured on the system, the commands under bin and sbin can be run from any directory.
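As a minimal sketch of that setup, the lines below could be appended to a shell profile such as /etc/profile or ~/.bashrc. The install path /opt/hadoop-2.8.5 is an assumption made for illustration; substitute the directory where Hadoop is actually unpacked.

```shell
# Assumed install location; adjust to where Hadoop is actually unpacked.
export HADOOP_HOME=/opt/hadoop-2.8.5
# Put the scripts in bin (hdfs, hadoop) and sbin (start-dfs.sh and friends)
# on the search path so they can be called from any directory.
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"
```

After reloading the profile (for example with `source /etc/profile`), `hdfs version` should resolve from any working directory.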

- hdfs

Usage: hdfs [--config confdir] [--loglevel loglevel] COMMAND

where COMMAND is one of:

dfs run a filesystem command on the file systems supported in Hadoop.

classpath prints the classpath

namenode -format format the DFS filesystem

secondarynamenode run the DFS secondary namenode

namenode run the DFS namenode

journalnode run the DFS journalnode

zkfc run the ZK Failover Controller daemon

datanode run a DFS datanode

debug run a Debug Admin to execute HDFS debug commands

dfsadmin run a DFS admin client

haadmin run a DFS HA admin client

fsck run a DFS filesystem checking utility

balancer run a cluster balancing utility

jmxget get JMX exported values from NameNode or DataNode.

mover run a utility to move block replicas across storage types

oiv apply the offline fsimage viewer to an fsimage

oiv_legacy apply the offline fsimage viewer to an legacy fsimage

oev apply the offline edits viewer to an edits file

fetchdt fetch a delegation token from the NameNode

getconf get config values from configuration

groups get the groups which users belong to

snapshotDiff diff two snapshots of a directory or diff the current directory contents with a snapshot

lsSnapshottableDir list all snapshottable dirs owned by the current user. Use -help to see options

portmap run a portmap service

nfs3 run an NFS version 3 gateway

cacheadmin configure the HDFS cache

crypto configure HDFS encryption zones

storagepolicies list/get/set block storage policies

version print the version

Most commands print help when invoked w/o parameters.

- hdfs dfs: starts the file system shell client

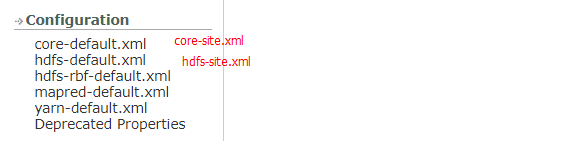

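By default, hdfs dfs operates on the local file system. To make it operate on HDFS, change the default file system in core-site.xml (the fs.defaultFS property), as described in the configuration reference on the official site. The usage of the shell client is: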
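A hedged sketch of the default file system setting follows. The property name fs.defaultFS is the standard Hadoop key, and the address hdfs://linux01:9000 is taken from the sample output later in these notes. The variable name DEFAULT_FS and the guard around the hdfs calls are added only for illustration.

```shell
# Property to place inside <configuration> in core-site.xml
# (linux01:9000 is the NameNode address seen in this document's sample output):
#
#   <property>
#     <name>fs.defaultFS</name>
#     <value>hdfs://linux01:9000</value>
#   </property>

# Illustrative variable holding the same URI.
DEFAULT_FS="hdfs://linux01:9000"

if command -v hdfs >/dev/null 2>&1; then
  # Print the default file system the client currently resolves.
  hdfs getconf -confKey fs.defaultFS
  # An explicit URI addresses HDFS regardless of the configured default.
  hdfs dfs -ls "$DEFAULT_FS/" || echo "could not list $DEFAULT_FS/"
else
  echo "hdfs is not on PATH; skipping the check"
fi
```

If getconf reports file:///, the client is still using the local file system and a plain `hdfs dfs -ls /` lists the local root directory.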

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>] (in HDFS, content can only be appended to the end of a file)

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] [-l] [-d] <localsrc> ... <dst>]

[-copyToLocal [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] [-h] [-v] [-t [<storage type>]] [-u] [-x] <path> ...]

[-cp [-f] [-p | -p[topax]] [-d] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] [-x] <path> ...]

[-expunge]

[-find <path> ... <expression> ...]

[-get [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getfattr [-R] {-n name | -d} [-e en] <path>]

[-getmerge [-nl] [-skip-empty-file] <src> <localdst>]

[-help [cmd ...]]

[-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] [-d] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] [-safely] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setfattr {-n name [-v value] | -x name} <path>]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-truncate [-w] <length> <path> ...]

[-usage [cmd ...]]
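To illustrate the append-only note on -appendToFile in the listing above, here is a small sketch. The HDFS path /demo/notes.txt and the temporary local files are hypothetical, and the calls are skipped when hdfs is not installed.

```shell
# Hypothetical names used only for this illustration.
FIRST_PART="$(mktemp)"
SECOND_PART="$(mktemp)"
HDFS_FILE="/demo/notes.txt"

printf 'first line\n' > "$FIRST_PART"
printf 'second line\n' > "$SECOND_PART"

append_demo() {
  hdfs dfs -mkdir -p /demo || return 1
  # Upload the initial content, overwriting any earlier copy.
  hdfs dfs -put -f "$FIRST_PART" "$HDFS_FILE" || return 1
  # Add the second file's bytes to the end of the HDFS file.
  hdfs dfs -appendToFile "$SECOND_PART" "$HDFS_FILE" || return 1
  # Show the combined content.
  hdfs dfs -cat "$HDFS_FILE"
}

if command -v hdfs >/dev/null 2>&1; then
  append_demo || echo "a step failed; check that the NameNode is reachable"
else
  echo "hdfs is not on PATH; skipping"
fi

rm -f "$FIRST_PART" "$SECOND_PART"
```

The shell offers no command for editing the middle of an existing HDFS file; changing earlier content means uploading a replacement with `-put -f`.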

Commands

1 hdfs dfs -ls lists the contents of a directory

2 hdfs dfs -df -h

Filesystem Size Used Available Use%

hdfs://linux01:9000 51.0 G 711.1 M 40.4 G 1%

3 hdfs dfs -du -h

[root@linux01 ~]# hdfs dfs -du /hadoop-2.8.5.tar.gz

246543928 /hadoop-2.8.5.tar.gz

4 hdfs dfs -mkdir -p creates nested directories, including any missing parent directories

5 hdfs dfs -rm -r

[root@linux01 ~]# hdfs dfs -rm -r /a/

Deleted /a

[root@linux01 ~]# hdfs dfs -rm -r hdfs://linux01:9000/*

Deleted hdfs://linux01:9000/data

Deleted hdfs://linux01:9000/hadoop-2.8.5.tar.gz

Deleted hdfs://linux01:9000/hui

6 hdfs dfs -put <local path> <HDFS path>

7 hdfs dfs -get <HDFS path> <local path>
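The commands above can be combined into a short round trip. This is only a sketch: the HDFS directory /demo/a/b, the file names, and the function name run_demo are hypothetical, and the hdfs calls are skipped when the client is not installed.

```shell
# Hypothetical names used only for this illustration.
WORK_DIR="$(mktemp -d)"
HDFS_DIR="/demo/a/b"

printf 'hello hdfs\n' > "$WORK_DIR/in.txt"

run_demo() {
  # Create /demo, /demo/a and /demo/a/b in one call.
  hdfs dfs -mkdir -p "$HDFS_DIR" || return 1
  # Upload: local path first, HDFS path second.
  hdfs dfs -put -f "$WORK_DIR/in.txt" "$HDFS_DIR/" || return 1
  # Inspect the result: recursive listing and human-readable sizes.
  hdfs dfs -ls -R /demo || return 1
  hdfs dfs -du -h "$HDFS_DIR" || return 1
  # Download: HDFS path first, local path second.
  hdfs dfs -get "$HDFS_DIR/in.txt" "$WORK_DIR/out.txt" || return 1
  # The downloaded copy should be byte-identical to the upload.
  cmp "$WORK_DIR/in.txt" "$WORK_DIR/out.txt" || return 1
  # Remove only the scratch directory created by this demo.
  hdfs dfs -rm -r /demo
}

if command -v hdfs >/dev/null 2>&1; then
  run_demo || echo "a step failed; check that the NameNode is reachable"
else
  echo "hdfs is not on PATH; skipping"
fi
# WORK_DIR is left in place so the local files can be inspected.
```

Limiting `-rm -r` to a scratch directory is a deliberate choice here. A wildcard at the root, as in the `-rm -r hdfs://linux01:9000/*` call shown earlier, removes every top-level entry in the file system.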