Support Vector Machines (SVM)

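| Support Vector Machines |
|---|
| Pros: low generalization error, low computational cost, results are easy to interpret |
| Cons: sensitive to parameter tuning and to the choice of kernel; without modification, the basic classifier only handles two-class problems |
| Works with: numeric and nominal values |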

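| General approach to SVMs |
|---|
| 1. Collect: any method. |
| 2. Prepare: numeric values are needed. |
| 3. Analyze: it helps to visualize the separating hyperplane. |
| 4. Train: most of the time is spent here; this step mainly tunes two parameters. |
| 5. Test: a very simple calculation. |
| 6. Use: an SVM can be applied to almost any classification problem. Note that an SVM is a binary classifier, so applying it to multiclass problems requires some changes to the code. |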

在开始SVM的代码之前,要先知道几个概念和算法:

-

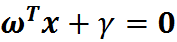

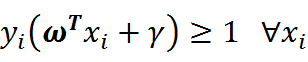

决策面方程(超平面方程):

超平面即分隔数据点的平面

其中

-

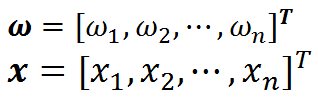

分隔间隔方程:

分隔间隔即支持向量到分隔平面的垂直距离
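A minimal NumPy sketch of the two formulas above, assuming the usual form $w^T x + b = 0$ for the hyperplane (the notation in the figures may differ): the sign of $w^T x + b$ tells which side of the hyperplane a point lies on, and $|w^T x + b| / \|w\|$ is its perpendicular distance. The hyperplane and points are made-up values, and the function names are hypothetical.

```python
import numpy as np

def decision_value(w, b, x):
    """w^T x + b: positive on one side of the hyperplane, negative on the other."""
    return float(np.dot(w, x) + b)

def distance_to_hyperplane(w, b, x):
    """Perpendicular distance |w^T x + b| / ||w|| from point x to the hyperplane."""
    return abs(decision_value(w, b, x)) / np.linalg.norm(w)

# Hypothetical hyperplane x1 + x2 - 2 = 0, i.e. w = (1, 1), b = -2
w = np.array([1.0, 1.0])
b = -2.0
for x in [np.array([3.0, 3.0]), np.array([0.0, 0.0])]:
    side = np.sign(decision_value(w, b, x))  # +1 or -1: which side of the plane
    print(x, side, distance_to_hyperplane(w, b, x))
```

The point (3, 3) lands on the positive side at distance 4/√2, and the origin on the negative side at distance 2/√2.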

-

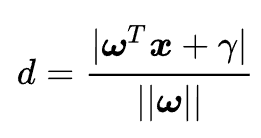

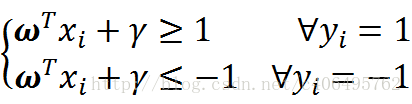

SVM最优化问题的约束条件:

整合后即为
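Assuming the combined constraint has the standard form $y_i(w^T x_i + b) \ge 1$ with labels $y_i \in \{-1, +1\}$ (the same ±1 labels that testSet.txt uses later), it can be checked numerically. This sketch, on hypothetical toy data, computes each sample's functional margin $y_i(w^T x_i + b)$, flags the samples lying exactly on the margin (the support vectors), and reports the margin width $2/\|w\|$.

```python
import numpy as np

def satisfies_constraints(w, b, X, y, tol=1e-9):
    """Return (all_ok, functional_margins) for the constraints y_i (w^T x_i + b) >= 1."""
    functional_margins = y * (X @ w + b)
    return bool(np.all(functional_margins >= 1 - tol)), functional_margins

def margin_width(w):
    """Distance between the planes w^T x + b = 1 and w^T x + b = -1, i.e. 2 / ||w||."""
    return 2.0 / np.linalg.norm(w)

# Hypothetical toy data: two points per class, labels +1 / -1
X = np.array([[1.0, 1.0], [2.0, 2.0], [-1.0, -1.0], [-2.0, -2.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])
w = np.array([0.5, 0.5])
b = 0.0

ok, fm = satisfies_constraints(w, b, X, y)
print(ok, fm)                              # every functional margin is >= 1
print(np.where(np.isclose(fm, 1.0))[0])    # samples exactly on the margin: support vectors
print(margin_width(w))
# A shorter w would give a wider margin, but it violates the constraints:
print(satisfies_constraints(np.array([0.25, 0.25]), 0.0, X, y)[0])
```

This is why maximizing the margin is the same as making $\|w\|$ as small as the constraints allow.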

-

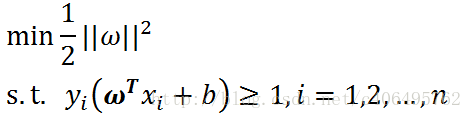

线性SVM优化问题基本描述:

我们将分隔间隔方程进行等效变换后,再结合约束条件,就可以整合得到基本描述了(所有的更详细的步骤和证明可以移步到最后的网址)

-

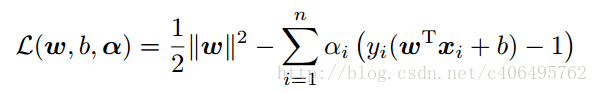

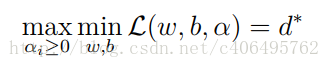

拉格朗日函数:

对优化问题进行拉格朗日处理

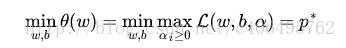

而我们的目的就是求新目标函数的最小值

只是直接求解比较困难,我们再进行对偶处理,得到

对该函数求解,先使得L关于w,b最小化,分别对w,b求导并使其等于零,即为满足L(w,b,α)关于w和b最小化的条件,代入原函数得

这就是最新得到的优化问题函数 -
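As a sanity check of the derivation, here is a hypothetical two-point problem small enough to solve by hand. With one point per class, $\sum_i \alpha_i y_i = 0$ forces $\alpha_1 = \alpha_2$, so the dual reduces to a one-variable maximization. The sketch uses a plain grid search in place of SMO, purely for illustration, and then recovers $w = \sum_i \alpha_i y_i x_i$ and $b$.

```python
import numpy as np

# Hypothetical toy problem: one point per class
X = np.array([[1.0, 1.0], [-1.0, -1.0]])
y = np.array([1.0, -1.0])

def dual_objective(alpha, X, y):
    """sum_i alpha_i - 1/2 * sum_i sum_j alpha_i alpha_j y_i y_j x_i^T x_j."""
    K = X @ X.T                  # Gram matrix: K[i, j] = x_i^T x_j
    ay = alpha * y
    return alpha.sum() - 0.5 * (ay @ K @ ay)

# With two points, sum_i alpha_i y_i = 0 forces alpha_1 = alpha_2 = a,
# so a 1-D grid search over a >= 0 stands in for SMO here.
grid = np.linspace(0.0, 1.0, 1001)
scores = [dual_objective(np.array([a, a]), X, y) for a in grid]
a_best = grid[int(np.argmax(scores))]
alpha = np.array([a_best, a_best])

w = (alpha * y) @ X             # w = sum_i alpha_i y_i x_i
b = y[0] - w @ X[0]             # x_0 is a support vector: y_0 (w^T x_0 + b) = 1 with y_0 = +1
print(a_best, w, b)
```

For these two points the maximum is at α = 0.25, giving w = (0.5, 0.5) and b = 0, so the margin 2/||w|| equals the distance between the two points.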

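- Overall approach to the SVM optimization problem:

① Define the goal: we want the hyperplane with the largest margin, so we need the w and b in its equation;

② Equivalent transformation: solving this directly is inconvenient, so we transform it into the equivalent problem of minimizing ||w||;

③ Constraints: the constraints are the classification conditions that every sample must satisfy;

④ Lagrangian: we build a Lagrangian from ||w|| and the constraints; it is still hard to solve, so we take its Lagrangian dual;

⑤ The simplified optimization problem: we first minimize L(w,b,α) with respect to w and b, then maximize over α, and finally use the SMO algorithm to find the Lagrange multipliers of the dual problem;

⑥ Solving the dual: to minimize L(w,b,α) with respect to w and b, we take the partial derivatives of L with respect to w and b; substituting them back gives a new function L(α) in which the αi are the only variables;

⑦ Sequential minimal optimization (SMO): this algorithm solves L(α) for α; from α we can then compute w and b, which achieves our original goal of finding the separating hyperplane, i.e. the "decision surface".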

SMO算法:

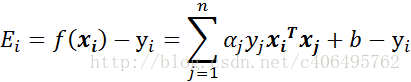

①计算误差:

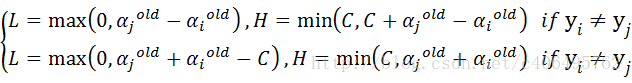

②计算上下界L和H:

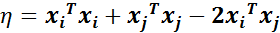

③计算学习效率η:

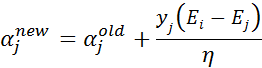

④更新αj:

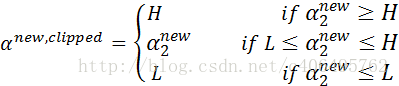

⑤根据取值范围修剪αj:

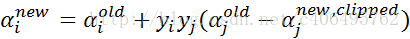

⑥更新αi:

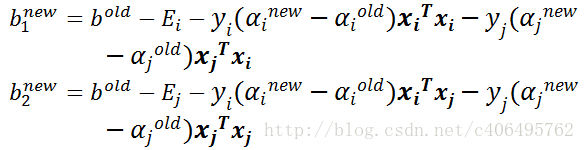

⑦更新b1和b2:

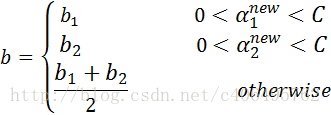

⑧根据b1和b2更新b:
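To see how the eight steps fit together, here is a sketch of one SMO update on a single pair (i, j), written with a precomputed kernel matrix K and the same sign convention for η as the smoSimple and innerL code in this post. The function name `smo_pair_update` and the toy data are hypothetical, and the sketch leaves out the "j not moving enough" check used in the full code.

```python
import numpy as np

def smo_pair_update(i, j, alphas, b, y, K, C):
    """One SMO update of the pair (alpha_i, alpha_j) following steps 1-8.
    K is a precomputed kernel (Gram) matrix. Returns (alphas, b, changed)."""
    alphas = alphas.copy()
    f = (alphas * y) @ K + b                     # f(x_k) = sum_n alpha_n y_n K[n, k] + b
    Ei, Ej = f[i] - y[i], f[j] - y[j]            # 1. errors
    if y[i] != y[j]:                             # 2. bounds L and H
        L, H = max(0.0, alphas[j] - alphas[i]), min(C, C + alphas[j] - alphas[i])
    else:
        L, H = max(0.0, alphas[j] + alphas[i] - C), min(C, alphas[j] + alphas[i])
    eta = 2.0 * K[i, j] - K[i, i] - K[j, j]      # 3. eta (negative for a valid step)
    if L == H or eta >= 0:
        return alphas, b, False                  # this pair cannot make progress
    ai_old, aj_old = alphas[i], alphas[j]
    alphas[j] = aj_old - y[j] * (Ei - Ej) / eta  # 4. update alpha_j
    alphas[j] = min(H, max(L, alphas[j]))        # 5. clip alpha_j to [L, H]
    alphas[i] = ai_old + y[i] * y[j] * (aj_old - alphas[j])  # 6. update alpha_i
    dai, daj = alphas[i] - ai_old, alphas[j] - aj_old
    b1 = b - Ei - y[i] * dai * K[i, i] - y[j] * daj * K[i, j]  # 7. candidate thresholds
    b2 = b - Ej - y[i] * dai * K[i, j] - y[j] * daj * K[j, j]
    if 0 < alphas[i] < C:                        # 8. choose b
        b = b1
    elif 0 < alphas[j] < C:
        b = b2
    else:
        b = (b1 + b2) / 2.0
    return alphas, b, True

# Hypothetical two-point problem with a linear kernel
X = np.array([[1.0, 1.0], [-1.0, -1.0]])
y = np.array([1.0, -1.0])
K = X @ X.T
alphas, b, changed = smo_pair_update(0, 1, np.zeros(2), 0.0, y, K, C=10.0)
print(alphas, b, changed)
```

Starting from all-zero multipliers, this single step moves both α values to 0.25 and leaves b = 0, which is already the optimum for these two points; on real data SMO repeats such steps over many pairs.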

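With the theory above in place, we can now implement the SVM in code.

Simplified SMO algorithm:

The simplified SMO skips the outer loop that decides which pair of alphas is best to optimize. Instead, it walks through every alpha in the data set and, for each one, randomly picks a second alpha from the remaining ones to form a pair.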

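- Helper functions used by the SMO algorithm:

```python
from numpy import *  # the code in this post uses mat, zeros, shape, multiply, nonzero, exp, sign
import random        # imported after numpy so that `random` is the standard-library module

def loadDataSet(fileName):
    """Read a tab-separated file: two feature columns followed by a label column."""
    dataMat = []; labelMat = []
    with open(fileName) as fr:
        for line in fr.readlines():
            lineArr = line.strip().split('\t')
            dataMat.append([float(lineArr[0]), float(lineArr[1])])
            labelMat.append(float(lineArr[2]))
    return dataMat, labelMat

def selectJrand(i, m):
    """Randomly pick an index j in [0, m) that is different from i."""
    j = i
    while j == i:
        j = int(random.uniform(0, m))
    return j

def clipAlpha(aj, H, L):
    """Clip aj into the interval [L, H]."""
    if aj > H:
        aj = H
    if L > aj:
        aj = L
    return aj
```

- Simplified SMO algorithm: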

```python
def smoSimple(dataMatIn, classLabels, C, toler, maxIter):
    """Simplified SMO. Arguments: data set, class labels, constant C, tolerance,
    and the number of consecutive passes without any change before exiting."""
    dataMatrix = mat(dataMatIn); labelMat = mat(classLabels).transpose()
    b = 0; m, n = shape(dataMatrix)
    alphas = mat(zeros((m, 1)))
    iter_num = 0  # consecutive passes over the data set in which no alpha changed
    while iter_num < maxIter:
        alphaPairsChanged = 0  # number of alpha pairs optimized in this pass
        for i in range(m):
            fXi = float(multiply(alphas, labelMat).T * (dataMatrix * dataMatrix[i, :].T)) + b
            Ei = fXi - float(labelMat[i])
            # optimize only if alpha_i violates the KKT conditions (within the tolerance)
            if ((labelMat[i] * Ei < -toler) and (alphas[i] < C)) or \
               ((labelMat[i] * Ei > toler) and (alphas[i] > 0)):
                j = selectJrand(i, m)
                fXj = float(multiply(alphas, labelMat).T * (dataMatrix * dataMatrix[j, :].T)) + b
                Ej = fXj - float(labelMat[j])
                alphaIold = alphas[i].copy(); alphaJold = alphas[j].copy()
                # L and H keep alpha_j inside [0, C]
                if labelMat[i] != labelMat[j]:
                    L = max(0, alphas[j] - alphas[i])
                    H = min(C, C + alphas[j] - alphas[i])
                else:
                    L = max(0, alphas[j] + alphas[i] - C)
                    H = min(C, alphas[j] + alphas[i])
                if L == H:
                    print("L==H")
                    continue
                eta = (2.0 * dataMatrix[i, :] * dataMatrix[j, :].T
                       - dataMatrix[i, :] * dataMatrix[i, :].T
                       - dataMatrix[j, :] * dataMatrix[j, :].T)
                if eta >= 0:
                    print("eta>=0")
                    continue
                alphas[j] -= labelMat[j] * (Ei - Ej) / eta
                alphas[j] = clipAlpha(alphas[j], H, L)
                if abs(alphas[j] - alphaJold) < 0.00001:
                    print("j not moving enough")
                    continue
                # update alpha_i by the same amount as alpha_j, in the opposite direction
                alphas[i] += labelMat[j] * labelMat[i] * (alphaJold - alphas[j])
                b1 = (b - Ei
                      - labelMat[i] * (alphas[i] - alphaIold) * dataMatrix[i, :] * dataMatrix[i, :].T
                      - labelMat[j] * (alphas[j] - alphaJold) * dataMatrix[i, :] * dataMatrix[j, :].T)
                b2 = (b - Ej
                      - labelMat[i] * (alphas[i] - alphaIold) * dataMatrix[i, :] * dataMatrix[j, :].T
                      - labelMat[j] * (alphas[j] - alphaJold) * dataMatrix[j, :] * dataMatrix[j, :].T)
                if (0 < alphas[i]) and (C > alphas[i]):
                    b = b1
                elif (0 < alphas[j]) and (C > alphas[j]):
                    b = b2
                else:
                    b = (b1 + b2) / 2.0
                alphaPairsChanged += 1
                print("iter: %d i:%d, pairs changed %d" % (iter_num, i, alphaPairsChanged))
        if alphaPairsChanged == 0:
            iter_num += 1
        else:
            iter_num = 0
        print("iteration number: %d" % iter_num)
    return b, alphas
```

Now let's test it:

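```python
from numpy import shape
import svmMLiA  # assumes the functions above are saved in svmMLiA.py

dataArr, labelArr = svmMLiA.loadDataSet('testSet.txt')
print('labelArr:', labelArr)
print('dataArr:', dataArr)
b, alpha = svmMLiA.smoSimple(dataArr, labelArr, 0.6, 0.001, 40)
print('b', b)
print('alpha>0:', alpha[alpha > 0])
print(shape(alpha[alpha > 0]))  # number of support vectors
for i in range(len(dataArr)):   # show which data points are support vectors
    if alpha[i] > 0.0:
        print(dataArr[i], labelArr[i])
```

Output (selectJrand picks j at random, so the exact numbers vary from run to run):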

```
labelArr: [-1.0, -1.0, 1.0, -1.0, 1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0, -1.0, -1.0, 1.0, -1.0, 1.0, 1.0, -1.0, 1.0, -1.0, -1.0, -1.0, 1.0, -1.0, -1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0, 1.0, 1.0, 1.0, 1.0, -1.0, 1.0, -1.0, -1.0, 1.0, -1.0, -1.0, -1.0, -1.0, 1.0, 1.0, 1.0, 1.0, 1.0, -1.0, 1.0, 1.0, -1.0, -1.0, 1.0, 1.0, -1.0, 1.0, -1.0, -1.0, -1.0, -1.0, 1.0, -1.0, 1.0, -1.0, -1.0, 1.0, 1.0, 1.0, -1.0, 1.0, 1.0, -1.0, -1.0, 1.0, -1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0, 1.0, -1.0, 1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0, -1.0, -1.0, -1.0]
dataArr: [[3.542485, 1.977398], [3.018896, 2.556416], [7.55151, -1.58003], [2.114999, -0.004466], [8.127113, 1.274372], [7.108772, -0.986906], [8.610639, 2.046708], [2.326297, 0.265213], [3.634009, 1.730537], [0.341367, -0.894998], [3.125951, 0.293251], [2.123252, -0.783563], [0.887835, -2.797792], [7.139979, -2.329896], [1.696414, -1.212496], [8.117032, 0.623493], [8.497162, -0.266649], [4.658191, 3.507396], [8.197181, 1.545132], [1.208047, 0.2131], [1.928486, -0.32187], [2.175808, -0.014527], [7.886608, 0.461755], [3.223038, -0.552392], [3.628502, 2.190585], [7.40786, -0.121961], [7.286357, 0.251077], [2.301095, -0.533988], [-0.232542, -0.54769], [3.457096, -0.082216], [3.023938, -0.057392], [8.015003, 0.885325], [8.991748, 0.923154], [7.916831, -1.781735], [7.616862, -0.217958], [2.450939, 0.744967], [7.270337, -2.507834], [1.749721, -0.961902], [1.803111, -0.176349], [8.804461, 3.044301], [1.231257, -0.568573], [2.074915, 1.41055], [-0.743036, -1.736103], [3.536555, 3.96496], [8.410143, 0.025606], [7.382988, -0.478764], [6.960661, -0.245353], [8.23446, 0.701868], [8.168618, -0.903835], [1.534187, -0.622492], [9.229518, 2.066088], [7.886242, 0.191813], [2.893743, -1.643468], [1.870457, -1.04042], [5.286862, -2.358286], [6.080573, 0.418886], [2.544314, 1.714165], [6.016004, -3.753712], [0.92631, -0.564359], [0.870296, -0.109952], [2.369345, 1.375695], [1.363782, -0.254082], [7.27946, -0.189572], [1.896005, 0.51508], [8.102154, -0.603875], [2.529893, 0.662657], [1.963874, -0.365233], [8.132048, 0.785914], [8.245938, 0.372366], [6.543888, 0.433164], [-0.236713, -5.766721], [8.112593, 0.295839], [9.803425, 1.495167], [1.497407, -0.552916], [1.336267, -1.632889], [9.205805, -0.58648], [1.966279, -1.840439], [8.398012, 1.584918], [7.239953, -1.764292], [7.556201, 0.241185], [9.015509, 0.345019], [8.266085, -0.230977], [8.54562, 2.788799], [9.295969, 1.346332], [2.404234, 0.570278], [2.037772, 0.021919], [1.727631, -0.453143], [1.979395, -0.050773], [8.092288, -1.372433], [1.667645, 0.239204], [9.854303, 1.365116], [7.921057, -1.327587], [8.500757, 1.492372], [1.339746, -0.291183], [3.107511, 0.758367], [2.609525, 0.902979], [3.263585, 1.367898], [2.912122, -0.202359], [1.731786, 0.589096], [2.387003, 1.573131]]
b [[-3.81348383]]
alpha>0: [[1.26296066e-01 2.39286462e-01 3.46944695e-18 3.65582528e-01]]
(1, 3)
[4.658191, 3.507396] -1.0
[3.457096, -0.082216] -1.0
[6.080573, 0.418886] 1.0
```

Speeding up optimization with the full Platt SMO:

- Support functions for the full Platt SMO:

```python
class optStruct:
    """Holds all the values used by the full Platt SMO."""
    def __init__(self, dataMatIn, classLabels, C, toler, kTup):
        self.X = dataMatIn
        self.labelMat = classLabels
        self.C = C
        self.tol = toler
        self.m = shape(dataMatIn)[0]
        self.alphas = mat(zeros((self.m, 1)))
        self.b = 0
        self.eCache = mat(zeros((self.m, 2)))  # error cache; first column is a "valid" flag
        # precomputed kernel matrix (kernelTrans is defined in the kernel section below)
        self.K = mat(zeros((self.m, self.m)))
        for i in range(self.m):
            self.K[:, i] = kernelTrans(self.X, self.X[i, :], kTup)

def calcEk(oS, k):
    """Error E_k = f(x_k) - y_k for sample k."""
    fXk = float(multiply(oS.alphas, oS.labelMat).T * oS.K[:, k] + oS.b)
    Ek = fXk - float(oS.labelMat[k])
    return Ek

def selectJ(i, oS, Ei):
    """Second-choice heuristic: pick the j that maximizes |Ei - Ej|; return j and Ej."""
    maxK = -1; maxDeltaE = -1; Ej = 0  # -1 so that some valid k is always chosen
    oS.eCache[i] = [1, Ei]  # mark Ei as valid in the cache
    validEcacheList = nonzero(oS.eCache[:, 0].A)[0]
    if len(validEcacheList) > 1:
        for k in validEcacheList:  # find the cached error that maximizes delta E
            if k == i:
                continue  # no need to compare i with itself
            Ek = calcEk(oS, k)
            deltaE = abs(Ei - Ek)
            if deltaE > maxDeltaE:
                maxK = k; maxDeltaE = deltaE; Ej = Ek
        return maxK, Ej
    else:  # first pass: no valid cached errors yet, so pick j at random
        j = selectJrand(i, oS.m)
        Ej = calcEk(oS, j)
        return j, Ej

def updateEk(oS, k):
    """Recompute E_k and store it in the cache after alpha_k has changed."""
    Ek = calcEk(oS, k)
    oS.eCache[k] = [1, Ek]
```

- Optimization routine of the full Platt SMO:

```python
def innerL(i, oS):
    """Try to optimize the pair (alpha_i, alpha_j); return 1 if a pair changed, else 0."""
    Ei = calcEk(oS, i)
    if ((oS.labelMat[i] * Ei < -oS.tol) and (oS.alphas[i] < oS.C)) or \
       ((oS.labelMat[i] * Ei > oS.tol) and (oS.alphas[i] > 0)):
        j, Ej = selectJ(i, oS, Ei)  # heuristic choice instead of selectJrand
        alphaIold = oS.alphas[i].copy(); alphaJold = oS.alphas[j].copy()
        if oS.labelMat[i] != oS.labelMat[j]:
            L = max(0, oS.alphas[j] - oS.alphas[i])
            H = min(oS.C, oS.C + oS.alphas[j] - oS.alphas[i])
        else:
            L = max(0, oS.alphas[j] + oS.alphas[i] - oS.C)
            H = min(oS.C, oS.alphas[j] + oS.alphas[i])
        if L == H:
            print("L==H")
            return 0
        eta = 2.0 * oS.K[i, j] - oS.K[i, i] - oS.K[j, j]  # uses the precomputed kernel matrix
        if eta >= 0:
            print("eta>=0")
            return 0
        oS.alphas[j] -= oS.labelMat[j] * (Ei - Ej) / eta
        oS.alphas[j] = clipAlpha(oS.alphas[j], H, L)
        updateEk(oS, j)  # keep the error cache up to date
        if abs(oS.alphas[j] - alphaJold) < 0.00001:
            print("j not moving enough")
            return 0
        # update alpha_i by the same amount as alpha_j, in the opposite direction
        oS.alphas[i] += oS.labelMat[j] * oS.labelMat[i] * (alphaJold - oS.alphas[j])
        updateEk(oS, i)
        b1 = (oS.b - Ei - oS.labelMat[i] * (oS.alphas[i] - alphaIold) * oS.K[i, i]
              - oS.labelMat[j] * (oS.alphas[j] - alphaJold) * oS.K[i, j])
        b2 = (oS.b - Ej - oS.labelMat[i] * (oS.alphas[i] - alphaIold) * oS.K[i, j]
              - oS.labelMat[j] * (oS.alphas[j] - alphaJold) * oS.K[j, j])
        if (0 < oS.alphas[i]) and (oS.C > oS.alphas[i]):
            oS.b = b1
        elif (0 < oS.alphas[j]) and (oS.C > oS.alphas[j]):
            oS.b = b2
        else:
            oS.b = (b1 + b2) / 2.0
        return 1
    else:
        return 0
```

- Outer loop of the full Platt SMO:

```python
def smoP(dataMatIn, classLabels, C, toler, maxIter, kTup=('lin', 0)):
    """Full Platt SMO: alternate full passes with passes over the non-bound alphas."""
    oS = optStruct(mat(dataMatIn), mat(classLabels).transpose(), C, toler, kTup)
    iter_num = 0
    entireSet = True; alphaPairsChanged = 0
    while (iter_num < maxIter) and ((alphaPairsChanged > 0) or entireSet):
        alphaPairsChanged = 0
        if entireSet:  # pass over all samples
            for i in range(oS.m):
                alphaPairsChanged += innerL(i, oS)
                print("fullSet, iter: %d i:%d, pairs changed %d" % (iter_num, i, alphaPairsChanged))
            iter_num += 1
        else:  # pass over non-bound alphas only (0 < alpha < C)
            nonBoundIs = nonzero((oS.alphas.A > 0) * (oS.alphas.A < C))[0]
            for i in nonBoundIs:
                alphaPairsChanged += innerL(i, oS)
                print("non-bound, iter: %d i:%d, pairs changed %d" % (iter_num, i, alphaPairsChanged))
            iter_num += 1
        if entireSet:
            entireSet = False  # toggle between full and non-bound passes
        elif alphaPairsChanged == 0:
            entireSet = True
        print("iteration number: %d" % iter_num)
    return oS.b, oS.alphas
```

Applying kernels to more complex data:

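The point of a kernel is to map low-dimensional data into a higher-dimensional space, where a separating hyperplane is easier to find.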
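The "map to a higher dimension" idea can be checked on a small example. The sketch below uses a degree-2 polynomial kernel, which is not one of the kernels supported by kernelTrans; it is chosen only because its feature map φ is easy to write out: $(x^T z)^2$ computed in 2-D equals the inner product $\phi(x)^T\phi(z)$ in 3-D, so the higher-dimensional vectors never need to be built. The `rbf_kernel` helper follows the same formula as the RBF branch of kernelTrans, $\exp(-\|x - z\|^2/\sigma^2)$. All names here are hypothetical.

```python
import numpy as np

def phi(x):
    """Explicit degree-2 feature map for 2-D input: (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = x
    return np.array([x1 * x1, np.sqrt(2.0) * x1 * x2, x2 * x2])

def poly2_kernel(x, z):
    """Homogeneous polynomial kernel (x^T z)^2, computed without leaving 2-D."""
    return float(np.dot(x, z)) ** 2

def rbf_kernel(x, z, sigma):
    """Gaussian kernel in the same form as kernelTrans: exp(-||x - z||^2 / sigma^2)."""
    d = x - z
    return float(np.exp(-np.dot(d, d) / sigma ** 2))

x = np.array([1.0, 2.0])
z = np.array([3.0, -1.0])
print(poly2_kernel(x, z), float(phi(x) @ phi(z)))  # same value, computed two ways
print(rbf_kernel(x, x, 1.3))                       # a point compared with itself gives 1
print(rbf_kernel(x, z, 1.3))                       # far-apart points give values near 0
```

The RBF kernel corresponds to an infinite-dimensional feature space, so unlike the polynomial case its φ cannot be written out; the kernel value is all the SMO code ever needs.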

- Kernel transformation function:

```python
def kernelTrans(X, A, kTup):
    """Kernel values between every row of X and the row vector A.
    kTup = ('lin', 0) for a linear kernel or ('rbf', sigma) for a Gaussian RBF kernel."""
    m, n = shape(X)
    K = mat(zeros((m, 1)))
    if kTup[0] == 'lin':
        K = X * A.T  # linear kernel
    elif kTup[0] == 'rbf':
        for j in range(m):
            deltaRow = X[j, :] - A
            K[j] = deltaRow * deltaRow.T  # squared Euclidean distance
        K = exp(K / (-1 * kTup[1] ** 2))  # division is element-wise in NumPy, not matrix division as in MATLAB
    else:
        raise NameError('Houston We Have a Problem -- That Kernel is not recognized')
    return K
```

The optStruct class needs no further changes for kernels: the version listed with the full Platt SMO support functions above already precomputes the kernel matrix self.K by calling kernelTrans.

- RBF test function for classification with a kernel:

```python
def testRbf(k1=1.3):
    """Train with an RBF kernel on testSetRBF.txt, then report the training error
    and the error on testSetRBF2.txt."""
    dataArr, labelArr = loadDataSet('testSetRBF.txt')
    b, alphas = smoP(dataArr, labelArr, 200, 0.0001, 10000, ('rbf', k1))  # C=200 matters here
    datMat = mat(dataArr); labelMat = mat(labelArr).transpose()
    svInd = nonzero(alphas.A > 0)[0]
    sVs = datMat[svInd]  # matrix containing only the support vectors
    labelSV = labelMat[svInd]
    print("there are %d Support Vectors" % shape(sVs)[0])
    m, n = shape(datMat)
    errorCount = 0
    for i in range(m):
        kernelEval = kernelTrans(sVs, datMat[i, :], ('rbf', k1))
        predict = kernelEval.T * multiply(labelSV, alphas[svInd]) + b
        if sign(predict) != sign(labelArr[i]):
            errorCount += 1
    print("the training error rate is: %f" % (float(errorCount) / m))
    dataArr, labelArr = loadDataSet('testSetRBF2.txt')
    errorCount = 0
    datMat = mat(dataArr)
    m, n = shape(datMat)
    for i in range(m):
        kernelEval = kernelTrans(sVs, datMat[i, :], ('rbf', k1))
        predict = kernelEval.T * multiply(labelSV, alphas[svInd]) + b
        if sign(predict) != sign(labelArr[i]):
            errorCount += 1
    print("the test error rate is: %f" % (float(errorCount) / m))
```

These posts are my own study notes, so many detailed explanations are omitted. For a deeper understanding, see the following blog posts:

https://blog.csdn.net/c406495762/article/details/78072313

https://blog.csdn.net/c406495762/article/details/78158354