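This series takes an in-depth look at Ceph and at the integration of Ceph with OpenStack:

(1) Installation and deployment

(3) Ceph physical and logical structure

(4) Ceph's basic data structures

(6) Summary of the caching mechanisms in QEMU-KVM and Ceph RBD

(8) About Ceph PGs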

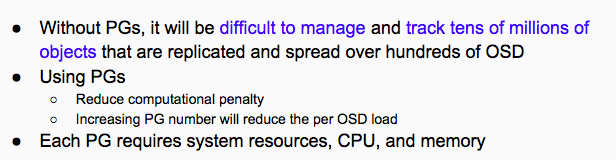

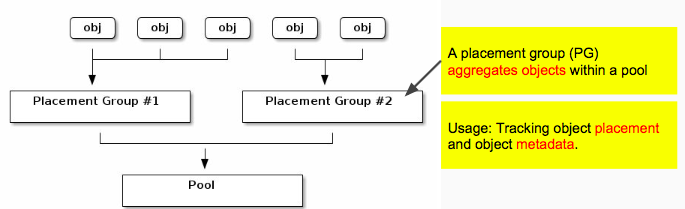

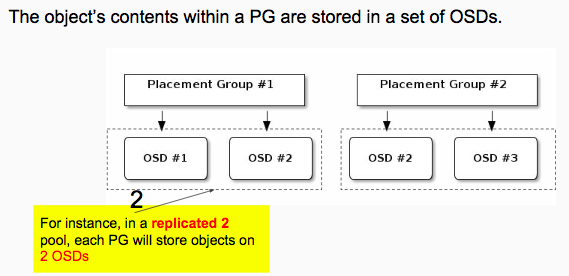

Placement Group, PG

How Are PGs Used?

Should OSD#2 fail

Data Durability Issue

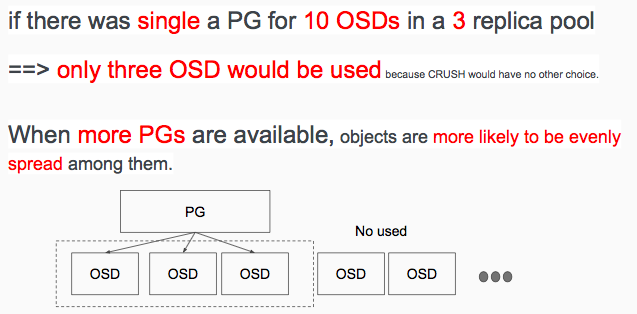

Object Distribution Issue

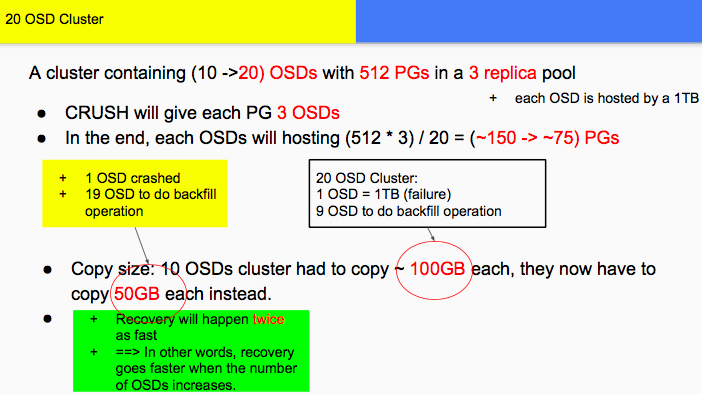

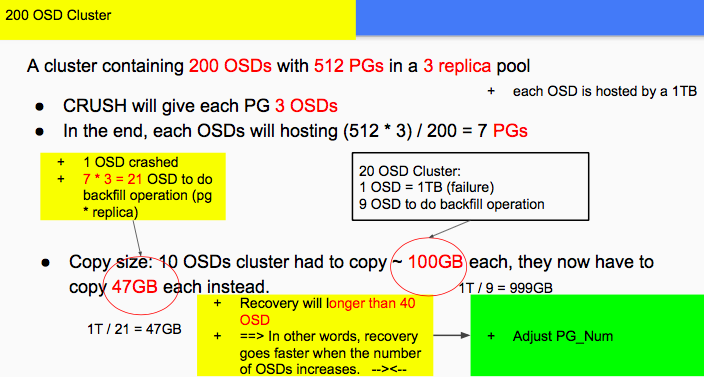

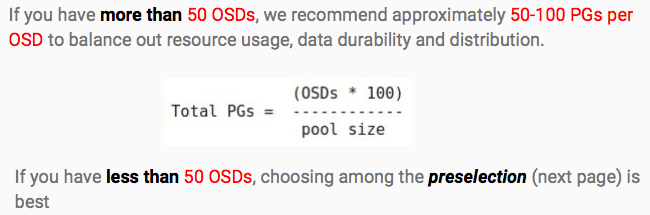

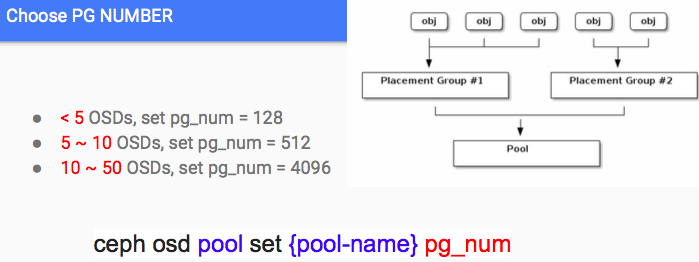

Choosing the number of PGs

Example

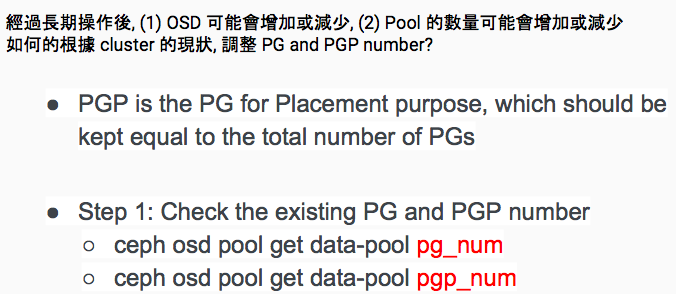

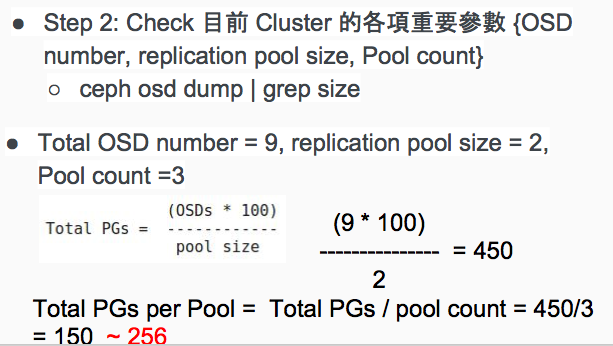

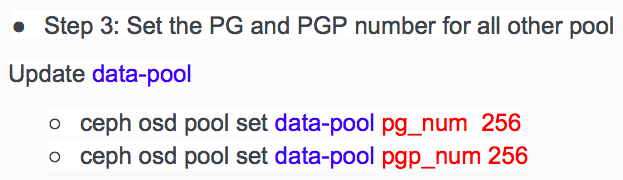
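The sizing rule of thumb usually quoted for this step can be sketched in a few lines. This is a minimal illustration, not a definitive sizing tool: it assumes a target of roughly 100 PGs per OSD and rounds the result up to a power of two, and the function name `suggested_pg_count` is hypothetical. Guidance often says "nearest" power of two; rounding up is a choice made here for simplicity.

```python
def suggested_pg_count(num_osds: int, pool_size: int,
                       target_pgs_per_osd: int = 100) -> int:
    """Estimate a pool's PG count with the common rule of thumb.

    Assumption: total PGs ~= (OSDs * target per OSD) / replica count,
    rounded up to the next power of two.
    """
    if num_osds <= 0 or pool_size <= 0 or target_pgs_per_osd <= 0:
        raise ValueError("all arguments must be positive")

    # Ceiling division keeps everything in integers.
    raw = -(-(num_osds * target_pgs_per_osd) // pool_size)

    # Smallest power of two that is >= raw.
    return 1 << (raw - 1).bit_length()


if __name__ == "__main__":
    # 200 OSDs, 3 replicas: 20000 / 3 is about 6667, rounded up to 8192.
    print(suggested_pg_count(200, 3))
```

A result like this is only a starting point; a cluster hosting several pools has to share the per-OSD PG budget among them.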

How to Adjust PG and PGP Based on the Current State?

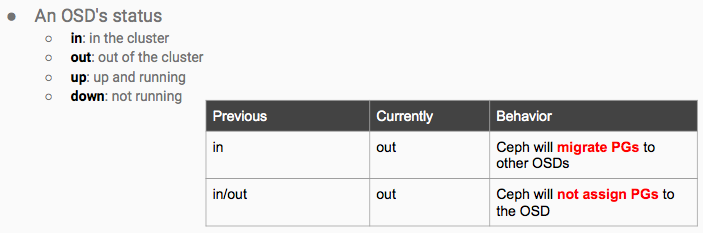

Monitoring OSDs

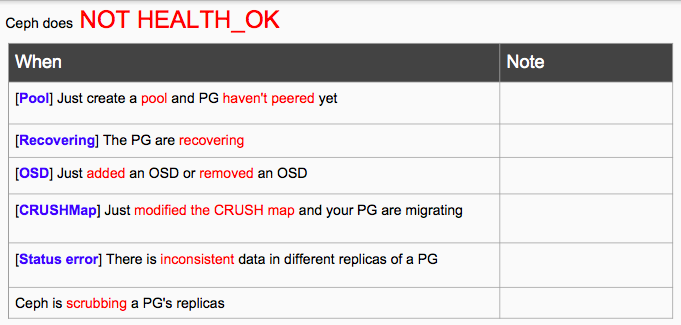

Ceph is NOT HEALTH_OK

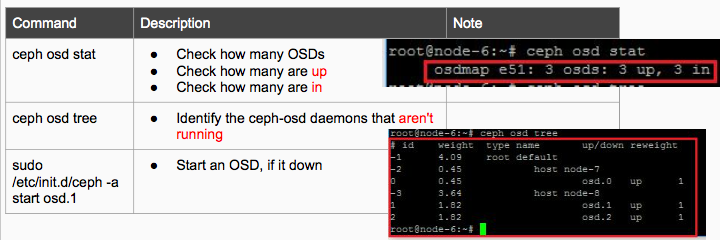

OSD Status Check

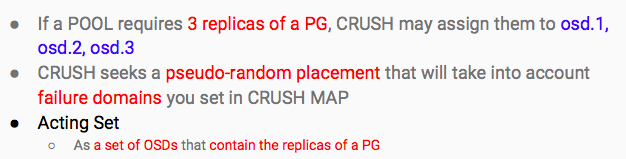

PG Sets

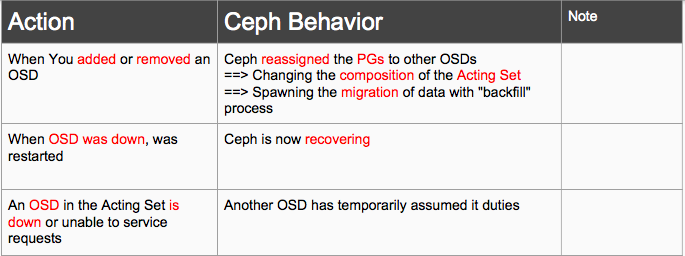

When an OSD in the Acting Set Is Down

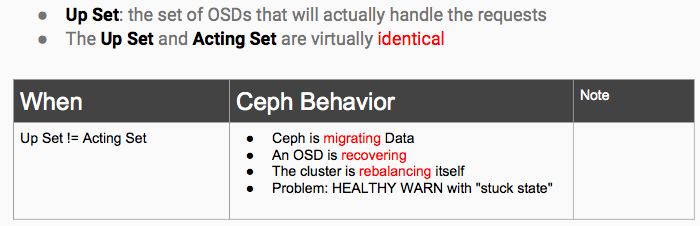

Up Set

Check PG Status

Point

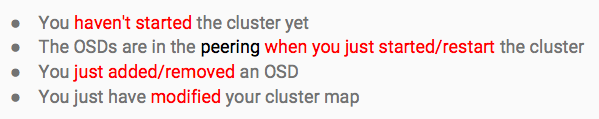

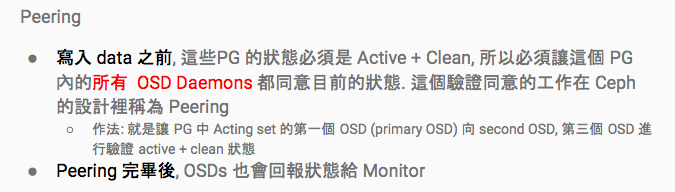

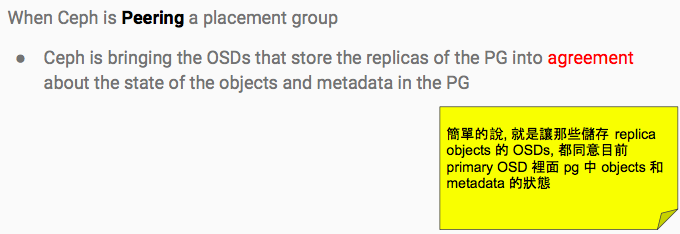

Peering

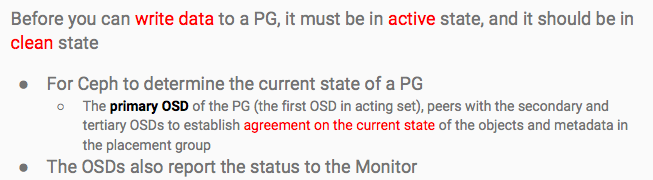

Peering: Establish Agreement of the PG status

Monitoring PG States

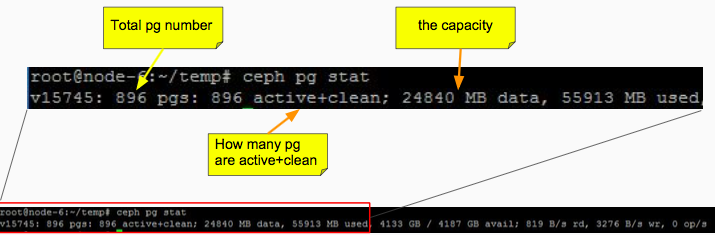

Check PG Stat

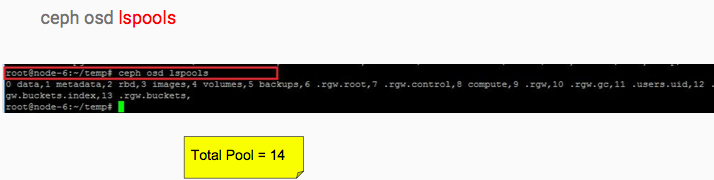

List Pool

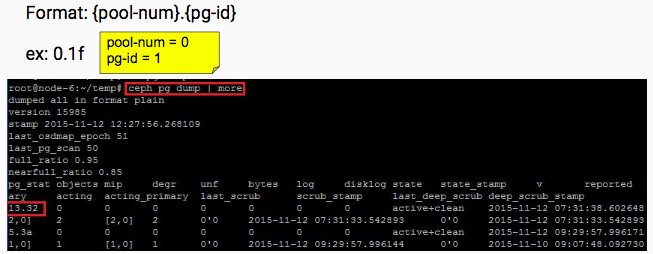

PG IDs

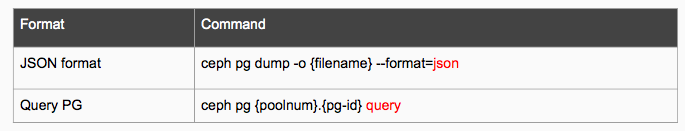
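A PG ID is written as the pool number, a period, and the PG number in hexadecimal, for example `0.1f`. The sketch below splits such an ID into its two parts. The names `PgId`, `parse_pgid` and `format_pgid` are hypothetical helpers for illustration, not part of any Ceph library.

```python
from typing import NamedTuple


class PgId(NamedTuple):
    pool: int  # pool number (not the pool name)
    seed: int  # PG number within the pool, shown in hex in the ID


def parse_pgid(text: str) -> PgId:
    """Parse an ID such as '0.1f' into (pool=0, seed=31)."""
    pool_part, sep, seed_part = text.strip().partition(".")
    if not sep or not pool_part or not seed_part:
        raise ValueError(f"not a PG ID: {text!r}")
    return PgId(pool=int(pool_part), seed=int(seed_part, 16))


def format_pgid(pg: PgId) -> str:
    """Render a PgId back into the '{pool}.{hex}' form."""
    return f"{pg.pool}.{pg.seed:x}"


if __name__ == "__main__":
    pg = parse_pgid("0.1f")
    print(pg, format_pgid(pg))
```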

The Output Format of the placement group

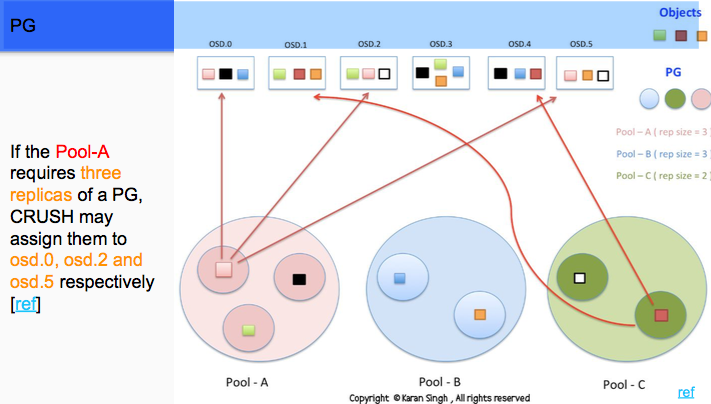

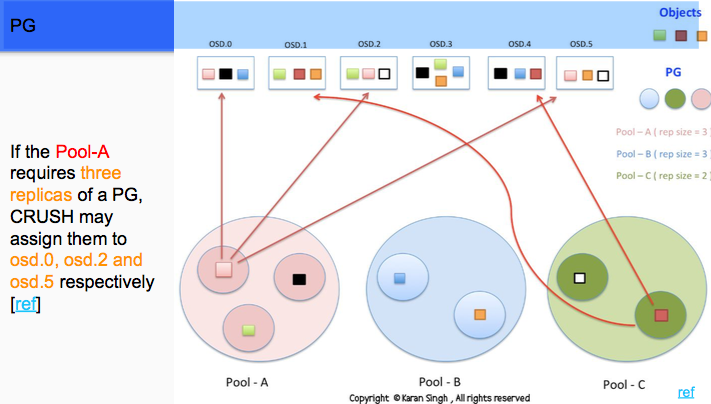

PG

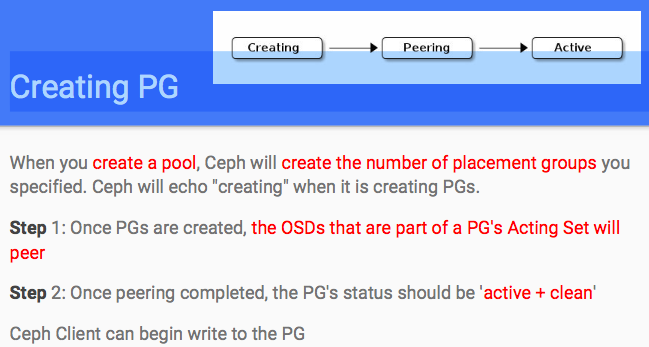

Creating PG

Create A Pool

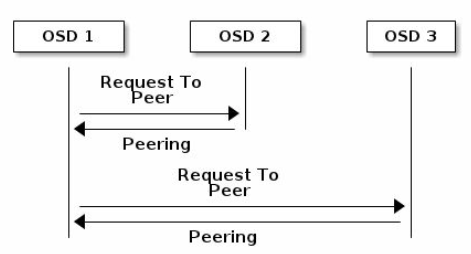

Peering

A Peering Process for a Pool with Replica 3

Active

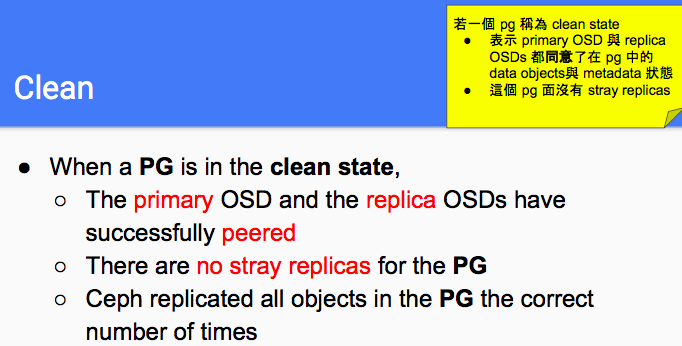

Clean

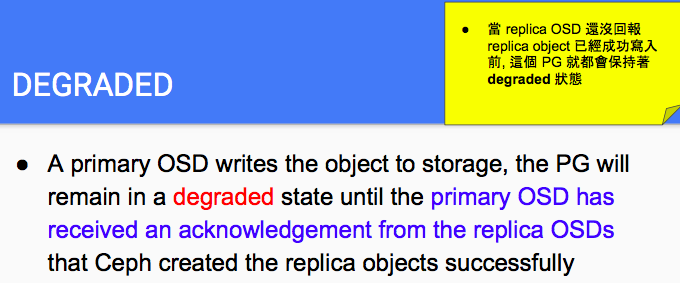

DEGRADED

PG with {active + degraded}

Recovering

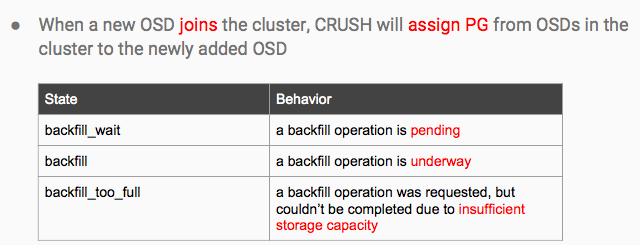

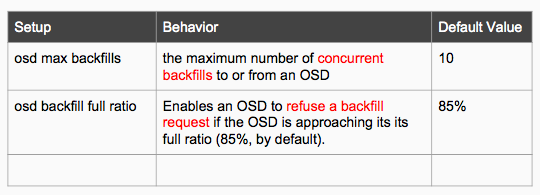

Backfilling (1/2)

Backfilling (2/2)

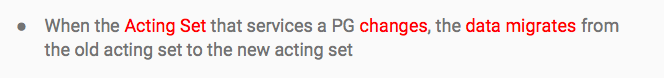

Remapped

Stale

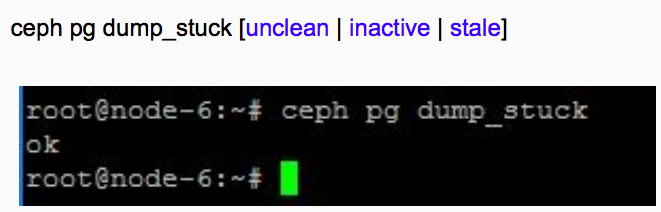

Identifying Troubled PGs (1/2)

Identifying Troubled PGs (2/2)

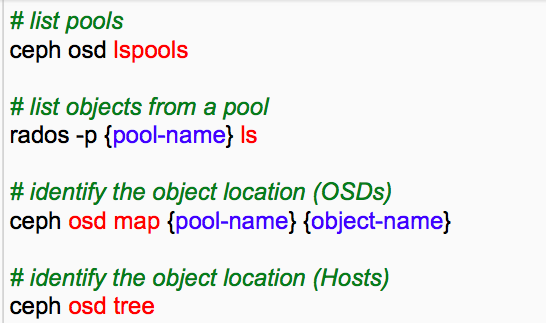
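PG states are reported as flags joined with `+`, such as `active+clean` or `active+degraded`. As a rough sketch of how troubled PGs could be picked out of such a listing, the code below flags any PG that is not both active and clean, or that carries one of the states discussed above. The input dictionary is made-up sample data, not real cluster output, and the set of watched flags is an assumption limited to the states this article names.

```python
from typing import Dict, List

# States from this article that deserve attention when present.
# Assumption: this list is illustrative, not exhaustive.
WATCHED_FLAGS = {
    "creating", "peering", "degraded", "recovering",
    "backfilling", "remapped", "stale",
}


def troubled_pgs(pg_states: Dict[str, str]) -> List[str]:
    """Return the IDs of PGs whose state suggests a problem."""
    troubled = []
    for pgid, state in pg_states.items():
        flags = set(state.split("+"))
        healthy = {"active", "clean"} <= flags and not (flags & WATCHED_FLAGS)
        if not healthy:
            troubled.append(pgid)
    return sorted(troubled)


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    sample = {
        "0.1f": "active+clean",
        "0.2a": "active+degraded",
        "1.3": "stale+active+clean",
        "1.4": "active+clean+scrubbing",
    }
    print(troubled_pgs(sample))
```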

Finding An Object Location
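On a live cluster the location of an object is normally looked up with `ceph osd map {pool-name} {object-name}`. The idea behind the first half of that lookup, hashing the object name and folding the hash into the pool's PG range, can be sketched as follows. This is a simplified model under stated assumptions: `zlib.crc32` stands in for Ceph's own hash function, so the PG IDs it prints will not match a real cluster, and `stable_mod` is written from memory of Ceph's stable-modulo approach rather than copied from its source. The second half, mapping the PG to OSDs via CRUSH, is not modeled.

```python
import zlib


def stable_mod(x: int, b: int, bmask: int) -> int:
    """Fold x into [0, b) so that most values keep their slot as b grows.

    bmask is (next power of two >= b) - 1.
    """
    if (x & bmask) < b:
        return x & bmask
    return x & (bmask >> 1)


def object_to_pg(pool_id: int, object_name: str, pg_num: int) -> str:
    """Return a '{pool}.{hex}' PG ID for an object (illustrative only)."""
    if pg_num <= 0:
        raise ValueError("pg_num must be positive")
    bmask = (1 << (pg_num - 1).bit_length()) - 1
    # Stand-in hash: a real cluster does NOT use crc32 here.
    name_hash = zlib.crc32(object_name.encode("utf-8"))
    return f"{pool_id}.{stable_mod(name_hash, pg_num, bmask):x}"


if __name__ == "__main__":
    print(object_to_pg(0, "test-object-1", 128))
```

The same object name always lands in the same PG for a given `pg_num`, which is why clients can compute placement without asking a central lookup service.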