该篇文章讲述了论文实验部分的伪代码,该实验采用python语言编写,框架采用深度学习框架keras,整体实验分为一下几个部分:

0 Latex伪代码框架

\documentclass[11pt]{ctexart}

\usepackage[top=2cm, bottom=2cm, left=2cm, right=2cm]{geometry}

\usepackage{algorithm}

\usepackage{algorithmicx}

\usepackage{algpseudocode}

\usepackage{amsmath}

\usepackage{amsfonts,amssymb}

\floatname{algorithm}{Algorithm}

\renewcommand{\algorithmicrequire}{\textbf{Input:}}

\renewcommand{\algorithmicensure}{\textbf{Output:}}

\begin{document}

...伪代码内容

\end{document}

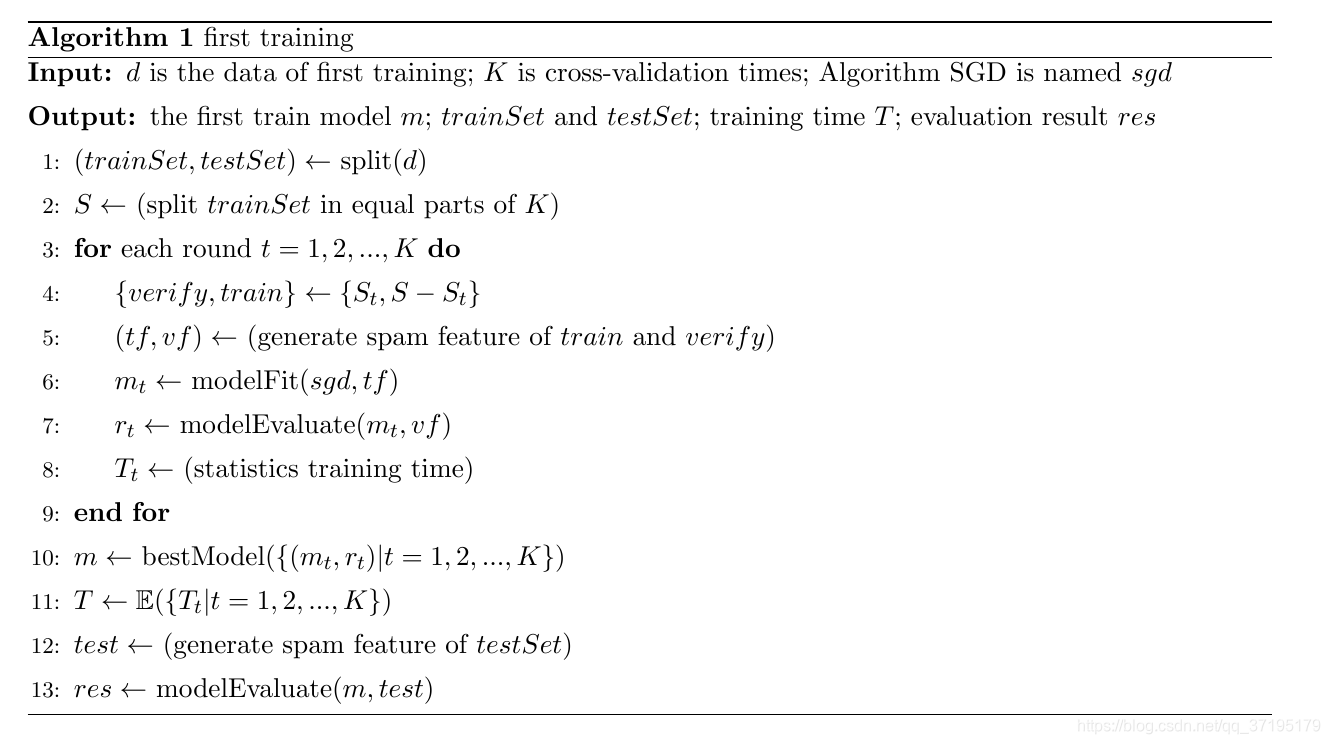

1 第一次训练(first.py)

功能实现:

根据输入的数据文件,处理数据后,切割为训练集和测试集,并在本地生成对应的文件。对整体数据,使用CountVectorizer对邮件文本进行向量化,并且生成了一个字典。用词袋模型将训练集的邮件文本数据转化为词袋特征,并用这些特征训练模型,将该模型生成本地文件。最后,加载训练集文件对模型进行评估,自此该文件运行完毕。

Latex effect map:

Latex Pseudocode:

\begin{algorithm}

\caption{first training}

\begin{algorithmic}[1] %每行显示行号

\Require $d$ is the data of first training; $K$ is cross-validation times; Algorithm SGD is named $sgd$

\Ensure the first train model $m$; $trainSet$ and $testSet$; training time $T$; evaluation result $res$

\State $(trainSet, testSet) \gets $ split($d$)

\State $S \gets $ (split $trainSet$ in equal parts of $K$)

\For {each round $t=1,2,...,K$}

\State $\left\{verify, train\right\} \gets \left\{S_{t}, S-S_{t}\right\} $

\State $(tf, vf) \gets $ (generate spam feature of $train$ and $verify$)

\State $m_{t} \gets $ modelFit($sgd, tf$)

\State $r_{t} \gets $ modelEvaluate($m_{t}, vf$)

\State $T_{t} \gets $ (statistics training time)

\EndFor

\State $m \gets $ bestModel($\left\{ (m_{t}, r_{t}) | t=1,2,...,K \right\}$)

\State $T \gets \mathbb{E}({\left\{ T_{t} | t=1,2,...,K \right\}})$

\State $test \gets $ (generate spam feature of $testSet$)

\State $res \gets $ modelEvaluate($m, test$)

\end{algorithmic}

\end{algorithm}

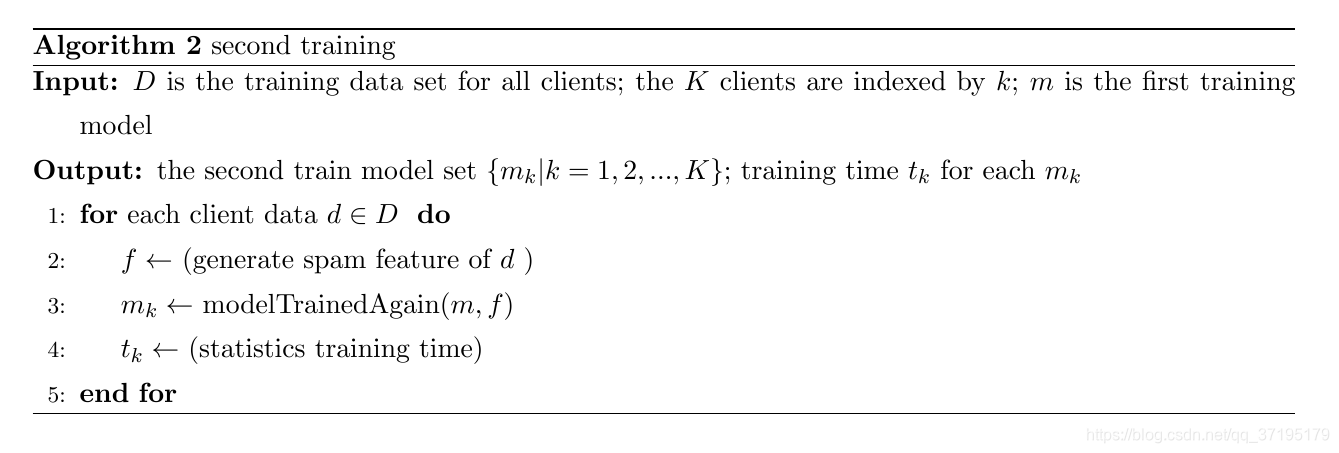

2 第二次训练(second.py)

Latex effect map:

Latex Pseudocode:

\begin{algorithm}

\caption{second training}

\begin{algorithmic}[1] %每行显示行号

\Require $D$ is the training data set for all clients; the $K$ clients are indexed by $k$; $m$ is the first training model

\Ensure the second train model set $\left\{m_{k}|k=1,2,...,K \right\}$; training time $t_{k}$ for each $m_{k}$

\For {each client data $d \in D$ }

\State $f \gets $ (generate spam feature of $d$ )

\State $m_{k} \gets $ modelTrainedAgain($m,f$)

\State $t_{k} \gets $ (statistics training time)

\EndFor

\end{algorithmic}

\end{algorithm}

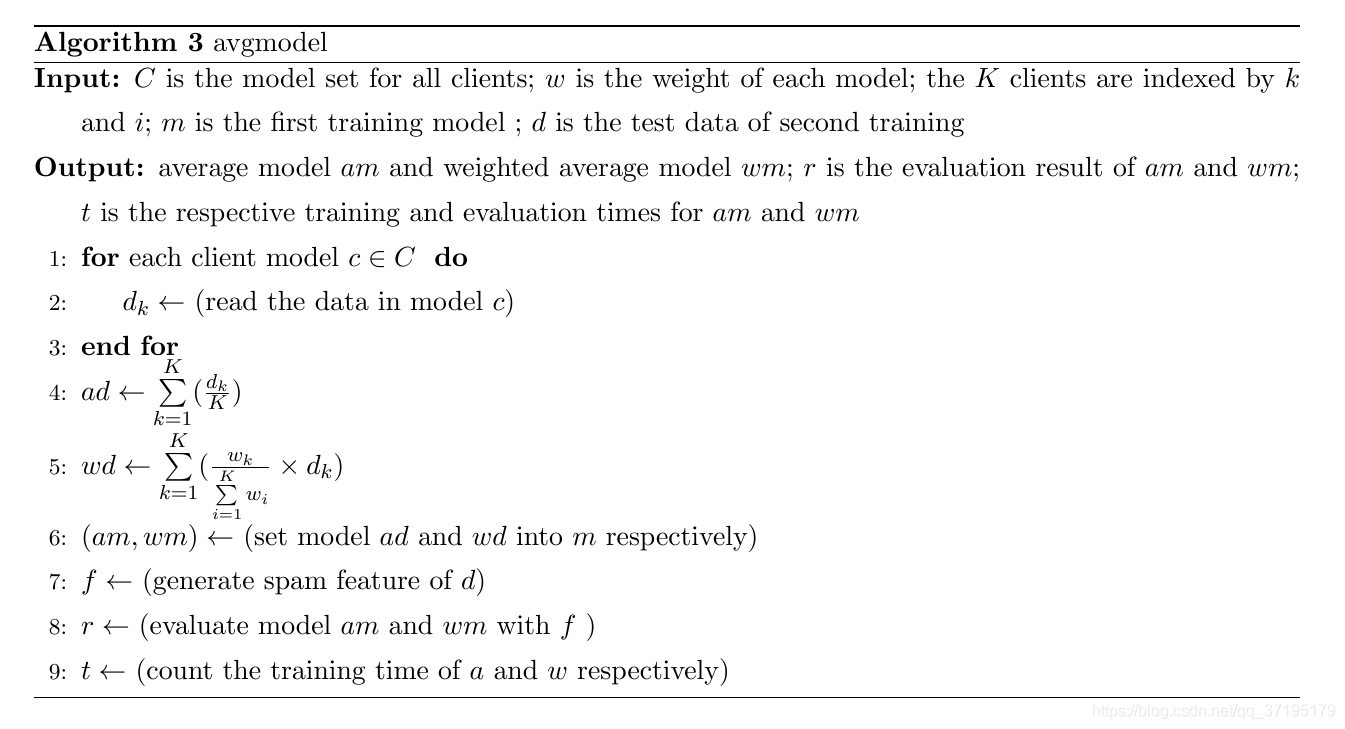

3 平均模型与加权平均模型(avgmodel.py)

Latex effect map:

Pseudocode:

\begin{algorithm}

\caption{avgmodel}

\begin{algorithmic}[1] %每行显示行号

\Require $C$ is the model set for all clients; $w$ is the weight of each model; the $K$ clients are indexed by $k$ and $i$; $m$ is the first training model ; $d$ is the test data of second training

\Ensure average model $am$ and weighted average model $wm$; $r$ is the evaluation result of $am$ and $wm$; $t$ is the respective training and evaluation times for $am$ and $wm$

\For {each client model $c \in C$ }

\State $d_{k} \gets $ (read the data in model $c$)

\EndFor

\State $ad \gets \sum\limits_{k=1}^{K}(\frac{d_{k}}{K})$

\State $wd \gets \sum\limits_{k=1}^{K}(\frac{w_{k}}{\sum\limits_{i=1}^{K}w_{i} } \times d_{k})$

\State $(am, wm) \gets $ (set model $ad$ and $wd$ into $m$ respectively)

\State $f \gets $ (generate spam feature of $d$)

\State $r \gets $ (evaluate model $am$ and $wm$ with $f$ )

\State $t \gets $ (count the training time of $a$ and $w$ respectively)

\end{algorithmic}

\end{algorithm}