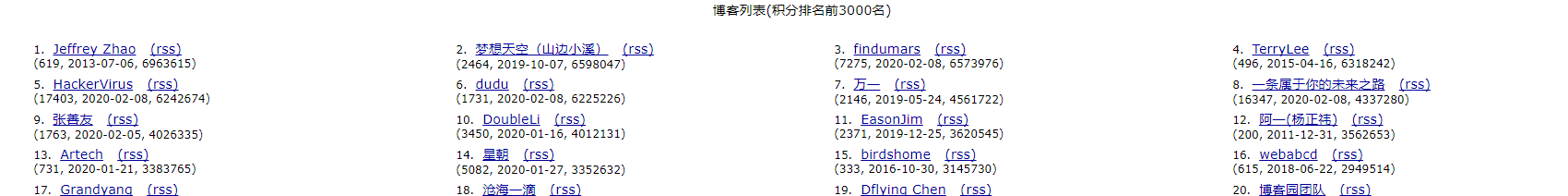

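Scrape the blog titles on the first 20 pages of each of the top 3,000 bloggers on the cnblogs points leaderboard.

The crawl follows each blogger's blog list pages, which are linked in the lower-left corner of the page.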
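Each blogger's blog list pages follow the pattern the script uses, `<homepage>default.html?page=N`. Below is a minimal sketch of that URL construction. It assumes, as the script does, that this pattern holds for every blogger. The helper name `page_urls` is hypothetical, and adding a missing trailing slash is an assumption about how the collected links may look.

```python
from typing import List


def page_urls(homepage: str, pages: int = 20) -> List[str]:
    """Build the blog list page URLs for one blogger, pages 1..pages inclusive."""
    # Hypothetical normalization: make sure the link ends with exactly one slash.
    base = homepage.strip().rstrip("/") + "/"
    # range(1, pages + 1) yields 1, 2, ..., pages.
    return [base + "default.html?page=" + str(i) for i in range(1, pages + 1)]


if __name__ == "__main__":
    for u in page_urls("https://www.cnblogs.com/Terrylee/", pages=3):
        print(u)
```

Note the upper bound: `range(1, 20)` would stop at page 19, so covering 20 pages needs `range(1, 21)`.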

The code is as follows:

```python
import requests
from bs4 import BeautifulSoup

HEADERS = {'user-agent': 'Mozilla/5.0'}
PAGES = 20  # number of blog list pages to scrape per blogger


# Write content: fetch one blog list page and save every post title on it
def Content(url):
    try:
        r = requests.get(url, headers=HEADERS, timeout=10)
        r.encoding = r.apparent_encoding
        soup = BeautifulSoup(r.text, "html.parser")
        print(url)
        for a in soup.find_all("a", {"class": "postTitle2"}):
            # get_text() also handles titles containing nested tags, where a.string is None;
            # "[置顶]" is the "[Pinned]" marker, stripped from the title
            title = a.get_text().replace("[置顶]", "").strip()
            if title:
                print(title)
                Content_write(title)
    except requests.RequestException:
        print("Request failed, no data:", url)


# Read the blogger homepage links
def Href():
    try:
        r = requests.get("https://www.cnblogs.com/AllBloggers.aspx", headers=HEADERS, timeout=10)
        r.encoding = r.apparent_encoding
        soup = BeautifulSoup(r.text, "html.parser")
        for t in soup.find_all("td"):
            a = t.find("a")
            if a is not None and a.has_attr("href"):
                print(a["href"])
                write(a["href"])
    except requests.RequestException:
        print("Request failed, no data")


# Write a link (append one homepage link per line)
def write(contents):
    with open('E://bloghref.txt', 'a', encoding='utf-8') as f:
        f.write(contents + "\n")
    print('Written successfully!')


# Write content (append one blog title per line)
def Content_write(contents):
    with open('E://blogcontent.txt', 'a', encoding='utf-8') as f:
        f.write(contents + "\n")
    print('Written successfully!')


# Write in a loop: scrape pages 1..PAGES for every saved homepage link
def write_all():
    try:
        with open('E://bloghref.txt', 'r', encoding='utf-8') as f:
            for line in f:
                line = line.strip()
                if not line:
                    continue
                for i in range(1, PAGES + 1):
                    url = line + "default.html?page=" + str(i)
                    Content(url)
    except OSError:
        print("Could not read E://bloghref.txt!")


if __name__ == "__main__":
    # Href()  # run once first to collect the homepage links into E://bloghref.txt
    write_all()
    # Content("https://www.cnblogs.com/#p3")
    # Content("https://www.cnblogs.com/Terrylee/")
```
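If BeautifulSoup is unavailable, you can approximate the title extraction in `Content` with Python's standard-library `html.parser`. This is a swapped-in technique, not the method used above, and only a sketch. Like the script, it assumes that post titles are `<a>` elements whose `class` includes `postTitle2`. The names `PostTitleParser` and `extract_titles` are hypothetical.

```python
from html.parser import HTMLParser
from typing import List


class PostTitleParser(HTMLParser):
    """Collect the text of <a> tags whose class list includes 'postTitle2'."""

    def __init__(self) -> None:
        super().__init__()
        self.titles: List[str] = []
        self._in_title = False
        self._buf: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # A bare <a class> attribute has value None, so fall back to "".
            classes = (dict(attrs).get("class") or "").split()
            if "postTitle2" in classes:
                self._in_title = True
                self._buf = []

    def handle_data(self, data):
        # Text inside nested tags (e.g. <span>) is collected too.
        if self._in_title:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._in_title:
            # Drop the "[置顶]" (pinned) marker, then trim whitespace.
            title = "".join(self._buf).replace("[置顶]", "").strip()
            if title:
                self.titles.append(title)
            self._in_title = False


def extract_titles(html: str) -> List[str]:
    """Return all post titles found in one blog list page."""
    parser = PostTitleParser()
    parser.feed(html)
    parser.close()
    return parser.titles
```

For example, `extract_titles(r.text)` on a page fetched with `requests.get(...)` returns that page's titles with the pinned marker removed. It could then replace the `soup.find_all(...)` loop.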