目录:

1、构建公用数据集如MNIST数据集

2、构建自己的数据集

3、读取或者保存模型,进行断点续训练

4、构建网络结构

5、进行训练

6、图形化展示训练结果

1、构建公用数据集如MNIST数据集

def load_dataset(batch_size=10, download=True):

"""

The output of torchvision datasets are PILImage images of range [0, 1].

Transform them to Tensors of normalized range [-1, 1]

"""

transform = transforms.Compose([transforms.ToTensor(),

transforms.Normalize([0.5], [0.5])])

#二维和三维数据的transforms.Normalize()有区别

trainset = torchvision.datasets.MNIST(root='../data', train=True,

download=download,

transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=batch_size,

shuffle=True)

testset = torchvision.datasets.MNIST(root='../data', train=False,

download=download,

transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=batch_size,

shuffle=False)

return trainloader, testloader

2、构建自己的数据集

在大多数的实际训练和项目进行中,都是需要根据自己的数据制作数据集进行训练的,使用pytorch制作自己的数据集。

在构建pytorch类型的数据集时,我们有图片、 标签txt文件两种,任务就是使用图片、标签txt文件制作torch.dataset格式数据集:

前提知识:

python的运算符重载:https://blog.csdn.net/zhangshuaijun123/article/details/82149056

1、数据集的制作

import torch

from torch.utils.data import Dataset

from PIL import Image

#以torch.utils.data.Dataset为基类创建MyDataset

class MyDataset(Dataset):

#stpe1:初始化

def __init__(self, txt, transform=None, target_transform=None,):

fh = open(txt, 'r')#打开标签文件

imgs = []#创建列表,装东西

for line in fh:#遍历标签文件每行

line = line.rstrip()#删除字符串末尾的空格

words = line.split()#通过空格分割字符串,变成列表

imgs.append((words[0],int(words[1])))#把图片名words[0],标签int(words[1])放到imgs里

self.imgs = imgs

self.transform = transform

self.target_transform = target_transform

def __getitem__(self, index):#检索函数,相当于[]的符号重载

fn, label = self.imgs[index]#读取文件名、标签

img = Image.open(fn).convert('RGB')#通过PIL.Image读取图片

if self.transform is not None:

img = self.transform(img)

return img,label

def __len__(self):#相当于重载len()

return len(self.imgs)

2、数据集的使用

from torchvision import transforms as transforms

trans_form = transforms.Compose([

transforms.Resize(96), # 缩放到 96 * 96 大小

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)) # 归一化

])

train_data=MyDataset(txt='train.txt', transform=trans_form)

test_data=MyDataset(txt='test.txt', transform=trans_form)

train_loader = DataLoader(dataset=train_data, batch_size=64, shuffle=True)

test_loader = DataLoader(dataset=test_data, batch_size=64)

dataloader是一个可迭代的对象,意味着我们可以像使用迭代器一样使用它

迭代器返回的是一个list[imgs,labels]

imgs是64张图片组成的一个tensor

labels是64个标签的tensor

3、划分训练集、验证集合测试集

train_data=MyDataset(txt='train.txt', transform=transforms.ToTensor())

test_data=MyDataset(txt='test.txt', transform=transforms.ToTensor())

print('train:', len(train_data), 'test:', len(test_data))

train_data, val_data = torch.utils.data.random_split(train_data, [55000, 5000])

print('train:', len(train_data), 'validation:', len(val_data))

>>>('train:', 60000, 'test:', 10000)

>>>('train:', 55000, 'validation:', 5000)

代码参考自:

https://blog.csdn.net/weixin_41680653/article/details/93904480

3、读取或者保存模型,进行断点续训练

1、首先是保存模型,主要需要保存epoch、net和optimizer的信息,将其封装在checkpoint中进行保存。

checkpoint = {

'epoch': epoch,

'model_state_dict': net.state_dict(),

'optimizer_state_dict': optimizer.state_dict(),

}

if not os.path.isdir('checkpoint'):

os.mkdir('checkpoint')

torch.save(checkpoint, CHECKPOINT_FILE)

2、读取模型,读取模型进行断点续训练

使用torch.load()恢复checkpoint。

if resume:

# 恢复上次的训练状态

print("Resume from checkpoint...")

checkpoint = torch.load(CHECKPOINT_FILE)

net.load_state_dict(checkpoint['model_state_dict'])

optimizer.load_state_dict(checkpoint['optimizer_state_dict'])

initepoch = checkpoint['epoch']+1

4、构建网络结构

以GAN网络的判别网络为例(可以看做是对图像进行二分类):

在pytorch的框架下,网络都是通过继承nn.Module来进行搭建的。网络的中的参数都被写在该类的属性中。如self.conv1、self.conv2。然后通过forward()进行网络的连接。

import torch.nn as nn

import torch.nn.functional as F

class DCGAN_Discriminator(nn.Module):

def __init__(self, featmap_dim=512, n_channel=1):

#super().__init__()代表继承父类的属性

super(DCGAN_Discriminator, self).__init__()

self.featmap_dim = featmap_dim

self.conv1 = nn.Conv2d(n_channel, featmap_dim // 4, 5,

stride=2, padding=2)

self.conv2 = nn.Conv2d(featmap_dim // 4, featmap_dim //2, 5,

stride=2, padding=2)

self.BN2 = nn.BatchNorm2d(featmap_dim // 2)

self.conv3 = nn.Conv2d(featmap_dim // 2, featmap_dim, 5,

stride=2, padding=2)

self.BN3 = nn.BatchNorm2d(featmap_dim)

self.fc = nn.Linear(featmap_dim * 4 * 4, 1)

def forward(self, x):

"""

Strided convulation layers,

Batch Normalization after convulation but not at input layer,

LeakyReLU activation function with slope 0.2.

"""

x = F.leaky_relu(self.conv1(x), negative_slope=0.2)

x = F.leaky_relu(self.BN2(self.conv2(x)), negative_slope=0.2)

x = F.leaky_relu(self.BN3(self.conv3(x)), negative_slope=0.2)

x = x.view(-1, self.featmap_dim * 4 * 4)

x = F.sigmoid(self.fc(x))

return x

还有一种简单的方法,使用nn.Sequential来构建网络:

# 具体的使用方法

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv_module = nn.Sequential(

nn.Conv2d(1,20,5),

nn.ReLU(),

nn.Conv2d(20,64,5),

nn.ReLU()

)

def forward(self, input):

out = self.conv_module(input)

return out

5、进行训练

网络搭建好之后,进行训练:

D_featmap_dim=512

n_channel=1

Dis_model = DCGAN_Discriminator(featmap_dim=D_featmap_dim,

n_channel=n_channel)

if use_gpu:

Dis_model = Dis_model.cuda()

# assign loss function and optimizer (Adam) to D and G

D_criterion = torch.nn.BCELoss()

D_optimizer = optim.Adam(Dis_model.parameters(), lr=dis_lr,

betas=(0.5, 0.999))

#进行训练

for epoch in range(n_epoch):

D_running_loss = 0.0

# Discriminator

D_optimizer.zero_grad()

#inputs是类中forward的输入,使用类的__call__()函数隐式地使用了forward()函数

outputs = Dis_model(inputs)#相当于Dis_model.forward(inputs)

D_loss = D_criterion(outputs[:, 0], labels)#labels是标签

#反向传播

D_loss.backward(retain_graph=True)

#参数更新

D_optimizer.step()

以上代码只是表示运行过程,并不能实际运行,因为有很多过程被忽略。下面是可以运行的代码:

DCGAN.py

# -*- coding: utf-8 -*-

"""

Created on Wed Jan 15 10:02:47 2020

@author: liwj128260

"""

# -*- coding: utf-8 -*-

# @Author: aaronlai

import torch.nn as nn

import torch.nn.functional as F

class DCGAN_Discriminator(nn.Module):

def __init__(self, featmap_dim=512, n_channel=1):

#super().__init__()代表继承父类的属性

super(DCGAN_Discriminator, self).__init__()

self.featmap_dim = featmap_dim

self.conv1 = nn.Conv2d(n_channel, featmap_dim // 4, 5,

stride=2, padding=2)

self.conv2 = nn.Conv2d(featmap_dim // 4, featmap_dim //2, 5,

stride=2, padding=2)

self.BN2 = nn.BatchNorm2d(featmap_dim // 2)

self.conv3 = nn.Conv2d(featmap_dim // 2, featmap_dim, 5,

stride=2, padding=2)

self.BN3 = nn.BatchNorm2d(featmap_dim)

self.fc = nn.Linear(featmap_dim * 4 * 4, 1)

def forward(self, x):

"""

Strided convulation layers,

Batch Normalization after convulation but not at input layer,

LeakyReLU activation function with slope 0.2.

"""

x = F.leaky_relu(self.conv1(x), negative_slope=0.2)

x = F.leaky_relu(self.BN2(self.conv2(x)), negative_slope=0.2)

x = F.leaky_relu(self.BN3(self.conv3(x)), negative_slope=0.2)

x = x.view(-1, self.featmap_dim * 4 * 4)

x = F.sigmoid(self.fc(x))

return x

class DCGAN_Generator(nn.Module):

def __init__(self, featmap_dim=1024, n_channel=1, noise_dim=100):

super(DCGAN_Generator, self).__init__()

self.featmap_dim = featmap_dim

self.fc1 = nn.Linear(noise_dim, 4 * 4 * featmap_dim)

self.conv1 = nn.ConvTranspose2d(featmap_dim, (featmap_dim // 2), 5,

stride=2, padding=2)

self.BN1 = nn.BatchNorm2d(featmap_dim // 2)

self.conv2 = nn.ConvTranspose2d(featmap_dim // 2, featmap_dim // 4, 6,

stride=2, padding=2)

self.BN2 = nn.BatchNorm2d(featmap_dim // 4)

self.conv3 = nn.ConvTranspose2d(featmap_dim // 4, n_channel, 6,

stride=2, padding=2)

def forward(self, x):

"""

Project noise to featureMap * width * height,

Batch Normalization after convulation but not at output layer,

ReLU activation function.

"""

x = self.fc1(x)

x = x.view(-1, self.featmap_dim, 4, 4)

x = F.relu(self.BN1(self.conv1(x)))

x = F.relu(self.BN2(self.conv2(x)))

x = F.tanh(self.conv3(x))

return x

run_DCGAN.py

# -*- coding: utf-8 -*-

"""

Created on Wed Jan 15 10:04:04 2020

@author: liwj128260

"""

# -*- coding: utf-8 -*-

# @Author: aaronlai

import torch

import torch.optim as optim

import torchvision

import torchvision.transforms as transforms

import numpy as np

from torch.autograd import Variable

from DCGAN import DCGAN_Discriminator, DCGAN_Generator

def load_dataset(batch_size=10, download=True):

"""

The output of torchvision datasets are PILImage images of range [0, 1].

Transform them to Tensors of normalized range [-1, 1]

"""

transform = transforms.Compose([transforms.ToTensor(),

transforms.Normalize([0.5], [0.5])])

trainset = torchvision.datasets.MNIST(root='../data', train=True,

download=download,

transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=batch_size,

shuffle=True)

testset = torchvision.datasets.MNIST(root='../data', train=False,

download=download,

transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=batch_size,

shuffle=False)

return trainloader, testloader

def gen_noise(n_instance, n_dim=2):

"""generate n-dim uniform random noise"""

return torch.Tensor(np.random.uniform(low=-1.0, high=1.0,

size=(n_instance, n_dim)))

def train_DCGAN(Dis_model, Gen_model, D_criterion, G_criterion, D_optimizer,

G_optimizer, trainloader, n_epoch, batch_size, noise_dim,

n_update_dis=1, n_update_gen=1, use_gpu=False, print_every=10,

update_max=None):

"""train DCGAN and print out the losses for D and G"""

for epoch in range(n_epoch):

D_running_loss = 0.0

G_running_loss = 0.0

for i, data in enumerate(trainloader, 0):

# get the inputs from true distribution

true_inputs, _ = data

if use_gpu:

true_inputs = true_inputs.cuda()

true_inputs = Variable(true_inputs)

# get the inputs from the generator

noises = gen_noise(batch_size, n_dim=noise_dim)

if use_gpu:

noises = noises.cuda()

fake_inputs = Gen_model(Variable(noises))

inputs = torch.cat([true_inputs, fake_inputs])

# get the labels

labels = np.zeros(2 * batch_size)

labels[:batch_size] = 1

labels = torch.from_numpy(labels.astype(np.float32))

if use_gpu:

labels = labels.cuda()

labels = Variable(labels)

# Discriminator

D_optimizer.zero_grad()

outputs = Dis_model(inputs)#使用类的__call__()显示使用forward()函数

D_loss = D_criterion(outputs[:, 0], labels)

if i % n_update_dis == 0:

D_loss.backward(retain_graph=True)

D_optimizer.step()

# Generator

if i % n_update_gen == 0:

G_optimizer.zero_grad()

G_loss = G_criterion(outputs[batch_size:, 0],

labels[:batch_size])

G_loss.backward()

G_optimizer.step()

# print statistics

D_running_loss += D_loss.item()

G_running_loss += G_loss.item()

if i % print_every == (print_every - 1):

print('[%d, %5d] D loss: %.3f ; G loss: %.3f' %

(epoch+1, i+1, D_running_loss / print_every,

G_running_loss / print_every))

D_running_loss = 0.0

G_running_loss = 0.0

if update_max and i > update_max:

break

print('Finished Training')

def run_DCGAN(n_epoch=2, batch_size=50, use_gpu=False, dis_lr=1e-5,

gen_lr=1e-4, n_update_dis=1, n_update_gen=1, noise_dim=10,

D_featmap_dim=512, G_featmap_dim=1024, n_channel=1,

update_max=None):

# loading data

trainloader, testloader = load_dataset(batch_size=batch_size)

# initialize models

Dis_model = DCGAN_Discriminator(featmap_dim=D_featmap_dim,

n_channel=n_channel)

Gen_model = DCGAN_Generator(featmap_dim=G_featmap_dim, n_channel=n_channel,

noise_dim=noise_dim)

if use_gpu:

Dis_model = Dis_model.cuda()

Gen_model = Gen_model.cuda()

# assign loss function and optimizer (Adam) to D and G

D_criterion = torch.nn.BCELoss()

D_optimizer = optim.Adam(Dis_model.parameters(), lr=dis_lr,

betas=(0.5, 0.999))

G_criterion = torch.nn.BCELoss()

G_optimizer = optim.Adam(Gen_model.parameters(), lr=gen_lr,

betas=(0.5, 0.999))

train_DCGAN(Dis_model, Gen_model, D_criterion, G_criterion, D_optimizer,

G_optimizer, trainloader, n_epoch, batch_size, noise_dim,

n_update_dis, n_update_gen, update_max=update_max)

if __name__ == '__main__':

run_DCGAN(D_featmap_dim=64, G_featmap_dim=128)

6、图形化展示训练结果

在pytorch中可以使用visdom工具做可视化:

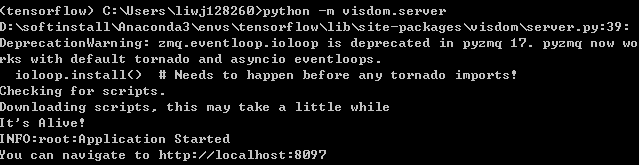

1、在命令行输入:python -m visdom.server,开启web服务,出现

然后将网址输入到浏览器,打开界面

2、在python中(可以是编译器spyder中),输入测试程序

import torch

import visdom

vis = visdom.Visdom(env='test1')

x = torch.arange(1,30,0.01)

y = torch.sin(x)

vis.line(X=x,Y=y,win='sinx',opts={'title':'y=sin(x)'})

Environment选择test1,显示出如下所示的正旋波形图

代码参考自

:https://blog.csdn.net/nanxiaoting/article/details/81395579