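1. Learning and Classification with Naive Bayes

1.1 Basic Method

- Input space: $\mathcal{X} \subseteq \mathbb{R}^n$, a set of $n$-dimensional vectors
- Output space: the set of class labels $\mathcal{Y} = \{c_1, c_2, \ldots, c_K\}$
- Input: a feature vector $x \in \mathcal{X}$
- Output: a class label $y \in \mathcal{Y}$
- $X$ is a random vector on the input space $\mathcal{X}$
- $Y$ is a random variable on the output space $\mathcal{Y}$
- Training data set: $T = \{(x_1, y_1), (x_2, y_2), \ldots, (x_N, y_N)\}$, generated independently and identically distributed (i.i.d.) from the joint probability distribution $P(X, Y)$

Goal: learn the joint probability distribution $P(X, Y)$ from the training data set.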

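- Prior probability distribution:

$$P(Y = c_k), \quad k = 1, 2, \ldots, K$$

- Conditional probability distribution:

$$P(X = x \mid Y = c_k) = P(X^{(1)} = x^{(1)}, \ldots, X^{(n)} = x^{(n)} \mid Y = c_k), \quad k = 1, 2, \ldots, K$$

Multiplying these two gives the joint probability distribution $P(X, Y)$.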

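However, $P(X = x \mid Y = c_k)$ has an exponential number of parameters. If $x^{(j)}$ can take $S_j$ values, $j = 1, 2, \ldots, n$, and $Y$ can take $K$ values, the total number of parameters is $K \prod_{j=1}^{n} S_j$, so estimating this distribution directly is infeasible.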
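To get a feel for how quickly this number grows, the minimal sketch below compares the size of the full conditional table, $K \prod_j S_j$, with the $K \sum_j S_j$ entries that suffice once each feature is modeled separately given the class (the conditional independence assumption introduced next). The function names and the example sizes are illustrative assumptions, not part of the original notes.

```python
from math import prod

def full_table_size(num_classes, feature_sizes):
    """Entries in P(X = x | Y = c_k) with no independence assumption: K * prod(S_j)."""
    return num_classes * prod(feature_sizes)

def factored_table_size(num_classes, feature_sizes):
    """Entries when each P(X^(j) | Y = c_k) is stored separately: K * sum(S_j)."""
    return num_classes * sum(feature_sizes)

# Hypothetical setting: 2 classes, 20 features with 3 possible values each.
K = 2
S = [3] * 20
print(full_table_size(K, S))      # 2 * 3**20, several billion entries
print(factored_table_size(K, S))  # 2 * 60 = 120 entries
```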

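Naive Bayes therefore makes the conditional independence assumption: given the class, the features $X^{(j)}$ are independent of one another.

$$P(X = x \mid Y = c_k) = P(X^{(1)} = x^{(1)}, \ldots, X^{(n)} = x^{(n)} \mid Y = c_k) = \prod_{j=1}^{n} P(X^{(j)} = x^{(j)} \mid Y = c_k) \tag{1}$$

Naive Bayes in effect learns the mechanism that generates the data, so it is a generative model. The conditional independence assumption says that the features used for classification are conditionally independent once the class is fixed. This assumption makes naive Bayes simple, but it sometimes costs some classification accuracy.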

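To classify a given input $x$, naive Bayes uses the learned model to compute the posterior distribution $P(Y = c_k \mid X = x)$ and outputs the class with the largest posterior probability as the class of $x$.

Derivation. By Bayes' theorem:

$$P(Y = c_k \mid X = x) = \frac{P(X = x \mid Y = c_k)\, P(Y = c_k)}{\sum_k P(X = x \mid Y = c_k)\, P(Y = c_k)} \tag{2}$$

Substituting (1) into (2) gives:

$$P(Y = c_k \mid X = x) = \frac{P(Y = c_k) \prod_j P(X^{(j)} = x^{(j)} \mid Y = c_k)}{\sum_k P(Y = c_k) \prod_j P(X^{(j)} = x^{(j)} \mid Y = c_k)}, \quad k = 1, 2, \ldots, K$$

So the naive Bayes classifier can be written as:

$$y = f(x) = \arg\max_{c_k} \frac{P(Y = c_k) \prod_j P(X^{(j)} = x^{(j)} \mid Y = c_k)}{\sum_k P(Y = c_k) \prod_j P(X^{(j)} = x^{(j)} \mid Y = c_k)}$$

The denominator is the same for every $c_k$, so

$$y = \arg\max_{c_k} P(Y = c_k) \prod_j P(X^{(j)} = x^{(j)} \mid Y = c_k)$$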
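The decision rule can be sketched in a few lines. This is a minimal illustration that assumes the prior and the per-feature conditional probabilities are already available as plain dictionaries; the function name `predict` and the data layout are assumptions made for this sketch. It sums log-probabilities instead of multiplying probabilities, which yields the same argmax while avoiding floating-point underflow when there are many features.

```python
import math

def predict(prior, cond, x):
    """Return the class c_k maximizing P(Y=c_k) * prod_j P(X^(j)=x^(j) | Y=c_k).

    prior: dict mapping class -> P(Y = class)
    cond:  list with one dict per feature, mapping (value, class) -> P(X^(j) = value | Y = class)
    x:     sequence of feature values
    """
    def safe_log(p):
        # log(0) is undefined; treat a zero probability as minus infinity.
        return math.log(p) if p > 0 else float("-inf")

    best_class, best_score = None, float("-inf")
    for c, p_c in prior.items():
        score = safe_log(p_c)
        for j, value in enumerate(x):
            score += safe_log(cond[j].get((value, c), 0.0))
        if best_class is None or score > best_score:
            best_class, best_score = c, score
    return best_class

# Toy numbers, chosen only for illustration.
prior = {"a": 0.5, "b": 0.5}
cond = [{(0, "a"): 0.9, (1, "a"): 0.1, (0, "b"): 0.2, (1, "b"): 0.8}]
print(predict(prior, cond, [1]))  # b, since 0.5 * 0.8 > 0.5 * 0.1
```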

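2. Parameter Estimation

2.1 Maximum Likelihood Estimation

- Prior probability: the number of samples with $y_i = c_k$ divided by $N$,

$$P(Y = c_k) = \frac{\sum_{i=1}^{N} I(y_i = c_k)}{N}, \quad k = 1, 2, \ldots, K$$

- Conditional probability distribution $P(X^{(j)} = x^{(j)} \mid Y = c_k)$: let the possible values of the $j$-th feature $x^{(j)}$ be $\{a_{j1}, a_{j2}, \ldots, a_{jS_j}\}$. The maximum likelihood estimate of the conditional probability is

$$P(X^{(j)} = a_{jl} \mid Y = c_k) = \frac{\sum_{i=1}^{N} I(x_i^{(j)} = a_{jl},\, y_i = c_k)}{\sum_{i=1}^{N} I(y_i = c_k)}$$

where $x_i^{(j)}$ is the $j$-th feature of the $i$-th sample, $a_{jl}$ is the $l$-th possible value of the $j$-th feature, and $I$ is the indicator function.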
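The counting behind these estimates can be sketched as follows. This is a minimal illustration using `collections.Counter`; the function name `fit_mle` and the dictionary layout are assumptions of this sketch, and a value that never occurs together with a class simply gets no entry (that is, an estimated probability of 0).

```python
from collections import Counter

def fit_mle(samples, labels):
    """Maximum likelihood estimates for a categorical naive Bayes model.

    samples: list of feature tuples x_i = (x_i^(1), ..., x_i^(n))
    labels:  list of class labels y_i
    Returns (prior, cond) with prior[c] = P(Y=c) and
    cond[j][(a, c)] = P(X^(j)=a | Y=c).
    """
    n_samples = len(labels)
    class_counts = Counter(labels)  # sum_i I(y_i = c_k)
    prior = {c: cnt / n_samples for c, cnt in class_counts.items()}

    cond = []
    for j in range(len(samples[0])):
        # sum_i I(x_i^(j) = a, y_i = c) for every observed pair (a, c)
        joint = Counter((x[j], y) for x, y in zip(samples, labels))
        cond.append({(a, c): cnt / class_counts[c] for (a, c), cnt in joint.items()})
    return prior, cond

# Tiny made-up data set, for illustration only.
samples = [("r",), ("r",), ("g",), ("g",)]
labels = [1, 1, 1, -1]
prior, cond = fit_mle(samples, labels)
print(prior)    # {1: 0.75, -1: 0.25}
print(cond[0])  # conditional estimates for the single feature
```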

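2.2 Learning and Classification Algorithm

Naive Bayes algorithm:

Input:

- Training data $T = \{(x_1, y_1), (x_2, y_2), \ldots, (x_N, y_N)\}$
- where $x_i = (x_i^{(1)}, x_i^{(2)}, \ldots, x_i^{(n)})^T$, and $x_i^{(j)}$ is the $j$-th feature of the $i$-th sample
- $x_i^{(j)} \in \{a_{j1}, a_{j2}, \ldots, a_{jS_j}\}$, where $a_{jl}$ is the $l$-th possible value of the $j$-th feature, $j = 1, 2, \ldots, n$; $l = 1, 2, \ldots, S_j$
- $y_i \in \{c_1, c_2, \ldots, c_K\}$
- An instance $x$

Output: the class $y$ of the instance $x$

Steps:

- Compute the prior and conditional probabilities

$$P(Y = c_k) = \frac{\sum_{i=1}^{N} I(y_i = c_k)}{N}, \quad k = 1, 2, \ldots, K$$

$$P(X^{(j)} = a_{jl} \mid Y = c_k) = \frac{\sum_{i=1}^{N} I(x_i^{(j)} = a_{jl},\, y_i = c_k)}{\sum_{i=1}^{N} I(y_i = c_k)}, \quad j = 1, 2, \ldots, n;\ l = 1, 2, \ldots, S_j;\ k = 1, 2, \ldots, K$$

- For the given instance $x = (x^{(1)}, x^{(2)}, \ldots, x^{(n)})^T$, compute

$$P(Y = c_k) \prod_{j=1}^{n} P(X^{(j)} = x^{(j)} \mid Y = c_k), \quad k = 1, 2, \ldots, K$$

- Determine the class of the instance $x$

$$y = \arg\max_{c_k} P(Y = c_k) \prod_{j=1}^{n} P(X^{(j)} = x^{(j)} \mid Y = c_k)$$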

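2.2.1 Example

Example: Using the training data in the table below, learn a naive Bayes classifier and determine the class label $y$ of $x = (2, S)^T$. $X^{(1)}$ and $X^{(2)}$ are the features, taking values in $A_1 = \{1, 2, 3\}$ and $A_2 = \{S, M, L\}$ respectively, and $Y$ is the class label, $Y \in C = \{1, -1\}$.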

| Training data | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| $X^{(1)}$ | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 3 | 3 | 3 | 3 | 3 |
| $X^{(2)}$ | S | M | M | S | S | S | M | M | L | L | L | M | M | L | L |
| $Y$ | -1 | -1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | -1 |

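Solution:

Prior probabilities

$$P(Y = 1) = \frac{9}{15}, \quad P(Y = -1) = \frac{6}{15}$$

Conditional probabilities

$$P(X^{(1)} = 1 \mid Y = 1) = \frac{2}{9}, \quad P(X^{(1)} = 2 \mid Y = 1) = \frac{3}{9}, \quad P(X^{(1)} = 3 \mid Y = 1) = \frac{4}{9}$$

$$P(X^{(2)} = S \mid Y = 1) = \frac{1}{9}, \quad P(X^{(2)} = M \mid Y = 1) = \frac{4}{9}, \quad P(X^{(2)} = L \mid Y = 1) = \frac{4}{9}$$

$$P(X^{(1)} = 1 \mid Y = -1) = \frac{3}{6}, \quad P(X^{(1)} = 2 \mid Y = -1) = \frac{2}{6}, \quad P(X^{(1)} = 3 \mid Y = -1) = \frac{1}{6}$$

$$P(X^{(2)} = S \mid Y = -1) = \frac{3}{6}, \quad P(X^{(2)} = M \mid Y = -1) = \frac{2}{6}, \quad P(X^{(2)} = L \mid Y = -1) = \frac{1}{6}$$

For the given $x = (2, S)^T$, compute:

For $Y = 1$:

$$P(Y = 1)\, P(X^{(1)} = 2 \mid Y = 1)\, P(X^{(2)} = S \mid Y = 1) = \frac{9}{15} \cdot \frac{3}{9} \cdot \frac{1}{9} = \frac{1}{45}$$

For $Y = -1$:

$$P(Y = -1)\, P(X^{(1)} = 2 \mid Y = -1)\, P(X^{(2)} = S \mid Y = -1) = \frac{6}{15} \cdot \frac{2}{6} \cdot \frac{3}{6} = \frac{1}{15}$$

The value for $Y = -1$ is the largest, so $y = -1$.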
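The arithmetic of this solution can be double-checked with exact fractions. The sketch below hard-codes the probabilities from the solution (it does not re-estimate them from the table) and uses Python's `fractions.Fraction`; the variable names are chosen for this illustration.

```python
from fractions import Fraction as F

# Values copied from the worked solution for x = (2, S).
score_pos = F(9, 15) * F(3, 9) * F(1, 9)  # P(Y=1) P(X1=2|Y=1) P(X2=S|Y=1)
score_neg = F(6, 15) * F(2, 6) * F(3, 6)  # P(Y=-1) P(X1=2|Y=-1) P(X2=S|Y=-1)

print(score_pos)  # 1/45
print(score_neg)  # 1/15
print(-1 if score_neg > score_pos else 1)  # -1
```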

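2.2.2 Example Code

```python
import numpy as np

# Training data: the rows are X(1), X(2), and Y for the 15 samples.
data = [[1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3],
        ['S', 'M', 'M', 'S', 'S', 'S', 'M', 'M', 'L', 'L', 'L', 'M', 'M', 'L', 'L'],
        [-1, -1, 1, 1, -1, -1, -1, 1, 1, 1, 1, 1, 1, 1, -1]]

# Collect the distinct values of each feature and of the label,
# in order of first appearance.
X1 = []
X2 = []
Y = []
for i in range(len(data[0])):
    if data[0][i] not in X1:
        X1.append(data[0][i])
    if data[1][i] not in X2:
        X2.append(data[1][i])
    if data[2][i] not in Y:
        Y.append(data[2][i])

# Number of samples in each class.
nY = [0] * len(Y)
for i in range(len(data[0])):
    nY[Y.index(data[2][i])] += 1

# Prior probabilities P(Y = c_k).
PY = [0.0] * len(Y)
for i in range(len(Y)):
    PY[i] = nY[i] / len(data[0])

# Conditional probabilities P(X(j) = a | Y = c_k):
# first accumulate joint counts, then divide by the class counts.
PX1_Y = np.zeros((len(X1), len(Y)))
PX2_Y = np.zeros((len(X2), len(Y)))
for i in range(len(data[0])):
    PX1_Y[X1.index(data[0][i])][Y.index(data[2][i])] += 1
    PX2_Y[X2.index(data[1][i])][Y.index(data[2][i])] += 1
for i in range(len(Y)):
    PX1_Y[:, i] /= nY[i]
    PX2_Y[:, i] /= nY[i]

# Classify x = (2, S): compute P(Y = c_k) * prod_j P(X(j) = x(j) | Y = c_k).
x = [2, 'S']
PX_Y = [PX1_Y, PX2_Y]
X = [X1, X2]
ProbY = [0.0] * len(Y)
for i in range(len(Y)):
    ProbY[i] = PY[i]
    for j in range(len(x)):
        # float() keeps plain Python floats, so the printed list looks the
        # same regardless of how the installed NumPy version prints scalars.
        ProbY[i] *= float(PX_Y[j][X[j].index(x[j])][i])

# Pick the class with the largest value.
maxProb = -1
idx = -1
for i in range(len(Y)):
    if ProbY[i] > maxProb:
        maxProb = ProbY[i]
        idx = i

print(Y)
print(ProbY)
print(x, ", most likely class y = %d" % (Y[idx]))
```

Output:

```
[-1, 1]
[0.06666666666666667, 0.02222222222222222]
[2, 'S'] , most likely class y = -1
```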

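2.3 Bayesian Estimation (Smoothing)

Maximum likelihood estimation can produce an estimated probability of 0. A single zero factor makes the whole product for that class zero, which distorts the posterior computation and biases the classification. The remedy is to use Bayesian estimation.

Bayesian estimate of the conditional probability:

$$P_\lambda(X^{(j)} = a_{jl} \mid Y = c_k) = \frac{\sum_{i=1}^{N} I(x_i^{(j)} = a_{jl},\, y_i = c_k) + \lambda}{\sum_{i=1}^{N} I(y_i = c_k) + S_j \lambda}$$

Here $\lambda \ge 0$. With $\lambda = 0$ this is the maximum likelihood estimate. With $\lambda > 0$, a positive constant is added to the count of every value the random variable can take. A common choice is $\lambda = 1$, which is called Laplace smoothing (Laplacian smoothing).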

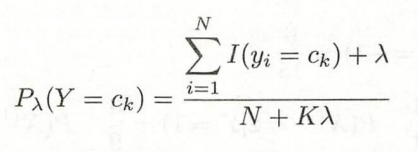

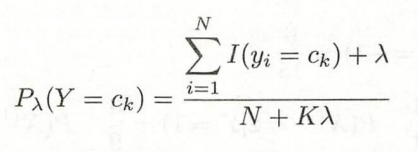

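Bayesian estimate of the prior probability:

$$P_\lambda(Y = c_k) = \frac{\sum_{i=1}^{N} I(y_i = c_k) + \lambda}{N + K \lambda}$$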
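A minimal sketch of both smoothed estimates, written as two small helper functions. The names `smoothed_conditional` and `smoothed_prior` and the parameter `lam` (standing for $\lambda$) are assumptions of this sketch; the counts are passed in directly rather than computed from data.

```python
def smoothed_conditional(joint_count, class_count, num_values, lam=1.0):
    """(count(X^(j)=a_jl, Y=c_k) + lam) / (count(Y=c_k) + S_j * lam)."""
    return (joint_count + lam) / (class_count + num_values * lam)

def smoothed_prior(class_count, num_samples, num_classes, lam=1.0):
    """(count(Y=c_k) + lam) / (N + K * lam)."""
    return (class_count + lam) / (num_samples + num_classes * lam)

# A value never seen together with a class no longer gets probability 0 once lam > 0.
print(smoothed_conditional(0, 9, 3, lam=0.0))  # 0.0, the maximum likelihood estimate
print(smoothed_conditional(0, 9, 3, lam=1.0))  # equals 1/12
print(smoothed_prior(9, 15, 2, lam=1.0))       # equals 10/17
```

Because every one of the $S_j$ values receives the same extra count, the smoothed conditional probabilities for a fixed class still sum to 1.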

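2.3.1 Example

Example (the same problem as above, now estimating the probabilities with Laplace smoothing, $\lambda = 1$): Using the training data in the table below, learn a naive Bayes classifier and determine the class label $y$ of $x = (2, S)^T$. $X^{(1)}$ and $X^{(2)}$ are the features, taking values in $A_1 = \{1, 2, 3\}$ and $A_2 = \{S, M, L\}$ respectively, and $Y$ is the class label, $Y \in C = \{1, -1\}$.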

| Training data | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| $X^{(1)}$ | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 3 | 3 | 3 | 3 | 3 |
| $X^{(2)}$ | S | M | M | S | S | S | M | M | L | L | L | M | M | L | L |
| $Y$ | -1 | -1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | -1 |

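Solution:

Prior probabilities

$$P(Y = 1) = \frac{9 + 1}{15 + 2} = \frac{10}{17}, \quad P(Y = -1) = \frac{6 + 1}{15 + 2} = \frac{7}{17}$$

Conditional probabilities

$$P(X^{(1)} = 1 \mid Y = 1) = \frac{2 + 1}{9 + 3} = \frac{3}{12}, \quad P(X^{(1)} = 2 \mid Y = 1) = \frac{3 + 1}{9 + 3} = \frac{4}{12}, \quad P(X^{(1)} = 3 \mid Y = 1) = \frac{4 + 1}{9 + 3} = \frac{5}{12}$$

$$P(X^{(2)} = S \mid Y = 1) = \frac{1 + 1}{9 + 3} = \frac{2}{12}, \quad P(X^{(2)} = M \mid Y = 1) = \frac{4 + 1}{9 + 3} = \frac{5}{12}, \quad P(X^{(2)} = L \mid Y = 1) = \frac{4 + 1}{9 + 3} = \frac{5}{12}$$

$$P(X^{(1)} = 1 \mid Y = -1) = \frac{3 + 1}{6 + 3} = \frac{4}{9}, \quad P(X^{(1)} = 2 \mid Y = -1) = \frac{2 + 1}{6 + 3} = \frac{3}{9}, \quad P(X^{(1)} = 3 \mid Y = -1) = \frac{1 + 1}{6 + 3} = \frac{2}{9}$$

$$P(X^{(2)} = S \mid Y = -1) = \frac{3 + 1}{6 + 3} = \frac{4}{9}, \quad P(X^{(2)} = M \mid Y = -1) = \frac{2 + 1}{6 + 3} = \frac{3}{9}, \quad P(X^{(2)} = L \mid Y = -1) = \frac{1 + 1}{6 + 3} = \frac{2}{9}$$

For the given $x = (2, S)^T$, compute:

For $Y = 1$:

$$P(Y = 1)\, P(X^{(1)} = 2 \mid Y = 1)\, P(X^{(2)} = S \mid Y = 1) = \frac{10}{17} \cdot \frac{4}{12} \cdot \frac{2}{12} = \frac{5}{153} \approx 0.0327$$

For $Y = -1$:

$$P(Y = -1)\, P(X^{(1)} = 2 \mid Y = -1)\, P(X^{(2)} = S \mid Y = -1) = \frac{7}{17} \cdot \frac{3}{9} \cdot \frac{4}{9} = \frac{28}{459} \approx 0.0610$$

The value for $Y = -1$ is the largest, so $y = -1$.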
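To see how the smoothing parameter enters this computation, the following sketch recomputes both class scores for $x = (2, S)^T$ from the raw counts in the table, once with $\lambda = 0$ and once with $\lambda = 1$. It uses exact fractions; the `counts` dictionary and the function name `score` are assumptions of this illustration, with the counts read off the training table by hand.

```python
from fractions import Fraction as F

N, K, S1, S2 = 15, 2, 3, 3  # samples, classes, values of X(1), values of X(2)

# counts[c] = (count(Y=c), count(X(1)=2, Y=c), count(X(2)=S, Y=c))
counts = {1: (9, 3, 1), -1: (6, 2, 3)}

def score(c, lam):
    """P(Y=c) * P(X(1)=2 | Y=c) * P(X(2)=S | Y=c) with smoothing parameter lam."""
    n_c, n_x1, n_x2 = counts[c]
    prior = F(n_c + lam, N + K * lam)
    return prior * F(n_x1 + lam, n_c + S1 * lam) * F(n_x2 + lam, n_c + S2 * lam)

for lam in (0, 1):
    print(lam, score(1, lam), score(-1, lam))
# 0 1/45 1/15
# 1 5/153 28/459
```

In both cases the score for $Y = -1$ is larger, so smoothing changes the numbers but not the predicted class for this instance.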

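2.3.2 Example Code

```python
import numpy as np

# Training data: the rows are X(1), X(2), and Y for the 15 samples.
data = [[1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3],
        ['S', 'M', 'M', 'S', 'S', 'S', 'M', 'M', 'L', 'L', 'L', 'M', 'M', 'L', 'L'],
        [-1, -1, 1, 1, -1, -1, -1, 1, 1, 1, 1, 1, 1, 1, -1]]

# Collect the distinct values of each feature and of the label,
# in order of first appearance.
X1 = []
X2 = []
Y = []
for i in range(len(data[0])):
    if data[0][i] not in X1:
        X1.append(data[0][i])
    if data[1][i] not in X2:
        X2.append(data[1][i])
    if data[2][i] not in Y:
        Y.append(data[2][i])

# Number of samples in each class.
nY = [0] * len(Y)
for i in range(len(data[0])):
    nY[Y.index(data[2][i])] += 1

# Smoothed prior with lambda = 1: (count + 1) / (N + K).
PY = [0.0] * len(Y)
for i in range(len(Y)):
    PY[i] = (nY[i] + 1) / (len(data[0]) + len(Y))

# Smoothed conditional probabilities with lambda = 1:
# (joint count + 1) / (class count + S_j).
PX1_Y = np.zeros((len(X1), len(Y)))
PX2_Y = np.zeros((len(X2), len(Y)))
for i in range(len(data[0])):
    PX1_Y[X1.index(data[0][i])][Y.index(data[2][i])] += 1
    PX2_Y[X2.index(data[1][i])][Y.index(data[2][i])] += 1
for i in range(len(Y)):
    PX1_Y[:, i] = (PX1_Y[:, i] + 1) / (nY[i] + len(X1))
    PX2_Y[:, i] = (PX2_Y[:, i] + 1) / (nY[i] + len(X2))

# Classify x = (2, S): compute P(Y = c_k) * prod_j P(X(j) = x(j) | Y = c_k).
x = [2, 'S']
PX_Y = [PX1_Y, PX2_Y]
X = [X1, X2]
ProbY = [0.0] * len(Y)
for i in range(len(Y)):
    ProbY[i] = PY[i]
    for j in range(len(x)):
        # float() keeps plain Python floats, so the printed list looks the
        # same regardless of how the installed NumPy version prints scalars.
        ProbY[i] *= float(PX_Y[j][X[j].index(x[j])][i])

# Pick the class with the largest value.
maxProb = -1
idx = -1
for i in range(len(Y)):
    if ProbY[i] > maxProb:
        maxProb = ProbY[i]
        idx = i

print(Y)
print(ProbY)
print(x, ", most likely class y = %d" % (Y[idx]))
```

Output:

```
[-1, 1]
[0.06100217864923746, 0.0326797385620915]
[2, 'S'] , most likely class y = -1
```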