准备数据:20news groups

你可以在github上下到该数据集:20newsbydate.tar.gz

然后找到dataset loader

打开twenty_newsgroups.py

将里面的部分代码修改为:

运行:

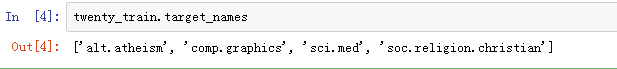

categories = ['alt.atheism', 'soc.religion.christian',

'comp.graphics', 'sci.med']

from sklearn.datasets import fetch_20newsgroups

twenty_train = fetch_20newsgroups(subset='train',

categories=categories, shuffle=True, random_state=42)

这时会产生一个cache,以后都会调用它。

了解20news group

官方文档:

The 20 Newsgroups data set is a collection of approximately 20,000 newsgroup documents, partitioned (nearly) evenly across 20 different newsgroups. To the best of our knowledge, it was originally collected by Ken Lang, probably for his paper “Newsweeder: Learning to filter netnews,” though he does not explicitly mention this collection. The 20 newsgroups collection has become a popular data set for experiments in text applications of machine learning techniques, such as text classification and text clustering.

参数:

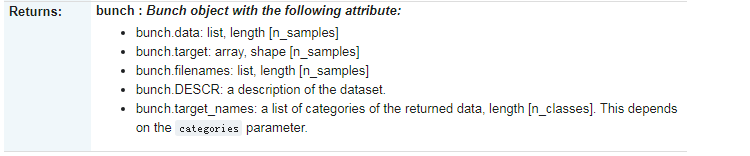

返回值:

返回的twenty_train是一个‘bunch’

你可以用类似字典的key或者对象的attribute一样访问它:

前几行数据:

twenty_train.target存的是整数array,对应着每个document的folder,这也正好是每个document的label。

这就是前10个document的folder(label)。

向量化

ML中,我们肯定要把文档向量化。

对于文档的处理,一般有几个简单的步骤:

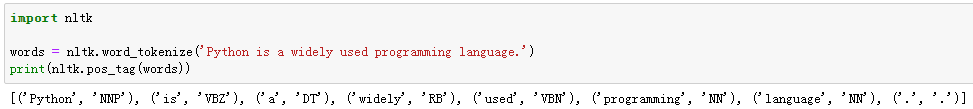

1.分词

我就拿nltk库来举例了。

这就是把句子拆成词。

2.词干提取

looked 和looking我们认为是一样的,因为表达的意义是相同的。

3.词性归并

同样是简化单词的处理。

4.词性标注

词性表:

5.去除停用词

停用词就是在句子中常用的但对意思影响不大的词。

英文中的停用词大概有:

# A list of common english words which should not affect predictions

stopwords = ['a', 'about', 'above', 'across', 'after', 'afterwards', 'again', 'against', 'all', 'almost', 'alone',

'along', 'already', 'also', 'although', 'always', 'am', 'among', 'amongst', 'amoungst', 'amount',

'an', 'and', 'another', 'any', 'anyhow', 'anyone', 'anything', 'anyway', 'anywhere', 'are', 'around',

'as', 'at', 'back', 'be', 'became', 'because', 'become', 'becomes', 'becoming', 'been', 'before',

'beforehand', 'behind', 'being', 'below', 'beside', 'besides', 'between', 'beyond', 'bill', 'both',

'bottom', 'but', 'by', 'call', 'can', 'cannot', 'cant', 'co', 'con', 'could', 'couldnt', 'cry', 'de',

'describe', 'detail', 'did', 'do', 'does', 'doing', 'don', 'done', 'down', 'due', 'during', 'each', 'eg',

'eight', 'either', 'eleven', 'else', 'elsewhere', 'empty', 'enough', 'etc', 'even', 'ever', 'every', 'everyone',

'everything', 'everywhere', 'except', 'few', 'fifteen', 'fify', 'fill', 'find', 'fire', 'first', 'five', 'for',

'former', 'formerly', 'forty', 'found', 'four', 'from', 'front', 'full', 'further', 'get', 'give', 'go', 'had',

'has', 'hasnt', 'have', 'having', 'he', 'hence', 'her', 'here', 'hereafter', 'hereby', 'herein', 'hereupon',

'hers', 'herself', 'him', 'himself', 'his', 'how', 'however', 'hundred', 'i', 'ie', 'if', 'in', 'inc', 'indeed',

'interest', 'into', 'is', 'it', 'its', 'itself', 'just', 'keep', 'last', 'latter', 'latterly', 'least', 'less',

'ltd', 'made', 'many', 'may', 'me', 'meanwhile', 'might', 'mill', 'mine', 'more', 'moreover', 'most', 'mostly',

'move', 'much', 'must', 'my', 'myself', 'name', 'namely', 'neither', 'never', 'nevertheless', 'next', 'nine',

'no', 'nobody', 'none', 'noone', 'nor', 'not', 'nothing', 'now', 'nowhere', 'of', 'off', 'often', 'on', 'once',

'one', 'only', 'onto', 'or', 'other', 'others', 'otherwise', 'our', 'ours', 'ourselves', 'out', 'over', 'own',

'part', 'per', 'perhaps', 'please', 'put', 'rather', 're', 's', 'same', 'see', 'seem', 'seemed', 'seeming',

'seems', 'serious', 'several', 'she', 'should', 'show', 'side', 'since', 'sincere', 'six', 'sixty', 'so',

'some', 'somehow', 'someone', 'something', 'sometime', 'sometimes', 'somewhere', 'still', 'such', 'system',

't', 'take', 'ten', 'than', 'that', 'the', 'their', 'theirs', 'them', 'themselves', 'then', 'thence', 'there',

'thereafter', 'thereby', 'therefore', 'therein', 'thereupon', 'these', 'they', 'thickv', 'thin', 'third', 'this',

'those', 'though', 'three', 'through', 'throughout', 'thru', 'thus', 'to', 'together', 'too', 'top', 'toward',

'towards', 'twelve', 'twenty', 'two', 'un', 'under', 'until', 'up', 'upon', 'us', 'very', 'via', 'was', 'we',

'well', 'were', 'what', 'whatever', 'when', 'whence', 'whenever', 'where', 'whereafter', 'whereas', 'whereby',

'wherein', 'whereupon', 'wherever', 'whether', 'which', 'while', 'whither', 'who', 'whoever', 'whole', 'whom',

'whose', 'why', 'will', 'with', 'within', 'without', 'would', 'yet', 'you', 'your', 'yours', 'yourself',

'yourselves']

直接用nltk中下载的停用词也是可以的。

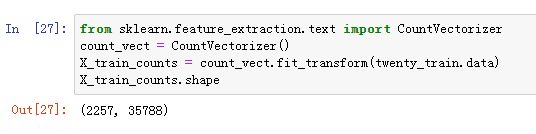

CountVectorizer 和TfidfVectorizer都能完成以上工作,不过他们也是有区别的。

CountVectorizer与TfidfVectorizer

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

cv = CountVectorizer(stop_words='english')

tv = TfidfVectorizer(stop_words='english')

a = "cat hat bat splat cat bat hat mat cat"

b = "cat mat cat sat"

fit_transform后返回的都是

的object。不过数据类型一个是int64,一个是float64。这当然是合理的,因为CountVectorizer是靠数的,TfidfVectorizer是计算分数的。

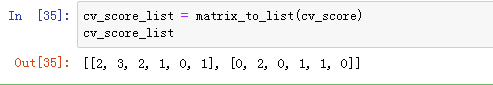

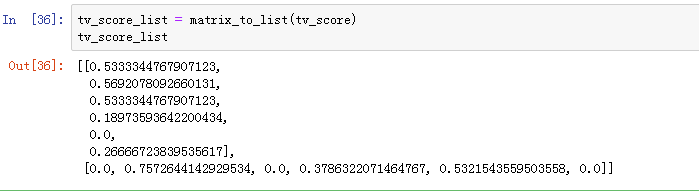

我们先把稀疏矩阵转为list:

def matrix_to_list(matrix):

matrix = matrix.toarray()

return matrix.tolist()

print("tfidf_a tfidf_b count_a count_b word")

print("-"*41)

for i in range(6):

print(" {:.3f} {:.3f} {:} {:} {:}".format(tv_score_list[0][i],

tv_score_list[1][i],

cv_score_list[0][i],

cv_score_list[1][i],

cv.get_feature_names()[i]))

这就很清楚了:比如,bat在a中出现2词,在b中出现0词。

那么,tf_idf是怎么算的呢?

tf_idf计算过程

tf就是term frequency,就是一个term在一句话中的频率。

但是这并不能说明一个term的重要与否,比如一句话中有好多个is,那is就重要了吗?

这时就要idf(inverse document frequency)了。它是所有的文本数量去除以包含指定term的文本数量,再取对数值。比如你有100个文本,99个文本都有is,100除以99,再取对数,最终的数肯定很小了,这样就合理地把is的重要性冲淡了。

tf_idf最后的值是tf的值再乘上idf的值。

我这里给一个小例子(用nltk库):

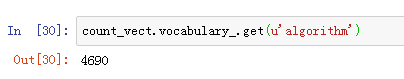

这时候已经把document转为特征向量了。

某个单词(比如algorithm)的index的值对应的就是训练文本中的频率(出现的次数)。

先fit再transform和fit、transform一起做是一样的:

Naive Bayes Classifier

我们有高斯贝叶斯分类器,多项式贝叶斯分类器,伯努力贝叶斯分类器,这里,我们用多项式的。

训练好后我们得到一个classifier。

现在我们呢来检测一番:

docs_new = ['God is love', 'OpenGL on the GPU is fast']

X_new_counts = count_vect.transform(docs_new)

X_new_tfidf = tfidf_transformer.transform(X_new_counts)

predicted = clf.predict(X_new_tfidf)

for doc, category in zip(docs_new, predicted):

print('%r => %s' % (doc, twenty_train.target_names[category]))

得到:

'God is love' => soc.religion.christian

'OpenGL on the GPU is fast' => comp.graphics

当然pipeline可以一口气做完:

from sklearn.pipeline import Pipeline

text_clf = Pipeline([

('vect', CountVectorizer()),

('tfidf', TfidfTransformer()),

('clf', MultinomialNB()),

])

text_clf.fit(twenty_train.data,twenty_train.target)

在test上进行模型评估

import numpy as np

twenty_test = fetch_20newsgroups(subset='test',

categories=categories, shuffle=True, random_state=42)

docs_test = twenty_test.data

predicted = text_clf.predict(docs_test)

np.mean(predicted == twenty_test.target)

最后的准确率是83%。

当然,我们也可以自己亲自处理数据,而不用sklearn提供的向量化。

数据:https://github.com/resuscitate/20news_group

代码:

import os

import string

import numpy as np

import matplotlib.pyplot as plt

from sklearn import model_selection

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import classification_report

#X存的是文件名和文本内容(tuple)

#Y存的是X对应的类别

X = []

Y = []

for category in os.listdir('20_newsgroups'):

for document in os.listdir('20_newsgroups/'+category):

with open('20_newsgroups/'+category+'/'+document, "r",encoding='gbk',errors='ignore') as f:

X.append((document,f.read()))

Y.append(category)

#划分训练集和测试集

X_train, X_test, Y_train, Y_test = model_selection.train_test_split(X, Y, test_size=0.25, random_state=0)

#停用词

stopwords = ['a', 'about', 'above', 'across', 'after', 'afterwards', 'again', 'against', 'all', 'almost', 'alone',

'along', 'already', 'also', 'although', 'always', 'am', 'among', 'amongst', 'amoungst', 'amount',

'an', 'and', 'another', 'any', 'anyhow', 'anyone', 'anything', 'anyway', 'anywhere', 'are', 'around',

'as', 'at', 'back', 'be', 'became', 'because', 'become', 'becomes', 'becoming', 'been', 'before',

'beforehand', 'behind', 'being', 'below', 'beside', 'besides', 'between', 'beyond', 'bill', 'both',

'bottom', 'but', 'by', 'call', 'can', 'cannot', 'cant', 'co', 'con', 'could', 'couldnt', 'cry', 'de',

'describe', 'detail', 'did', 'do', 'does', 'doing', 'don', 'done', 'down', 'due', 'during', 'each', 'eg',

'eight', 'either', 'eleven', 'else', 'elsewhere', 'empty', 'enough', 'etc', 'even', 'ever', 'every', 'everyone',

'everything', 'everywhere', 'except', 'few', 'fifteen', 'fify', 'fill', 'find', 'fire', 'first', 'five', 'for',

'former', 'formerly', 'forty', 'found', 'four', 'from', 'front', 'full', 'further', 'get', 'give', 'go', 'had',

'has', 'hasnt', 'have', 'having', 'he', 'hence', 'her', 'here', 'hereafter', 'hereby', 'herein', 'hereupon',

'hers', 'herself', 'him', 'himself', 'his', 'how', 'however', 'hundred', 'i', 'ie', 'if', 'in', 'inc', 'indeed',

'interest', 'into', 'is', 'it', 'its', 'itself', 'just', 'keep', 'last', 'latter', 'latterly', 'least', 'less',

'ltd', 'made', 'many', 'may', 'me', 'meanwhile', 'might', 'mill', 'mine', 'more', 'moreover', 'most', 'mostly',

'move', 'much', 'must', 'my', 'myself', 'name', 'namely', 'neither', 'never', 'nevertheless', 'next', 'nine',

'no', 'nobody', 'none', 'noone', 'nor', 'not', 'nothing', 'now', 'nowhere', 'of', 'off', 'often', 'on', 'once',

'one', 'only', 'onto', 'or', 'other', 'others', 'otherwise', 'our', 'ours', 'ourselves', 'out', 'over', 'own',

'part', 'per', 'perhaps', 'please', 'put', 'rather', 're', 's', 'same', 'see', 'seem', 'seemed', 'seeming',

'seems', 'serious', 'several', 'she', 'should', 'show', 'side', 'since', 'sincere', 'six', 'sixty', 'so',

'some', 'somehow', 'someone', 'something', 'sometime', 'sometimes', 'somewhere', 'still', 'such', 'system',

't', 'take', 'ten', 'than', 'that', 'the', 'their', 'theirs', 'them', 'themselves', 'then', 'thence', 'there',

'thereafter', 'thereby', 'therefore', 'therein', 'thereupon', 'these', 'they', 'thickv', 'thin', 'third', 'this',

'those', 'though', 'three', 'through', 'throughout', 'thru', 'thus', 'to', 'together', 'too', 'top', 'toward',

'towards', 'twelve', 'twenty', 'two', 'un', 'under', 'until', 'up', 'upon', 'us', 'very', 'via', 'was', 'we',

'well', 'were', 'what', 'whatever', 'when', 'whence', 'whenever', 'where', 'whereafter', 'whereas', 'whereby',

'wherein', 'whereupon', 'wherever', 'whether', 'which', 'while', 'whither', 'who', 'whoever', 'whole', 'whom',

'whose', 'why', 'will', 'with', 'within', 'without', 'would', 'yet', 'you', 'your', 'yours', 'yourself',

'yourselves']

#去除停用词后,构造一个单词与单词数量对应的字典

vocab = {}

for i in range(len(X_train)):

word_list = []

for word in X_train[i][1].split():

word_new = word.strip(string.punctuation).lower()

if (len(word_new)>2) and (word_new not in stopwords):

if word_new in vocab:

vocab[word_new]+=1

else:

vocab[word_new]=1

#画出单词数与频率的图像,以此决定临界值(为了选feature)

num_words = [0 for i in range(max(vocab.values())+1)]

freq = [i for i in range(max(vocab.values())+1)]

for key in vocab:

num_words[vocab[key]]+=1

plt.plot(freq,num_words)

plt.axis([1, 10, 0, 20000])

plt.xlabel("Frequency")

plt.ylabel("No of words")

plt.grid()

plt.show()

#临界值

cutoff_freq = 80

num_words_above_cutoff = len(vocab)-sum(num_words[0:cutoff_freq])

print("Number of words with frequency higher than cutoff frequency({}) :".format(cutoff_freq),num_words_above_cutoff)

#选取大于临界值的feature

features = []

for key in vocab:

if vocab[key] >=cutoff_freq:

features.append(key)

#训练集的feature矩阵

X_train_dataset = np.zeros((len(X_train),len(features)))

for i in range(len(X_train)):

word_list = [ word.strip(string.punctuation).lower() for word in X_train[i][1].split()]

for word in word_list:

if word in features:

X_train_dataset[i][features.index(word)] += 1

#测试集的feature矩阵

X_test_dataset = np.zeros((len(X_test),len(features)))

for i in range(len(X_test)):

word_list = [ word.strip(string.punctuation).lower() for word in X_test[i][1].split()]

for word in word_list:

if word in features:

X_test_dataset[i][features.index(word)] += 1

clf = MultinomialNB()

#训练数据

clf.fit(X_train_dataset,Y_train)

#对测试集做预测

Y_test_pred = clf.predict(X_test_dataset)

sklearn_score_train = clf.score(X_train_dataset,Y_train)

print("Sklearn's score on training data :",sklearn_score_train)

sklearn_score_test = clf.score(X_test_dataset,Y_test)

print("Sklearn's score on testing data :",sklearn_score_test)

print("Classification report for testing data :-")

print(classification_report(Y_test, Y_test_pred))

print(np.mean(Y_test==Y_test_pred))#86%的准确率