- Required software and download links

Extraction code: 0o35


Starting the installation…

1. Installation and configuration

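I configured the environment variables in the root user's own environment, because I did everything as root. The hadoop user would also work, but in my case the hadoop user hit permission errors on directories and executables that had been created earlier.

So I work as root and edit the profile with vim ~/.bash_profile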
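As a minimal sketch of this step, the helper below appends an export line to a profile file only when the line is not already present, so running it again does not pile up duplicates. The name `append_once` and the `PROFILE` variable are my own illustration, not part of the original setup; `PROFILE` defaults to a scratch file so the sketch can be tried without touching the real `~/.bash_profile`.

```shell
#!/bin/sh
# Hypothetical helper: add a line to a file unless an identical line exists.
append_once() {
    file=$1
    line=$2
    touch "$file"
    # -x matches the whole line, -F treats the pattern as a fixed string
    if ! grep -qxF -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Assumption: a scratch file by default; set PROFILE=~/.bash_profile to apply for real.
PROFILE=${PROFILE:-$(mktemp)}

append_once "$PROFILE" 'export JAVA_HOME=/home/hadoop/app/jdk1.7.0_79'
append_once "$PROFILE" 'export PATH=$JAVA_HOME/bin:$PATH'
# A repeated call leaves the file unchanged.
append_once "$PROFILE" 'export JAVA_HOME=/home/hadoop/app/jdk1.7.0_79'
```

After editing the real profile, `source ~/.bash_profile` makes the variables take effect in the current shell.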

- Install the JDK

- Extract

- Configure environment variables

- Install Maven

- Extract Maven

- Configure environment variables

- Configure settings.xml

<mirror>

<id>alimaven</id>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>
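The mirror entry has to sit inside the `<mirrors>` element of settings.xml. As a rough sketch, the script below writes a minimal settings file containing only that mirror. `SETTINGS_FILE` is a name I made up; it defaults to a scratch file, on the assumption that you review the result before copying it to `~/.m2/settings.xml` or merging it into `$MAVEN_HOME/conf/settings.xml`.

```shell
#!/bin/sh
# Assumption: write to a scratch file first instead of overwriting real settings.
SETTINGS_FILE=${SETTINGS_FILE:-$(mktemp)}

# Quoted heredoc delimiter: the XML is written literally, with no shell expansion.
cat > "$SETTINGS_FILE" <<'EOF'
<settings>
  <mirrors>
    <mirror>
      <id>alimaven</id>
      <name>aliyun maven</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
      <mirrorOf>central</mirrorOf>
    </mirror>
  </mirrors>
</settings>
EOF

echo "wrote $SETTINGS_FILE"
```

If a settings.xml already exists, it is probably safer to paste only the `<mirror>` element into its existing `<mirrors>` section than to replace the whole file.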

- Install protobuf

- Extract and install

tar -zxvf protobuf-2.5.0.tar.gz -C …/servers/

cd /export/servers/protobuf-2.5.0

./configure

make && make install

- Configure environment variables

- Check that the installation succeeded

protoc --version
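As far as I know, the Hadoop 2.x build expects protoc 2.5.0 exactly, and `protoc --version` reports it as `libprotoc 2.5.0`. The check below is a small sketch of that comparison; the function name `protoc_version_ok` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the reported version string
# matches the expected version exactly.
protoc_version_ok() {
    reported=$1   # the output of `protoc --version`
    expected=$2   # for this build: 2.5.0
    [ "$reported" = "libprotoc $expected" ]
}

if command -v protoc >/dev/null 2>&1; then
    if protoc_version_ok "$(protoc --version)" 2.5.0; then
        echo "protoc 2.5.0 found"
    else
        echo "unexpected protoc version: $(protoc --version)"
    fi
else
    echo "protoc is not on PATH"
fi
```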

- Install the following dependency packages

yum install autoconf automake libtool cmake

yum install ncurses-devel

yum install openssl-devel

yum install lzo-devel zlib-devel gcc gcc-c++

- Dependency package required for bzip2 compression

- Download

yum install -y bzip2-devel
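Before starting the long build it can help to confirm that the build tools really ended up on PATH. The sketch below lists any command that cannot be found. The helper name `missing_tools` is mine, and the mapping from package names to command names (for example gcc-c++ providing `g++`) is an assumption.

```shell
#!/bin/sh
# Hypothetical helper: print each command name that cannot be found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Assumption: these command names correspond to the packages installed above.
missing=$(missing_tools autoconf automake cmake gcc g++ make)
if [ -n "$missing" ]; then
    echo "not found on PATH:" $missing
else
    echo "all build tools found"
fi
```

The `-devel` packages only ship headers and libraries, so they do not show up this way; `rpm -q ncurses-devel openssl-devel lzo-devel zlib-devel bzip2-devel` is one way to check those.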


2. Final environment variables

export JAVA_HOME=/home/hadoop/app/jdk1.7.0_79

export PROTOC_HOME=/root/protobuf-2.5.0

export MAVEN_HOME=/home/hadoop/app/apache-maven-3.3.9

export FINDBUGS_HOME=/home/hadoop/software/compile/findbugs-1.3.9

export PATH=$JAVA_HOME/bin:$PROTOC_HOME/bin:$MAVEN_HOME/bin:$FINDBUGS_HOME/bin:$PATH
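After reloading the profile, one way to confirm that PATH picks up the intended copies is to see where each tool resolves. This is only a sketch; `resolves_under` is a made-up helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if `tool` resolves to an executable inside `dir`.
resolves_under() {
    tool=$1
    dir=$2
    resolved=$(command -v "$tool" 2>/dev/null) || return 1
    case $resolved in
        "$dir"/*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Report, for each tool, whether PATH selects the copy under the configured home.
for pair in "java:${JAVA_HOME:-}" "mvn:${MAVEN_HOME:-}" "protoc:${PROTOC_HOME:-}"; do
    name=${pair%%:*}
    home=${pair#*:}
    if [ -n "$home" ] && resolves_under "$name" "$home/bin"; then
        echo "$name -> $home/bin"
    else
        echo "$name does not resolve under '$home/bin'"
    fi
done
```

Note that `./configure` without `--prefix` installs under /usr/local by default, so `protoc` may be reported as not resolving under `$PROTOC_HOME/bin` even though it works.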

3. Compiling the Hadoop source

- Install the Hadoop source

- Extract

- Compile and install

mvn package -Pdist,native -DskipTests -Dtar

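- Snappy support requires downloading and installing snappy first

- Build and install snappy

tar -zxf snappy-1.1.1.tar.gz -C …/servers/

cd …/servers/snappy-1.1.1/

./configure

make && make install

- Compile the source with snappy support

mvn package -DskipTests -Pdist,native -Dtar -Drequire.snappy -e -X

-X prints the detailed debug log; -e reports full error messages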
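Once the built distribution is unpacked, `hadoop checknative -a` is a quick way to see which native libraries (snappy among them) were actually picked up. The wrapper below is a sketch; `HADOOP_BIN` and `report_native` are names I introduced, and `HADOOP_BIN` is assumed to point at the launcher of the freshly built distribution.

```shell
#!/bin/sh
# Assumption: HADOOP_BIN is the hadoop launcher from the newly built distribution.
HADOOP_BIN=${HADOOP_BIN:-hadoop}

# Hypothetical wrapper: run the native library check if the launcher exists.
report_native() {
    if command -v "$1" >/dev/null 2>&1; then
        # Lists each native library and whether it could be loaded
        "$1" checknative -a
    else
        echo "hadoop launcher not found: $1"
        return 127
    fi
}

report_native "$HADOOP_BIN" || echo "native check did not pass"
```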

4. Result

- Build succeeded

[INFO] --- maven-javadoc-plugin:2.8.1:jar (module-javadocs) @ hadoop-dist ---

[INFO] Building jar: /home/hadoop/software/compile/hadoop-2.6.0-cdh5.7.0/hadoop-dist/target/hadoop-dist-2.6.0-cdh5.7.0-javadoc.jar

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] Apache Hadoop Main ................................. SUCCESS [ 4.355 s]

[INFO] Apache Hadoop Project POM .......................... SUCCESS [ 2.377 s]

[INFO] Apache Hadoop Annotations .......................... SUCCESS [ 6.614 s]

[INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 1.079 s]

[INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 2.813 s]

[INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 6.289 s]

[INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 7.335 s]

[INFO] Apache Hadoop Auth ................................. SUCCESS [ 7.251 s]

[INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 5.306 s]

[INFO] Apache Hadoop Common ............................... SUCCESS [04:02 min]

[INFO] Apache Hadoop NFS .................................. SUCCESS [ 10.869 s]

[INFO] Apache Hadoop KMS .................................. SUCCESS [01:13 min]

[INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.094 s]

[INFO] Apache Hadoop HDFS ................................. SUCCESS [05:32 min]

[INFO] Apache Hadoop HttpFS ............................... SUCCESS [03:57 min]

[INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 14.586 s]

[INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 10.348 s]

[INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.365 s]

[INFO] hadoop-yarn ........................................ SUCCESS [ 0.144 s]

[INFO] hadoop-yarn-api .................................... SUCCESS [02:29 min]

[INFO] hadoop-yarn-common ................................. SUCCESS [ 42.984 s]

[INFO] hadoop-yarn-server ................................. SUCCESS [ 0.211 s]

[INFO] hadoop-yarn-server-common .......................... SUCCESS [ 17.051 s]

[INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [ 28.840 s]

[INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 4.770 s]

[INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 10.825 s]

[INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 33.446 s]

[INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 2.175 s]

[INFO] hadoop-yarn-client ................................. SUCCESS [ 9.191 s]

[INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.096 s]

[INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 4.704 s]

[INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 3.566 s]

[INFO] hadoop-yarn-site ................................... SUCCESS [ 0.139 s]

[INFO] hadoop-yarn-registry ............................... SUCCESS [ 9.109 s]

[INFO] hadoop-yarn-project ................................ SUCCESS [ 10.264 s]

[INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.259 s]

[INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 34.068 s]

[INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 27.019 s]

[INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 7.110 s]

[INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 17.977 s]

[INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 13.277 s]

[INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [ 11.089 s]

[INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 4.022 s]

[INFO] hadoop-mapreduce-client-nativetask ................. SUCCESS [02:03 min]

[INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 8.514 s]

[INFO] hadoop-mapreduce ................................... SUCCESS [ 8.381 s]

[INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 7.468 s]

[INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 14.055 s]

[INFO] Apache Hadoop Archives ............................. SUCCESS [ 4.625 s]

[INFO] Apache Hadoop Archive Logs ......................... SUCCESS [ 4.193 s]

[INFO] Apache Hadoop Rumen ................................ SUCCESS [ 9.228 s]

[INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 7.490 s]

[INFO] Apache Hadoop Data Join ............................ SUCCESS [ 4.210 s]

[INFO] Apache Hadoop Ant Tasks ............................ SUCCESS [ 3.682 s]

[INFO] Apache Hadoop Extras ............................... SUCCESS [ 4.795 s]

[INFO] Apache Hadoop Pipes ................................ SUCCESS [ 11.485 s]

[INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 9.499 s]

[INFO] Apache Hadoop Amazon Web Services support .......... SUCCESS [ 9.641 s]

[INFO] Apache Hadoop Azure support ........................ SUCCESS [ 7.469 s]

[INFO] Apache Hadoop Client ............................... SUCCESS [ 9.368 s]

[INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 4.012 s]

[INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 6.967 s]

[INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 13.934 s]

[INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.069 s]

[INFO] Apache Hadoop Distribution ......................... SUCCESS [ 56.287 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 29:06 min

[INFO] Finished at: 2018-12-05T22:51:11+08:00

[INFO] Final Memory: 146M/412M
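With `-Dtar`, the packaged distribution should end up as a `.tar.gz` under `hadoop-dist/target` in the source tree, the same directory the log above writes the javadoc jar to. The sketch below looks for it; `find_dist_tarball` and `SRC_DIR` are illustrative names, and the default path is taken from the log.

```shell
#!/bin/sh
# Hypothetical helper: print the distribution tarball(s) under a source tree.
find_dist_tarball() {
    target_dir=$1/hadoop-dist/target
    # Print nothing if the build output directory does not exist
    [ -d "$target_dir" ] || return 0
    find "$target_dir" -maxdepth 1 -name 'hadoop-*.tar.gz'
}

# Path taken from the build log; adjust if the source was unpacked elsewhere.
SRC_DIR=${SRC_DIR:-/home/hadoop/software/compile/hadoop-2.6.0-cdh5.7.0}

tarball=$(find_dist_tarball "$SRC_DIR")
if [ -n "$tarball" ]; then
    echo "distribution tarball: $tarball"
else
    echo "no tarball found under $SRC_DIR/hadoop-dist/target"
fi
```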

-

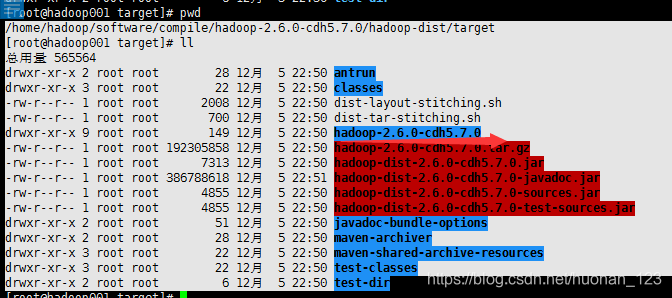

结果如下图

- Reference for compiling the Hadoop source


Reference blog: https://blog.csdn.net/weixin_43070138/article/details/82194208

5. Compiling and configuring LZO compression

[hadoop@hadoop001 native]$ lzop --version

lzop 1.03

LZO library 2.06

Copyright (C) 1996-2010 Markus Franz Xaver Johannes Oberhumer

- After completing the steps above

- Check whether the lzo library links were generated in /home/hadoop/app/hadoop-lzo-master/build/native/Linux-amd64-64/lib

[hadoop@hadoop001 lib]$ ll

总用量 196

-rw-r--r-- 1 hadoop hadoop 116430 12月 3 23:09 libgplcompression.a

-rw-rw-r-- 1 hadoop hadoop 1132 12月 3 23:09 libgplcompression.la

lrwxrwxrwx 1 hadoop hadoop 26 12月 3 23:09 libgplcompression.so -> libgplcompression.so.0.0.0

lrwxrwxrwx 1 hadoop hadoop 26 12月 3 23:09 libgplcompression.so.0 -> libgplcompression.so.0.0.0

-rwxrwxr-x 1 hadoop hadoop 77424 12月 3 23:09 libgplcompression.so.0.0.0

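- Copy everything under /home/hadoop/app/hadoop-lzo-master/build/native/Linux-amd64-64/lib into $HADOOP_HOME/lib/native of your own Hadoop installation

(Guides online walk through a lot of configuration; here I simply copy the generated files over.)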
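The copy step could look roughly like the sketch below. It uses `cp -a` so that `libgplcompression.so` and `libgplcompression.so.0` stay symlinks rather than turning into extra copies of the library. `copy_native_libs` and `LZO_LIB_DIR` are names I made up, and the script assumes `HADOOP_HOME` is already exported.

```shell
#!/bin/sh
# Hypothetical helper: copy native libraries, preserving symlinks and modes.
copy_native_libs() {
    src=$1
    dest=$2
    mkdir -p "$dest"
    # "$src"/. copies the directory contents, not the directory itself
    cp -a "$src"/. "$dest"/
}

LZO_LIB_DIR=/home/hadoop/app/hadoop-lzo-master/build/native/Linux-amd64-64/lib

# Only copy when both the build output and HADOOP_HOME are available.
if [ -d "$LZO_LIB_DIR" ] && [ -n "${HADOOP_HOME:-}" ]; then
    copy_native_libs "$LZO_LIB_DIR" "$HADOOP_HOME/lib/native"
else
    echo "skipping copy: source directory or HADOOP_HOME not available"
fi
```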