Introduction to TensorBoard

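TensorBoard can currently display the network structure and several kinds of data: scalar metrics, images, audio, the computation graph (as a directed graph), and distributions and histograms of parameter variables. TensorBoard works by starting a web server. That server process reads summary data from the event files written while a TensorFlow program runs, then renders the data as charts in a web page.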
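To make the "reads event files" step more concrete, here is a simplified, hypothetical model of how TensorBoard finds data under `--logdir`. It walks the directory tree and treats every directory containing a file whose name includes `tfevents` as one run. FileWriter names its files `events.out.tfevents.<timestamp>.<hostname>`. The helper `find_runs` is only an illustration and is not TensorBoard's actual code.

```python
import os
import tempfile


def find_runs(logdir):
    """Hypothetical helper: list directories under logdir that contain event files.

    Each returned path (relative to logdir) corresponds to one "run" in the
    TensorBoard UI; "." means event files sit directly in logdir.
    """
    runs = []
    for dirpath, _dirnames, filenames in os.walk(logdir):
        if any("tfevents" in name for name in filenames):
            runs.append(os.path.relpath(dirpath, logdir))
    return sorted(runs)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as logdir:
        # Fake a layout with separate train/ and test/ writers (empty files).
        for sub in ("train", "test"):
            os.makedirs(os.path.join(logdir, sub))
            open(os.path.join(logdir, sub, "events.out.tfevents.1522070099.pc314"), "w").close()
        open(os.path.join(logdir, "notes.txt"), "w").close()  # ignored: not an event file
        print(find_runs(logdir))  # ['test', 'train']
```

This is why writing training and test summaries into separate subdirectories of one log directory lets TensorBoard show them as two runs that can be compared.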

Computation graph visualization

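If you only want to display the network structure, tf.name_scope() is usually enough. Keep in mind that all nodes in the same name scope are collapsed into a single node. As a result, the TensorBoard graph view initially shows only the top-level scopes, and each collapsed node can be expanded in the UI to see the ops inside it.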
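A small, purely illustrative model helps picture the collapsing. Ops created inside `tf.name_scope('hidden1')` get names prefixed with `hidden1/`, and the graph view first draws one box per top-level prefix. The function `group_by_top_scope` below is a hypothetical sketch of that grouping, not TensorBoard's graph-layout code. The op names are made-up examples in the style of the test code later in this post.

```python
from collections import defaultdict


def group_by_top_scope(op_names):
    """Hypothetical sketch: map each top-level name scope to the ops inside it.

    An op with no '/' in its name is its own top-level node.
    """
    groups = defaultdict(list)
    for name in op_names:
        top = name.split("/", 1)[0]  # first path component = top-level scope
        groups[top].append(name)
    return dict(groups)


ops = [
    "inputsAndLabels/Placeholder",
    "hidden1/Reshape",
    "hidden1/Conv2D",
    "hidden1/Relu",
    "train/Adam",
    "init",
]
for scope, members in group_by_top_scope(ops).items():
    print(scope, len(members))
```

With these names the graph would start with four boxes. `hidden1` stands for three ops until it is expanded.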

Visualizing monitored metrics

1. The overall workflow (following the University of Hong Kong TensorFlow slides)

1. From the TF graph, decide which tensors you want to log

```python
w2_hist = tf.summary.histogram("weights2", W2)
cost_summ = tf.summary.scalar("cost", cost)
```

2. Merge all summaries into a single op

```python
summary = tf.summary.merge_all()
```

3. Create a writer and add the graph

```python
# Create the summary writer, then add the graph
writer = tf.summary.FileWriter('./logs')
writer.add_graph(sess.graph)

# or pass the graph to the constructor directly
writer = tf.summary.FileWriter(logdir, sess.graph)
```

4. Run the merged summary op and write the result with add_summary

```python
s, _ = sess.run([summary, optimizer], feed_dict=feed_dict)
writer.add_summary(s, global_step=global_step)
```

5. Launch TensorBoard

```shell
tensorboard --logdir=./logs
```

2. Monitored metrics

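Scalar data, such as accuracy or the cost (loss) function: add a summary op with tf.summary.scalar.

Parameter data, such as the weight matrices and bias vectors: usually recorded with tf.summary.histogram.

Image data: recorded with tf.summary.image.

Audio data: recorded with tf.summary.audio.

Summary ops behave like any other op. No other computation node depends on them, so they are not executed automatically and must be triggered explicitly through Session.run().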
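The reason summary ops never run on their own follows from how Session.run() works: it evaluates only the fetched nodes and whatever they depend on. The toy graph below is a hypothetical pure-Python model of that rule. The `Node` class and `run` function are illustrations, not TensorFlow APIs. A summary node depends on the loss, but nothing depends on the summary, so fetching only the training op never touches it.

```python
class Node:
    """Hypothetical graph node: a name, a function, and its input nodes."""

    def __init__(self, name, fn, inputs=()):
        self.name = name
        self.fn = fn
        self.inputs = list(inputs)


def run(fetches, feed):
    """Evaluate only the fetched nodes and their ancestors, like Session.run.

    Returns the fetched values and the names of the nodes that actually executed.
    """
    cache, executed = {}, []

    def evaluate(node):
        if node.name not in cache:
            args = [evaluate(inp) for inp in node.inputs]  # dependencies first
            cache[node.name] = node.fn(feed, *args)
            executed.append(node.name)
        return cache[node.name]

    return [evaluate(node) for node in fetches], executed


x = Node("x", lambda feed: feed["x"])                     # like a placeholder
loss = Node("loss", lambda feed, v: v * v, [x])
train = Node("train", lambda feed, v: v * 0.1, [loss])    # stand-in for an optimizer step
loss_summary = Node("loss_summary", lambda feed, v: "loss=%g" % v, [loss])

_, ran = run([train], {"x": 3.0})
print(ran)  # ['x', 'loss', 'train'], so the summary node never ran
_, ran = run([train, loss_summary], {"x": 3.0})
print(ran)  # ['x', 'loss', 'train', 'loss_summary']
```

Fetching the summary together with the training op, as in `sess.run([summary, optimizer], ...)`, is what makes it execute.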

3. Function reference

I. SCALAR

tf.summary.scalar(name, tensor, collections=None, family=None)

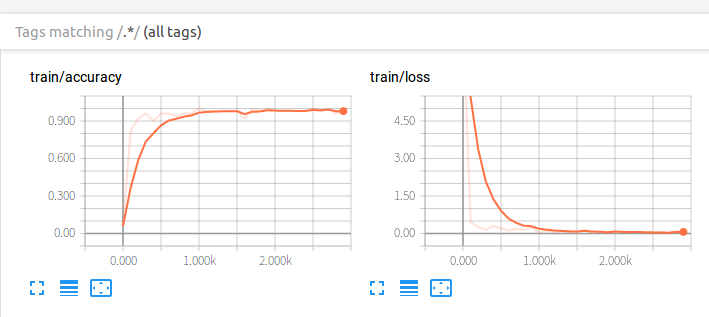

Visualizes how values change over training iterations: accuracy (val acc), loss (train/test loss), learning rate, and per-layer statistics of the weights and biases (mean, std, max/min).

Input parameters:

name: the name of this op node; TensorBoard displays the chart under this name (the summary tag).

tensor: the variable to monitor, a real numeric Tensor containing a single value.

Output:

A scalar Tensor of type string, containing a Summary protobuf.
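Each of the per-layer statistics mentioned for SCALAR (mean, std, max/min) is a single number, so each can be logged with its own tf.summary.scalar call. In TF 1.x they would typically be computed in-graph with `tf.reduce_mean`, `tf.reduce_max` and `tf.reduce_min`. The NumPy sketch below only shows which values end up on the charts. It also shows how slash-separated tags such as `hidden1/W_conv1/mean` keep related charts grouped together. The helper `layer_stat_tags` and its tag scheme are hypothetical.

```python
import numpy as np


def layer_stat_tags(prefix, weights):
    """Hypothetical helper: return {tag: value} for the usual per-layer statistics.

    Each value is a plain float, i.e. the kind of value a scalar summary records.
    """
    w = np.asarray(weights, dtype=np.float64)
    return {
        prefix + "/mean": float(w.mean()),
        prefix + "/std": float(w.std()),  # population std: sqrt(mean((w - mean)^2))
        prefix + "/max": float(w.max()),
        prefix + "/min": float(w.min()),
    }


# Random 5x5x1x32 kernel, shaped like W_conv1 in the test code below.
rng = np.random.default_rng(0)
stats = layer_stat_tags("hidden1/W_conv1", rng.normal(0.0, 0.1, size=(5, 5, 1, 32)))
for tag, value in stats.items():
    print("%-22s %.4f" % (tag, value))
```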

II. IMAGE

tf.summary.image(name, tensor, max_outputs=3, collections=None, family=None)

Visualizes the training/test images used in the current iteration, or feature maps.

Input parameters:

name: the name of this op node; TensorBoard displays the images under this name (the summary tag).

tensor: a 4-D uint8 or float32 Tensor of shape [batch_size, height, width, channels], where channels is 1, 3, or 4.

max_outputs: the maximum number of batch elements to generate images for (default 3).

Output:

A scalar Tensor of type string, containing a Summary protobuf.
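tf.summary.image expects a 4-D [batch_size, height, width, channels] tensor, so flat MNIST vectors have to be reshaped first. The test code below does this with `tf.reshape(x_raw, shape=[-1,28,28,1])`. According to the TensorFlow 1.x documentation, uint8 input is displayed unchanged, while float input is rescaled one image at a time. Converting to uint8 yourself therefore keeps the original intensities. The NumPy sketch below does that conversion and checks the documented shape rules. Both helper names are hypothetical.

```python
import numpy as np


def to_image_summary_batch(flat_batch, height=28, width=28):
    """Hypothetical helper: turn [N, height*width] floats in [0, 1] into a
    uint8 array of shape [N, height, width, 1] (grayscale, channels=1)."""
    images = np.asarray(flat_batch, dtype=np.float32).reshape(-1, height, width, 1)
    images = np.clip(images, 0.0, 1.0)  # guard against values outside [0, 1]
    return np.round(images * 255.0).astype(np.uint8)


def check_image_summary_shape(images):
    """Raise ValueError if the array does not match tf.summary.image's documented layout."""
    if images.ndim != 4:
        raise ValueError("expected [batch_size, height, width, channels], got %r" % (images.shape,))
    if images.shape[-1] not in (1, 3, 4):
        raise ValueError("channels must be 1, 3 or 4, got %d" % images.shape[-1])


batch = np.random.default_rng(0).random((10, 784))  # stand-in for a batch of MNIST images
images = to_image_summary_batch(batch)
check_image_summary_shape(images)
print(images.shape, images.dtype)  # (10, 28, 28, 1) uint8
```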

III. HISTOGRAM

tf.summary.histogram(name, values, collections=None, family=None)

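Visualizes the distribution of a tensor's values.

Input parameters:

name: the name of this op node; TensorBoard displays the histogram under this name (the summary tag).

values: a real numeric Tensor of any shape; the values used to build the histogram.

Output:

A scalar Tensor of type string, containing a Summary protobuf.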
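A histogram summary stores a compact description of the values rather than the values themselves. The HistogramProto message has the fields min, max, num, sum, sum_squares, bucket_limit and bucket. The NumPy sketch below computes the same fields, but it uses evenly spaced buckets from `np.histogram`. TensorFlow uses its own default bucket boundaries, so real bucket edges will differ. `histogram_fields` is a hypothetical name.

```python
import numpy as np


def histogram_fields(values, bins=30):
    """Hypothetical helper: compute HistogramProto-style fields for `values`.

    Bucket edges come from np.histogram (evenly spaced); this is an
    assumption, since TensorFlow's default bucket boundaries differ.
    """
    v = np.asarray(values, dtype=np.float64).ravel()  # any shape is flattened
    counts, edges = np.histogram(v, bins=bins)
    return {
        "min": float(v.min()),
        "max": float(v.max()),
        "num": int(v.size),
        "sum": float(v.sum()),
        "sum_squares": float(np.square(v).sum()),
        "bucket_limit": edges[1:].tolist(),  # right edge of each bucket
        "bucket": counts.tolist(),           # number of values in each bucket
    }


weights = np.random.default_rng(0).normal(0.0, 0.1, size=(1024, 10))  # shaped like W_fc2
h = histogram_fields(weights)
print(h["num"], len(h["bucket"]))  # 10240 30
```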

IV. tf.summary.FileWriter

tf.summary.FileWriter(logdir, graph=None, flush_secs=120, max_queue=10)

Writes event logs (the graph, scalar/image/histogram summaries, and other events) to files in the given directory.

Constructor parameters:

logdir: the directory the event files are written to.

graph: passing sess.graph here at construction time is equivalent to calling add_graph(); it is used for computation graph visualization.

flush_secs: how often, in seconds, to flush the added summaries and events to disk.

max_queue: the maximum number of summaries or events pending to be written to disk before one of the 'add' calls blocks.

Other commonly used methods:

add_event(event): adds an event to the event file.

add_graph(graph, global_step=None): adds a Graph to the event file; most users pass a graph in the constructor instead.

add_summary(summary, global_step=None): adds a Summary protocol buffer to the event file. Be sure to pass global_step so TensorBoard can plot the values against the training step.

close(): flushes the event file to disk and closes the file.

flush(): flushes the event file to disk.

add_meta_graph(meta_graph_def, global_step=None): adds a MetaGraphDef to the event file.

add_run_metadata(run_metadata, tag, global_step=None): adds the metadata of a single Session.run() call under the given tag.
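The flush_secs and max_queue parameters describe a producer/consumer setup. The add calls put events on a bounded queue, and a background thread writes them out and flushes to disk periodically. The class below is a simplified, hypothetical model of that behaviour using only the standard library. It writes tab-separated text lines rather than Event protocol buffers, and it is not TensorFlow's implementation.

```python
import queue
import threading
import time


class SimpleEventWriter:
    """Hypothetical, simplified model of FileWriter's buffering.

    add_summary() puts events on a bounded queue (max_queue); a background
    thread writes them to a text file and flushes every flush_secs seconds.
    Writes plain text lines, not TensorFlow Event protocol buffers.
    """

    _SENTINEL = object()  # tells the background thread to stop

    def __init__(self, path, max_queue=10, flush_secs=120):
        self._queue = queue.Queue(maxsize=max_queue)  # put() blocks when full
        self._file = open(path, "w", encoding="utf-8")
        self._flush_secs = flush_secs
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def add_summary(self, tag, value, global_step):
        # Blocks while max_queue events are already waiting to be written.
        self._queue.put("%d\t%s\t%r\n" % (global_step, tag, value))

    def _run(self):
        next_flush = time.monotonic() + self._flush_secs
        while True:
            try:
                item = self._queue.get(timeout=max(0.0, next_flush - time.monotonic()))
            except queue.Empty:
                item = None  # nothing arrived before the flush deadline
            if item is self._SENTINEL:
                return
            if item is not None:
                self._file.write(item)
            if time.monotonic() >= next_flush:
                self._file.flush()  # periodic flush, like flush_secs
                next_flush = time.monotonic() + self._flush_secs

    def close(self):
        # Like FileWriter.close(): write out pending events, flush, close the file.
        self._queue.put(self._SENTINEL)
        self._thread.join()
        self._file.flush()
        self._file.close()
```

In this model, events added after the last periodic flush reach the file only when close() runs. The same reasoning is why a real FileWriter should be closed (or flushed) at the end of training.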

Test code

```python
# -*- coding: utf-8 -*-
"""
Created on Mon Mar 26 21:14:59 2018

@author: pc314
"""
# Requires TensorFlow 1.x: tf.placeholder, tf.Session and
# tensorflow.examples.tutorials are not available in TensorFlow 2.x.
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data', one_hot=True)


def weight_variable(shape):
    """Weights initialized from a truncated normal distribution."""
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


def bias_variable(shape):
    """Biases initialized to a small positive constant."""
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)


myGraph = tf.Graph()
with myGraph.as_default():
    # Each name_scope is drawn as one collapsible node in the graph view.
    with tf.name_scope('inputsAndLabels'):
        x_raw = tf.placeholder(tf.float32, shape=[None, 784])
        y = tf.placeholder(tf.float32, shape=[None, 10])

    with tf.name_scope('hidden1'):
        x = tf.reshape(x_raw, shape=[-1, 28, 28, 1])
        W_conv1 = weight_variable([5, 5, 1, 32])
        b_conv1 = bias_variable([32])
        l_conv1 = tf.nn.relu(tf.nn.conv2d(x, W_conv1, strides=[1, 1, 1, 1], padding='SAME') + b_conv1)
        l_pool1 = tf.nn.max_pool(l_conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        tf.summary.image('x_input', x, max_outputs=10)
        tf.summary.histogram('W_con1', W_conv1)
        tf.summary.histogram('b_con1', b_conv1)

    with tf.name_scope('hidden2'):
        W_conv2 = weight_variable([5, 5, 32, 64])
        b_conv2 = bias_variable([64])
        l_conv2 = tf.nn.relu(tf.nn.conv2d(l_pool1, W_conv2, strides=[1, 1, 1, 1], padding='SAME') + b_conv2)
        l_pool2 = tf.nn.max_pool(l_conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        tf.summary.histogram('W_con2', W_conv2)
        tf.summary.histogram('b_con2', b_conv2)

    with tf.name_scope('fc1'):
        W_fc1 = weight_variable([64 * 7 * 7, 1024])
        b_fc1 = bias_variable([1024])
        l_pool2_flat = tf.reshape(l_pool2, [-1, 64 * 7 * 7])
        l_fc1 = tf.nn.relu(tf.matmul(l_pool2_flat, W_fc1) + b_fc1)
        keep_prob = tf.placeholder(tf.float32)
        l_fc1_drop = tf.nn.dropout(l_fc1, keep_prob)
        tf.summary.histogram('W_fc1', W_fc1)
        tf.summary.histogram('b_fc1', b_fc1)

    with tf.name_scope('fc2'):
        W_fc2 = weight_variable([1024, 10])
        b_fc2 = bias_variable([10])
        y_conv = tf.matmul(l_fc1_drop, W_fc2) + b_fc2
        # Log this layer's own parameters (the original logged W_fc1/b_fc1 again).
        tf.summary.histogram('W_fc2', W_fc2)
        tf.summary.histogram('b_fc2', b_fc2)

    with tf.name_scope('train'):
        cross_entropy = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(logits=y_conv, labels=y))
        train_step = tf.train.AdamOptimizer(learning_rate=1e-4).minimize(cross_entropy)
        correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        tf.summary.scalar('loss', cross_entropy)
        tf.summary.scalar('accuracy', accuracy)

# Entering the session also makes myGraph the default graph,
# so the ops created below are added to myGraph.
with tf.Session(graph=myGraph) as sess:
    sess.run(tf.global_variables_initializer())
    saver = tf.train.Saver()
    merged = tf.summary.merge_all()
    summary_writer = tf.summary.FileWriter('logs/', graph=sess.graph)

    for i in range(3001):
        batch = mnist.train.next_batch(50)
        sess.run(train_step, feed_dict={x_raw: batch[0], y: batch[1], keep_prob: 0.5})
        if i % 100 == 0:
            train_accuracy = accuracy.eval(feed_dict={x_raw: batch[0], y: batch[1], keep_prob: 1.0})
            print('step %d training accuracy:%g' % (i, train_accuracy))
            # Summary ops only run when fetched explicitly.
            summary = sess.run(merged, feed_dict={x_raw: batch[0], y: batch[1], keep_prob: 1.0})
            summary_writer.add_summary(summary, i)

    test_accuracy = accuracy.eval(feed_dict={x_raw: mnist.test.images, y: mnist.test.labels, keep_prob: 1.0})
    print('test accuracy:%g' % test_accuracy)
    # Flush pending events to disk before the program exits.
    summary_writer.close()
    # saver.save(sess, save_path='./model/mnistmodel', global_step=1)
```
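The 64*7*7 input size of fc1 in the test code follows from the layer shapes. The stride-1 SAME convolutions keep the 28x28 size. Each 2x2 max-pool with stride 2 and SAME padding produces ceil(n / 2) outputs per spatial dimension, so the maps shrink 28 → 14 → 7. The small helper below redoes that arithmetic. The function names are hypothetical.

```python
import math


def same_output_size(size, stride):
    """Spatial output size of a TensorFlow conv/pool op with padding='SAME'."""
    return math.ceil(size / stride)


def fc1_input_size(image_size=28, pool_strides=(2, 2), channels=64):
    """Walk the two pooling layers of the test network and return
    (final height/width, flattened length fed into fc1)."""
    size = image_size
    for stride in pool_strides:  # convs use stride 1, so only the pools shrink the map
        size = same_output_size(size, stride)
    return size, size * size * channels


print(fc1_input_size())  # (7, 3136)
```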

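You can use TensorBoard to inspect the results either while the program is running or after it finishes. I use Anaconda, so I first activate the TensorFlow environment. Then I change to the directory that contains the logs folder (the directory the script was run from) and run: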

```shell
tensorboard --logdir=logs
```

TensorBoard then prints a URL; mine was http://pc314:6006. Open this URL in a browser to see the visualizations. Part of the result is shown in the figure.

References

https://blog.csdn.net/mzpmzk/article/details/78647699?locationNum=2&fps=1 (an excellent blog post, highly recommended)