文章目录

一、.preprocessing

1.1 数据标准化

1.1.1 转化为标准正态分布

.StandardScaler(copy=True, with_mean=True, with_std=True):该标准器本质上是保留了原数据的均值和标准差,并可以同样标准化作用于测试数据。.scale(X, axis=0, with_mean=True, with_std=True, copy=True)

1.1.2 转化到某个区间

.MinMaxScaler(feature_range=(0, 1), copy=True)把数据转化到某个区间.MaxAbsScaler(copy=True),用法同MinMaxScaler,范围变成[-1,1].minmax_scale(X, feature_range=(0, 1), axis=0, copy=True).maxabs_scale(X, axis=0, copy=True)

1.1.3 Scaler方法

.fit(X_train).transform(X_test).fit_transform(X_train)

1.1.4 归一化

.normalize(X, norm=’l2’, axis=1, copy=True, return_norm=False).Normalizer(norm=’l2’, copy=True)

1.1.5 非线性标准化

1.2 特征编码

1..OneHotEncoder(n_values=None, categorical_features=None, categories=None, drop=None, sparse=True, dtype=<class ‘numpy.float64’>, handle_unknown=’error’)

sparse:默认为True表示用稀疏矩阵表示,一般使用.toarray()转换到False,即数组。

.OrdinalEncoder(categories=’auto’, dtype=<class ‘numpy.float64’>).LabelEncoder()

二、.model_selection

2.1 数据集分割

X_train, X_test, y_train, y_test = .train_test_split(data,target, test_size=0.4, random_state=0,stratify=None)

test_size:测试集的比例

n_splits:k值,进行k次的分割

stratify:指定分层抽样变量,按该变量的类型分布分层抽样。

.ShuffleSplit(n_splits=10, test_size=None, train_size=None, random_state=None):打乱后分割.StratifiedShuffleSplit(n_splits=10, test_size=None, train_size=None, random_state=None)

2.2 用于交叉验证的数据集分割

model_selction.KFold(n_splits=’warn’, shuffle=False, random_state=None)

示例:kf = KFold(n_splits=2)

kf.split(X_train, y_train)

n_splits:k折的k。

shuffle:是否打乱,默认否。

.RepeatedKFold(n_splits=5, n_repeats=10, random_state=None):重复.KFoldn_repeats次。.LeaveOneOut()LeavePOut().StratifiedKFold(n_splits=3, shuffle=False, random_state=None)- GroupKFold

- LeaveOneGroupOut

- LeavePGroupsOut

- GroupShuffleSplit

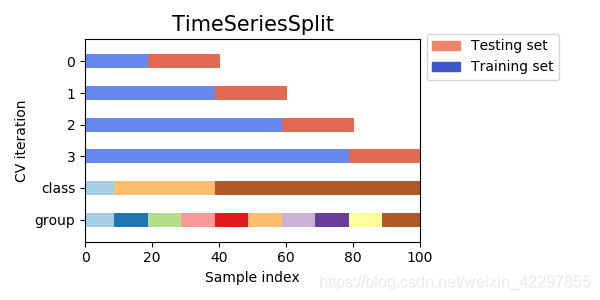

.TimeSeriesSplit(n_splits=’warn’, max_train_size=None)

超参数搜索

1.model_selection.GridSearchCV(estimator, param_grid, scoring=None, n_jobs=None, iid=’warn’, refit=True, cv=’warn’, verbose=0, pre_dispatch=‘2*n_jobs’, error_score=’raise-deprecating’, return_train_score=False) 网格搜索

参数:

param_grid:指定参数空间,以字典形式给出。若有多个参数空间则用list框起来。

scoring:评价准则,若不指定则默认学习器自带的评价准则

n_jobs:指定要并行计算的线程数,默认为None即1,如果设定为-1则表示使用全部cpu。

iid

refit

verbose

pre_dispatch

error_score

return_train_score

属性:

cv_results_:返回网格搜索的结果

best_estimator_:返回最优的学习器

best_params_:返回最优的参数

best_score_:返回最优的评价值

2.model_selection.RandomizedSearchCV(estimator, param_distributions, n_iter=10, scoring=None, n_jobs=None, iid=’warn’, refit=True, cv=’warn’, verbose=0, pre_dispatch=‘2*n_jobs’, random_state=None, error_score=’raise-deprecating’, return_train_score=False)随机搜索

参数:

estimator:略

param_distributions:参数的分布,写法和上面的param_grid相似,字典值里是一个随机分布,如果给的是一个list则默认均匀分布。

n_iter

scoring:略

n_jobs:略

iid

refit

cv:略

verbose

pre_dispatch

random_state:略

error_score

return_train_score

属性同GridSearchCV

交叉验证简单评估

.cross_val_score(estimator, X, y=None, groups=None, scoring=None, cv=’warn’, n_jobs=None, verbose=0, fit_params=None, pre_dispatch=‘2*n_jobs’, error_score=’raise-deprecating’)

示例:scores = cross_val_score(clf, data, target, cv=5)

cv:当cv为整数时默认使用kfold或分层折叠策略,如果估计量来自ClassifierMixin,则使用后者。另外还可以指定其它的交叉验证迭代器或者是自定义迭代器。

scoring:指定评分方式,详见这里

- cross_validate

- cross_val_predict

三、.cross_validation

四、.metrics

建议与评价指标详解配合使用。

4.1 自定义评价指标

.make_scorer

4.2 分类器评价指标

.accuracy_score(y_true, y_pred, normalize=True, sample_weight=None)

normalize:默认返回正确率,若为False则返回预测正确的样本数。

.balanced_accuracy_score(y_true, y_pred, sample_weight=None, adjusted=False)

adjusted:

.average_precision_score(y_true, y_score, average=’macro’, pos_label=1, sample_weight=None).recall_score(y_true, y_pred, labels=None, pos_label=1, average=’binary’, sample_weight=None).precision_score(y_true, y_pred, labels=None, pos_label=1, average=’binary’, sample_weight=None).f1_score(y_true, y_pred, labels=None, pos_label=1, average=’binary’, sample_weight=None)

average:可指定micro和macro

.log_loss(y_true, y_pred, eps=1e-15, normalize=True, sample_weight=None, labels=None)

average_precision_score

brier_score_loss

jaccard_score

.roc_auc_score(y_true, y_score, average=’macro’, sample_weight=None, max_fpr=None)

4.3聚类器评价指标

.adjusted_rand_score(labels_true, labels_pred):ARI指数.mutual_info_score(labels_true, labels_pred, contingency=None):互信息.adjusted_mutual_info_score(labels_true, labels_pred, average_method=’warn’).normalized_mutual_info_score(labels_true, labels_pred, average_method=’warn’).completeness_score(labels_true, labels_pred)完备性.homogeneity_score(labels_true, labels_pred):同质性.homogeneity_completeness_v_measure(labels_true, labels_pred, beta=1.0).v_measure_score(labels_true, labels_pred, beta=1.0).fowlkes_mallows_score(labels_true, labels_pred, sparse=False).silhouette_score(X, labels, metric=’euclidean’, sample_size=None, random_state=None, **kwds)轮廓系数.calinski_harabasz_score(X, labels).davies_bouldin_score(X, labels).contingency_matrix(labels_true, labels_pred, eps=None, sparse=False)

4.4 回归器评价指标

.mean_squared_error(y_true, y_pred, sample_weight=None, multioutput=’uniform_average’).max_error(y_true, y_pred).mean_absolute_error(y_true, y_pred, sample_weight=None, multioutput=’uniform_average’)

explained_variance_score

mean_squared_log_error

median_absolute_error

r2_score

五、.pipeline

1.make_pipeline

六、.datasets

1.load_iris

七、.feature_extraction特征提取

7.1

.text.CountVectorizer(input=’content’, encoding=’utf-8’, decode_error=’strict’, strip_accents=None, lowercase=True, preprocessor=None, tokenizer=None, stop_words=None, token_pattern=’(?u)\b\w\w+\b’, ngram_range=(1, 1), analyzer=’word’, max_df=1.0, min_df=1, max_features=None, vocabulary=None, binary=False, dtype=<class ‘numpy.int64’>)对单列文本做标记和计数。

八、.feature_selection

.VarianceThreshold(threshold=0.0):过滤法-方差阈值.SelectKBest(score_func=<function f_classif>, k=10)

score_func:指定过滤法中的评价准则,默认为f值。

.SelectPercentile(score_func=<function f_classif>, percentile=10).SelectFpr(score_func=<function f_classif>, alpha=0.05).SelectFdr(score_func=<function f_classif>, alpha=0.05).SelectFwe(score_func=<function f_classif>, alpha=0.05).GenericUnivariateSelect(score_func=<function f_classif>, mode=’percentile’, param=1e-05):通用的特征筛选器。.f_regression(X, y, center=True).mutual_info_regression(X, y, discrete_features=’auto’, n_neighbors=3, copy=True, random_state=None):互信息。.chi2(X, y).f_classif(X, y).mutual_info_classif(X, y, discrete_features=’auto’, n_neighbors=3, copy=True, random_state=None).RFE(estimator, n_features_to_select=None, step=1, verbose=0):RFE嵌入法。.SelectFromModel(estimator, threshold=None, prefit=False, norm_order=1, max_features=None):自选模型嵌入法。