Environment

A cloud account. I used Oracle Cloud Infrastructure, OCI for short.

Goal

Oracle Linux 7 running a multitenant container database (CDB) on RAC, version 18.3 (12.2.0.1 also works), with SID ORCLCDB and one pluggable database, ORCLPDB.

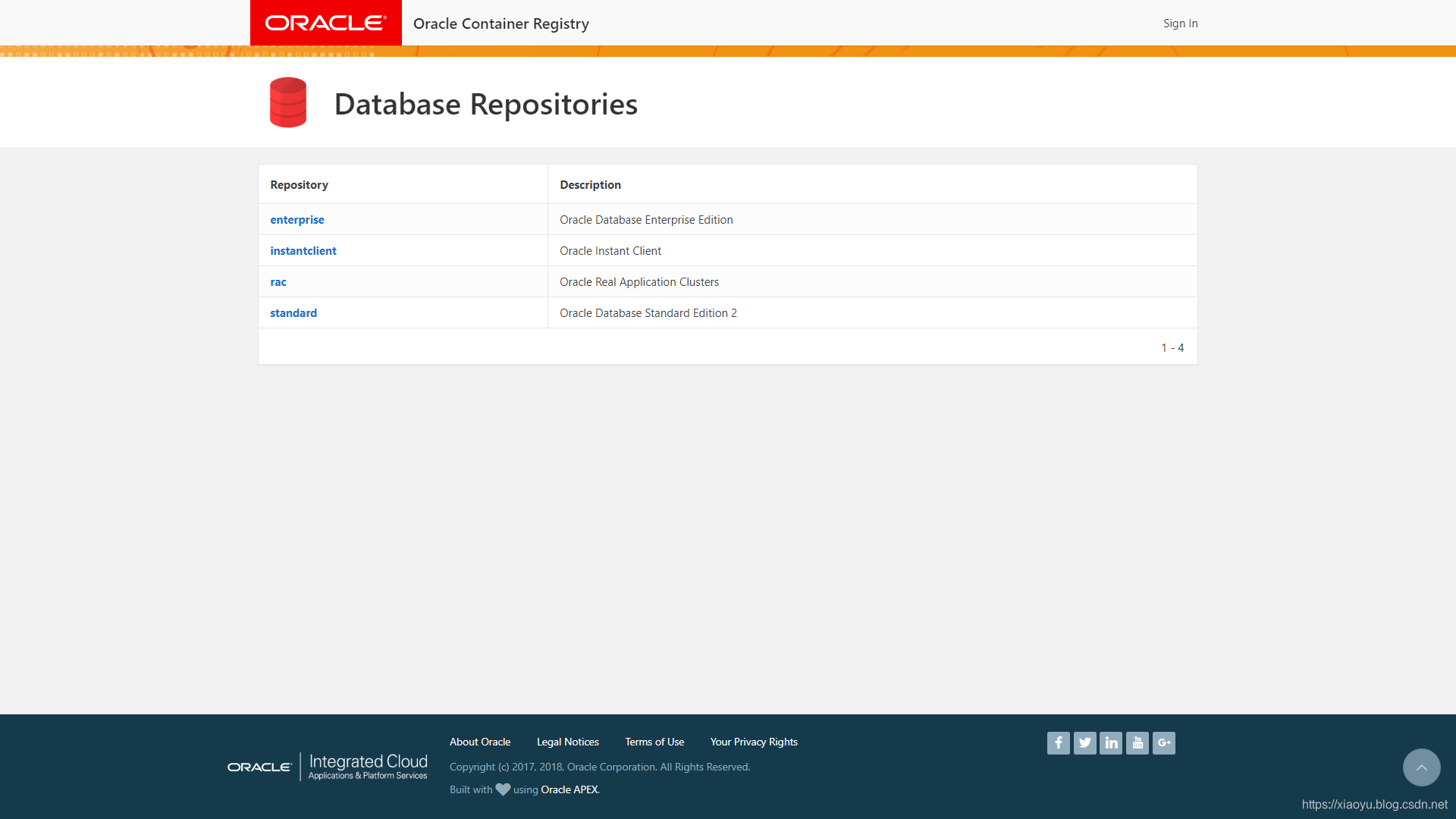

Oracle Container Registry provides more Oracle Docker images than Docker Hub, including single-instance and RAC databases in Enterprise and Standard Editions, as well as Instant Client.

Create the Linux host

In OCI, create a compute instance with the VM.Standard2.1 shape and a 100 GB boot volume.

Install Docker

Install Docker (this took 1m9.076s):

sudo yum install -y yum-utils

sudo yum-config-manager --enable ol7_addons

sudo yum install -y docker-engine

sudo systemctl start docker

sudo systemctl enable docker

sudo usermod -aG docker vagrant   (on a cloud instance, replace vagrant with opc; log out and back in for the group change to take effect)

$ sudo docker version

Client: Docker Engine - Community

Version: 18.09.8-ol

API version: 1.39

Go version: go1.10.8

Git commit: 76804b7

Built: Fri Sep 27 21:00:18 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 18.09.8-ol

API version: 1.39 (minimum version 1.12)

Go version: go1.10.8

Git commit: 76804b7

Built: Fri Sep 27 20:54:00 2019

OS/Arch: linux/amd64

Experimental: false

Default Registry: docker.io

Pull Docker Image

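Browse to the Oracle Container Registry web page and open the rac repository.

For RAC there are two images: 12.2.0.1 (7 GB) and 18.3.0 (10 GB). This article uses the latter.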

$ docker login container-registry.oracle.com

$ docker pull container-registry.oracle.com/database/rac:18.3.0

Pulling the image took 16m59.180s (17m19.484s on another run), including download and extraction.

List the images:

$ docker images

REPOSITORY                                   TAG      IMAGE ID       CREATED        SIZE
container-registry.oracle.com/database/rac   18.3.0   c8fa68d372f8   7 months ago   21.4GB

Tune kernel parameters

Containers inherit kernel settings from the host, so add the following parameters to /etc/sysctl.conf:

fs.file-max = 6815744

net.core.rmem_max = 4194304

net.core.rmem_default = 262144

net.core.wmem_max = 1048576

net.core.wmem_default = 262144


Then load them (sysctl -p reads /etc/sysctl.conf) and list the current values to verify (sysctl -a):

# sysctl -p

# sysctl -a
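If you rerun this setup, editing /etc/sysctl.conf by hand makes it easy to end up with duplicate entries. Below is a minimal sketch of an idempotent alternative. The helper name set_sysctl is hypothetical. It assumes GNU sed (for -i), and it needs root privileges when the target is /etc/sysctl.conf.

```shell
# Set KEY = VALUE in a sysctl-style FILE (default /etc/sysctl.conf; writing it needs root).
# An existing line for KEY is rewritten in place; otherwise a new line is appended.
set_sysctl() {
  key=$1; value=$2; file=${3:-/etc/sysctl.conf}
  # Escape the dots in the key so they match literally in the regular expressions
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -q "^[[:space:]]*$key_re[[:space:]]*=" "$file"; then
    sed -i "s|^[[:space:]]*$key_re[[:space:]]*=.*|$key = $value|" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}
```

For example, as root, run set_sysctl net.core.rmem_max 4194304 and then sysctl -p as above. If a key already appears twice, both lines are rewritten to the same value; they are not merged into one.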

Configure real-time mode

Some RAC processes must run with real-time scheduling, so add the following line to /etc/sysconfig/docker:

OPTIONS='--selinux-enabled --cpu-rt-runtime=950000'

Restart Docker to apply it:

# systemctl daemon-reload

# systemctl stop docker

# systemctl start docker

Set SELinux to permissive mode in /etc/selinux/config (details omitted).

Then reboot the instance so the SELinux setting takes effect.
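The SELinux step is omitted above. Below is a minimal sketch of one way to do it, assuming the standard SELINUX= line in /etc/selinux/config. The helper name set_selinux_mode is hypothetical, and editing the real file requires root.

```shell
# Set SELINUX=<MODE> in an SELinux config FILE (default /etc/selinux/config; needs root).
# Only the SELINUX= line changes; SELINUXTYPE= does not match because of the "=" anchor.
# The file is read at boot, so the new mode applies after the next reboot.
set_selinux_mode() {
  mode=$1; file=${2:-/etc/selinux/config}
  sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$file"
}
```

For example, as root, run set_selinux_mode permissive and then reboot the instance as described. After the reboot, getenforce reports the active mode.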

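Prepare the shared volume

You can use either an NFS volume or block storage. This example uses block storage, on which ASM will be created.

Create a 50 GB block volume and attach it to the compute instance. It shows up as sdb: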

$ lsblk

NAME     MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sdb        8:16   0   50G  0 disk
sda        8:0    0   80G  0 disk
├─sda2     8:2    0    8G  0 part [SWAP]
├─sda3     8:3    0 38.4G  0 part /
└─sda1     8:1    0  200M  0 part /boot/efi

Wipe the block volume to make sure it carries no file system and no previously created ASM device:

dd if=/dev/zero of=/dev/sdb bs=8k count=100000
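To confirm the wipe, you can compare the start of the device with /dev/zero. Below is a minimal sketch, assuming GNU cmp; the helper name is_zeroed is hypothetical. The byte count matches the dd command above: bs=8k is 8192 bytes, times 100000 blocks.

```shell
# Return success if the first BYTES bytes of DEVICE are all zero.
# -s keeps cmp silent; -n limits the comparison to BYTES bytes.
is_zeroed() {
  cmp -s -n "$2" "$1" /dev/zero
}
```

For example, as root: is_zeroed /dev/sdb $((8192 * 100000)) && echo wiped. This checks only the region that dd overwrote, not the whole 50 GB volume.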

Therefore, on each

Create the virtual networks

Create two networks, one public and one private:

docker network create --driver=bridge --subnet=172.16.1.0/24 rac_pub1_nw

docker network create --driver=bridge --subnet=192.168.17.0/24 rac_priv1_nw

List them:

$ docker network ls

NETWORK ID     NAME           DRIVER   SCOPE
18c4cc718e9b   bridge         bridge   local
29b88df2d8ca   host           host     local
367874e35d76   none           null     local
84cd1b5b93fc   rac_priv1_nw   bridge   local
52e338e30263   rac_pub1_nw    bridge   local

These networks are shared by the two RAC containers on the host.
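If the setup is rerun, docker network create fails for a name that already exists. Below is a minimal sketch that creates each network only when it is missing. The helper name ensure_network is hypothetical; the driver and subnets are the ones used above.

```shell
# Create a bridge network NAME with SUBNET unless a network with that name already exists.
ensure_network() {
  name=$1; subnet=$2
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create --driver=bridge --subnet="$subnet" "$name"
  fi
}
```

For example: ensure_network rac_pub1_nw 172.16.1.0/24 and ensure_network rac_priv1_nw 192.168.17.0/24.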

Create the shared hosts file

# mkdir /opt/containers

# touch /opt/containers/rac_host_file

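Password management

This part follows the README of the Docker project on GitHub,

because setting the password on the command line, as the online help describes, did not take effect.

The password set below is shared by the oracle and grid OS users and by the database.

mkdir /opt/.secrets/

openssl rand -out /opt/.secrets/pwd.key -hex 64

-- write the plaintext password to a temporary file

echo 'Oracle.123#' > /opt/.secrets/common_os_pwdfile

-- encrypt it for storage

openssl enc -aes-256-cbc -salt -in /opt/.secrets/common_os_pwdfile -out /opt/.secrets/common_os_pwdfile.enc -pass file:/opt/.secrets/pwd.key

-- delete the temporary file

rm -f /opt/.secrets/common_os_pwdfile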
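Before you create the container, you can check that the key and the encrypted file belong together by decrypting with the same cipher. Below is a minimal sketch; the helper name check_pwdfile is hypothetical.

Note that it runs the host's openssl, while the container decrypts with its own copy. OpenSSL 1.1.0 and later derive the key with a different default digest than 1.0.2 (SHA-256 instead of MD5). A file that round-trips on the host is therefore not guaranteed to decrypt inside the container if the versions differ.

```shell
# Decrypt DIR/common_os_pwdfile.enc with DIR/pwd.key and print the plaintext
# (DIR defaults to /opt/.secrets), to confirm the key and the encrypted file match.
check_pwdfile() {
  dir=${1:-/opt/.secrets}
  openssl enc -d -aes-256-cbc -in "$dir/common_os_pwdfile.enc" -pass file:"$dir/pwd.key"
}
```

For example, as root, check_pwdfile should print Oracle.123#. This prints the password in plain text on the terminal.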

Create the container for RAC node 1

docker create -t -i \
--hostname racnode1 \
--volume /boot:/boot:ro \
--volume /dev/shm \
--tmpfs /dev/shm:rw,exec,size=4G \
--volume /opt/containers/rac_host_file:/etc/hosts \
--volume /opt/.secrets:/run/secrets \
--dns-search=example.com \
--device=/dev/sdb:/dev/asm_disk1 \
--privileged=false \
--cap-add=SYS_NICE \
--cap-add=SYS_RESOURCE \
--cap-add=NET_ADMIN \
-e NODE_VIP=172.16.1.160 \
-e VIP_HOSTNAME=racnode1-vip \
-e PRIV_IP=192.168.17.150 \
-e PRIV_HOSTNAME=racnode1-priv \
-e PUBLIC_IP=172.16.1.150 \
-e PUBLIC_HOSTNAME=racnode1 \
-e SCAN_NAME=racnode-scan \
-e SCAN_IP=172.16.1.70 \
-e OP_TYPE=INSTALL \
-e DOMAIN=example.com \
-e ASM_DEVICE_LIST=/dev/asm_disk1 \
-e ASM_DISCOVERY_DIR=/dev \
-e COMMON_OS_PWD_FILE=common_os_pwdfile.enc \
--restart=always --tmpfs=/run -v /sys/fs/cgroup:/sys/fs/cgroup:ro \
--cpu-rt-runtime=95000 --ulimit rtprio=99 \
--name racnode1 \
container-registry.oracle.com/database/rac:18.3.0

Attach the first RAC container to the two networks, after detaching it from the default bridge network:

docker network disconnect bridge racnode1

docker network connect rac_pub1_nw --ip 172.16.1.150 racnode1

docker network connect rac_priv1_nw --ip 192.168.17.150 racnode1

Start the first RAC container. The Grid Infrastructure and database installation then begins automatically:

docker start racnode1
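Once the container is running, you can confirm the network assignment by reading its addresses back with docker inspect and a Go template. Below is a minimal sketch; the helper name container_ip is hypothetical.

```shell
# Print CONTAINER's IP address on the named Docker NETWORK (empty if it is not attached).
# "with" skips the body when the network key is absent, avoiding a nil-pointer template error.
container_ip() {
  docker inspect -f "{{with index .NetworkSettings.Networks \"$2\"}}{{.IPAddress}}{{end}}" "$1"
}
```

The expected results are:

- container_ip racnode1 rac_pub1_nw prints 172.16.1.150.
- container_ip racnode1 rac_priv1_nw prints 192.168.17.150.
- container_ip racnode1 bridge prints nothing, because of the disconnect above.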

Follow the container log:

docker logs -f racnode1

Wait until the following output appears, which means the first RAC node is ready:

####################################

ORACLE RAC DATABASE IS READY TO USE!

####################################
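Instead of watching docker logs -f for well over an hour, you can poll for the readiness banner. Below is a minimal sketch. The helper name wait_for_rac is hypothetical, the banner text is taken from the log above, and the default 60-second interval is arbitrary.

```shell
# Poll NODE's container log every INTERVAL seconds (default 60) until the readiness banner appears.
wait_for_rac() {
  node=$1; interval=${2:-60}
  until docker logs "$node" 2>&1 | grep -q 'ORACLE RAC DATABASE IS READY TO USE!'; do
    sleep "$interval"
  done
  echo "$node is ready"
}
```

For example, run wait_for_rac racnode1. There is no timeout, so the function waits indefinitely if the installation fails. In that case, inspect docker logs racnode1 directly.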

According to the log, the whole process took 1 hour 24 minutes (two other runs took 1 hour 23 minutes and 1 hour 9 minutes).
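Durations like these can be read off the log timestamps. Below is a small sketch that computes the gap between two HH:MM:SS stamps. The helper names to_seconds and elapsed are hypothetical, and it assumes both stamps fall on the same day.

```shell
# Convert "HH:MM:SS" to seconds since midnight.
# One leading zero is stripped from each field so values like "08" are not parsed as octal.
to_seconds() {
  h=${1%%:*}; rest=${1#*:}
  m=${rest%%:*}; s=${rest#*:}
  echo $(( ${h#0} * 3600 + ${m#0} * 60 + ${s#0} ))
}

# Print the time elapsed between two timestamps, assuming both fall on the same day.
elapsed() {
  d=$(( $(to_seconds "$2") - $(to_seconds "$1") ))
  printf '%dh%02dm%02ds\n' $((d / 3600)) $((d % 3600 / 60)) $((d % 60))
}
```

For example, elapsed 00:53:47 02:17:14 prints 1h23m27s. That is the span from the first timestamped line of the log below to the readiness banner.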

Below is the complete log of a successful run, even though it contains many errors and warnings:

[opc@instance-20191111-0822 ~]$ docker logs -f racnode1

PATH=/bin:/usr/bin:/sbin:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=racnode1

TERM=xterm

NODE_VIP=172.16.1.160

VIP_HOSTNAME=racnode1-vip

PRIV_IP=192.168.17.150

PRIV_HOSTNAME=racnode1-priv

PUBLIC_IP=172.16.1.150

PUBLIC_HOSTNAME=racnode1

SCAN_NAME=racnode-scan

SCAN_IP=172.16.1.70

OP_TYPE=INSTALL

DOMAIN=example.com

ASM_DEVICE_LIST=/dev/asm_disk1

ASM_DISCOVERY_DIR=/dev

COMMON_OS_PWD_FILE=common_os_pwdfile.enc

SETUP_LINUX_FILE=setupLinuxEnv.sh

INSTALL_DIR=/opt/scripts

GRID_BASE=/u01/app/grid

GRID_HOME=/u01/app/18.3.0/grid

INSTALL_FILE_1=LINUX.X64_180000_grid_home.zip

GRID_INSTALL_RSP=grid.rsp

GRID_SW_INSTALL_RSP=grid_sw_inst.rsp

GRID_SETUP_FILE=setupGrid.sh

FIXUP_PREQ_FILE=fixupPreq.sh

INSTALL_GRID_BINARIES_FILE=installGridBinaries.sh

INSTALL_GRID_PATCH=applyGridPatch.sh

INVENTORY=/u01/app/oraInventory

CONFIGGRID=configGrid.sh

ADDNODE=AddNode.sh

DELNODE=DelNode.sh

ADDNODE_RSP=grid_addnode.rsp

SETUPSSH=setupSSH.expect

GRID_PATCH=p28322130_183000OCWRU_Linux-x86-64.zip

PATCH_NUMBER=28322130

DOCKERORACLEINIT=dockeroracleinit

GRID_USER_HOME=/home/grid

SETUPGRIDENV=setupGridEnv.sh

RESET_OS_PASSWORD=resetOSPassword.sh

MULTI_NODE_INSTALL=MultiNodeInstall.py

DB_BASE=/u01/app/oracle

DB_HOME=/u01/app/oracle/product/18.3.0/dbhome_1

INSTALL_FILE_2=LINUX.X64_180000_db_home.zip

DB_INSTALL_RSP=db_inst.rsp

DBCA_RSP=dbca.rsp

DB_SETUP_FILE=setupDB.sh

PWD_FILE=setPassword.sh

RUN_FILE=runOracle.sh

STOP_FILE=stopOracle.sh

ENABLE_RAC_FILE=enableRAC.sh

CHECK_DB_FILE=checkDBStatus.sh

USER_SCRIPTS_FILE=runUserScripts.sh

REMOTE_LISTENER_FILE=remoteListener.sh

INSTALL_DB_BINARIES_FILE=installDBBinaries.sh

FUNCTIONS=functions.sh

COMMON_SCRIPTS=/common_scripts

CHECK_SPACE_FILE=checkSpace.sh

EXPECT=/usr/bin/expect

BIN=/usr/sbin

container=true

INSTALL_SCRIPTS=/opt/scripts/install

SCRIPT_DIR=/opt/scripts/startup

GRID_PATH=/u01/app/18.3.0/grid/bin:/u01/app/18.3.0/grid/OPatch/:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

DB_PATH=/u01/app/oracle/product/18.3.0/dbhome_1/bin:/u01/app/oracle/product/18.3.0/dbhome_1/OPatch/:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

GRID_LD_LIBRARY_PATH=/u01/app/18.3.0/grid/lib:/usr/lib:/lib

DB_LD_LIBRARY_PATH=/u01/app/oracle/product/18.3.0/dbhome_1/lib:/usr/lib:/lib

HOME=/home/grid

Failed to parse kernel command line, ignoring: No such file or directory

systemd 219 running in system mode. (+PAM +AUDIT +SELINUX +IMA -APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 -SECCOMP +BLKID +ELFUTILS +KMOD +IDN)

Detected virtualization other.

Detected architecture x86-64.

Welcome to Oracle Linux Server 7.6!

Set hostname to <racnode1>.

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directoryFailed to parse kernel command line, ignoring: No such file or directory

/usr/lib/systemd/system-generators/systemd-fstab-generator failed with error code 1.

Binding to IPv6 address not available since kernel does not support IPv6.

Binding to IPv6 address not available since kernel does not support IPv6.

Cannot add dependency job for unit display-manager.service, ignoring: Unit not found.

[ OK ] Reached target Swap.

[ OK ] Started Dispatch Password Requests to Console Directory Watch.

[ OK ] Started Forward Password Requests to Wall Directory Watch.

[ OK ] Created slice Root Slice.

[ OK ] Created slice System Slice.

[ OK ] Created slice User and Session Slice.

[ OK ] Reached target Slices.

[ OK ] Listening on Journal Socket.

Couldn't determine result for ConditionKernelCommandLine=|rd.modules-load for systemd-modules-load.service, assuming failed: No such file or directory

Couldn't determine result for ConditionKernelCommandLine=|modules-load for systemd-modules-load.service, assuming failed: No such file or directory

Starting Read and set NIS domainname from /etc/sysconfig/network...

Starting Journal Service...

[ OK ] Listening on Delayed Shutdown Socket.

[ OK ] Reached target RPC Port Mapper.

Starting Configure read-only root support...

Starting Rebuild Hardware Database...

[ OK ] Reached target Local File Systems (Pre).

[ OK ] Reached target Local Encrypted Volumes.

[ OK ] Created slice system-getty.slice.

[ OK ] Listening on /dev/initctl Compatibility Named Pipe.

[ OK ] Started Journal Service.

[ OK ] Started Read and set NIS domainname from /etc/sysconfig/network.

Starting Flush Journal to Persistent Storage...

[ OK ] Started Configure read-only root support.

[ OK ] Reached target Local File Systems.

Starting Preprocess NFS configuration...

Starting Rebuild Journal Catalog...

Starting Mark the need to relabel after reboot...

Starting Load/Save Random Seed...

[ OK ] Started Mark the need to relabel after reboot.

[ OK ] Started Load/Save Random Seed.

[ OK ] Started Flush Journal to Persistent Storage.

[ OK ] Started Rebuild Journal Catalog.

[ OK ] Started Preprocess NFS configuration.

Starting Create Volatile Files and Directories...

[ OK ] Started Create Volatile Files and Directories.

Starting Update UTMP about System Boot/Shutdown...

Mounting RPC Pipe File System...

[FAILED] Failed to mount RPC Pipe File System.

See 'systemctl status var-lib-nfs-rpc_pipefs.mount' for details.

[DEPEND] Dependency failed for rpc_pipefs.target.

[DEPEND] Dependency failed for RPC security service for NFS client and server.

[ OK ] Started Update UTMP about System Boot/Shutdown.

[ OK ] Started Rebuild Hardware Database.

Starting Update is Completed...

[ OK ] Started Update is Completed.

[ OK ] Reached target System Initialization.

[ OK ] Listening on RPCbind Server Activation Socket.

Starting RPC bind service...

[ OK ] Listening on D-Bus System Message Bus Socket.

[ OK ] Reached target Sockets.

[ OK ] Started Daily Cleanup of Temporary Directories.

[ OK ] Reached target Timers.

[ OK ] Started Flexible branding.

[ OK ] Reached target Paths.

[ OK ] Reached target Basic System.

[ OK ] Started Self Monitoring and Reporting Technology (SMART) Daemon.

Starting OpenSSH Server Key Generation...

[ OK ] Started D-Bus System Message Bus.

Starting Login Service...

Starting LSB: Bring up/down networking...

Starting Resets System Activity Logs...

Starting GSSAPI Proxy Daemon...

[ OK ] Started RPC bind service.

Starting Cleanup of Temporary Directories...

[ OK ] Started Resets System Activity Logs.

[ OK ] Started Login Service.

[ OK ] Started Cleanup of Temporary Directories.

[ OK ] Started GSSAPI Proxy Daemon.

[ OK ] Reached target NFS client services.

[ OK ] Reached target Remote File Systems (Pre).

[ OK ] Reached target Remote File Systems.

Starting Permit User Sessions...

[ OK ] Started Permit User Sessions.

[ OK ] Started Command Scheduler.

[ OK ] Started OpenSSH Server Key Generation.

[ OK ] Started LSB: Bring up/down networking.

[ OK ] Reached target Network.

Starting /etc/rc.d/rc.local Compatibility...

Starting OpenSSH server daemon...

[ OK ] Reached target Network is Online.

Starting Notify NFS peers of a restart...

[ OK ] Started /etc/rc.d/rc.local Compatibility.

[ OK ] Started Notify NFS peers of a restart.

[ OK ] Started Console Getty.

[ OK ] Reached target Login Prompts.

[ OK ] Started OpenSSH server daemon.

[ OK ] Reached target Multi-User System.

[ OK ] Reached target Graphical Interface.

Starting Update UTMP about System Runlevel Changes...

[ OK ] Started Update UTMP about System Runlevel Changes.

11-13-2019 00:53:47 UTC : : Process id of the program :

11-13-2019 00:53:47 UTC : : #################################################

11-13-2019 00:53:47 UTC : : Starting Grid Installation

11-13-2019 00:53:47 UTC : : #################################################

11-13-2019 00:53:47 UTC : : Pre-Grid Setup steps are in process

11-13-2019 00:53:47 UTC : : Process id of the program :

11-13-2019 00:53:47 UTC : : Disable failed service var-lib-nfs-rpc_pipefs.mount

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

11-13-2019 00:53:48 UTC : : Resetting Failed Services

11-13-2019 00:53:48 UTC : : Sleeping for 60 seconds

Oracle Linux Server 7.6

Kernel 4.14.35-1902.6.6.el7uek.x86_64 on an x86_64

racnode1 login: 11-13-2019 00:54:48 UTC : : Systemctl state is running!

11-13-2019 00:54:48 UTC : : Setting correct permissions for /bin/ping

11-13-2019 00:54:48 UTC : : Public IP is set to 172.16.1.150

11-13-2019 00:54:48 UTC : : RAC Node PUBLIC Hostname is set to racnode1

11-13-2019 00:54:48 UTC : : racnode1 already exists : 172.16.1.150 racnode1.example.com racnode1

192.168.17.150 racnode1-priv.example.com racnode1-priv

172.16.1.160 racnode1-vip.example.com racnode1-vip, no update required

11-13-2019 00:54:48 UTC : : racnode1-priv already exists : 192.168.17.150 racnode1-priv.example.com racnode1-priv, no update required

11-13-2019 00:54:48 UTC : : racnode1-vip already exists : 172.16.1.160 racnode1-vip.example.com racnode1-vip, no update required

11-13-2019 00:54:48 UTC : : racnode-scan already exists : 172.16.1.70 racnode-scan.example.com racnode-scan, no update required

11-13-2019 00:54:48 UTC : : Preapring Device list

11-13-2019 00:54:48 UTC : : Changing Disk permission and ownership /dev/asm_disk1

11-13-2019 00:54:48 UTC : : #####################################################################

11-13-2019 00:54:48 UTC : : RAC setup will begin in 2 minutes

11-13-2019 00:54:48 UTC : : ####################################################################

11-13-2019 00:54:50 UTC : : ###################################################

11-13-2019 00:54:50 UTC : : Pre-Grid Setup steps completed

11-13-2019 00:54:50 UTC : : ###################################################

11-13-2019 00:54:50 UTC : : Checking if grid is already configured

11-13-2019 00:54:50 UTC : : Process id of the program :

11-13-2019 00:54:50 UTC : : Public IP is set to 172.16.1.150

11-13-2019 00:54:50 UTC : : RAC Node PUBLIC Hostname is set to racnode1

11-13-2019 00:54:50 UTC : : Domain is defined to example.com

11-13-2019 00:54:50 UTC : : Default setting of AUTO GNS VIP set to false. If you want to use AUTO GNS VIP, please pass DHCP_CONF as an env parameter set to true

11-13-2019 00:54:50 UTC : : RAC VIP set to 172.16.1.160

11-13-2019 00:54:50 UTC : : RAC Node VIP hostname is set to racnode1-vip

11-13-2019 00:54:50 UTC : : SCAN_NAME name is racnode-scan

11-13-2019 00:54:50 UTC : : SCAN PORT is set to empty string. Setting it to 1521 port.

11-13-2019 00:54:50 UTC : : 172.16.1.70

11-13-2019 00:54:50 UTC : : SCAN Name resolving to IP. Check Passed!

11-13-2019 00:54:50 UTC : : SCAN_IP name is 172.16.1.70

11-13-2019 00:54:50 UTC : : RAC Node PRIV IP is set to 192.168.17.150

11-13-2019 00:54:50 UTC : : RAC Node private hostname is set to racnode1-priv

11-13-2019 00:54:50 UTC : : CMAN_NAME set to the empty string

11-13-2019 00:54:50 UTC : : CMAN_IP set to the empty string

11-13-2019 00:54:50 UTC : : Cluster Name is not defined

11-13-2019 00:54:50 UTC : : Cluster name is set to 'racnode-c'

11-13-2019 00:54:50 UTC : : Password file generated

11-13-2019 00:54:50 UTC : : Common OS Password string is set for Grid user

11-13-2019 00:54:50 UTC : : Common OS Password string is set for Oracle user

11-13-2019 00:54:50 UTC : : Common OS Password string is set for Oracle Database

11-13-2019 00:54:50 UTC : : Setting CONFIGURE_GNS to false

11-13-2019 00:54:50 UTC : : GRID_RESPONSE_FILE env variable set to empty. configGrid.sh will use standard cluster responsefile

11-13-2019 00:54:50 UTC : : Location for User script SCRIPT_ROOT set to /common_scripts

11-13-2019 00:54:50 UTC : : IGNORE_CVU_CHECKS is set to true

11-13-2019 00:54:50 UTC : : Oracle SID is set to ORCLCDB

11-13-2019 00:54:50 UTC : : Oracle PDB name is set to ORCLPDB

11-13-2019 00:54:50 UTC : : Check passed for network card eth1 for public IP 172.16.1.150

11-13-2019 00:54:50 UTC : : Public Netmask : 255.255.255.0

11-13-2019 00:54:50 UTC : : Check passed for network card eth0 for private IP 192.168.17.150

11-13-2019 00:54:50 UTC : : Building NETWORK_STRING to set networkInterfaceList in Grid Response File

11-13-2019 00:54:50 UTC : : Network InterfaceList set to eth1:172.16.1.0:1,eth0:192.168.17.0:5

11-13-2019 00:54:50 UTC : : Setting random password for grid user

11-13-2019 00:54:50 UTC : : Setting random password for oracle user

11-13-2019 00:54:50 UTC : : Calling setupSSH function

11-13-2019 00:54:50 UTC : : SSh will be setup among racnode1 nodes

11-13-2019 00:54:50 UTC : : Running SSH setup for grid user between nodes racnode1

11-13-2019 00:55:26 UTC : : Running SSH setup for oracle user between nodes racnode1

11-13-2019 00:55:33 UTC : : SSH check fine for the racnode1

11-13-2019 00:55:33 UTC : : SSH check fine for the oracle@racnode1

11-13-2019 00:55:33 UTC : : Preapring Device list

11-13-2019 00:55:33 UTC : : Changing Disk permission and ownership

11-13-2019 00:55:33 UTC : : ASM Disk size : 0

11-13-2019 00:55:33 UTC : : ASM Device list will be with failure groups /dev/asm_disk1,

11-13-2019 00:55:33 UTC : : ASM Device list will be groups /dev/asm_disk1

11-13-2019 00:55:33 UTC : : CLUSTER_TYPE env variable is set to STANDALONE, will not process GIMR DEVICE list as default Diskgroup is set to DATA. GIMR DEVICE List will be processed when CLUSTER_TYPE is set to DOMAIN for DSC

11-13-2019 00:55:33 UTC : : Nodes in the cluster racnode1

11-13-2019 00:55:33 UTC : : Setting Device permissions for RAC Install on racnode1

11-13-2019 00:55:33 UTC : : Preapring ASM Device list

11-13-2019 00:55:33 UTC : : Changing Disk permission and ownership

11-13-2019 00:55:33 UTC : : Command : su - $GRID_USER -c "ssh $node sudo chown $GRID_USER:asmadmin $device" execute on racnode1

11-13-2019 00:55:33 UTC : : Command : su - $GRID_USER -c "ssh $node sudo chmod 660 $device" execute on racnode1

11-13-2019 00:55:33 UTC : : Populate Rac Env Vars on Remote Hosts

11-13-2019 00:55:33 UTC : : Command : su - $GRID_USER -c "ssh $node sudo echo \"export ASM_DEVICE_LIST=${ASM_DEVICE_LIST}\" >> $RAC_ENV_FILE" execute on racnode1

11-13-2019 00:55:33 UTC : : Generating Reponsefile

11-13-2019 00:55:34 UTC : : Running cluvfy Checks

11-13-2019 00:55:34 UTC : : Performing Cluvfy Checks

11-13-2019 00:55:59 UTC : : Checking /tmp/cluvfy_check.txt if there is any failed check.

ERROR:

PRVG-10467 : The default Oracle Inventory group could not be determined.

Verifying Physical Memory ...PASSED

Verifying Available Physical Memory ...PASSED

Verifying Swap Size ...FAILED (PRVF-7573)

Verifying Free Space: racnode1:/usr,racnode1:/var,racnode1:/etc,racnode1:/sbin,racnode1:/tmp ...PASSED

Verifying User Existence: grid ...

Verifying Users With Same UID: 54332 ...PASSED

Verifying User Existence: grid ...PASSED

Verifying Group Existence: asmadmin ...PASSED

Verifying Group Existence: dba ...PASSED

Verifying Group Membership: dba ...PASSED

Verifying Group Membership: asmadmin ...PASSED

Verifying Run Level ...PASSED

Verifying Hard Limit: maximum open file descriptors ...PASSED

Verifying Soft Limit: maximum open file descriptors ...PASSED

Verifying Hard Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum stack size ...PASSED

Verifying Architecture ...PASSED

Verifying OS Kernel Version ...PASSED

Verifying OS Kernel Parameter: semmsl ...PASSED

Verifying OS Kernel Parameter: semmns ...PASSED

Verifying OS Kernel Parameter: semopm ...PASSED

Verifying OS Kernel Parameter: semmni ...PASSED

Verifying OS Kernel Parameter: shmmax ...PASSED

Verifying OS Kernel Parameter: shmmni ...PASSED

Verifying OS Kernel Parameter: shmall ...FAILED (PRVG-1201)

Verifying OS Kernel Parameter: file-max ...PASSED

Verifying OS Kernel Parameter: aio-max-nr ...FAILED (PRVG-1205)

Verifying OS Kernel Parameter: panic_on_oops ...PASSED

Verifying Package: binutils-2.23.52.0.1 ...PASSED

Verifying Package: compat-libcap1-1.10 ...PASSED

Verifying Package: libgcc-4.8.2 (x86_64) ...PASSED

Verifying Package: libstdc++-4.8.2 (x86_64) ...PASSED

Verifying Package: libstdc++-devel-4.8.2 (x86_64) ...PASSED

Verifying Package: sysstat-10.1.5 ...PASSED

Verifying Package: ksh ...PASSED

Verifying Package: make-3.82 ...PASSED

Verifying Package: glibc-2.17 (x86_64) ...PASSED

Verifying Package: glibc-devel-2.17 (x86_64) ...PASSED

Verifying Package: libaio-0.3.109 (x86_64) ...PASSED

Verifying Package: libaio-devel-0.3.109 (x86_64) ...PASSED

Verifying Package: nfs-utils-1.2.3-15 ...PASSED

Verifying Package: smartmontools-6.2-4 ...PASSED

Verifying Package: net-tools-2.0-0.17 ...PASSED

Verifying Port Availability for component "Oracle Remote Method Invocation (ORMI)" ...PASSED

Verifying Port Availability for component "Oracle Notification Service (ONS)" ...PASSED

Verifying Port Availability for component "Oracle Cluster Synchronization Services (CSSD)" ...PASSED

Verifying Port Availability for component "Oracle Notification Service (ONS) Enterprise Manager support" ...PASSED

Verifying Port Availability for component "Oracle Database Listener" ...PASSED

Verifying Users With Same UID: 0 ...PASSED

Verifying Current Group ID ...PASSED

Verifying Root user consistency ...PASSED

Verifying Host name ...PASSED

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying Node Connectivity ...WARNING (PRVF-6006, PRKC-1071, PRVG-11078)

Verifying Multicast or broadcast check ...PASSED

Verifying ASM Integrity ...

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying Node Connectivity ...WARNING (PRVF-6006, PRKC-1071, PRVG-11078)

Verifying ASM Integrity ...WARNING (PRVF-6006, PRKC-1071, PRVG-11078)

Verifying Device Checks for ASM ...

Verifying Access Control List check ...PASSED

Verifying Device Checks for ASM ...PASSED

Verifying Network Time Protocol (NTP) ...

Verifying '/etc/ntp.conf' ...PASSED

Verifying '/var/run/ntpd.pid' ...PASSED

Verifying '/var/run/chronyd.pid' ...PASSED

Verifying Network Time Protocol (NTP) ...FAILED (PRVG-1017)

Verifying Same core file name pattern ...PASSED

Verifying User Mask ...PASSED

Verifying User Not In Group "root": grid ...PASSED

Verifying Time zone consistency ...PASSED

Verifying VIP Subnet configuration check ...PASSED

Verifying resolv.conf Integrity ...FAILED (PRVG-10048)

Verifying DNS/NIS name service ...

Verifying Name Service Switch Configuration File Integrity ...PASSED

Verifying DNS/NIS name service ...FAILED (PRVG-1101)

Verifying Single Client Access Name (SCAN) ...WARNING (PRVG-11368)

Verifying Domain Sockets ...PASSED

Verifying /boot mount ...PASSED

Verifying Daemon "avahi-daemon" not configured and running ...PASSED

Verifying Daemon "proxyt" not configured and running ...PASSED

Verifying loopback network interface address ...PASSED

Verifying Oracle base: /u01/app/grid ...

Verifying '/u01/app/grid' ...PASSED

Verifying Oracle base: /u01/app/grid ...PASSED

Verifying User Equivalence ...PASSED

Verifying Network interface bonding status of private interconnect network interfaces ...PASSED

Verifying /dev/shm mounted as temporary file system ...PASSED

Verifying File system mount options for path /var ...PASSED

Verifying zeroconf check ...PASSED

Verifying ASM Filter Driver configuration ...PASSED

Verifying Access control attributes for cluster manifest file ...PASSED

Pre-check for cluster services setup was unsuccessful on all the nodes.

Failures were encountered during execution of CVU verification request "stage -pre crsinst".

Verifying Swap Size ...FAILED

racnode1: PRVF-7573 : Sufficient swap size is not available on node "racnode1"

[Required = 14.4158GB (1.5116076E7KB) ; Found = 8GB (8388604.0KB)]

Verifying OS Kernel Parameter: shmall ...FAILED

racnode1: PRVG-1201 : OS kernel parameter "shmall" does not have expected

configured value on node "racnode1" [Expected = "2251799813685247" ;

Current = "18446744073692774000"; Configured = "1073741824"].

Verifying OS Kernel Parameter: aio-max-nr ...FAILED

racnode1: PRVG-1205 : OS kernel parameter "aio-max-nr" does not have expected

current value on node "racnode1" [Expected = "1048576" ; Current =

"65536"; Configured = "1048576"].

Verifying Node Connectivity ...WARNING

PRVF-6006 : unable to reach the IP addresses "192.168.17.150" from the local

node

PRKC-1071 : Nodes "192.168.17.150" did not respond to ping in "3" seconds,

null

PRVG-11078 : node connectivity failed for subnet "192.168.17.0"

Verifying ASM Integrity ...WARNING

Verifying Node Connectivity ...WARNING

PRVF-6006 : unable to reach the IP addresses "192.168.17.150" from the local

node

PRKC-1071 : Nodes "192.168.17.150" did not respond to ping in "3" seconds,

null

PRVG-11078 : node connectivity failed for subnet "192.168.17.0"

Verifying Network Time Protocol (NTP) ...FAILED

racnode1: PRVG-1017 : NTP configuration file "/etc/ntp.conf" is present on

nodes "racnode1" on which NTP daemon or service was not running

Verifying resolv.conf Integrity ...FAILED

racnode1: PRVG-10048 : Name "racnode1" was not resolved to an address of the

specified type by name servers "127.0.0.11".

Verifying DNS/NIS name service ...FAILED

PRVG-1101 : SCAN name "racnode-scan" failed to resolve

Verifying Single Client Access Name (SCAN) ...WARNING

racnode1: PRVG-11368 : A SCAN is recommended to resolve to "3" or more IP

addresses, but SCAN "racnode-scan" resolves to only "172.16.1.70"

CVU operation performed: stage -pre crsinst

Date: Nov 13, 2019 12:55:36 AM

CVU home: /u01/app/18.3.0/grid/

User: grid

11-13-2019 00:55:59 UTC : : CVU Checks are ignored as IGNORE_CVU_CHECKS set to true. It is recommended to set IGNORE_CVU_CHECKS to false and meet all the cvu checks requirement. RAC installation might fail, if there are failed cvu checks.

11-13-2019 00:55:59 UTC : : Running Grid Installation

11-13-2019 00:56:45 UTC : : Running root.sh

11-13-2019 00:56:45 UTC : : Nodes in the cluster racnode1

11-13-2019 00:56:45 UTC : : Running root.sh on racnode1

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directoryFailed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

11-13-2019 01:06:43 UTC : : Running post root.sh steps

11-13-2019 01:06:43 UTC : : Running post root.sh steps to setup Grid env

11-13-2019 01:49:43 UTC : : Checking Cluster Status

11-13-2019 01:49:43 UTC : : Nodes in the cluster

11-13-2019 01:49:43 UTC : : Removing /tmp/cluvfy_check.txt as cluster check has passed

11-13-2019 01:49:43 UTC : : Generating DB Responsefile Running DB creation

11-13-2019 01:49:43 UTC : : Running DB creation

11-13-2019 02:17:11 UTC : : Checking DB status

11-13-2019 02:17:14 UTC : : #################################################################

11-13-2019 02:17:14 UTC : : Oracle Database ORCLCDB is up and running on racnode1

11-13-2019 02:17:14 UTC : : #################################################################

11-13-2019 02:17:14 UTC : : Running User Script

11-13-2019 02:17:14 UTC : : Setting Remote Listener

11-13-2019 02:17:14 UTC : : ####################################

11-13-2019 02:17:14 UTC : : ORACLE RAC DATABASE IS READY TO USE!

11-13-2019 02:17:14 UTC : : ####################################

^C

[opc@instance-20191111-0822 ~]$

[opc@instance-20191111-0822 ~]$ docker exec -i -t racnode1 /bin/bash

[grid@racnode1 ~]$ id

uid=54332(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54330(racdba),54334(asmadmin),54335(asmdba),54336(asmoper)

[grid@racnode1 ~]$ su - oracle

Password:

Last login: Wed Nov 13 02:17:14 UTC 2019

[oracle@racnode1 ~]$ su - grid

Password:

Last login: Wed Nov 13 01:09:29 UTC 2019

[grid@racnode1 ~]$ id

uid=54332(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54330(racdba),54334(asmadmin),54335(asmdba),54336(asmoper)

[grid@racnode1 ~]$ docker ps -a

-bash: docker: command not found

[grid@racnode1 ~]$ id

uid=54332(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54330(racdba),54334(asmadmin),54335(asmdba),54336(asmoper)

[grid@racnode1 ~]$ exit

logout

[oracle@racnode1 ~]$ exit

logout

[grid@racnode1 ~]$ exit

exit
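The log above reports several failed cluvfy checks, which the install ignored because IGNORE_CVU_CHECKS was true. Below is a minimal sketch for listing only those lines from the long log. The helper name cvu_problems is hypothetical, and the pattern assumes the "Verifying ... ...FAILED/WARNING" format seen above.

```shell
# List the cluvfy checks in CONTAINER's log that ended in FAILED or WARNING.
# Leading whitespace is allowed because cluvfy indents sub-checks.
cvu_problems() {
  docker logs "$1" 2>&1 | grep -E '^[[:space:]]*Verifying .*\.\.\.(FAILED|WARNING)'
}
```

For example, run cvu_problems racnode1. For the log above, the matches include:

- Swap Size, shmall and aio-max-nr
- NTP, resolv.conf and DNS/NIS
- Node Connectivity, ASM Integrity and SCAN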

[opc@instance-20191111-0822 ~]$ docker logs -f racnode1

[opc@instance-20191111-0822 ~]$ docker logs -f racnode1

PATH=/bin:/usr/bin:/sbin:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=racnode1

TERM=xterm

NODE_VIP=172.16.1.160

VIP_HOSTNAME=racnode1-vip

PRIV_IP=192.168.17.150

PRIV_HOSTNAME=racnode1-priv

PUBLIC_IP=172.16.1.150

PUBLIC_HOSTNAME=racnode1

SCAN_NAME=racnode-scan

SCAN_IP=172.16.1.70

OP_TYPE=INSTALL

DOMAIN=example.com

ASM_DEVICE_LIST=/dev/asm_disk1

ASM_DISCOVERY_DIR=/dev

COMMON_OS_PWD_FILE=common_os_pwdfile.enc

SETUP_LINUX_FILE=setupLinuxEnv.sh

INSTALL_DIR=/opt/scripts

GRID_BASE=/u01/app/grid

GRID_HOME=/u01/app/18.3.0/grid

INSTALL_FILE_1=LINUX.X64_180000_grid_home.zip

GRID_INSTALL_RSP=grid.rsp

GRID_SW_INSTALL_RSP=grid_sw_inst.rsp

GRID_SETUP_FILE=setupGrid.sh

FIXUP_PREQ_FILE=fixupPreq.sh

INSTALL_GRID_BINARIES_FILE=installGridBinaries.sh

INSTALL_GRID_PATCH=applyGridPatch.sh

INVENTORY=/u01/app/oraInventory

CONFIGGRID=configGrid.sh

ADDNODE=AddNode.sh

DELNODE=DelNode.sh

ADDNODE_RSP=grid_addnode.rsp

SETUPSSH=setupSSH.expect

GRID_PATCH=p28322130_183000OCWRU_Linux-x86-64.zip

PATCH_NUMBER=28322130

DOCKERORACLEINIT=dockeroracleinit

GRID_USER_HOME=/home/grid

SETUPGRIDENV=setupGridEnv.sh

RESET_OS_PASSWORD=resetOSPassword.sh

MULTI_NODE_INSTALL=MultiNodeInstall.py

DB_BASE=/u01/app/oracle

DB_HOME=/u01/app/oracle/product/18.3.0/dbhome_1

INSTALL_FILE_2=LINUX.X64_180000_db_home.zip

DB_INSTALL_RSP=db_inst.rsp

DBCA_RSP=dbca.rsp

DB_SETUP_FILE=setupDB.sh

PWD_FILE=setPassword.sh

RUN_FILE=runOracle.sh

STOP_FILE=stopOracle.sh

ENABLE_RAC_FILE=enableRAC.sh

CHECK_DB_FILE=checkDBStatus.sh

USER_SCRIPTS_FILE=runUserScripts.sh

REMOTE_LISTENER_FILE=remoteListener.sh

INSTALL_DB_BINARIES_FILE=installDBBinaries.sh

FUNCTIONS=functions.sh

COMMON_SCRIPTS=/common_scripts

CHECK_SPACE_FILE=checkSpace.sh

EXPECT=/usr/bin/expect

BIN=/usr/sbin

container=true

INSTALL_SCRIPTS=/opt/scripts/install

SCRIPT_DIR=/opt/scripts/startup

GRID_PATH=/u01/app/18.3.0/grid/bin:/u01/app/18.3.0/grid/OPatch/:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

DB_PATH=/u01/app/oracle/product/18.3.0/dbhome_1/bin:/u01/app/oracle/product/18.3.0/dbhome_1/OPatch/:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

GRID_LD_LIBRARY_PATH=/u01/app/18.3.0/grid/lib:/usr/lib:/lib

DB_LD_LIBRARY_PATH=/u01/app/oracle/product/18.3.0/dbhome_1/lib:/usr/lib:/lib

HOME=/home/grid

Failed to parse kernel command line, ignoring: No such file or directory

systemd 219 running in system mode. (+PAM +AUDIT +SELINUX +IMA -APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 -SECCOMP +BLKID +ELFUTILS +KMOD +IDN)

Detected virtualization other.

Detected architecture x86-64.

Welcome to Oracle Linux Server 7.6!

Set hostname to <racnode1>.

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

/usr/lib/systemd/system-generators/systemd-fstab-generator failed with error code 1.

Binding to IPv6 address not available since kernel does not support IPv6.

Binding to IPv6 address not available since kernel does not support IPv6.

Cannot add dependency job for unit display-manager.service, ignoring: Unit not found.

[ OK ] Reached target Swap.

[ OK ] Started Dispatch Password Requests to Console Directory Watch.

[ OK ] Started Forward Password Requests to Wall Directory Watch.

[ OK ] Created slice Root Slice.

[ OK ] Created slice System Slice.

[ OK ] Created slice User and Session Slice.

[ OK ] Reached target Slices.

[ OK ] Listening on Journal Socket.

Couldn't determine result for ConditionKernelCommandLine=|rd.modules-load for systemd-modules-load.service, assuming failed: No such file or directory

Couldn't determine result for ConditionKernelCommandLine=|modules-load for systemd-modules-load.service, assuming failed: No such file or directory

Starting Read and set NIS domainname from /etc/sysconfig/network...

Starting Journal Service...

[ OK ] Listening on Delayed Shutdown Socket.

[ OK ] Reached target RPC Port Mapper.

Starting Configure read-only root support...

Starting Rebuild Hardware Database...

[ OK ] Reached target Local File Systems (Pre).

[ OK ] Reached target Local Encrypted Volumes.

[ OK ] Created slice system-getty.slice.

[ OK ] Listening on /dev/initctl Compatibility Named Pipe.

[ OK ] Started Journal Service.

[ OK ] Started Read and set NIS domainname from /etc/sysconfig/network.

Starting Flush Journal to Persistent Storage...

[ OK ] Started Configure read-only root support.

[ OK ] Reached target Local File Systems.

Starting Preprocess NFS configuration...

Starting Rebuild Journal Catalog...

Starting Mark the need to relabel after reboot...

Starting Load/Save Random Seed...

[ OK ] Started Mark the need to relabel after reboot.

[ OK ] Started Load/Save Random Seed.

[ OK ] Started Flush Journal to Persistent Storage.

[ OK ] Started Rebuild Journal Catalog.

[ OK ] Started Preprocess NFS configuration.

Starting Create Volatile Files and Directories...

[ OK ] Started Create Volatile Files and Directories.

Starting Update UTMP about System Boot/Shutdown...

Mounting RPC Pipe File System...

[FAILED] Failed to mount RPC Pipe File System.

See 'systemctl status var-lib-nfs-rpc_pipefs.mount' for details.

[DEPEND] Dependency failed for rpc_pipefs.target.

[DEPEND] Dependency failed for RPC security service for NFS client and server.

[ OK ] Started Update UTMP about System Boot/Shutdown.

[ OK ] Started Rebuild Hardware Database.

Starting Update is Completed...

[ OK ] Started Update is Completed.

[ OK ] Reached target System Initialization.

[ OK ] Listening on RPCbind Server Activation Socket.

Starting RPC bind service...

[ OK ] Listening on D-Bus System Message Bus Socket.

[ OK ] Reached target Sockets.

[ OK ] Started Daily Cleanup of Temporary Directories.

[ OK ] Reached target Timers.

[ OK ] Started Flexible branding.

[ OK ] Reached target Paths.

[ OK ] Reached target Basic System.

[ OK ] Started Self Monitoring and Reporting Technology (SMART) Daemon.

Starting OpenSSH Server Key Generation...

[ OK ] Started D-Bus System Message Bus.

Starting Login Service...

Starting LSB: Bring up/down networking...

Starting Resets System Activity Logs...

Starting GSSAPI Proxy Daemon...

[ OK ] Started RPC bind service.

Starting Cleanup of Temporary Directories...

[ OK ] Started Resets System Activity Logs.

[ OK ] Started Login Service.

[ OK ] Started Cleanup of Temporary Directories.

[ OK ] Started GSSAPI Proxy Daemon.

[ OK ] Reached target NFS client services.

[ OK ] Reached target Remote File Systems (Pre).

[ OK ] Reached target Remote File Systems.

Starting Permit User Sessions...

[ OK ] Started Permit User Sessions.

[ OK ] Started Command Scheduler.

[ OK ] Started OpenSSH Server Key Generation.

[ OK ] Started LSB: Bring up/down networking.

[ OK ] Reached target Network.

Starting /etc/rc.d/rc.local Compatibility...

Starting OpenSSH server daemon...

[ OK ] Reached target Network is Online.

Starting Notify NFS peers of a restart...

[ OK ] Started /etc/rc.d/rc.local Compatibility.

[ OK ] Started Notify NFS peers of a restart.

[ OK ] Started Console Getty.

[ OK ] Reached target Login Prompts.

[ OK ] Started OpenSSH server daemon.

[ OK ] Reached target Multi-User System.

[ OK ] Reached target Graphical Interface.

Starting Update UTMP about System Runlevel Changes...

[ OK ] Started Update UTMP about System Runlevel Changes.

11-13-2019 00:53:47 UTC : : Process id of the program :

11-13-2019 00:53:47 UTC : : #################################################

11-13-2019 00:53:47 UTC : : Starting Grid Installation

11-13-2019 00:53:47 UTC : : #################################################

11-13-2019 00:53:47 UTC : : Pre-Grid Setup steps are in process

11-13-2019 00:53:47 UTC : : Process id of the program :

11-13-2019 00:53:47 UTC : : Disable failed service var-lib-nfs-rpc_pipefs.mount

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

11-13-2019 00:53:48 UTC : : Resetting Failed Services

11-13-2019 00:53:48 UTC : : Sleeping for 60 seconds

Oracle Linux Server 7.6

Kernel 4.14.35-1902.6.6.el7uek.x86_64 on an x86_64

racnode1 login: 11-13-2019 00:54:48 UTC : : Systemctl state is running!

11-13-2019 00:54:48 UTC : : Setting correct permissions for /bin/ping

11-13-2019 00:54:48 UTC : : Public IP is set to 172.16.1.150

11-13-2019 00:54:48 UTC : : RAC Node PUBLIC Hostname is set to racnode1

11-13-2019 00:54:48 UTC : : racnode1 already exists : 172.16.1.150 racnode1.example.com racnode1

192.168.17.150 racnode1-priv.example.com racnode1-priv

172.16.1.160 racnode1-vip.example.com racnode1-vip, no update required

11-13-2019 00:54:48 UTC : : racnode1-priv already exists : 192.168.17.150 racnode1-priv.example.com racnode1-priv, no update required

11-13-2019 00:54:48 UTC : : racnode1-vip already exists : 172.16.1.160 racnode1-vip.example.com racnode1-vip, no update required

11-13-2019 00:54:48 UTC : : racnode-scan already exists : 172.16.1.70 racnode-scan.example.com racnode-scan, no update required

11-13-2019 00:54:48 UTC : : Preapring Device list

11-13-2019 00:54:48 UTC : : Changing Disk permission and ownership /dev/asm_disk1

11-13-2019 00:54:48 UTC : : #####################################################################

11-13-2019 00:54:48 UTC : : RAC setup will begin in 2 minutes

11-13-2019 00:54:48 UTC : : ####################################################################

11-13-2019 00:54:50 UTC : : ###################################################

11-13-2019 00:54:50 UTC : : Pre-Grid Setup steps completed

11-13-2019 00:54:50 UTC : : ###################################################

11-13-2019 00:54:50 UTC : : Checking if grid is already configured

11-13-2019 00:54:50 UTC : : Process id of the program :

11-13-2019 00:54:50 UTC : : Public IP is set to 172.16.1.150

11-13-2019 00:54:50 UTC : : RAC Node PUBLIC Hostname is set to racnode1

11-13-2019 00:54:50 UTC : : Domain is defined to example.com

11-13-2019 00:54:50 UTC : : Default setting of AUTO GNS VIP set to false. If you want to use AUTO GNS VIP, please pass DHCP_CONF as an env parameter set to true

11-13-2019 00:54:50 UTC : : RAC VIP set to 172.16.1.160

11-13-2019 00:54:50 UTC : : RAC Node VIP hostname is set to racnode1-vip

11-13-2019 00:54:50 UTC : : SCAN_NAME name is racnode-scan

11-13-2019 00:54:50 UTC : : SCAN PORT is set to empty string. Setting it to 1521 port.

11-13-2019 00:54:50 UTC : : 172.16.1.70

11-13-2019 00:54:50 UTC : : SCAN Name resolving to IP. Check Passed!

11-13-2019 00:54:50 UTC : : SCAN_IP name is 172.16.1.70

11-13-2019 00:54:50 UTC : : RAC Node PRIV IP is set to 192.168.17.150

11-13-2019 00:54:50 UTC : : RAC Node private hostname is set to racnode1-priv

11-13-2019 00:54:50 UTC : : CMAN_NAME set to the empty string

11-13-2019 00:54:50 UTC : : CMAN_IP set to the empty string

11-13-2019 00:54:50 UTC : : Cluster Name is not defined

11-13-2019 00:54:50 UTC : : Cluster name is set to 'racnode-c'

11-13-2019 00:54:50 UTC : : Password file generated

11-13-2019 00:54:50 UTC : : Common OS Password string is set for Grid user

11-13-2019 00:54:50 UTC : : Common OS Password string is set for Oracle user

11-13-2019 00:54:50 UTC : : Common OS Password string is set for Oracle Database

11-13-2019 00:54:50 UTC : : Setting CONFIGURE_GNS to false

11-13-2019 00:54:50 UTC : : GRID_RESPONSE_FILE env variable set to empty. configGrid.sh will use standard cluster responsefile

11-13-2019 00:54:50 UTC : : Location for User script SCRIPT_ROOT set to /common_scripts

11-13-2019 00:54:50 UTC : : IGNORE_CVU_CHECKS is set to true

11-13-2019 00:54:50 UTC : : Oracle SID is set to ORCLCDB

11-13-2019 00:54:50 UTC : : Oracle PDB name is set to ORCLPDB

11-13-2019 00:54:50 UTC : : Check passed for network card eth1 for public IP 172.16.1.150

11-13-2019 00:54:50 UTC : : Public Netmask : 255.255.255.0

11-13-2019 00:54:50 UTC : : Check passed for network card eth0 for private IP 192.168.17.150

11-13-2019 00:54:50 UTC : : Building NETWORK_STRING to set networkInterfaceList in Grid Response File

11-13-2019 00:54:50 UTC : : Network InterfaceList set to eth1:172.16.1.0:1,eth0:192.168.17.0:5

11-13-2019 00:54:50 UTC : : Setting random password for grid user

11-13-2019 00:54:50 UTC : : Setting random password for oracle user

11-13-2019 00:54:50 UTC : : Calling setupSSH function

11-13-2019 00:54:50 UTC : : SSh will be setup among racnode1 nodes

11-13-2019 00:54:50 UTC : : Running SSH setup for grid user between nodes racnode1

11-13-2019 00:55:26 UTC : : Running SSH setup for oracle user between nodes racnode1

11-13-2019 00:55:33 UTC : : SSH check fine for the racnode1

11-13-2019 00:55:33 UTC : : SSH check fine for the oracle@racnode1

11-13-2019 00:55:33 UTC : : Preapring Device list

11-13-2019 00:55:33 UTC : : Changing Disk permission and ownership

11-13-2019 00:55:33 UTC : : ASM Disk size : 0

11-13-2019 00:55:33 UTC : : ASM Device list will be with failure groups /dev/asm_disk1,

11-13-2019 00:55:33 UTC : : ASM Device list will be groups /dev/asm_disk1

11-13-2019 00:55:33 UTC : : CLUSTER_TYPE env variable is set to STANDALONE, will not process GIMR DEVICE list as default Diskgroup is set to DATA. GIMR DEVICE List will be processed when CLUSTER_TYPE is set to DOMAIN for DSC

11-13-2019 00:55:33 UTC : : Nodes in the cluster racnode1

11-13-2019 00:55:33 UTC : : Setting Device permissions for RAC Install on racnode1

11-13-2019 00:55:33 UTC : : Preapring ASM Device list

11-13-2019 00:55:33 UTC : : Changing Disk permission and ownership

11-13-2019 00:55:33 UTC : : Command : su - $GRID_USER -c "ssh $node sudo chown $GRID_USER:asmadmin $device" execute on racnode1

11-13-2019 00:55:33 UTC : : Command : su - $GRID_USER -c "ssh $node sudo chmod 660 $device" execute on racnode1

11-13-2019 00:55:33 UTC : : Populate Rac Env Vars on Remote Hosts

11-13-2019 00:55:33 UTC : : Command : su - $GRID_USER -c "ssh $node sudo echo \"export ASM_DEVICE_LIST=${ASM_DEVICE_LIST}\" >> $RAC_ENV_FILE" execute on racnode1

11-13-2019 00:55:33 UTC : : Generating Reponsefile

11-13-2019 00:55:34 UTC : : Running cluvfy Checks

11-13-2019 00:55:34 UTC : : Performing Cluvfy Checks

11-13-2019 00:55:59 UTC : : Checking /tmp/cluvfy_check.txt if there is any failed check.

ERROR:

PRVG-10467 : The default Oracle Inventory group could not be determined.

Verifying Physical Memory ...PASSED

Verifying Available Physical Memory ...PASSED

Verifying Swap Size ...FAILED (PRVF-7573)

Verifying Free Space: racnode1:/usr,racnode1:/var,racnode1:/etc,racnode1:/sbin,racnode1:/tmp ...PASSED

Verifying User Existence: grid ...

Verifying Users With Same UID: 54332 ...PASSED

Verifying User Existence: grid ...PASSED

Verifying Group Existence: asmadmin ...PASSED

Verifying Group Existence: dba ...PASSED

Verifying Group Membership: dba ...PASSED

Verifying Group Membership: asmadmin ...PASSED

Verifying Run Level ...PASSED

Verifying Hard Limit: maximum open file descriptors ...PASSED

Verifying Soft Limit: maximum open file descriptors ...PASSED

Verifying Hard Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum stack size ...PASSED

Verifying Architecture ...PASSED

Verifying OS Kernel Version ...PASSED

Verifying OS Kernel Parameter: semmsl ...PASSED

Verifying OS Kernel Parameter: semmns ...PASSED

Verifying OS Kernel Parameter: semopm ...PASSED

Verifying OS Kernel Parameter: semmni ...PASSED

Verifying OS Kernel Parameter: shmmax ...PASSED

Verifying OS Kernel Parameter: shmmni ...PASSED

Verifying OS Kernel Parameter: shmall ...FAILED (PRVG-1201)

Verifying OS Kernel Parameter: file-max ...PASSED

Verifying OS Kernel Parameter: aio-max-nr ...FAILED (PRVG-1205)

Verifying OS Kernel Parameter: panic_on_oops ...PASSED

Verifying Package: binutils-2.23.52.0.1 ...PASSED

Verifying Package: compat-libcap1-1.10 ...PASSED

Verifying Package: libgcc-4.8.2 (x86_64) ...PASSED

Verifying Package: libstdc++-4.8.2 (x86_64) ...PASSED

Verifying Package: libstdc++-devel-4.8.2 (x86_64) ...PASSED

Verifying Package: sysstat-10.1.5 ...PASSED

Verifying Package: ksh ...PASSED

Verifying Package: make-3.82 ...PASSED

Verifying Package: glibc-2.17 (x86_64) ...PASSED

Verifying Package: glibc-devel-2.17 (x86_64) ...PASSED

Verifying Package: libaio-0.3.109 (x86_64) ...PASSED

Verifying Package: libaio-devel-0.3.109 (x86_64) ...PASSED

Verifying Package: nfs-utils-1.2.3-15 ...PASSED

Verifying Package: smartmontools-6.2-4 ...PASSED

Verifying Package: net-tools-2.0-0.17 ...PASSED

Verifying Port Availability for component "Oracle Remote Method Invocation (ORMI)" ...PASSED

Verifying Port Availability for component "Oracle Notification Service (ONS)" ...PASSED

Verifying Port Availability for component "Oracle Cluster Synchronization Services (CSSD)" ...PASSED

Verifying Port Availability for component "Oracle Notification Service (ONS) Enterprise Manager support" ...PASSED

Verifying Port Availability for component "Oracle Database Listener" ...PASSED

Verifying Users With Same UID: 0 ...PASSED

Verifying Current Group ID ...PASSED

Verifying Root user consistency ...PASSED

Verifying Host name ...PASSED

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying Node Connectivity ...WARNING (PRVF-6006, PRKC-1071, PRVG-11078)

Verifying Multicast or broadcast check ...PASSED

Verifying ASM Integrity ...

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying Node Connectivity ...WARNING (PRVF-6006, PRKC-1071, PRVG-11078)

Verifying ASM Integrity ...WARNING (PRVF-6006, PRKC-1071, PRVG-11078)

Verifying Device Checks for ASM ...

Verifying Access Control List check ...PASSED

Verifying Device Checks for ASM ...PASSED

Verifying Network Time Protocol (NTP) ...

Verifying '/etc/ntp.conf' ...PASSED

Verifying '/var/run/ntpd.pid' ...PASSED

Verifying '/var/run/chronyd.pid' ...PASSED

Verifying Network Time Protocol (NTP) ...FAILED (PRVG-1017)

Verifying Same core file name pattern ...PASSED

Verifying User Mask ...PASSED

Verifying User Not In Group "root": grid ...PASSED

Verifying Time zone consistency ...PASSED

Verifying VIP Subnet configuration check ...PASSED

Verifying resolv.conf Integrity ...FAILED (PRVG-10048)

Verifying DNS/NIS name service ...

Verifying Name Service Switch Configuration File Integrity ...PASSED

Verifying DNS/NIS name service ...FAILED (PRVG-1101)

Verifying Single Client Access Name (SCAN) ...WARNING (PRVG-11368)

Verifying Domain Sockets ...PASSED

Verifying /boot mount ...PASSED

Verifying Daemon "avahi-daemon" not configured and running ...PASSED

Verifying Daemon "proxyt" not configured and running ...PASSED

Verifying loopback network interface address ...PASSED

Verifying Oracle base: /u01/app/grid ...

Verifying '/u01/app/grid' ...PASSED

Verifying Oracle base: /u01/app/grid ...PASSED

Verifying User Equivalence ...PASSED

Verifying Network interface bonding status of private interconnect network interfaces ...PASSED

Verifying /dev/shm mounted as temporary file system ...PASSED

Verifying File system mount options for path /var ...PASSED

Verifying zeroconf check ...PASSED

Verifying ASM Filter Driver configuration ...PASSED

Verifying Access control attributes for cluster manifest file ...PASSED

Pre-check for cluster services setup was unsuccessful on all the nodes.

Failures were encountered during execution of CVU verification request "stage -pre crsinst".

Verifying Swap Size ...FAILED

racnode1: PRVF-7573 : Sufficient swap size is not available on node "racnode1"

[Required = 14.4158GB (1.5116076E7KB) ; Found = 8GB (8388604.0KB)]

Verifying OS Kernel Parameter: shmall ...FAILED

racnode1: PRVG-1201 : OS kernel parameter "shmall" does not have expected

configured value on node "racnode1" [Expected = "2251799813685247" ;

Current = "18446744073692774000"; Configured = "1073741824"].

Verifying OS Kernel Parameter: aio-max-nr ...FAILED

racnode1: PRVG-1205 : OS kernel parameter "aio-max-nr" does not have expected

current value on node "racnode1" [Expected = "1048576" ; Current =

"65536"; Configured = "1048576"].

Verifying Node Connectivity ...WARNING

PRVF-6006 : unable to reach the IP addresses "192.168.17.150" from the local

node

PRKC-1071 : Nodes "192.168.17.150" did not respond to ping in "3" seconds,

null

PRVG-11078 : node connectivity failed for subnet "192.168.17.0"

Verifying ASM Integrity ...WARNING

Verifying Node Connectivity ...WARNING

PRVF-6006 : unable to reach the IP addresses "192.168.17.150" from the local

node

PRKC-1071 : Nodes "192.168.17.150" did not respond to ping in "3" seconds,

null

PRVG-11078 : node connectivity failed for subnet "192.168.17.0"

Verifying Network Time Protocol (NTP) ...FAILED

racnode1: PRVG-1017 : NTP configuration file "/etc/ntp.conf" is present on

nodes "racnode1" on which NTP daemon or service was not running

Verifying resolv.conf Integrity ...FAILED

racnode1: PRVG-10048 : Name "racnode1" was not resolved to an address of the

specified type by name servers "127.0.0.11".

Verifying DNS/NIS name service ...FAILED

PRVG-1101 : SCAN name "racnode-scan" failed to resolve

Verifying Single Client Access Name (SCAN) ...WARNING

racnode1: PRVG-11368 : A SCAN is recommended to resolve to "3" or more IP

addresses, but SCAN "racnode-scan" resolves to only "172.16.1.70"

CVU operation performed: stage -pre crsinst

Date: Nov 13, 2019 12:55:36 AM

CVU home: /u01/app/18.3.0/grid/

User: grid

11-13-2019 00:55:59 UTC : : CVU Checks are ignored as IGNORE_CVU_CHECKS set to true. It is recommended to set IGNORE_CVU_CHECKS to false and meet all the cvu checks requirement. RAC installation might fail, if there are failed cvu checks.

11-13-2019 00:55:59 UTC : : Running Grid Installation

11-13-2019 00:56:45 UTC : : Running root.sh

11-13-2019 00:56:45 UTC : : Nodes in the cluster racnode1

11-13-2019 00:56:45 UTC : : Running root.sh on racnode1

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

11-13-2019 01:06:43 UTC : : Running post root.sh steps

11-13-2019 01:06:43 UTC : : Running post root.sh steps to setup Grid env

11-13-2019 01:49:43 UTC : : Checking Cluster Status

11-13-2019 01:49:43 UTC : : Nodes in the cluster

11-13-2019 01:49:43 UTC : : Removing /tmp/cluvfy_check.txt as cluster check has passed

11-13-2019 01:49:43 UTC : : Generating DB Responsefile Running DB creation

11-13-2019 01:49:43 UTC : : Running DB creation

11-13-2019 02:17:11 UTC : : Checking DB status

11-13-2019 02:17:14 UTC : : #################################################################

11-13-2019 02:17:14 UTC : : Oracle Database ORCLCDB is up and running on racnode1

11-13-2019 02:17:14 UTC : : #################################################################

11-13-2019 02:17:14 UTC : : Running User Script

11-13-2019 02:17:14 UTC : : Setting Remote Listener

11-13-2019 02:17:14 UTC : : ####################################

11-13-2019 02:17:14 UTC : : ORACLE RAC DATABASE IS READY TO USE!

11-13-2019 02:17:14 UTC : : ####################################
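The installation continued only because IGNORE_CVU_CHECKS is true. The failed cluvfy checks (swap size, shmall, aio-max-nr, NTP, DNS/SCAN resolution) are therefore still worth reviewing. Here is how the swap numbers work out. The required 1.5116076E7 KB is 15116076 / 1048576 ≈ 14.42 GB, which appears to be the node's physical memory. The configured swap is 8388604 KB (8 GB). As I understand the 18c Grid Infrastructure installation guide, swap should equal RAM up to 16 GB and is capped at 16 GB above that, which is consistent with this requirement. Below is a minimal sketch of hypothetical helpers (not part of the Oracle image):

- one pulls the distinct FAILED/WARNING lines out of the container log;
- the other applies that swap rule to a meminfo-style file.

Verify the rule against the guide for your release.

```shell
#!/usr/bin/env bash
# Sketch: triage cluvfy results after an install that ran with IGNORE_CVU_CHECKS=true.
# All function names are hypothetical helpers, not part of the Oracle image.

# Read a log on stdin and print each distinct "Verifying ... ...FAILED/WARNING"
# line once, in order of first appearance, with leading indentation removed.
summarize_cluvfy() {
  grep -E '^[[:space:]]*Verifying .*\.\.\.(FAILED|WARNING)' \
    | sed -E 's/^[[:space:]]+//' \
    | awk '!seen[$0]++'
}

# Swap (KB) expected for a given RAM size (KB), assuming "swap = RAM, capped at 16 GB".
# Only meant for hosts with several GB of RAM; small hosts follow other ratios.
required_swap_kb() {
  local mem_kb="$1"
  local cap_kb=$((16 * 1024 * 1024))
  if (( mem_kb > cap_kb )); then echo "$cap_kb"; else echo "$mem_kb"; fi
}

# Compare SwapTotal with the requirement derived from MemTotal (both in KB).
check_swap() {
  local meminfo="${1:-/proc/meminfo}" mem swap need
  mem=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  swap=$(awk '/^SwapTotal:/ {print $2}' "$meminfo")
  need=$(required_swap_kb "$mem")
  if (( swap >= need )); then
    echo "swap OK: ${swap} KB >= ${need} KB"
  else
    echo "swap short by $((need - swap)) KB (have ${swap}, need ${need})"
  fi
}
```

On the host this could be used as `docker logs racnode1 2>&1 | summarize_cluvfy` and `check_swap /proc/meminfo`. With the values cluvfy reported above, check_swap would report a shortfall of 6727472 KB (about 6.4 GB).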

The hosts file at this point:

$ cat /opt/containers/rac_host_file

127.0.0.1 localhost.localdomain localhost

172.16.1.150 racnode1.example.com racnode1

192.168.17.150 racnode1-priv.example.com racnode1-priv

172.16.1.160 racnode1-vip.example.com racnode1-vip

172.16.1.70 racnode-scan.example.com racnode-scan
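Every container mounts /opt/containers/rac_host_file as its /etc/hosts, and the setup scripts read it (the log above reports each name as "already exists"). A quick way to confirm that the file contains every name a node needs can therefore help, for example before starting another node. This is a minimal sketch; `check_hosts` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: report which of the given host names have no entry in a hosts-format file.
# Usage: check_hosts FILE NAME...   (exit status 0 only when every name is present)
check_hosts() {
  local hosts_file="$1"; shift
  local name status=0
  for name in "$@"; do
    # Skip comment lines; compare every name column (2..NF) with the name exactly.
    if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit found ? 0 : 1 }' "$hosts_file"; then
      echo "missing: $name"
      status=1
    fi
  done
  return "$status"
}
```

For node 1 this would be `check_hosts /opt/containers/rac_host_file racnode1 racnode1-priv racnode1-vip racnode-scan`.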

Connect to the first RAC node and confirm that Grid Infrastructure (GI) and the database were installed correctly:

docker exec -i -t racnode1 /bin/bash

Check the cluster status. Everything is online:

[grid@racnode1 ~]$ id

uid=54332(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54330(racdba),54334(asmadmin),54335(asmdba),54336(asmoper)

[grid@racnode1 ~]$ crsctl check cluster

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[grid@racnode1 ~]$ ps -ef|grep smon

grid 9642 1 0 01:28 ? 00:00:00 mdb_smon_-MGMTDB

oracle 10943 1 0 02:14 ? 00:00:00 ora_smon_ORCLCDB1

root 16436 1 1 01:02 ? 00:02:08 /u01/app/18.3.0/grid/bin/osysmond.bin

grid 17404 1 0 01:03 ? 00:00:00 asm_smon_+ASM1

grid 25860 25630 0 02:51 pts/1 00:00:00 grep --color=auto smon
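The ps output above shows three SMON processes on node 1: asm_smon_+ASM1, ora_smon_ORCLCDB1 and mdb_smon_-MGMTDB. The same check can be scripted, for instance to rerun after a container restart. This is a sketch: `check_smon` is a hypothetical helper, and the expected names are taken from this output. On another node the instance suffixes would presumably differ.

```shell
#!/usr/bin/env bash
# Sketch: read "ps -ef" style output on stdin and confirm each named process is present.
# Prints one line per missing process; returns 0 only if all are present.
check_smon() {
  local listing name status=0
  listing=$(cat)
  for name in "$@"; do
    # The command is the last whitespace-separated field of each ps -ef line.
    if ! awk -v n="$name" '$NF == n { found = 1 } END { exit found ? 0 : 1 }' <<<"$listing"; then
      echo "not running: $name"
      status=1
    fi
  done
  return "$status"
}
```

From the host this could be run as `docker exec racnode1 ps -ef | check_smon asm_smon_+ASM1 ora_smon_ORCLCDB1 mdb_smon_-MGMTDB`.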

[grid@racnode1 ~]$ export ORACLE_HOME=/u01/app/18.3.0/grid

[grid@racnode1 ~]$ lsnrctl status

LSNRCTL for Linux: Version 18.0.0.0.0 - Production on 13-NOV-2019 02:51:42

Copyright (c) 1991, 2018, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER)))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 18.0.0.0.0 - Production

Start Date 13-NOV-2019 01:06:31

Uptime 0 days 1 hr. 45 min. 10 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/18.3.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/racnode1/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=172.16.1.150)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=172.16.1.160)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcps)(HOST=racnode1.example.com)(PORT=5500))(Security=(my_wallet_directory=/u01/app/oracle/product/18.3.0/dbhome_1/admin/ORCLCDB/xdb_wallet))(Presentation=HTTP)(Session=RAW))

Services Summary...

Service "+ASM" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "+ASM_DATA" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "9731f177beb82dfde053960110ac1fcf" has 1 instance(s).

Instance "ORCLCDB1", status READY, has 1 handler(s) for this service...

Service "ORCLCDB" has 1 instance(s).

Instance "ORCLCDB1", status READY, has 1 handler(s) for this service...

Service "ORCLCDBXDB" has 1 instance(s).

Instance "ORCLCDB1", status READY, has 1 handler(s) for this service...

Service "orclpdb" has 1 instance(s).

Instance "ORCLCDB1", status READY, has 1 handler(s) for this service...

The command completed successfully

Switch to the oracle user. Logging in to the database works as well:

[oracle@racnode1 ~]$ export ORACLE_HOME=/u01/app/oracle/product/18.3.0/dbhome_1/

[oracle@racnode1 ~]$ cat $ORACLE_HOME/network/admin/tnsnames.ora

# tnsnames.ora Network Configuration File: /u01/app/oracle/product/18.3.0/dbhome_1/network/admin/tnsnames.ora

# Generated by Oracle configuration tools.

ORCLCDB =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = racnode-scan)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = ORCLCDB)

)

)

[oracle@racnode1 ~]$ sqlplus sys/Oracle.123#@ORCLCDB as sysdba

SQL*Plus: Release 18.0.0.0.0 - Production on Wed Nov 13 02:58:25 2019

Version 18.3.0.0.0

Copyright (c) 1982, 2018, Oracle. All rights reserved.

Connected to:

Oracle Database 18c Enterprise Edition Release 18.0.0.0.0 - Production

Version 18.3.0.0.0

SQL> select $instance_name from v$instance;

select $instance_name from v$instance

*

ERROR at line 1:

ORA-00911: invalid character

SQL> select instance_name from v$instance;

INSTANCE_NAME

----------------

ORCLCDB1

SQL> exit

Disconnected from Oracle Database 18c Enterprise Edition Release 18.0.0.0.0 - Production

Version 18.3.0.0.0

Adding a Second RAC Node

First, create the container for RAC node 2:

$ docker create -t -i \

--hostname racnode2 \

--volume /boot:/boot:ro \

--volume /dev/shm \

--tmpfs /dev/shm:rw,exec,size=4G \

--volume /opt/containers/rac_host_file:/etc/hosts \

--dns-search=example.com \

--device=/dev/sdb:/dev/asm_disk1 \

--privileged=false \

--cap-add=SYS_NICE \

--cap-add=SYS_RESOURCE \

--cap-add=NET_ADMIN \

-e NODE_VIP=172.16.1.161 \

-e VIP_HOSTNAME=racnode2-vip \

-e PRIV_IP=192.168.17.151 \

-e PRIV_HOSTNAME=racnode2-priv \

-e PUBLIC_IP=172.16.1.151 \

-e PUBLIC_HOSTNAME=racnode2 \

-e SCAN_NAME=racnode-scan \

-e SCAN_IP=172.16.1.70 \

-e DOMAIN=example.com \

-e ASM_DEVICE_LIST=/dev/asm_disk1 \

-e ASM_DISCOVERY_DIR=/dev \

-e OS_PASSWORD=Oracle_18c \

-e EXISTING_CLS_NODES=racnode1 \

-e ORACLE_SID=ORCLCDB \

-e OP_TYPE=ADDNODE \

--restart=always --tmpfs=/run -v /sys/fs/cgroup:/sys/fs/cgroup:ro \

--cpu-rt-runtime=95000 --ulimit rtprio=99 \

--name racnode2 \

container-registry.oracle.com/database/rac:18.3.0

Attach racnode2 to the RAC networks:

docker network disconnect bridge racnode2

docker network connect rac_pub1_nw --ip 172.16.1.151 racnode2

docker network connect rac_priv1_nw --ip 192.168.17.151 racnode2

Start racnode2:

docker start racnode2
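racnode2 was detached from the default bridge network and attached to rac_pub1_nw and rac_priv1_nw by hand, so it may be worth confirming its addresses once it is running. Below is a sketch using the Go-template output of `docker inspect`; the function names are hypothetical. I am assuming the per-network IPAddress field is only filled in after the container starts, which is why this check comes after `docker start`.

```shell
#!/usr/bin/env bash
# Sketch: verify a container's RAC network attachments (helper names are hypothetical).

# Print "network=ip" pairs, one per line, for every network the container is on.
node_networks() {
  docker inspect -f '{{range $net, $cfg := .NetworkSettings.Networks}}{{$net}}={{$cfg.IPAddress}}{{"\n"}}{{end}}' "$1"
}

# Check the expected public/private IPs and that the default bridge is gone.
check_node_networks() {
  local node="$1" pub_ip="$2" priv_ip="$3" nets
  nets=$(node_networks "$node") || return 1
  grep -qx "rac_pub1_nw=$pub_ip" <<<"$nets" || { echo "public IP not $pub_ip"; return 1; }
  grep -qx "rac_priv1_nw=$priv_ip" <<<"$nets" || { echo "private IP not $priv_ip"; return 1; }
  if grep -q '^bridge=' <<<"$nets"; then echo "still on bridge"; return 1; fi
  echo "ok"
}
```

For this node: `check_node_networks racnode2 172.16.1.151 192.168.17.151`.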

Follow the startup log:

docker logs -f racnode2

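If you follow the documentation on Oracle Container Registry, adding the node fails with the error below, because OS_PASSWORD and ORACLE_PWD do not take effect. The fix is to follow the documentation on GitHub instead.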

...

11-10-2019 11:55:55 UTC : : Cluster Nodes are racnode1 racnode2

11-10-2019 11:55:55 UTC : : Running SSH setup for grid user between nodes racnode1 racnode2

11-10-2019 11:57:08 UTC : : Running SSH setup for oracle user between nodes racnode1 racnode2

11-10-2019 11:58:22 UTC : : SSH check failed for the grid@racnode1 beuase of permission denied error! SSH setup did not complete sucessfully

11-10-2019 11:58:22 UTC : : Error has occurred in Grid Setup, Please verify!

I have filed an issue about this on GitHub.
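The GitHub documentation replaces the plain-text OS_PASSWORD with an encrypted password file plus a key file. The racnode2 environment dumped in the log below does include COMMON_OS_PWD_FILE=common_os_pwdfile.enc and PWD_KEY=pwd.key, which fits that mechanism. What follows is a minimal sketch of creating such files, based on the openssl recipe in the GitHub README as I recall it; check it against the current README.

- Only the two file names come from the log.
- The directory (/opt/.secrets in the usage note) and the function names are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: create pwd.key and common_os_pwdfile.enc (names as seen in the racnode2 log).
# Assumption: the image decrypts with plain "openssl enc -aes-256-cbc" and no -pbkdf2,
# so none is used here; run it on the OL7 host so openssl defaults match the container.
make_pwd_files() (
  set -e                                   # stop at the first failing step (subshell only)
  dir="$1"; password="$2"
  umask 077                                # key and password files readable by owner only
  mkdir -p "$dir"
  trap 'rm -f "$dir/common_os_pwdfile"' EXIT   # never leave the plaintext behind
  openssl rand -hex -out "$dir/pwd.key" 64     # 64 random bytes, hex-encoded passphrase
  printf '%s\n' "$password" > "$dir/common_os_pwdfile"
  openssl enc -aes-256-cbc -salt \
    -in "$dir/common_os_pwdfile" \
    -out "$dir/common_os_pwdfile.enc" \
    -pass "file:$dir/pwd.key"
)

# Decrypt to stdout, e.g. to verify the file before mounting it into a container.
read_pwd_file() {
  openssl enc -d -aes-256-cbc \
    -in "$1/common_os_pwdfile.enc" \
    -pass "file:$1/pwd.key"
}
```

For example, run `make_pwd_files /opt/.secrets 'Oracle_18c'` as root on the host, then mount that directory into the container as the GitHub README describes (I believe read-only at /run/secrets) instead of passing OS_PASSWORD.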

The log of a successful run follows. It took 23 minutes:

[opc@instance-20191111-0822 ~]$ docker logs -f racnode2

PATH=/bin:/usr/bin:/sbin:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=racnode2

TERM=xterm

EXISTING_CLS_NODES=racnode1

NODE_VIP=172.16.1.161

VIP_HOSTNAME=racnode2-vip

PRIV_IP=192.168.17.151

PRIV_HOSTNAME=racnode2-priv

PUBLIC_IP=172.16.1.151

PUBLIC_HOSTNAME=racnode2

DOMAIN=example.com

SCAN_NAME=racnode-scan

SCAN_IP=172.16.1.70

ASM_DISCOVERY_DIR=/dev

ASM_DEVICE_LIST=/dev/asm_disk1

ORACLE_SID=ORCLCDB

OP_TYPE=ADDNODE

COMMON_OS_PWD_FILE=common_os_pwdfile.enc

PWD_KEY=pwd.key

SETUP_LINUX_FILE=setupLinuxEnv.sh

INSTALL_DIR=/opt/scripts

GRID_BASE=/u01/app/grid

GRID_HOME=/u01/app/18.3.0/grid

INSTALL_FILE_1=LINUX.X64_180000_grid_home.zip

GRID_INSTALL_RSP=grid.rsp

GRID_SW_INSTALL_RSP=grid_sw_inst.rsp

GRID_SETUP_FILE=setupGrid.sh

FIXUP_PREQ_FILE=fixupPreq.sh

INSTALL_GRID_BINARIES_FILE=installGridBinaries.sh

INSTALL_GRID_PATCH=applyGridPatch.sh

INVENTORY=/u01/app/oraInventory

CONFIGGRID=configGrid.sh

ADDNODE=AddNode.sh

DELNODE=DelNode.sh

ADDNODE_RSP=grid_addnode.rsp

SETUPSSH=setupSSH.expect

GRID_PATCH=p28322130_183000OCWRU_Linux-x86-64.zip

PATCH_NUMBER=28322130

DOCKERORACLEINIT=dockeroracleinit

GRID_USER_HOME=/home/grid

SETUPGRIDENV=setupGridEnv.sh

RESET_OS_PASSWORD=resetOSPassword.sh

MULTI_NODE_INSTALL=MultiNodeInstall.py

DB_BASE=/u01/app/oracle

DB_HOME=/u01/app/oracle/product/18.3.0/dbhome_1

INSTALL_FILE_2=LINUX.X64_180000_db_home.zip

DB_INSTALL_RSP=db_inst.rsp

DBCA_RSP=dbca.rsp

DB_SETUP_FILE=setupDB.sh

PWD_FILE=setPassword.sh

RUN_FILE=runOracle.sh

STOP_FILE=stopOracle.sh

ENABLE_RAC_FILE=enableRAC.sh

CHECK_DB_FILE=checkDBStatus.sh

USER_SCRIPTS_FILE=runUserScripts.sh

REMOTE_LISTENER_FILE=remoteListener.sh

INSTALL_DB_BINARIES_FILE=installDBBinaries.sh

FUNCTIONS=functions.sh

COMMON_SCRIPTS=/common_scripts

CHECK_SPACE_FILE=checkSpace.sh

EXPECT=/usr/bin/expect

BIN=/usr/sbin

container=true

INSTALL_SCRIPTS=/opt/scripts/install

SCRIPT_DIR=/opt/scripts/startup

GRID_PATH=/u01/app/18.3.0/grid/bin:/u01/app/18.3.0/grid/OPatch/:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

DB_PATH=/u01/app/oracle/product/18.3.0/dbhome_1/bin:/u01/app/oracle/product/18.3.0/dbhome_1/OPatch/:/usr/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

GRID_LD_LIBRARY_PATH=/u01/app/18.3.0/grid/lib:/usr/lib:/lib

DB_LD_LIBRARY_PATH=/u01/app/oracle/product/18.3.0/dbhome_1/lib:/usr/lib:/lib

HOME=/home/grid

Failed to parse kernel command line, ignoring: No such file or directory

systemd 219 running in system mode. (+PAM +AUDIT +SELINUX +IMA -APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 -SECCOMP +BLKID +ELFUTILS +KMOD +IDN)

Detected virtualization other.

Detected architecture x86-64.

Welcome to Oracle Linux Server 7.6!

Set hostname to <racnode2>.

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

/usr/lib/systemd/system-generators/systemd-fstab-generator failed with error code 1.

Binding to IPv6 address not available since kernel does not support IPv6.

Binding to IPv6 address not available since kernel does not support IPv6.

Cannot add dependency job for unit display-manager.service, ignoring: Unit not found.

[ OK ] Started Dispatch Password Requests to Console Directory Watch.

[ OK ] Created slice Root Slice.

[ OK ] Created slice System Slice.

[ OK ] Listening on /dev/initctl Compatibility Named Pipe.

[ OK ] Reached target Local Encrypted Volumes.

[ OK ] Started Forward Password Requests to Wall Directory Watch.

[ OK ] Listening on Journal Socket.

[ OK ] Reached target RPC Port Mapper.

[ OK ] Reached target Swap.

Starting Read and set NIS domainname from /etc/sysconfig/network...

Starting Rebuild Hardware Database...

Starting Configure read-only root support...

Couldn't determine result for ConditionKernelCommandLine=|rd.modules-load for systemd-modules-load.service, assuming failed: No such file or directory

Couldn't determine result for ConditionKernelCommandLine=|modules-load for systemd-modules-load.service, assuming failed: No such file or directory

[ OK ] Reached target Local File Systems (Pre).

[ OK ] Created slice User and Session Slice.

[ OK ] Reached target Slices.

[ OK ] Listening on Delayed Shutdown Socket.

[ OK ] Created slice system-getty.slice.

Starting Journal Service...

[ OK ] Started Read and set NIS domainname from /etc/sysconfig/network.

[ OK ] Started Journal Service.

Starting Flush Journal to Persistent Storage...

[ OK ] Started Configure read-only root support.

Starting Load/Save Random Seed...

[ OK ] Reached target Local File Systems.

Starting Mark the need to relabel after reboot...

Starting Preprocess NFS configuration...

Starting Rebuild Journal Catalog...

[ OK ] Started Mark the need to relabel after reboot.

[ OK ] Started Flush Journal to Persistent Storage.

Starting Create Volatile Files and Directories...

[ OK ] Started Load/Save Random Seed.

[ OK ] Started Rebuild Journal Catalog.

[ OK ] Started Preprocess NFS configuration.

[ OK ] Started Create Volatile Files and Directories.

Starting Update UTMP about System Boot/Shutdown...

Mounting RPC Pipe File System...

[ OK ] Started Update UTMP about System Boot/Shutdown.

[FAILED] Failed to mount RPC Pipe File System.

See 'systemctl status var-lib-nfs-rpc_pipefs.mount' for details.

[DEPEND] Dependency failed for rpc_pipefs.target.

[DEPEND] Dependency failed for RPC security service for NFS client and server.

[ OK ] Started Rebuild Hardware Database.

Starting Update is Completed...

[ OK ] Started Update is Completed.

[ OK ] Reached target System Initialization.

[ OK ] Listening on D-Bus System Message Bus Socket.

[ OK ] Listening on RPCbind Server Activation Socket.

[ OK ] Reached target Sockets.

Starting RPC bind service...

[ OK ] Started Flexible branding.

[ OK ] Reached target Paths.

[ OK ] Reached target Basic System.

Starting Login Service...

Starting OpenSSH Server Key Generation...

[ OK ] Started D-Bus System Message Bus.

Starting Resets System Activity Logs...

Starting GSSAPI Proxy Daemon...

Starting LSB: Bring up/down networking...

[ OK ] Started Self Monitoring and Reporting Technology (SMART) Daemon.

[ OK ] Started Daily Cleanup of Temporary Directories.

[ OK ] Reached target Timers.

[ OK ] Started RPC bind service.

Starting Cleanup of Temporary Directories...

[ OK ] Started Login Service.

[ OK ] Started Resets System Activity Logs.

[ OK ] Started Cleanup of Temporary Directories.

[ OK ] Started GSSAPI Proxy Daemon.

[ OK ] Reached target NFS client services.

[ OK ] Reached target Remote File Systems (Pre).

[ OK ] Reached target Remote File Systems.

Starting Permit User Sessions...

[ OK ] Started Permit User Sessions.

[ OK ] Started Command Scheduler.

[ OK ] Started OpenSSH Server Key Generation.

[ OK ] Started LSB: Bring up/down networking.

[ OK ] Reached target Network.

Starting OpenSSH server daemon...

Starting /etc/rc.d/rc.local Compatibility...

[ OK ] Reached target Network is Online.

Starting Notify NFS peers of a restart...

[ OK ] Started /etc/rc.d/rc.local Compatibility.

[ OK ] Started Notify NFS peers of a restart.

[ OK ] Started Console Getty.

[ OK ] Reached target Login Prompts.

[ OK ] Started OpenSSH server daemon.

[ OK ] Reached target Multi-User System.

[ OK ] Reached target Graphical Interface.

Starting Update UTMP about System Runlevel Changes...

[ OK ] Started Update UTMP about System Runlevel Changes.
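The messages above are the container's systemd boot output. The lines that follow, prefixed with `MM-DD-YYYY HH:MM:SS UTC : :`, come from the image's setup scripts. The small filter below keeps only those script messages and strips the prefix. It assumes the container is named racnode2, as in this log. `setup_messages` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical filter: print only lines carrying the setup script's
# "MM-DD-YYYY HH:MM:SS UTC : : " prefix, with that prefix removed.
# The leading ".*" also catches messages glued to the console login prompt.
setup_messages() {
  sed -nE 's/^.*[0-9]{2}-[0-9]{2}-[0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2} UTC : : ?//p'
}

# Usage (container name assumed):
#   docker logs -f racnode2 2>&1 | setup_messages
```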

11-13-2019 03:07:42 UTC : : Process id of the program :

11-13-2019 03:07:42 UTC : : #################################################

11-13-2019 03:07:42 UTC : : Starting Grid Installation

11-13-2019 03:07:42 UTC : : #################################################

11-13-2019 03:07:42 UTC : : Pre-Grid Setup steps are in process

11-13-2019 03:07:42 UTC : : Process id of the program :

11-13-2019 03:07:42 UTC : : Disable failed service var-lib-nfs-rpc_pipefs.mount

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

Failed to parse kernel command line, ignoring: No such file or directory

11-13-2019 03:07:42 UTC : : Resetting Failed Services

11-13-2019 03:07:42 UTC : : Sleeping for 60 seconds
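The setup script first handles var-lib-nfs-rpc_pipefs.mount, which failed during boot, and then resets failed services. One plausible reason is that `systemctl is-system-running` reports `degraded` rather than `running` while any unit is in the failed state. The following sketches the listing and reset steps and assumes systemd runs as PID 1, as it does in this container. `failed_units` is a hypothetical helper. The exact command the image uses to disable the unit does not appear in the log, so the sketch does not reproduce it.

```shell
#!/usr/bin/env bash
# Hypothetical parser for `systemctl --failed --no-legend` output: print the
# unit name from each non-empty line. Newer systemd versions prefix failed
# units with a "●" marker, which is skipped here.
failed_units() {
  awk 'NF { print ($1 == "●" ? $2 : $1) }'
}

# Real invocation inside the container (requires systemd as PID 1):
#   systemctl --failed --no-legend | failed_units   # list failed units
#   systemctl reset-failed                          # clear their failed state
```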

Oracle Linux Server 7.6

Kernel 4.14.35-1902.6.6.el7uek.x86_64 on an x86_64

racnode2 login: 11-13-2019 03:08:42 UTC : : Systemctl state is running!
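After a fixed 60-second sleep, the script reports that the systemctl state is running. A polling loop is a common alternative to a fixed sleep. The sketch below defines a hypothetical `wait_for_state` helper that repeatedly runs a state command, such as `systemctl is-system-running`, until the command prints the wanted value or the retries are used up. This is not the image's own implementation.

```shell
#!/usr/bin/env bash
# Hypothetical poll loop: run the given command until it prints the wanted
# state, checking at most `tries` times and sleeping `interval` seconds between checks.
# Arguments: wanted-state tries interval command [args...]
wait_for_state() {
  local want=$1 tries=$2 interval=$3 i state
  shift 3
  for ((i = 1; i <= tries; i++)); do
    # is-system-running exits non-zero when not "running" but still prints the state
    state=$("$@" 2>/dev/null)
    if [ "$state" = "$want" ]; then
      echo "state is $state after $i check(s)"
      return 0
    fi
    sleep "$interval"
  done
  echo "gave up, last state: ${state:-unknown}" >&2
  return 1
}

# Usage inside the container:
#   wait_for_state running 12 5 systemctl is-system-running
```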

11-13-2019 03:08:42 UTC : : Setting correct permissions for /bin/ping

11-13-2019 03:08:42 UTC : : Public IP is set to 172.16.1.151

11-13-2019 03:08:42 UTC : : RAC Node PUBLIC Hostname is set to racnode2

11-13-2019 03:08:42 UTC : : Preparing host line for racnode2

11-13-2019 03:08:42 UTC : : Adding \n172.16.1.151\tracnode2.example.com\tracnode2 to /etc/hosts

11-13-2019 03:08:42 UTC : : Preparing host line for racnode2-priv

11-13-2019 03:08:42 UTC : : Adding \n192.168.17.151\tracnode2-priv.example.com\tracnode2-priv to /etc/hosts

11-13-2019 03:08:42 UTC : : Preparing host line for racnode2-vip

11-13-2019 03:08:42 UTC : : Adding \n172.16.1.161\tracnode2-vip.example.com\tracnode2-vip to /etc/hosts

11-13-2019 03:08:42 UTC : : racnode-scan already exists : 172.16.1.70 racnode-scan.example.com racnode-scan, no update required
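For the public, private and VIP host names, the script builds a line with three fields: IP address, fully qualified name and short name, separated by tabs. It appends the line to /etc/hosts unless the name is already present, which is what happened for racnode-scan. The following is a minimal idempotent version that takes a hosts-format file as input. `add_host_line` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP<TAB>host.domain<TAB>host" to a hosts-format
# file unless the short host name already appears as a name field there.
add_host_line() {
  local file=$1 ip=$2 host=$3 domain=$4
  if awk -v h="$host" '/^[ \t]*#/ { next }
       { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit (found ? 0 : 1) }' "$file"; then
    echo "$host already exists, no update required"
  else
    printf '%s\t%s.%s\t%s\n' "$ip" "$host" "$domain" "$host" >> "$file"
    echo "added $host"
  fi
}

# Usage (as root in the container):
#   add_host_line /etc/hosts 172.16.1.151 racnode2 example.com
```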

11-13-2019 03:08:42 UTC : : Preapring Device list

11-13-2019 03:08:42 UTC : : Changing Disk permission and ownership /dev/asm_disk1
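The device-list and permission steps amount to splitting the list of shared disks and running chown and chmod on each one. The sketch below is based on stated assumptions. The list is taken to be comma-separated, for example the device list passed to docker run (assumed here to be ASM_DEVICE_LIST). The owner `grid:asmadmin` and mode 660 are placeholders that the log does not confirm. `set_device_perms` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: for each device in a comma-separated list, set the
# owner (user:group) and mode. Fails on the first missing device or error.
set_device_perms() {
  local list=$1 owner=$2 mode=$3 dev
  local IFS=,            # split the unquoted $list on commas only
  for dev in $list; do
    [ -e "$dev" ] || { echo "missing $dev" >&2; return 1; }
    chown "$owner" "$dev" && chmod "$mode" "$dev" || return 1
    echo "updated $dev"
  done
}

# Usage (as root; owner and mode are assumptions):
#   set_device_perms /dev/asm_disk1 grid:asmadmin 660
```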

11-13-2019 03:08:42 UTC : : #####################################################################

11-13-2019 03:08:42 UTC : : RAC setup will begin in 2 minutes

11-13-2019 03:08:42 UTC : : ####################################################################

11-13-2019 03:08:44 UTC : : ###################################################

11-13-2019 03:08:44 UTC : : Pre-Grid Setup steps completed

11-13-2019 03:08:44 UTC : : ###################################################

11-13-2019 03:08:44 UTC : : Checking if grid is already configured

11-13-2019 03:08:44 UTC : : Public IP is set to 172.16.1.151

11-13-2019 03:08:44 UTC : : RAC Node PUBLIC Hostname is set to racnode2

11-13-2019 03:08:44 UTC : : Domain is defined to example.com

11-13-2019 03:08:45 UTC : : Setting Existing Cluster Node for node addition operation. This will be retrieved from racnode1

11-13-2019 03:08:45 UTC : : Existing Node Name of the cluster is set to racnode1

11-13-2019 03:08:45 UTC : : 172.16.1.150

11-13-2019 03:08:45 UTC : : Existing Cluster node resolved to IP. Check passed

11-13-2019 03:08:45 UTC : : Default setting of AUTO GNS VIP set to false. If you want to use AUTO GNS VIP, please pass DHCP_CONF as an env parameter set to true

11-13-2019 03:08:45 UTC : : RAC VIP set to 172.16.1.161

11-13-2019 03:08:45 UTC : : RAC Node VIP hostname is set to racnode2-vip

11-13-2019 03:08:45 UTC : : SCAN_NAME name is racnode-scan

11-13-2019 03:08:45 UTC : : 172.16.1.70

11-13-2019 03:08:45 UTC : : SCAN Name resolving to IP. Check Passed!

11-13-2019 03:08:45 UTC : : SCAN_IP name is 172.16.1.70
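The SCAN check passes because racnode-scan resolves to 172.16.1.70. The entry was already present in /etc/hosts. On a glibc system, `getent hosts racnode-scan` performs the full resolver lookup. The sketch below reads only a hosts-format file, which is enough to verify the entries the script writes. `lookup_host` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical lookup: print the IP of the first non-comment line in a
# hosts-format file (default /etc/hosts) that lists the given name.
# Prints nothing when the name is absent; DNS is not consulted.
lookup_host() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v n="$name" '/^[ \t]*#/ { next }
       { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$file"
}

# Usage:
#   ip=$(lookup_host racnode-scan) && [ -n "$ip" ] && echo "racnode-scan -> $ip"
```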

11-13-2019 03:08:45 UTC : : RAC Node PRIV IP is set to 192.168.17.151

11-13-2019 03:08:45 UTC : : RAC Node private hostname is set to racnode2-priv

11-13-2019 03:08:45 UTC : : CMAN_NAME set to the empty string

11-13-2019 03:08:45 UTC : : CMAN_IP set to the empty string

11-13-2019 03:08:45 UTC : : Password file generated

11-13-2019 03:08:45 UTC : : Common OS Password string is set for Grid user

11-13-2019 03:08:45 UTC : : Common OS Password string is set for Oracle user

11-13-2019 03:08:45 UTC : : GRID_RESPONSE_FILE env variable set to empty. AddNode.sh will use standard cluster responsefile

11-13-2019 03:08:45 UTC : : Location for User script SCRIPT_ROOT set to /common_scripts

11-13-2019 03:08:45 UTC : : ORACLE_SID is set to ORCLCDB

11-13-2019 03:08:45 UTC : : Setting random password for root/grid/oracle user

11-13-2019 03:08:45 UTC : : Setting random password for grid user