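Some of the code here is taken from the companion code of 《深度学习入门-基于python的理论与实践》.

After finishing 《深度学习的数学》 I tried to implement a network myself in C++, but it did not work. I then read my newly bought copy of 《深度学习入门-基于python的理论与实践》, which ships with companion code, so I used that code to build and study an implementation of my own.

First, the network class, which is assembled from layers: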

    # coding: utf-8
    import sys, os
    sys.path.append(os.pardir)  # make files in the parent directory importable
    import numpy as np
    from common.layers import *
    from common.gradient import numerical_gradient
    from collections import OrderedDict


    class TwoLayerNet:

        def __init__(self, input_size, hidden_size, output_size, weight_init_std = 0.01):
            # Initialize the weights
            self.params = {}
            self.params['W1'] = weight_init_std * np.random.randn(input_size, hidden_size)
            self.params['b1'] = np.zeros(hidden_size)
            self.params['W2'] = weight_init_std * np.random.randn(hidden_size, output_size)
            self.params['b2'] = np.zeros(output_size)

            # Build the layers
            self.layers = OrderedDict()
            self.layers['Affine1'] = Affine(self.params['W1'], self.params['b1'])
            self.layers['Relu1'] = Relu()
            self.layers['Affine2'] = Affine(self.params['W2'], self.params['b2'])

            self.lastLayer = SoftmaxWithLoss()

        def predict(self, x):
            for layer in self.layers.values():
                x = layer.forward(x)

            return x

        # x: input data, t: supervision (label) data
        def loss(self, x, t):
            y = self.predict(x)
            return self.lastLayer.forward(y, t)

        def accuracy(self, x, t):
            y = self.predict(x)
            y = np.argmax(y, axis=1)
            if t.ndim != 1 : t = np.argmax(t, axis=1)

            accuracy = np.sum(y == t) / float(x.shape[0])
            return accuracy

        # x: input data, t: supervision (label) data
        def numerical_gradient(self, x, t):
            loss_W = lambda W: self.loss(x, t)

            grads = {}
            grads['W1'] = numerical_gradient(loss_W, self.params['W1'])
            grads['b1'] = numerical_gradient(loss_W, self.params['b1'])
            grads['W2'] = numerical_gradient(loss_W, self.params['W2'])
            grads['b2'] = numerical_gradient(loss_W, self.params['b2'])

            return grads

        def gradient(self, x, t):
            # forward
            self.loss(x, t)

            # backward
            dout = 1
            dout = self.lastLayer.backward(dout)

            layers = list(self.layers.values())
            layers.reverse()
            for layer in layers:
                dout = layer.backward(dout)

            # Collect the gradients computed by each Affine layer
            grads = {}
            grads['W1'], grads['b1'] = self.layers['Affine1'].dW, self.layers['Affine1'].db
            grads['W2'], grads['b2'] = self.layers['Affine2'].dW, self.layers['Affine2'].db

            return grads
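
The class above relies on `Affine` and `Relu` from the book's `common.layers`, which are not shown in this post. As a rough idea of what such layers do, here is a minimal NumPy sketch. It is my own simplified version written under the assumption that `Affine` computes `x @ W + b` and stores `dW` and `db` during `backward`, as `gradient()` above expects. It is not the book's exact code, and the toy shapes are made up.

```python
import numpy as np


class Relu:
    """ReLU layer: passes positive inputs through, zeroes the rest."""

    def __init__(self):
        self.mask = None

    def forward(self, x):
        self.mask = x <= 0          # remember where the input was non-positive
        out = x.copy()
        out[self.mask] = 0
        return out

    def backward(self, dout):
        dx = dout.copy()
        dx[self.mask] = 0           # no gradient flows where the input was <= 0
        return dx


class Affine:
    """Fully connected layer: out = x @ W + b (simplified sketch)."""

    def __init__(self, W, b):
        self.W, self.b = W, b
        self.x = None
        self.dW = None
        self.db = None

    def forward(self, x):
        self.x = x
        return x @ self.W + self.b

    def backward(self, dout):
        self.dW = self.x.T @ dout   # gradient with respect to the weights
        self.db = dout.sum(axis=0)  # gradient with respect to the bias
        return dout @ self.W.T      # gradient handed to the previous layer


# Toy data: a batch of 4 samples with 3 features, mapped to 2 outputs.
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3))
affine = Affine(rng.standard_normal((3, 2)), np.zeros(2))
relu = Relu()

out = relu.forward(affine.forward(x))
dx = affine.backward(relu.backward(np.ones_like(out)))
print(out.shape, affine.dW.shape, affine.db.shape, dx.shape)
```

Each layer caches what it needs in `forward` and reuses it in `backward`, which is why `gradient()` must call `self.loss(x, t)` before walking the layers in reverse.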

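The libraries this depends on come from two places: install Anaconda for NumPy, and download the book's companion code from the Turing community (图灵社区) site for the `common` and `dataset` modules. The companion code can be downloaded without buying the book.

Next comes the most important part, the main program: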

    # coding: utf-8
    import sys, os
    sys.path.append(os.pardir)

    import numpy as np
    from dataset.mnist import load_mnist
    from two_layer_net import TwoLayerNet

    # Load the data
    (x_train, t_train), (x_test, t_test) = load_mnist(normalize=True, one_hot_label=True)

    network = TwoLayerNet(input_size=784, hidden_size=50, output_size=10)

    iters_num = 10000
    train_size = x_train.shape[0]
    batch_size = 100
    learning_rate = 0.1

    train_loss_list = []
    train_acc_list = []
    test_acc_list = []

    iter_per_epoch = max(train_size / batch_size, 1)

    for i in range(iters_num):
        batch_mask = np.random.choice(train_size, batch_size)
        x_batch = x_train[batch_mask]
        t_batch = t_train[batch_mask]

        # Gradient (backpropagation; the numerical version is far slower)
        #grad = network.numerical_gradient(x_batch, t_batch)
        grad = network.gradient(x_batch, t_batch)

        # Update the parameters
        for key in ('W1', 'b1', 'W2', 'b2'):
            network.params[key] -= learning_rate * grad[key]

        loss = network.loss(x_batch, t_batch)
        train_loss_list.append(loss)

        # Once per epoch, record and print the accuracies
        if i % iter_per_epoch == 0:
            train_acc = network.accuracy(x_train, t_train)
            test_acc = network.accuracy(x_test, t_test)
            train_acc_list.append(train_acc)
            test_acc_list.append(test_acc)
            print(train_acc, test_acc)
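
One detail of the loop is worth a closer look: `np.random.choice(train_size, batch_size)` samples with replacement by default, so a batch can contain the same index twice and an "epoch" here is only nominal. The sketch below contrasts that with a shuffle-and-slice scheme. It uses made-up toy sizes rather than the MNIST values, and the shuffle variant is an alternative I am showing for comparison, not what the book's code does.

```python
import numpy as np

rng = np.random.default_rng(0)
train_size, batch_size = 1000, 100   # toy sizes, not the MNIST values

# Sampling with replacement, the default behavior of choice():
# the same index may appear more than once in a batch.
with_repl = rng.choice(train_size, batch_size)

# Alternative: shuffle once per epoch and slice, so that every sample
# is visited exactly once per epoch.
perm = rng.permutation(train_size)
batches = [perm[i:i + batch_size] for i in range(0, train_size, batch_size)]

# Integer division keeps the epoch length an int.
iter_per_epoch = max(train_size // batch_size, 1)

print(len(batches), iter_per_epoch)
print(len(np.unique(np.concatenate(batches))))
```

Either scheme trains this small network fine. The shuffle variant just makes the per-epoch accuracy printout correspond to one full pass over the data.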

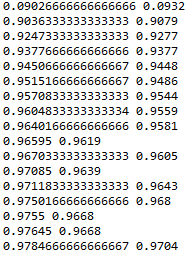

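Run it (the first number on each line is the training accuracy, the second is the test accuracy):

The output shows that the accuracy reaches 97%.

But an accuracy figure alone does not give much of a feeling that the program really recognizes digits, so let's look at how it handles a single digit.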


Add the following code below the main program:

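    from PIL import Image  # needed by img_show

    def img_show(img):
        # Display helper, assumed to match the one in the book's mnist_show.py
        pil_img = Image.fromarray(np.uint8(img))
        pil_img.show()

    # Reload the data, this time without normalization
    (x_train, t_train), (x_test, t_test) = load_mnist(flatten=True, normalize=False)
    batch_mask = np.random.choice(train_size, 1)
    x_batch = x_train[batch_mask]
    t_batch = t_train[batch_mask]

    img = x_batch[0]
    label = t_batch[0]

    # The network was trained on normalized inputs, so scale to [0, 1] before predicting
    y = network.predict(x_batch.astype(np.float32) / 255.0)
    y = np.argmax(y, axis=1)

    print("I think it is", y)    # the network's answer
    print("Answer is ", label)   # the correct answer

    img = img.reshape(28, 28)    # restore the image to its original 28x28 shape
    img_show(img)                # display the image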

Note that the data is loaded differently here than in the previous program.
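
The difference lies in the `load_mnist` arguments: the training script passes `normalize=True, one_hot_label=True`, while the snippet above passes `normalize=False` and leaves the label format at its default. The following sketch illustrates what those two options are assumed to change, using small made-up arrays in place of real MNIST data. It does not call `load_mnist` itself.

```python
import numpy as np

# Hypothetical stand-ins for two samples: raw uint8 pixels in [0, 255].
raw = np.array([[0, 128, 255], [255, 0, 64]], dtype=np.uint8)

# What normalize=True is assumed to amount to: scale pixels into [0.0, 1.0].
normalized = raw.astype(np.float32) / 255.0

# One-hot labels versus plain integer labels for the same two samples.
one_hot = np.array([[0, 0, 0, 1, 0, 0, 0, 0, 0, 0],
                    [0, 0, 0, 0, 0, 0, 0, 1, 0, 0]])
labels = np.argmax(one_hot, axis=1)   # recover the integer class indices

print(normalized.min(), normalized.max())
print(labels)
```

Raw pixel values are what an image viewer needs, and an integer label prints as a readable digit, which is presumably why the display snippet reloads the data this way.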

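After running it, you can see:

Our own eyes, the "real" image recognizer, confirm that this is indeed a 3.

It can also recognize digits that are written a little off: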